Jina Embeddings v2 to v3 Migration Guide

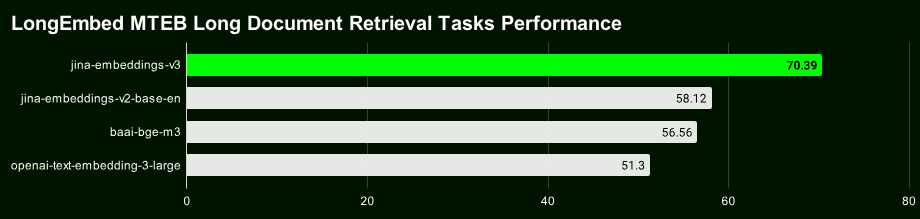

Jina Embeddings v3 is our latest 570-million-parameter text embedding model, achieving state-of-the-art (SOTA) performance on multilingual and long-text retrieval tasks.

v3 is not only more powerful but also brings many new and exciting features. If you are still using Jina Embeddings v2, released in October 2023, we strongly recommend migrating to v3 as soon as possible.

Let's start with a brief overview of the highlights of Jina Embeddings v3:

- Support for 89 languages: v3 breaks through v2's limitation to a handful of bilingual models and delivers true multilingual text processing.

- Built-in LoRA adapters: v2 is a general-purpose embedding model, while v3 has built-in LoRA adapters that generate embeddings optimized specifically for retrieval, classification, clustering, and other tasks, for better performance.

- More accurate long-text retrieval: v3 combines an 8192-token context length with the Late Chunking technique, which produces chunk embeddings with richer contextual information and significantly improves long-text retrieval accuracy.

- Flexible, controllable embedding dimensions: v3's output dimension can be adjusted to balance performance against storage space, avoiding the high storage overhead of high-dimensional vectors. This is made possible by Matryoshka Representation Learning (MRL).
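To make the Matryoshka idea concrete: MRL trains the model so that the leading dimensions of a vector carry the most information, which means a shorter embedding can be taken as a prefix of the full one. The sketch below truncates a full vector locally and rescales it to unit length. The function name `truncate_embedding` is hypothetical, and we have not confirmed that the API's `dimensions` parameter is implemented exactly this way.

```python
import math

def truncate_embedding(vec: list[float], dims: int) -> list[float]:
    """Keep the first `dims` values of an MRL-style embedding and rescale to unit length."""
    if not 0 < dims <= len(vec):
        raise ValueError(f"dims must be in 1..{len(vec)}, got {dims}")
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("cannot normalize an all-zero prefix")
    return [x / norm for x in head]

full = [3.0, 4.0, 12.0]             # toy 3-d "embedding"
print(truncate_embedding(full, 2))  # [0.6, 0.8]
```

Re-normalizing after truncation keeps dot-product scores equal to cosine similarity, which many vector databases assume.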

Open-source model: https://huggingface.co/jinaai/jina-embeddings-v3

Model API: https://jina.ai/?sui=apikey

Paper: https://arxiv.org/abs/2409.10173

Quick Migration Guide

- v3 is a brand-new model, so v2 vectors and indexes cannot be reused directly; you need to re-index your data.

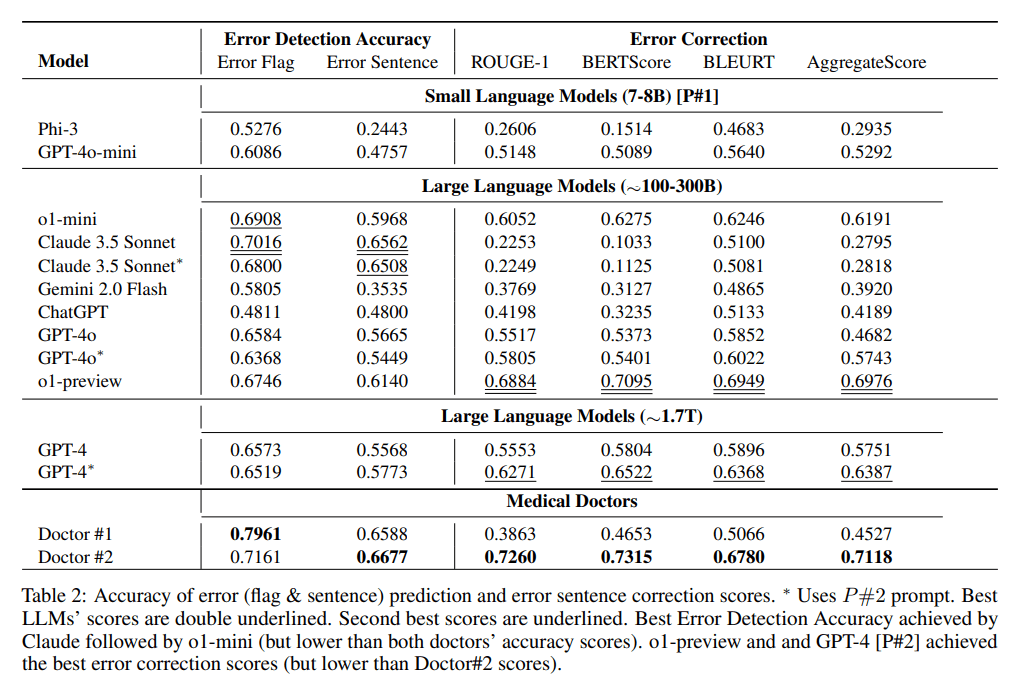

- In most benchmark scenarios (96%), v3 significantly outperforms v2; v2 only occasionally ties or slightly outperforms v3, on the English summarization task. Given v3's multilingual support and advanced features, v3 should be preferred in most scenarios.

- The v3 API adds three parameters, `task`, `dimensions`, and `late_chunking`; see our blog post for details on how to use them.

Dimension adjustment

- v3 outputs 1024-dimensional vectors by default, while v2 outputs only 768 dimensions. Thanks to Matryoshka Representation Learning, v3 can in principle output any dimension. Developers can use the `dimensions` parameter to control the output dimensionality and find the best balance between storage cost and performance.

- If your project was built on the v2 API, you cannot simply change the model name to `jina-embeddings-v3`, because the default dimension has changed. If you want to keep your data structures or vector sizes consistent with v2, set `dimensions=768`. Note, however, that even at the same dimension, v3 and v2 vectors have completely different distributions in semantic space, so they cannot be used interchangeably.
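A minimal sketch of that change, assuming your v2 code builds request bodies as Python dicts like the examples in this guide. `migrate_v2_payload` is a hypothetical helper, not part of any Jina SDK: it swaps the model name, pins `dimensions=768`, optionally sets a `task`, and drops the v2-only `normalized` flag. Keeping 768 dimensions only preserves the vector size; stored v2 vectors still have to be re-embedded.

```python
from typing import Any, Optional

def migrate_v2_payload(
    v2_payload: dict[str, Any],
    task: Optional[str] = None,
    dimensions: int = 768,
) -> dict[str, Any]:
    """Hypothetical helper: turn a v2 request body into a v3 request body."""
    # Copy everything except the model name and the v2-only "normalized" flag.
    v3_payload = {k: v for k, v in v2_payload.items() if k not in ("model", "normalized")}
    v3_payload["model"] = "jina-embeddings-v3"
    v3_payload["dimensions"] = dimensions  # 768 keeps the v2 vector size
    if task is not None:
        v3_payload["task"] = task
    return v3_payload

v2 = {"model": "jina-embeddings-v2-base-en", "normalized": True, "input": ["hello"]}
print(migrate_v2_payload(v2, task="retrieval.passage"))
```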

Model Replacement

- v3's strong multilingual support fully replaces the v2 bilingual models (v2-base-de, v2-base-es, v2-base-zh).

- For pure code tasks, jina-embeddings-v2-base-code is still the best choice. In tests it scores 0.7753, compared with 0.7537 for v3 generic embeddings (no task set) and 0.7564 with the LoRA adapter, giving the v2 code model a lead of about 2.8% over v3.
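The guide does not state which baseline the "about 2.8%" figure uses. A quick calculation with the three scores quoted above suggests it is the gap to the generic v3 score, expressed relative to the v2 score; this is our inference, not a statement from the benchmark authors.

```python
v2_code = 0.7753     # jina-embeddings-v2-base-code
v3_generic = 0.7537  # v3, no task set
v3_lora = 0.7564     # v3 with a LoRA adapter

def relative_gap(reference: float, other: float) -> float:
    """Score gap as a percentage of the reference score."""
    return (reference - other) / reference * 100

print(f"vs. v3 generic: {relative_gap(v2_code, v3_generic):.1f}%")
print(f"vs. v3 LoRA:    {relative_gap(v2_code, v3_lora):.1f}%")
```

Against the LoRA-adapted v3 score, the same formula gives about 2.4%.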

Task parameter

- When the `task` parameter is not specified, the v3 API produces good-quality generic embeddings, but we strongly recommend setting `task` according to your task type to get better representations.

- To make v3 emulate v2's behavior, use `task="text-matching"`. However, we recommend trying different task options to find the best one rather than treating `text-matching` as a universal solution.

- If your project uses v2 for information retrieval, switch to v3's retrieval task types (`retrieval.passage` and `retrieval.query`) for better retrieval results.
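The task choice can be kept in one place. The sketch below lists the five task values used in this guide, plus `None` for generic embeddings, and rejects anything else before a request is sent. `USE_CASE_TO_TASK` and `build_v3_payload` are hypothetical names, and the use-case keys are only examples.

```python
from typing import Any, Optional

# Task values that appear in this guide; None means generic embeddings.
V3_TASKS = {"retrieval.query", "retrieval.passage", "separation", "classification", "text-matching"}

# Hypothetical mapping from a v2-era use case to a v3 task.
USE_CASE_TO_TASK = {
    "index_documents": "retrieval.passage",
    "search_query": "retrieval.query",
    "clustering": "separation",
    "classification": "classification",
    "similarity": "text-matching",  # closest to v2 behavior
}

def build_v3_payload(texts: list[str], task: Optional[str]) -> dict[str, Any]:
    """Build a v3 request body, validating the task name first."""
    if task is not None and task not in V3_TASKS:
        raise ValueError(f"unknown v3 task: {task!r}")
    payload: dict[str, Any] = {"model": "jina-embeddings-v3", "input": texts}
    if task is not None:
        payload["task"] = task
    return payload

print(build_v3_payload(["How do I charge my lightsaber?"], USE_CASE_TO_TASK["search_query"]))
```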

Other considerations

- For entirely new task types (which are rare), try setting the `task` parameter to None as a starting point.

- If you used label rewriting for zero-shot classification in v2, in v3 you can simply set `task="classification"` to get similar results, because v3 has optimized its embeddings for classification.

- Both v2 and v3 support context lengths of up to 8192 tokens, but v3 is more efficient thanks to FlashAttention2, which also lays the groundwork for v3's late chunking feature.
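One common way to do zero-shot classification with embeddings is to embed each label name (or a short label description) and assign each text to the most similar label. A minimal sketch under that assumption; the toy 3-dimensional vectors stand in for embeddings you would request with `task="classification"`, and the function names are hypothetical.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def zero_shot_classify(text_vec: list[float], label_vecs: dict[str, list[float]]) -> str:
    """Return the label whose embedding is most similar to the text embedding."""
    return max(label_vecs, key=lambda label: cosine(text_vec, label_vecs[label]))

# Toy vectors standing in for embeddings of the label names "positive" / "negative".
labels = {"positive": [0.9, 0.1, 0.0], "negative": [0.1, 0.9, 0.0]}
print(zero_shot_classify([0.8, 0.2, 0.1], labels))  # positive
```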

Late Chunking

- v3 introduces late chunking: using its 8192-token context, it first encodes the whole text and then splits the result into chunks, so every chunk carries contextual information and retrieval becomes more accurate.

- `late_chunking` is currently only available through the API, so if you run the model locally, you cannot use this feature for now.

- When `late_chunking` is enabled, the text in each request cannot exceed 8192 tokens, because that is the most v3 can process at once.

Performance and speed

- In terms of speed, even though v3 has three times as many parameters as v2, its inference is as fast as or faster than v2, mainly thanks to FlashAttention2.

- Not all GPUs support FlashAttention2. v3 still runs on GPUs that don't, but it may be slightly slower than v2.

- When using the API, network latency, rate limits, and availability zones also affect latency, so API latency does not fully reflect the true performance of the v3 model.

Unlike v2, Jina Embeddings v3 is licensed under CC BY-NC 4.0. v3 can be used commercially via our API, AWS, or Azure, and research and non-commercial use are permitted. For local commercial deployment, please contact our sales team for a license:

https://jina.ai/contact-sales

Multi-language support

v3 is currently the industry's leading multilingual embedding model and ranks #2 on the MTEB leaderboard among models with fewer than 1 billion parameters. It supports 89 languages, covering most of the world's major languages.

These include Chinese, English, Japanese, Korean, German, Spanish, French, Arabic, Bengali, Danish, Dutch, Finnish, Georgian, Greek, Hindi, Indonesian, Italian, Latvian, Norwegian, Polish, Portuguese, Romanian, Russian, Slovak, Swedish, Thai, Turkish, Ukrainian, Urdu, and Vietnamese.

If you were using v2's English, English-German, English-Spanish, or English-Chinese models, you can switch to v3 by changing the `model` parameter and selecting an appropriate task type:

```python
import requests

# Jina Embeddings API endpoint; replace YOUR_API_KEY with your own key
url = "https://api.jina.ai/v1/embeddings"
headers = {
    "Content-Type": "application/json",
    "Authorization": "Bearer YOUR_API_KEY",
}

# v2 English-German
data = {
    "model": "jina-embeddings-v2-base-de",
    "input": [
        "The Force will be with you. Always.",
        "Die Macht wird mit dir sein. Immer.",
        "The ability to destroy a planet is insignificant next to the power of the Force.",
        "Die Fähigkeit, einen Planeten zu zerstören, ist nichts im Vergleich zur Macht der Macht."
    ]
}

# v3 multilingual
data = {
    "model": "jina-embeddings-v3",
    "task": "retrieval.passage",
    "input": [
        "The Force will be with you. Always.",
        "Die Macht wird mit dir sein. Immer.",
        "力量与你同在。永远。",
        "La Forza sarà con te. Sempre.",
        "フォースと共にあらんことを。いつも。"
    ]
}

response = requests.post(url, headers=headers, json=data)
```
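To check the cross-lingual behavior, you can compare the returned vectors pairwise. The sketch assumes the response body has an OpenAI-style shape, `{"data": [{"index": ..., "embedding": [...]}, ...]}`; verify this against the API reference before relying on it. A mock response keeps the example runnable offline, and the helper names are hypothetical.

```python
import math
from typing import Any

def extract_embeddings(response_json: dict[str, Any]) -> list[list[float]]:
    """Return embeddings ordered by their `index` field (assumed response shape)."""
    items = sorted(response_json["data"], key=lambda item: item["index"])
    return [item["embedding"] for item in items]

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def similarity_matrix(vectors: list[list[float]]) -> list[list[float]]:
    return [[cosine(a, b) for b in vectors] for a in vectors]

# Offline stand-in for response.json(); with a live call use extract_embeddings(response.json())
mock_response = {
    "data": [
        {"index": 1, "embedding": [0.0, 1.0]},
        {"index": 0, "embedding": [1.0, 0.0]},
    ]
}
vectors = extract_embeddings(mock_response)
for row in similarity_matrix(vectors):
    print([round(score, 3) for score in row])
```

With real v3 output, you would expect the translations of "The Force will be with you. Always." to score high against one another.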

Task-specific vector representation

v2 uses a generic vector representation, i.e., all tasks share the same model. v3 provides embeddings optimized for different tasks (such as retrieval, classification, and clustering) to improve performance in specific scenarios.

Selecting a task type effectively tells the model which task-relevant features to extract, producing an embedding better suited to the task.

The following lightsaber repair knowledge base shows how to migrate v2 code to v3 and illustrates the gains from task-specific embeddings:

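```python
# A real project would use a much larger dataset; this is just an example
knowledge_base = [
    "Why is my lightsaber blade flickering? A flickering blade may indicate a low battery or an unstable crystal. Please charge the battery and check the crystal's stability. If the flickering persists, the crystal may need to be recalibrated or replaced.",
    "Why is my blade dimmer than before? A dimmer blade may mean the battery is low or there is a problem with power distribution. First, charge the battery. If the problem persists, the LED may need to be replaced.",
    "Can I change the color of my lightsaber blade? Many lightsabers let you customize the blade color by swapping the crystal or changing the color settings via the control panel on the hilt. Please refer to your model's manual for detailed instructions.",
    "What should I do if my lightsaber overheats? Overheating can be caused by prolonged use. Turn off the lightsaber and let it cool for at least 10 minutes. Frequent overheating may indicate an internal problem that should be checked by a technician.",
    "How do I charge my lightsaber? Connect the lightsaber to the supplied charging cable via the port near the hilt, and make sure to use the official charger to avoid damaging the battery and electronics.",
    "Why does my lightsaber make strange noises? Strange noises may indicate a problem with the sound board or speaker. Try turning the lightsaber off and on again. If the problem persists, please contact our support team to replace the sound board."
]

query = "The lightsaber is too dark"
```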

v2 has only one task (text matching), so a single code pattern covers both documents and queries:

```python
# v2 code: encode the knowledge base and the query using the text-matching task
data = {
    "model": "jina-embeddings-v2-base-en",
    "normalized": True,  # Note: this parameter is no longer needed in v3
    "input": knowledge_base
}
docs_response = requests.post(url, headers=headers, json=data)

data = {
    "model": "jina-embeddings-v2-base-en",
    "task": "text-matching",
    "input": [query]
}
query_response = requests.post(url, headers=headers, json=data)
```

v3 provides vector representations optimized for specific tasks, including retrieval, separation, classification, and text matching.

Vector representation of the search task

We demonstrate the difference between v2 and v3 when dealing with text retrieval tasks, using a simple lightsaber repair knowledge base as an example.

For semantic retrieval tasks, v3 uses asymmetric encoding: documents are encoded with `retrieval.passage` and queries with `retrieval.query`, which improves retrieval performance and accuracy.

Document encoding: `retrieval.passage`

```python
data = {
    "model": "jina-embeddings-v3",
    "task": "retrieval.passage",  # the "task" parameter is new in v3
    "late_chunking": True,
    "input": knowledge_base
}
response = requests.post(url, headers=headers, json=data)
```

Query encoding: `retrieval.query`

```python
data = {
    "model": "jina-embeddings-v3",
    "task": "retrieval.query",
    "late_chunking": True,
    "input": [query]
}
response = requests.post(url, headers=headers, json=data)
```

Note: The code above enables `late_chunking`, which improves the encoding of long texts; we cover it in detail later.

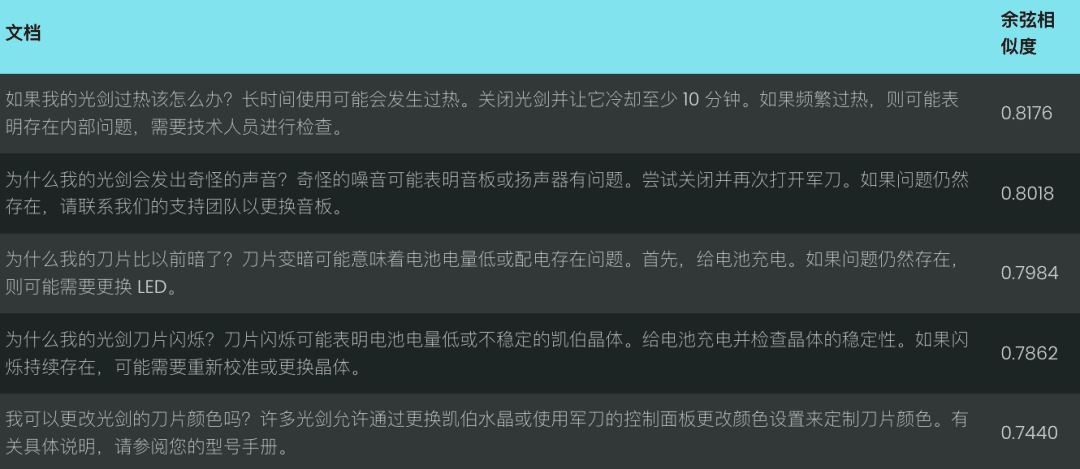

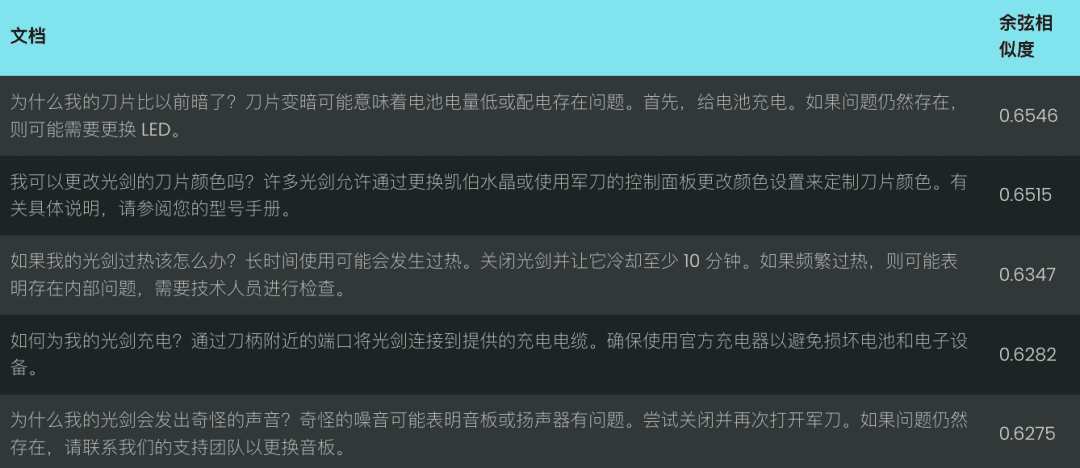

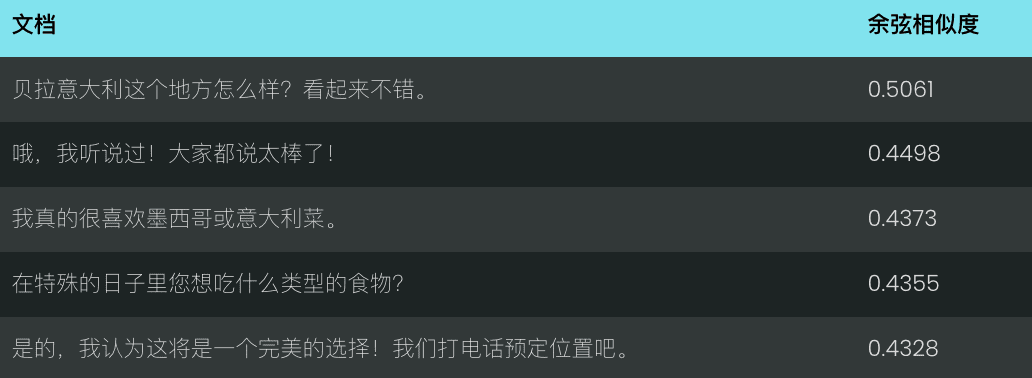

Let's compare how v2 and v3 handle the query "The lightsaber is too dark". Based on cosine similarity, v2 returns a set of less relevant matches (see the figures above).

In contrast, v3 better understands the intent of the query and returns more accurate results related to the appearance of the lightsaber blade.
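Ranking by cosine similarity works the same way for v2 and v3; only the embeddings change. A minimal top-k sketch, where `query_vec` would be the `retrieval.query` embedding and `doc_vecs` the `retrieval.passage` embeddings of `knowledge_base`; the 2-d vectors here are toy stand-ins and `top_k` is a hypothetical name.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def top_k(query_vec: list[float], doc_vecs: list[list[float]], k: int = 3) -> list[tuple[int, float]]:
    """Return (doc_index, score) pairs for the k most similar documents."""
    scored = [(i, cosine(query_vec, d)) for i, d in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

query_vec = [1.0, 0.0]
doc_vecs = [[0.0, 1.0], [1.0, 0.0], [0.7, 0.7]]
for index, score in top_k(query_vec, doc_vecs, k=2):
    print(index, round(score, 3))
```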

v3 does more than just retrieval; it also provides several other task-specific vector representations:

Vector representation of separation tasks

v3's `separation` task is optimized for tasks such as clustering and re-ranking, for example separating different types of entities, which is useful for organizing and visualizing large corpora.

Example: Distinguishing Star Wars and Disney Characters

```python
data = {
    "model": "jina-embeddings-v3",
    "task": "separation",  # use the separation task
    "late_chunking": True,
    "input": [
        "Darth Vader",
        "Luke Skywalker",
        "Mickey Mouse",
        "Donald Duck"
    ]
}
response = requests.post(url, headers=headers, json=data)
```
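Once you have `separation` embeddings, any clustering algorithm can group them. As a self-contained illustration, here is a small k-means with farthest-first initialization in plain Python; the toy 2-d vectors are hypothetical stand-ins for the four character embeddings. In practice you would use a library implementation such as scikit-learn's `KMeans`.

```python
import math

def normalize(v: list[float]) -> list[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def dist2(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(vectors: list[list[float]], k: int, iterations: int = 10) -> list[int]:
    """Cluster unit-normalized vectors; returns one cluster id per input."""
    points = [normalize(v) for v in vectors]
    # Farthest-first init: start from the first point, then repeatedly add
    # the point farthest from all centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(dist2(p, c) for c in centroids))
        centroids.append(farthest)
    assignment = [0] * len(points)
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        assignment = [min(range(k), key=lambda c: dist2(p, centroids[c])) for p in points]
        # Move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assignment

names = ["Darth Vader", "Luke Skywalker", "Mickey Mouse", "Donald Duck"]
toy_vectors = [[0.9, 0.1], [0.8, 0.3], [0.1, 0.9], [0.2, 0.8]]
for name, cluster in zip(names, kmeans(toy_vectors, k=2)):
    print(cluster, name)
```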

Vector representation of the classification task

v3's `classification` task is optimized for text classification tasks such as sentiment analysis and document categorization, for example sorting reviews into positive and negative.

Example: analyzing the sentiment of Star Wars movie reviews

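```python
data = {
    "model": "jina-embeddings-v3",
    "task": "classification",
    "late_chunking": True,
    "input": [
        "Star Wars is an epoch-making masterpiece that revolutionized the film industry and forever redefined science fiction movies!",
        "With stunning visual effects, unforgettable characters, and legendary storytelling, Star Wars is an unparalleled cultural phenomenon.",
        "Star Wars is an overhyped disaster, full of shallow characters and without any meaningful plot!"
    ]
}
response = requests.post(url, headers=headers, json=data)
```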
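`classification` embeddings can feed any standard classifier. A minimal nearest-centroid sketch: average the embeddings of labeled examples per class, then assign new texts to the class whose centroid is most similar. The toy vectors and labels are hypothetical stand-ins for embedded reviews.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def fit_centroids(vectors: list[list[float]], labels: list[str]) -> dict[str, list[float]]:
    """Average the embeddings belonging to each class."""
    grouped: dict[str, list[list[float]]] = {}
    for vec, label in zip(vectors, labels):
        grouped.setdefault(label, []).append(vec)
    return {label: [sum(col) / len(vs) for col in zip(*vs)] for label, vs in grouped.items()}

def predict(vec: list[float], centroids: dict[str, list[float]]) -> str:
    return max(centroids, key=lambda label: cosine(vec, centroids[label]))

train_vectors = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.7]]
train_labels = ["positive", "positive", "negative", "negative"]
centroids = fit_centroids(train_vectors, train_labels)
print(predict([0.7, 0.3], centroids))  # positive
```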

Vector representation of text matching

v3's `text-matching` task focuses on semantic similarity tasks such as sentence similarity or deduplication, for example removing repeated sentences or paragraphs.

Example: Recognizing repetition in Star Wars lines

```python
data = {
    "model": "jina-embeddings-v3",
    "task": "text-matching",
    "late_chunking": True,
    "input": [
        "Luke, I am your father.",
        "No, I am your father.",
        "Fear leads to anger, anger leads to hate, hate leads to the dark side.",
        "Fear leads to anger. Anger leads to hate. Hate leads to suffering."
    ]
}
response = requests.post(url, headers=headers, json=data)
```
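To turn `text-matching` embeddings into duplicate detection, compare every pair and flag pairs above a similarity threshold. The 0.9 threshold below is an arbitrary assumption you would tune on your own data, and the toy vectors stand in for the four quotes.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def near_duplicates(vectors: list[list[float]], threshold: float = 0.9) -> list[tuple[int, int, float]]:
    """Return (i, j, score) for every pair i < j with cosine similarity >= threshold."""
    pairs = []
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            score = cosine(vectors[i], vectors[j])
            if score >= threshold:
                pairs.append((i, j, score))
    return pairs

toy_vectors = [[1.0, 0.0], [0.95, 0.05], [0.0, 1.0], [0.05, 0.95]]
for i, j, score in near_duplicates(toy_vectors):
    print(i, j, round(score, 3))
```

The pairwise loop is O(n²); for large corpora an approximate nearest-neighbor index is the usual alternative.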

Late Chunking: Improving Long Text Encoding Results

v3 introduces the `late_chunking` parameter. When `late_chunking=True`, the model first processes the whole document and then splits it into chunks, producing chunk embeddings that contain the full contextual information. When `late_chunking=False`, the model processes each chunk independently, so the chunk embeddings carry no cross-chunk context.
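Conceptually, late chunking runs the model once over the whole text to get one contextual vector per token, then pools each chunk's token span into a chunk vector. The sketch below shows only the pooling step; it assumes mean pooling and precomputed token embeddings, and it illustrates the idea rather than the API's internal implementation.

```python
from typing import Sequence

def mean_pool(token_vectors: Sequence[Sequence[float]]) -> list[float]:
    """Average a non-empty list of token vectors dimension by dimension."""
    n = len(token_vectors)
    return [sum(col) / n for col in zip(*token_vectors)]

def late_chunk(
    token_embeddings: Sequence[Sequence[float]],
    spans: Sequence[tuple[int, int]],
) -> list[list[float]]:
    """Pool document-level token embeddings into one vector per (start, end) token span."""
    chunks = []
    for start, end in spans:
        if not 0 <= start < end <= len(token_embeddings):
            raise ValueError(f"invalid span ({start}, {end})")
        chunks.append(mean_pool(token_embeddings[start:end]))
    return chunks

# Toy data: 4 tokens with 2-d contextual embeddings from ONE pass over the whole text.
token_embeddings = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0]]
spans = [(0, 2), (2, 4)]  # chunk boundaries as token positions
print(late_chunk(token_embeddings, spans))  # [[2.0, 0.0], [0.0, 3.0]]
```

With naive chunking, each span would be encoded in its own forward pass, so its token vectors could not reflect the rest of the document.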

Note

- When `late_chunking=True`, the total number of tokens per API request cannot exceed 8192, the maximum context length supported by v3. When `late_chunking=False`, the total token count is not limited, but requests are subject to the Embeddings API's rate limits.
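If your chunks exceed the 8192-token limit in total, they must be spread across several requests. A greedy batching sketch follows; `approx_token_count` is a crude placeholder (one token per whitespace-separated word) that can badly undercount, especially for Chinese or Japanese text without spaces, so substitute the model's real tokenizer in practice. All names here are hypothetical.

```python
from typing import Callable

MAX_TOKENS = 8192  # per-request limit with late_chunking=True, per this guide

def approx_token_count(text: str) -> int:
    """Crude placeholder: one token per whitespace-separated word."""
    return len(text.split())

def batch_for_late_chunking(
    chunks: list[str],
    count_tokens: Callable[[str], int] = approx_token_count,
    max_tokens: int = MAX_TOKENS,
) -> list[list[str]]:
    """Greedily pack consecutive chunks into batches whose token total stays within max_tokens."""
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for chunk in chunks:
        n = count_tokens(chunk)
        if n > max_tokens:
            raise ValueError("a single chunk exceeds the token limit")
        if current and used + n > max_tokens:
            batches.append(current)
            current, used = [], 0
        current.append(chunk)
        used += n
    if current:
        batches.append(current)
    return batches

print(batch_for_late_chunking(["a b", "c d e", "f"], max_tokens=4))
```

Late chunking only shares context within a single request, so chunks placed in different batches do not see each other.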

For long texts, enabling `late_chunking` can significantly improve encoding quality, because it preserves cross-chunk context and makes the resulting embeddings more complete and accurate.

We use a chat transcript to evaluate the impact of `late_chunking` on long-text retrieval.

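```python
history = [
    "Sita, have you decided where to go for your birthday dinner on Saturday?",
    "I'm not sure, I'm not very familiar with the restaurants around here.",
    "We could look online for recommendations.",
    "That sounds good, let's do that!",
    "What kind of food would you like for your birthday?",
    "I really like Mexican or Italian food.",
    "How about this place, Bella Italia? It looks nice.",
    "Oh, I've heard of that place! Everyone says it's great!",
    "Shall we book a table, then?",
    "Yes, I think that would be the perfect choice! Let's call and make a reservation."
]
```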

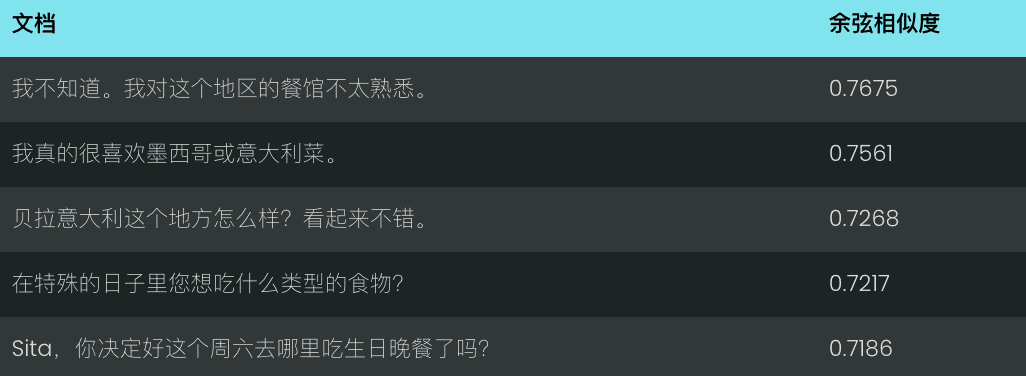

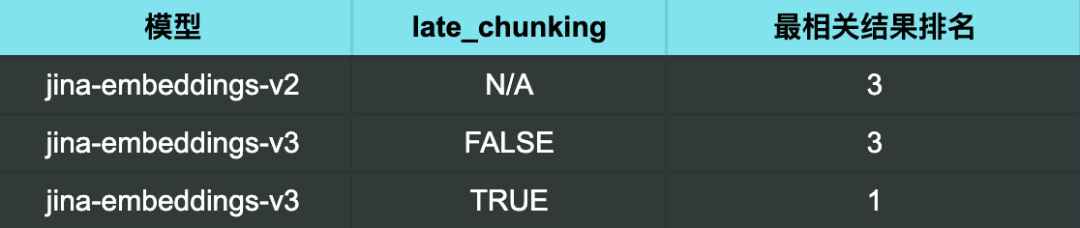

For the query "What are some good restaurant recommendations?", v2 returns results that are not particularly relevant.

With v3 and late chunking disabled, the results are equally unsatisfactory.

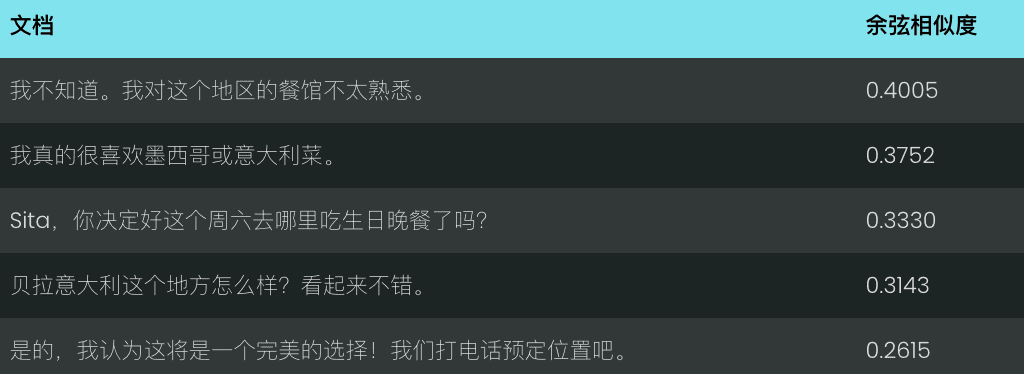

However, with v3 and late chunking enabled, the most relevant result (a good restaurant recommendation) is ranked first.

Search results:

The search results clearly show that, with `late_chunking` enabled, v3 identifies chat content relevant to the query more accurately and ranks the most relevant result first.

This also shows that `late_chunking` can effectively improve long-text retrieval accuracy, especially in scenarios that require a deep understanding of contextual semantics.

Balancing efficiency and performance with Matryoshka embeddings

v3's `dimensions` parameter gives flexible control over the output vector dimension, so you can adjust it to your actual needs and strike a balance between performance and storage space.

Smaller dimensions reduce the storage overhead of a vector database and speed up retrieval, but some information may be lost, degrading performance.

```python
data = {
    "model": "jina-embeddings-v3",
    "task": "text-matching",
    "dimensions": 768,  # set the output dimension to 768 (default is 1024)
    "input": [
        "The Force will be with you. Always.",
        "力量与你同在。永远。",
        "La Forza sarà con te. Sempre.",
        "フォースと共にあらんことを。いつも。"
    ]
}
response = requests.post(url, headers=headers, json=data)
```
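To put numbers on the storage side of the trade-off: raw float32 storage is vectors × dimensions × 4 bytes. The sketch computes it for a hypothetical corpus of 10 million vectors; it ignores index overhead (for example, the graph of an HNSW index) and any quantization your vector database may apply.

```python
BYTES_PER_FLOAT32 = 4

def raw_storage_gib(num_vectors: int, dimensions: int, bytes_per_value: int = BYTES_PER_FLOAT32) -> float:
    """Raw vector storage in GiB, excluding any index overhead."""
    return num_vectors * dimensions * bytes_per_value / 1024**3

corpus_size = 10_000_000  # hypothetical corpus
for dims in (1024, 768, 256):
    print(f"{dims:>4} dims: {raw_storage_gib(corpus_size, dims):.2f} GiB")
```

At 768 dimensions the raw storage is exactly 75% of the 1024-dimensional default, and at 256 dimensions it is 25%.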

FAQ

Q1: What is the advantage of Late Chunking if I already chunk documents before embedding them?

A1: The advantage of late chunking over pre-chunking is that it processes the entire document before chunking, thus retaining more complete contextual information. This matters for complex or lengthy documents: because the model has an overall understanding of the document before chunking, retrieval can return more relevant results. With pre-chunking, each chunk is processed independently, without the full context.

Q2: Why does v2 score higher than v3 on the pair classification benchmark? Should I worry?

A2: v2's seemingly higher score on pair classification is mainly due to differences in how the averages are computed. v3's test set contains more languages, so its average score is likely to be lower than v2's. In fact, v3 performs as well as or better than state-of-the-art models such as multilingual-e5 on pair classification across all languages.

Q3: Does v3 perform better on the specific languages supported by the v2 bilingual models?

A3: How v3 compares with the v2 bilingual models on a specific language depends on the language and the task type. The v2 bilingual models are highly optimized for their specific languages and may therefore perform better on some tasks. v3, however, is designed for a much wider range of multilingual scenarios, with stronger cross-lingual generalization and task-specific LoRA adapters optimized for various downstream tasks. As a result, v3 usually achieves better overall performance across multiple languages and in more complex task-specific scenarios such as semantic retrieval and text classification.

If you only need to handle one language pair supported by a v2 bilingual model (Chinese-English, German-English, Spanish-English) and your task is relatively simple, v2 is still a good choice and may even perform better in some cases.

But if you need to work with multiple languages, or if your task is more complex (e.g., you need to perform semantic retrieval or text categorization), then v3, with its strong cross-language generalization capabilities and optimization strategies based on downstream tasks, is a better choice.

Q4: Why does v2 outperform v3 on the summarization task? Should I worry?

A4: v2 performs better on the summarization task mainly because its architecture is specifically optimized for tasks such as semantic similarity, which is closely related to the summarization benchmark. v3 was designed to support a wider range of tasks, especially retrieval and classification, and is therefore less optimized than v2 for summarization.

However, one should not worry too much, as the evaluation of the summarization task currently relies on SummEval, a test that measures semantic similarity and does not fully represent the model's overall ability on the summarization task. Given that v3 performs well on other critical tasks such as retrieval, slight performance differences on the summarization task usually do not have a significant impact on real-world applications.

Summary

Jina Embeddings v3 is a major model upgrade that achieves state-of-the-art performance on multilingual and long-text retrieval tasks. Its built-in LoRA adapters let you tailor embeddings to retrieval, clustering, classification, and matching scenarios for more accurate results. We strongly recommend migrating to v3 as soon as possible.

That concludes our introduction to Jina Embeddings v3. We hope you find it helpful. If you have any questions, feel free to leave a comment!

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
