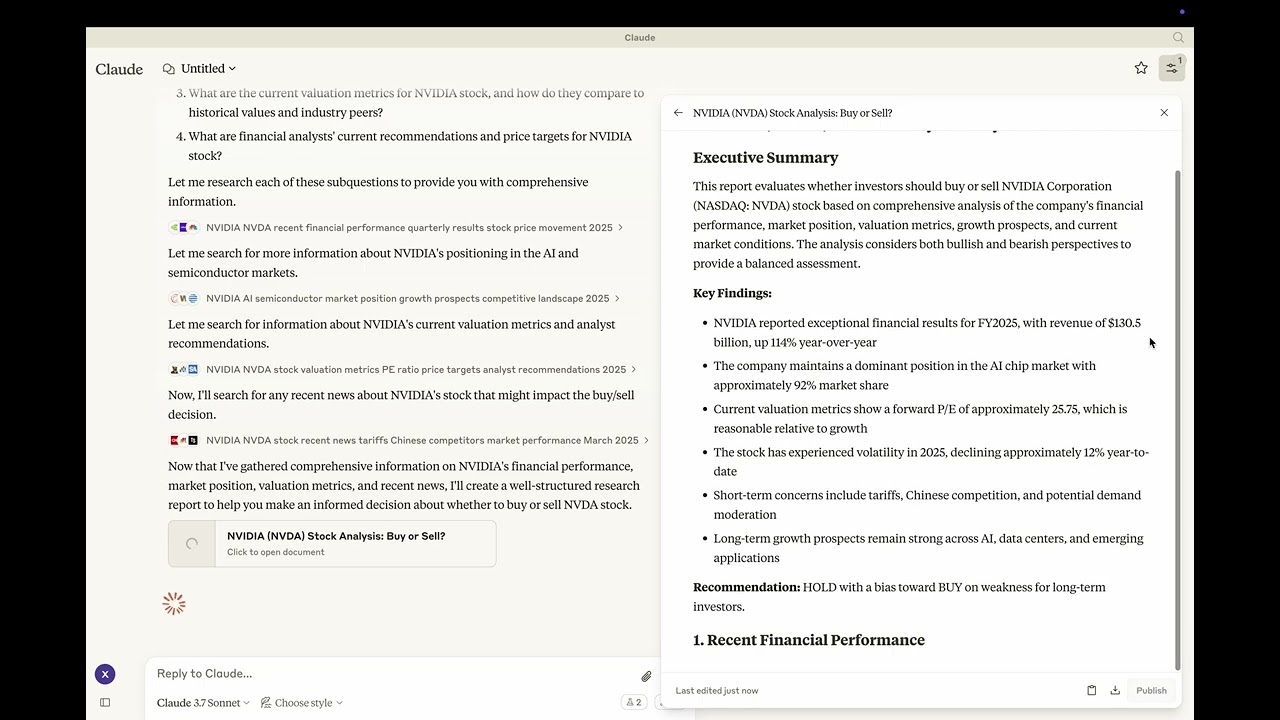

Instructor: a Python library to simplify structured output workflows for large language models

General Introduction

Instructor is a popular Python library for working with structured output from large language models (LLMs). Built on Pydantic, it provides a simple, transparent, and user-friendly API for data validation, retries, and streaming responses. Instructor has over a million downloads per month and is widely used across LLM workflows. Beyond Python, it is also available for TypeScript, Ruby, Go, and Elixir, and it integrates with a wide range of LLM providers, not just OpenAI.


Features

- Response models: Define the structure of the LLM output with Pydantic models.

- Retry management: Easily configure the number of retries for a request.

- Data validation: Ensure that the LLM response matches the expected structure.

- Streaming support: Easily handle lists and partial responses.

- Flexible backends: Integrate seamlessly with multiple LLM providers.

- Multi-language support: Available for Python, TypeScript, Ruby, Go, and Elixir.
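The streaming support listed above lets you consume results while the model is still generating. Instructor handles this internally; as a rough, stdlib-only illustration of streaming a list, the sketch below assumes (hypothetically) that the model emits a whitespace-separated sequence of JSON objects and yields each typed record as soon as that object is complete. The `UserInfo` dataclass and the `iter_objects` helper are illustrative names, not part of Instructor's API.

```python
import json
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class UserInfo:
    name: str
    age: int


def iter_objects(chunks: Iterable[str]) -> Iterator[UserInfo]:
    """Yield a UserInfo as soon as each complete JSON object has arrived.

    Assumes a hypothetical wire format: JSON objects separated only by whitespace.
    """
    decoder = json.JSONDecoder()
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        while True:
            stripped = buffer.lstrip()
            if not stripped:
                buffer = ""
                break
            try:
                # raw_decode parses one JSON value and reports where it ended
                obj, end = decoder.raw_decode(stripped)
            except json.JSONDecodeError:
                # The object is not complete yet: keep buffering
                buffer = stripped
                break
            yield UserInfo(**obj)
            buffer = stripped[end:]
```

For example, feeding the chunks `'{"name": "Jo'` and `'hn Doe", "age": 30}'` yields `UserInfo(name='John Doe', age=30)` only after the second chunk arrives.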

Usage Guide

Installation

To install Instructor, run the following command:

```shell
pip install -U instructor
```

Basic Usage

Here is a simple example showing how to use Instructor to extract structured data from natural language:

```python
import instructor
from pydantic import BaseModel
from openai import OpenAI

# Define the desired output structure
class UserInfo(BaseModel):
    name: str
    age: int

# Initialize the OpenAI client and patch it with Instructor
client = instructor.from_openai(OpenAI())

# Extract structured data from natural language
user_info = client.chat.completions.create(
    model="gpt-4o-mini",
    response_model=UserInfo,
    messages=[{"role": "user", "content": "John Doe is 30 years old."}],
)

print(user_info.name)  # Output: John Doe
print(user_info.age)  # Output: 30
```
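The `response_model` argument is what makes the call above return a typed `UserInfo` object. With Pydantic, `UserInfo.model_json_schema()` produces a JSON Schema describing the fields; roughly speaking, in its default tool-calling mode Instructor sends a schema like this to the provider and then validates the reply against the model. The stdlib sketch below approximates only the schema-generation step for a flat dataclass of scalar fields. `json_schema` is a hypothetical helper for illustration, and real Pydantic schemas carry extra keys (such as a per-field `title`).

```python
from dataclasses import dataclass, fields
from typing import Any

# Deliberately partial mapping from Python types to JSON Schema type names
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


@dataclass
class UserInfo:
    name: str
    age: int


def json_schema(model: type) -> dict[str, Any]:
    """Build a minimal JSON Schema object for a flat dataclass of scalar fields."""
    model_fields = fields(model)
    return {
        "title": model.__name__,
        "type": "object",
        "properties": {f.name: {"type": _JSON_TYPES[f.type]} for f in model_fields},
        # Every field here has no default, so all of them are required
        "required": [f.name for f in model_fields],
    }
```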

Using Hooks

Instructor provides a hook system that lets you intercept and log events at various stages of the LLM interaction. Below is a simple example showing how to use hooks:

```python
import instructor
from openai import OpenAI
from pydantic import BaseModel

class UserInfo(BaseModel):
    name: str
    age: int

# Initialize the OpenAI client and patch it with Instructor
client = instructor.from_openai(OpenAI())

# Use hooks to log the interaction: "completion:kwargs" receives the arguments
# sent to the provider, "completion:response" receives the raw response
client.on("completion:kwargs", lambda *args, **kwargs: print(f"Request: {kwargs}"))
client.on("completion:response", lambda response: print(f"Response: {response}"))

# Extract structured data from natural language
user_info = client.chat.completions.create(
    model="gpt-4o-mini",
    response_model=UserInfo,
    messages=[{"role": "user", "content": "Jane Doe is 25 years old."}],
)

print(user_info.name)  # Output: Jane Doe
print(user_info.age)  # Output: 25
```
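Conceptually, a hook system is an event registry: handlers are registered under an event name and called when the client reaches that stage of a request. The stdlib sketch below mirrors that pattern without any network call. `HookRegistry` and `fake_completion` are hypothetical names, and the event names are borrowed from Instructor only for readability; this is not Instructor's implementation.

```python
from collections import defaultdict
from typing import Any, Callable


class HookRegistry:
    """A hypothetical, minimal event-hook registry."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[..., Any]]] = defaultdict(list)

    def on(self, event: str, handler: Callable[..., Any]) -> None:
        # Register a handler; several handlers may share one event name
        self._handlers[event].append(handler)

    def emit(self, event: str, *args: Any, **kwargs: Any) -> None:
        # Call every handler registered for this event, in registration order
        for handler in self._handlers[event]:
            handler(*args, **kwargs)


def fake_completion(hooks: HookRegistry, **kwargs: Any) -> dict[str, Any]:
    """Stand-in for an LLM call that fires hooks before and after 'requesting'."""
    hooks.emit("completion:kwargs", **kwargs)
    response = {"content": '{"name": "Jane Doe", "age": 25}'}  # canned reply
    hooks.emit("completion:response", response)
    return response
```

Keeping handlers in a list per event lets logging, metrics, and debugging hooks coexist without knowing about each other.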

Advanced Usage

Instructor also integrates with LLM providers other than OpenAI and offers flexible configuration: you can customize the number of retries per request, the data validation rules, and how streaming responses are handled.
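In Instructor itself, retries are set with the `max_retries` argument to `create`, and validation rules are ordinary Pydantic validators (for example, `field_validator`); when validation fails, the error is sent back to the model and the request is retried. The stdlib sketch below imitates that validate-and-reask loop with a stubbed `llm` callable so it runs offline. `extract_with_retries`, the age bounds, and the re-ask wording are illustrative assumptions, not Instructor's implementation.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


@dataclass
class UserInfo:
    name: str
    age: int

    def __post_init__(self) -> None:
        # Illustrative validation rules: correct types and a plausible age
        if not isinstance(self.name, str) or not self.name:
            raise ValueError("name must be a non-empty string")
        if not isinstance(self.age, int) or not 0 <= self.age <= 150:
            raise ValueError("age must be an integer between 0 and 150")


def extract_with_retries(
    llm: Callable[[List[Message]], str],
    messages: List[Message],
    max_retries: int = 3,
) -> UserInfo:
    """Call `llm`, validate its JSON reply, and re-ask with the error on failure."""
    messages = list(messages)  # copy so the caller's list is not mutated
    last_error: Optional[Exception] = None
    for _ in range(max_retries):
        reply = llm(messages)
        try:
            return UserInfo(**json.loads(reply))
        except (TypeError, ValueError) as exc:  # JSONDecodeError is a ValueError
            last_error = exc
            # Feed the failed reply and the error back so the model can correct itself
            messages += [
                {"role": "assistant", "content": reply},
                {"role": "user", "content": f"Please fix this error: {exc}"},
            ]
    raise RuntimeError(f"validation failed after {max_retries} attempts") from last_error
```

Sending the validation message back, rather than simply repeating the same request, gives the model concrete feedback on what to fix.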

© Copyright notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
