Infinity: Bitwise Autoregressive Modeling for Unlimited High-Resolution Image Generation

General Introduction

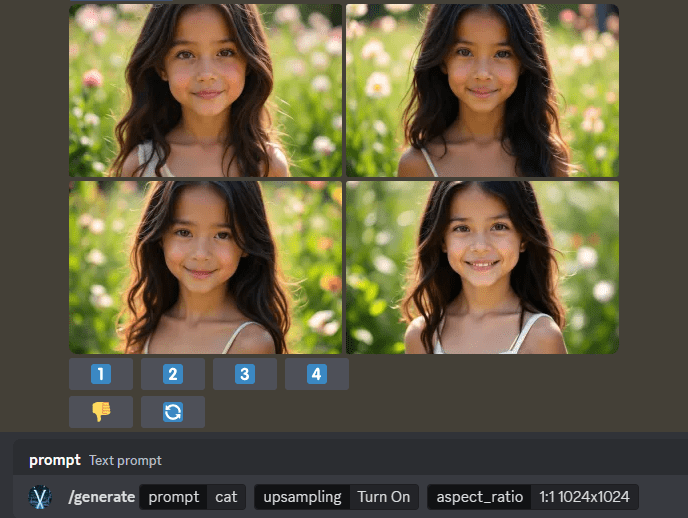

Infinity is a high-resolution image generation framework developed by the FoundationVision team. Unlike traditional image generation models, it uses bitwise visual autoregressive modeling. Its core components are an infinite-vocabulary tokenizer and classifier, together with a bitwise self-correction mechanism, which let it generate highly realistic, high-quality images. The project is fully open source, offers model sizes from 2B to 20B parameters, and supports image generation at resolutions up to 1024x1024. As a cutting-edge research project, Infinity advances the state of the art in computer vision and offers a new approach to image generation tasks.
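The sketch below makes the "infinite vocabulary" idea concrete. It assumes a tokenizer that quantizes each d-dimensional latent vector by the sign of each channel, so every token is a d-bit code. A conventional classifier would need one output per possible code (2^d of them). A bitwise classifier instead predicts each bit independently and needs only d binary predictions. The function names and the plain sign quantization are illustrative assumptions, not the project's code.

```python
from typing import List, Tuple


def binary_quantize(latent: List[float]) -> List[int]:
    """Map each latent channel to one bit: 1 if the value is positive, else 0."""
    return [1 if x > 0 else 0 for x in latent]


def bits_to_index(bits: List[int]) -> int:
    """Interpret a bit list (most significant bit first) as a single token index."""
    index = 0
    for b in bits:
        index = (index << 1) | b
    return index


def classifier_sizes(d: int) -> Tuple[int, int]:
    """Return (outputs of a flat softmax over all 2**d codes, binary outputs of a bitwise classifier)."""
    return 2 ** d, d


def bitwise_predict(bit_probs: List[float]) -> List[int]:
    """Decode each bit independently from its predicted probability of being 1."""
    return [1 if p >= 0.5 else 0 for p in bit_probs]


if __name__ == "__main__":
    latent = [0.7, -1.2, 0.05, -0.3]
    bits = binary_quantize(latent)
    print(bits, bits_to_index(bits))  # [1, 0, 1, 0] 10
    flat, bitwise = classifier_sizes(32)
    print(flat, bitwise)  # 4294967296 32
```

With 32 bits, the implicit vocabulary has over four billion entries. However, the bitwise head only grows linearly with d, which is what makes such a large vocabulary tractable.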

Join the Discord channel to try the Infinity image generation model.

Feature List

- The 2B-parameter model supports high-quality image generation at resolutions up to 1024x1024

- Provides a visual tokenizer with an infinite vocabulary, enabling finer-grained image feature representation

- Implements a bitwise self-correction mechanism to improve the quality and accuracy of generated images

- Supports flexible selection of multiple model sizes (125M, 1B, 2B, 20B parameters)

- Provides an interactive inference interface for experimenting with image generation

- Includes a complete training and evaluation framework

- Supports multi-dimensional evaluation of model performance (GenEval, DPG, HPSv2.1 and other metrics)

- Provides an online demo platform that allows users to experience image generation directly

Usage Guide

1. Environment setup

1.1 Basic requirements:

- Python environment

- PyTorch >= 2.5.1 (requires FlexAttention support)

- Install other dependencies via pip:

pip3 install -r requirements.txt
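FlexAttention only ships with recent PyTorch releases, so it can help to check the environment before installing the remaining dependencies. The following is a minimal sketch. It assumes FlexAttention is importable from torch.nn.attention.flex_attention, which is where PyTorch 2.5 places it. The helper names are hypothetical.

```python
import importlib.util
import re
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Parse 'X.Y.Z' into a tuple of ints, ignoring suffixes such as '+cu121' or 'a0'."""
    core = version.split("+")[0]
    parts = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def meets_minimum(installed: str, required: str = "2.5.1") -> bool:
    """True if the installed version is at least the required version."""
    return parse_version(installed) >= parse_version(required)


def check_torch() -> str:
    """Report whether PyTorch is installed, new enough, and exposes FlexAttention."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"
    import torch

    if not meets_minimum(torch.__version__):
        return f"PyTorch {torch.__version__} is older than 2.5.1"
    try:
        from torch.nn.attention.flex_attention import flex_attention  # noqa: F401
    except ImportError:
        return "FlexAttention is not available in this PyTorch build"
    return f"PyTorch {torch.__version__} with FlexAttention: OK"


if __name__ == "__main__":
    print(check_torch())
```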

2. Using the models

2.1 Quick start:

- Download the pre-trained model from Hugging Face: infinity_2b_reg.pth

- Download the visual tokenizer: infinity_vae_d32_reg.pth

- Run interactive_infer.ipynb for interactive image generation

2.2 Training configuration:

# Launch training with a single command

bash scripts/train.sh

# Training commands for models of different sizes

# 125M model (256x256 resolution)

torchrun --nproc_per_node=8 train.py --model=layer12c4 --pn 0.06M

# 2B model (1024x1024 resolution)

torchrun --nproc_per_node=8 train.py --model=2bc8 --pn 1M
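In the commands above, --pn appears to set the per-image pixel budget in millions of pixels: 256x256 is about 0.06M and 1024x1024 is about 1M. That reading is an assumption based on how the values line up with the resolutions. The sketch checks the arithmetic and assembles the same torchrun invocation as an argument list. build_train_command is a hypothetical helper, and it adds no flags beyond those shown.

```python
import shlex
from typing import List


def pixel_budget_millions(height: int, width: int) -> float:
    """Pixel count of a height x width image, in millions of pixels."""
    return height * width / 1_000_000


def build_train_command(model: str, pn: str, gpus: int = 8) -> List[str]:
    """Assemble the torchrun training invocation shown above as an argument list."""
    return [
        "torchrun",
        f"--nproc_per_node={gpus}",
        "train.py",
        f"--model={model}",
        "--pn",
        pn,
    ]


if __name__ == "__main__":
    print(pixel_budget_millions(256, 256))    # 0.065536, i.e. roughly 0.06M
    print(pixel_budget_millions(1024, 1024))  # 1.048576, i.e. roughly 1M
    print(shlex.join(build_train_command("2bc8", "1M")))
```

Keeping the command as a list makes it easy to pass to subprocess.run without shell quoting issues.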

2.3 Data preparation:

- Training data must be prepared in JSONL format (one JSON object per line)

- Each entry contains the image path, long and short text captions, the image aspect ratio, and other information

- The project provides a sample dataset for reference
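Here is a minimal sketch of writing and validating such a JSONL file. The field names (image_path, h_div_w, long_caption, short_caption) are hypothetical placeholders. Check the project's sample dataset for the exact schema before using them. Here h_div_w stores the aspect ratio as height divided by width.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, Iterable, List

# Hypothetical field names; verify against the project's sample dataset.
REQUIRED_KEYS = ("image_path", "h_div_w", "long_caption")


def make_record(image_path: str, height: int, width: int,
                long_caption: str, short_caption: str = "") -> Dict:
    """Build one training record, storing the aspect ratio as height / width."""
    return {
        "image_path": image_path,
        "h_div_w": height / width,
        "long_caption": long_caption,
        "short_caption": short_caption,
    }


def write_jsonl(records: Iterable[Dict], path: Path) -> None:
    """Write one JSON object per line."""
    with path.open("w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")


def read_jsonl(path: Path) -> List[Dict]:
    """Read records back, skipping blank lines and checking required keys."""
    records = []
    with path.open(encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue
            rec = json.loads(line)
            missing = [k for k in REQUIRED_KEYS if k not in rec]
            if missing:
                raise ValueError(f"line {line_no}: missing keys {missing}")
            records.append(rec)
    return records


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "train.jsonl"
        rec = make_record("images/0001.jpg", 768, 1024,
                          "A red fox sitting in fresh snow at dawn", "A fox in snow")
        write_jsonl([rec], path)
        print(read_jsonl(path))
```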

2.4 Model Evaluation:

- Multiple evaluation metrics are supported:

- ImageReward: estimates human preference scores for generated images

- HPS v2.1: a preference score trained on 798K human preference choices

- GenEval: evaluates text-to-image alignment

- FID: measures the quality and diversity of generated images
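For reference, FID is the Fréchet distance between Gaussians fitted to Inception-network features of real (r) and generated (g) images. Here μ denotes the feature means and Σ the covariance matrices, and lower values are better. This is the standard definition, not something specific to Infinity's evaluation scripts.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```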

2.5 Online demo:

- Visit the official demo platform: https://opensource.bytedance.com/gmpt/t2i/invite

- Enter a text description to generate a corresponding high-quality image

- Supports adjusting the image resolution and other generation parameters

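3. Advanced features

3.1 Bitwise self-correction:

- Detects and corrects errors made during generation: during training, a fraction of the bits is randomly flipped to simulate prediction mistakes, so the model learns to compensate for them at later scales

- Improves the quality and accuracy of generated images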
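The following is a minimal two-scale sketch of the self-correction idea. It assumes sign-based (±1) quantization and a hypothetical flip probability p. Each coarse code is flipped with probability p to imitate a wrong prediction. The fine-scale target is then computed from the residual left by the perturbed codes, so it encodes the correction the model should learn. Infinity uses many scales and its own quantizer, so this is an illustration of the principle, not the project's implementation.

```python
import random
from typing import List, Tuple


def quantize(values: List[float], scale: float) -> List[float]:
    """Binary quantization: each channel becomes +scale or -scale by its sign."""
    return [scale if v > 0 else -scale for v in values]


def flip_bits(codes: List[float], p: float, rng: random.Random) -> List[float]:
    """Flip each code's sign with probability p to simulate prediction errors."""
    return [-c if rng.random() < p else c for c in codes]


def two_scale_targets(feature: List[float], p: float,
                      rng: random.Random) -> Tuple[List[float], List[float]]:
    """Return (perturbed coarse codes, fine-scale target) for one training example.

    The fine target is quantized from the residual left by the *perturbed*
    coarse codes, so it points back toward the true feature wherever a bit
    was flipped.
    """
    coarse = flip_bits(quantize(feature, 1.0), p, rng)
    residual = [f - c for f, c in zip(feature, coarse)]
    fine = quantize(residual, 0.5)
    return coarse, fine


if __name__ == "__main__":
    rng = random.Random(0)
    print(two_scale_targets([0.9, -1.1], 0.0, rng))  # ([1.0, -1.0], [-0.5, -0.5])
    print(two_scale_targets([0.9, -1.1], 1.0, rng))  # ([-1.0, 1.0], [0.5, -0.5])
```

With p = 1.0 every coarse bit is wrong, and the fine-scale target flips direction accordingly. This is the signal a model would learn to produce after its own earlier mistakes.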

3.2 Model scaling:

- Supports flexible scaling of model size

- Multiple model sizes are available, from 125M to 20B parameters

- Adapts to different hardware environments and application requirements

4. Notes

- Make sure your hardware meets the model's requirements

- Large models require sufficient GPU memory

- Training is recommended on high-performance computing (HPC) hardware

- Back up training checkpoints regularly

- Comply with the project's MIT open-source license
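The advice about backing up checkpoints can be automated with a small helper. The following is a minimal sketch, assuming checkpoints are saved as .pth files in a single directory. It copies the most recently modified one into a backup directory under a timestamped name. The function name and directory layout are hypothetical.

```python
import os
import shutil
import tempfile
import time
from pathlib import Path
from typing import Optional


def backup_latest_checkpoint(ckpt_dir: Path, backup_dir: Path,
                             pattern: str = "*.pth") -> Optional[Path]:
    """Copy the newest checkpoint in ckpt_dir into backup_dir with a timestamp suffix."""
    candidates = sorted(ckpt_dir.glob(pattern), key=os.path.getmtime)
    if not candidates:
        return None  # nothing to back up yet
    latest = candidates[-1]
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    target = backup_dir / f"{latest.stem}-{stamp}{latest.suffix}"
    shutil.copy2(latest, target)  # copy2 also preserves file metadata such as mtime
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        ckpt_dir = Path(tmp) / "checkpoints"
        ckpt_dir.mkdir()
        (ckpt_dir / "ar-ckpt-latest.pth").write_bytes(b"weights")  # stand-in file
        print(backup_latest_checkpoint(ckpt_dir, Path(tmp) / "backups"))
```

Calling this from a cron job, or after each checkpoint save, keeps a rolling history of copies on separate storage.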

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
