IndexTTS: Text-to-Speech Tool with Chinese-English Mixing Support

General Introduction

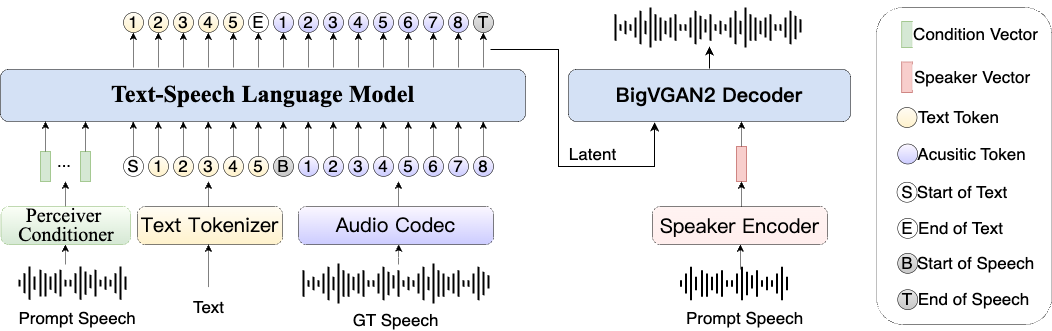

IndexTTS is an open-source text-to-speech (TTS) tool hosted on GitHub and developed by the index-tts team. It builds on XTTS and Tortoise and aims for efficient, high-quality speech synthesis through improved module design. IndexTTS was trained on tens of thousands of hours of data, supports both Chinese and English, and performs especially well in Chinese scenarios. It lets you correct mispronunciations with pinyin and control pauses in speech. The team has optimized sound quality, training stability, and timbre similarity, and claims that IndexTTS outperforms popular TTS systems such as XTTS and CosyVoice2. For access to the full functionality, contact the team at the official email address.

Function List

- Supports Chinese pinyin input to correct the pronunciation of polyphonic characters.

- Controls pause positions in speech through punctuation.

- Enhances audio quality with BigVGAN2.

- Integrates a Conformer conditional encoder to improve training stability and timbre similarity.

- Supports zero-shot speech synthesis: it can generate speech in a voice it was not specifically trained on.

- Handles mixed Chinese and English text.

Usage Guide

How to install

IndexTTS is currently an open-source project on GitHub, but no official installer or online service is available. To use it, you need to set up the environment yourself. Here are the installation steps:

- Prepare the environment

- Make sure your computer has Python 3.8 or later.

- Install Git for downloading the code.

- A GPU (e.g. an NVIDIA graphics card) is needed to accelerate processing; CUDA is recommended.
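The first two items of this checklist can be verified with a short script. This is a minimal sketch using only the Python standard library; the `check_environment` name is made up for illustration, and the 3.8 minimum is the one stated above. Whether a GPU is usable depends on PyTorch and is not covered here.

```python
import shutil
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in the steps above


def check_environment(min_python=MIN_PYTHON):
    """Return a dict describing whether the basic prerequisites are met."""
    return {
        "python_ok": sys.version_info[:2] >= min_python,
        "python_version": ".".join(str(part) for part in sys.version_info[:3]),
        # shutil.which returns the executable's path, or None if it is not on PATH
        "git_path": shutil.which("git"),
    }


report = check_environment()
print(f"Python {report['python_version']}: {'OK' if report['python_ok'] else 'too old'}")
print(f"git: {report['git_path'] or 'not found on PATH'}")
```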

- Download the code

Run the following in a terminal or command prompt:

git clone https://github.com/index-tts/index-tts.git

This will download the IndexTTS code locally.

- Install dependencies

- Go to the project folder:

cd index-tts

- Install the required libraries. Since no official <code>requirements.txt</code> file is specified, it is recommended to install common TTS dependencies such as PyTorch, NumPy and Torchaudio. You can try:

pip install torch torchaudio numpy

- If a specific dependency is still missing, check the import statements in the code and install it manually.
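One way to follow the last step is to list the top-level modules that the project's Python files import. The sketch below does this with the standard `ast` module; the helper names are hypothetical and not part of IndexTTS. An import name does not always match the package name on PyPI, so treat the result as a starting point only.

```python
import ast
from pathlib import Path


def imported_modules(source):
    """Return the set of top-level module names imported by Python source text."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                modules.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # level > 0 marks a relative import inside the project itself
            if node.level == 0 and node.module:
                modules.add(node.module.split(".")[0])
    return modules


def project_imports(root):
    """Collect imports from every .py file under root, skipping unparsable files."""
    found = set()
    for path in Path(root).rglob("*.py"):
        try:
            found |= imported_modules(path.read_text(encoding="utf-8"))
        except (SyntaxError, UnicodeDecodeError):
            continue
    return found


sample = "import torch\nfrom torchaudio import transforms\nfrom . import utils\n"
print(sorted(imported_modules(sample)))  # ['torch', 'torchaudio']
```

To scan the cloned repository, call `project_imports("index-tts")` and compare the result with the packages already installed.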

- Get the pre-trained models

- The IndexTTS pre-trained models are not directly open-sourced. You need to email <code>xuanwu@bilibili.com</code> to obtain the model files.

- After receiving the models, put the files into the project directory (refer to the official reply for the exact path).

- Run the project

- Assuming the model is in place, run the main script (the filename may be <code>main.py</code> or something similar; check the code to confirm):

python main.py

- If parameters are required (e.g. input text or configuration files), adjust the command according to the official documentation.

How to use the main features

After installation, the core function of IndexTTS is generating speech. Here is how to use it:

Generate Speech

- Input text

Find the text input section in the code (which may be a script parameter or interface input). For example:

python main.py --text "你好,这是测试文本。"

The input text can be in Chinese, English or mixed content.

Pinyin Correction of Pronunciation

- If you encounter problems with polyphonic characters, enter the pinyin directly. For example:

python main.py --text "yin2 hang2"  # read 银行 as "yin2 hang2", not "yin2 xing2"

- The system will generate correctly pronounced speech based on the pinyin.
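To make the idea concrete, the sketch below substitutes tone-numbered pinyin for selected words before the text is sent for synthesis. It is an illustration only: the `apply_pinyin_overrides` helper and the override table are hypothetical, and the exact pinyin notation the model accepts should be confirmed against the official documentation.

```python
def apply_pinyin_overrides(text, overrides):
    """Replace each word in `overrides` with its tone-numbered pinyin.

    Longer words are replaced first so that a short entry cannot
    break up a longer one that contains it.
    """
    for word in sorted(overrides, key=len, reverse=True):
        text = text.replace(word, f" {overrides[word]} ")
    # Normalize whitespace, including the padding added above
    return " ".join(text.split())


# 行 is polyphonic: "hang2" in 银行 (bank), "xing2" in 行走 (to walk)
overrides = {"银行": "yin2 hang2", "行走": "xing2 zou3"}
print(apply_pinyin_overrides("我去银行", overrides))  # 我去 yin2 hang2
```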

Control pauses

- When punctuation is added to text, IndexTTS automatically recognizes and adjusts the pauses. Example:

python main.py --text "你好,世界。这是一个测试。"

- Marks such as "," and "。" make the voice pause naturally, simulating the rhythm of real speech.
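As a rough illustration of where pauses would fall, the sketch below splits text at common Chinese and English punctuation marks. It only approximates the idea: how IndexTTS actually maps punctuation to pause lengths is not described here, and the set of marks is an assumption. A simple split like this also breaks decimals such as "3.14", so it is not a production tokenizer.

```python
import re

# Punctuation assumed to trigger a pause (half-width and full-width forms)
PAUSE_MARKS = ",.!?;:,。!?;:、"
_SPLIT_RE = re.compile(f"([{re.escape(PAUSE_MARKS)}])")


def split_at_pauses(text):
    """Split text into (segment, mark) pairs, one per pause-delimited chunk."""
    parts = _SPLIT_RE.split(text)
    # With a capture group, re.split alternates: segment, mark, segment, mark, ...
    segments = parts[0::2]
    marks = parts[1::2] + [""]
    return [(seg.strip(), mark) for seg, mark in zip(segments, marks) if seg.strip() or mark]


for segment, mark in split_at_pauses("你好,世界。这是一个测试。"):
    print(repr(segment), "pause at", repr(mark))
```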

Output audio

- The generated speech is usually saved as a WAV file. After running the script, check the project directory for a file such as <code>output.wav</code>.

- You can open the file with an audio player, or specify the output path yourself:

python main.py --text "测试" --output "my_audio.wav"
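Once a file has been produced, its basic properties can be checked without extra dependencies. The sketch below uses Python's standard `wave` module to report sample rate, channel count, and duration. It assumes an uncompressed PCM WAV file, and `wav_info` is a hypothetical helper name.

```python
import wave


def wav_info(path):
    """Return sample rate, channel count, and duration (seconds) of a PCM WAV file."""
    with wave.open(path, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "sample_rate": rate,
            "channels": wav.getnchannels(),
            "duration_s": frames / rate,
        }
```

For example, `print(wav_info("my_audio.wav"))` shows whether the output has the expected length before you listen to it.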

Using the Featured Functions

Zero-shot speech synthesis

- IndexTTS supports zero-shot synthesis and can imitate voices it was not trained on.

- How to do it: provide a piece of reference audio (usually in WAV format). Assuming the code supports it:

python main.py --text "hello" --ref_audio "reference.wav"

- The system analyzes the timbre of the reference audio to generate a similar sound.
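The commands in this article assume a command-line interface that the project may not actually provide. As a sketch of what such a wrapper could look like, the parser below accepts the same hypothetical flags (`--text`, `--ref_audio`, `--output`) and checks that the reference audio exists before synthesis would start. The synthesis call itself is left out because the real API is not documented here.

```python
import argparse
from pathlib import Path


def build_parser():
    """Build a parser for the hypothetical flags used in this article."""
    parser = argparse.ArgumentParser(description="Hypothetical IndexTTS wrapper")
    parser.add_argument("--text", required=True, help="text to synthesize")
    parser.add_argument("--ref_audio", type=Path, default=None,
                        help="reference audio for zero-shot synthesis")
    parser.add_argument("--output", type=Path, default=Path("output.wav"),
                        help="where to save the generated audio")
    return parser


def parse_args(argv=None):
    """Parse arguments and fail early if the reference audio is missing."""
    parser = build_parser()
    args = parser.parse_args(argv)
    if args.ref_audio is not None and not args.ref_audio.is_file():
        # parser.error prints a usage message and exits with status 2
        parser.error(f"reference audio not found: {args.ref_audio}")
    return args
```

Failing early on a missing reference file avoids loading a large model only to discover the typo afterwards.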

High quality audio output

- IndexTTS uses BigVGAN2 to improve sound quality. No additional settings are needed: as long as the model is loaded correctly, the output audio should be clearer than that of typical TTS systems.

- Make sure your hardware supports GPU acceleration, otherwise processing will slow down.

Caveats

- If the run reports an error, check that PyTorch is compatible with your GPU.

- The official documentation may be incomplete, so we recommend checking <code>README.md</code> or the code comments.

- For deeper parameter tuning, you can study the configuration of Conformer and BigVGAN2 (knowledge of programming and TTS principles is required).
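For the first caveat, a quick check is to ask PyTorch which device it can see. The sketch below uses `torch.cuda.is_available()` and related calls, and falls back gracefully if PyTorch is not installed; the `describe_torch_device` name is made up for illustration.

```python
def describe_torch_device():
    """Return a short description of the device PyTorch would use."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    if torch.cuda.is_available():
        # Report the first visible GPU and the CUDA version PyTorch was built with
        return f"CUDA {torch.version.cuda} on {torch.cuda.get_device_name(0)}"
    return "CPU only (no usable CUDA device found)"


print(describe_torch_device())
```

If this reports CPU only on a machine with an NVIDIA card, the installed PyTorch build probably does not match the driver or CUDA version.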

Application Scenarios

- Educational aids

Teachers can use IndexTTS to convert text to speech to help students with listening practice. The pinyin correction feature also helps teach correct pronunciation.

- Content creation

Streamers and video uploaders ("UP owners") can use it to generate voiceovers, especially for video content that mixes Chinese and English.

- Voice assistant development

Developers can use IndexTTS to build intelligent customer service that mimics a real human voice and provides a natural conversational experience.

- Language learning

Students can use it to practice pronunciation by converting words or sentences into speech, then listening and imitating them repeatedly.

Q&A

- What languages are supported by IndexTTS?

It mainly supports Chinese and English, and can handle mixed text. Support for other languages is unknown and needs to be tested.

- How do I get full functionality?

Email <code>xuanwu@bilibili.com</code> to obtain the pre-trained models and detailed instructions.

- How powerful a computer do I need to run it?

A GPU (e.g. an NVIDIA graphics card) is recommended; a CPU will work but is slow. At least 8 GB of RAM is needed.

- Is it free?

The code is open source and free of charge, but commercial use may be restricted; check with the official team.

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
