Inception Labs Releases First Commercial-Grade Diffusion Large Language Model

Inception Labs introduces the Mercury family of diffusion large language models (dLLMs), which are up to 10x faster and cheaper than existing LLMs, pushing language modeling to new frontiers of intelligence and speed.

Key points

- Inception Labs has officially released the Mercury family of diffusion large language models (dLLMs), marking the arrival of a new generation of LLMs and a new level of fast, high-quality text generation.

- Mercury is up to 10x faster than current speed-optimized LLMs. On NVIDIA H100 GPUs, Mercury models run at more than 1,000 tokens/second, a speed previously achievable only with custom chips.

- The code generation model Mercury Coder is now available in open beta on the playground platform. Inception Labs gives enterprise customers access to code models and general-purpose models through an API and local deployments.

Inception Labs' Vision - Diffusion Empowering the Next Generation of LLMs

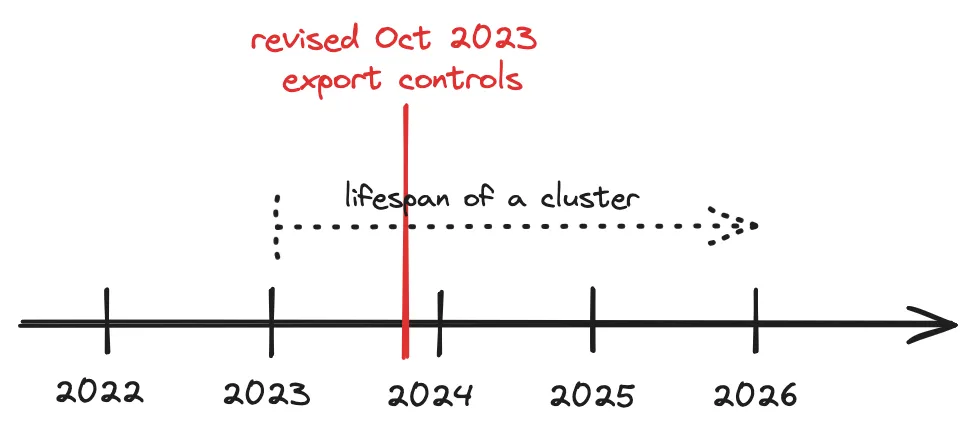

Current large language models (LLMs) are generally autoregressive, which means they generate text the way a person writes: from left to right, one token at a time. This generation is inherently serial: a token cannot be generated until all the tokens before it have been generated, and generating each token requires evaluating a neural network with billions of parameters. Industry-leading LLM companies are betting on increased inference-time computation to improve their models' reasoning and error-correction capabilities, but generating long reasoning traces also drives up inference costs and latency, ultimately making products difficult to use. A paradigm shift is needed to make high-quality AI solutions truly ubiquitous.
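The serial dependency described above can be illustrated with a toy loop. This is a minimal sketch and not any real model: `toy_next_token` is a hypothetical stand-in for one full forward pass of the network, and the example sentence is made up. The only point is that producing n tokens takes n sequential model calls.

```python
from typing import List, Tuple


def toy_next_token(prefix: List[str], target: List[str]) -> str:
    """Hypothetical stand-in for one forward pass of an autoregressive model.

    A real model would predict the next token from the prefix; this toy
    simply looks it up in a fixed target sequence.
    """
    return target[len(prefix)]


def generate_autoregressive(target: List[str]) -> Tuple[List[str], int]:
    """Generate tokens left to right and count the sequential model calls."""
    output: List[str] = []
    calls = 0
    while len(output) < len(target):
        # Each new token depends on everything generated so far,
        # so these calls cannot run in parallel.
        output.append(toy_next_token(output, target))
        calls += 1
    return output, calls


tokens, calls = generate_autoregressive("def add ( a , b ) : return a + b".split())
print(f"{len(tokens)} tokens took {calls} sequential model calls")
```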

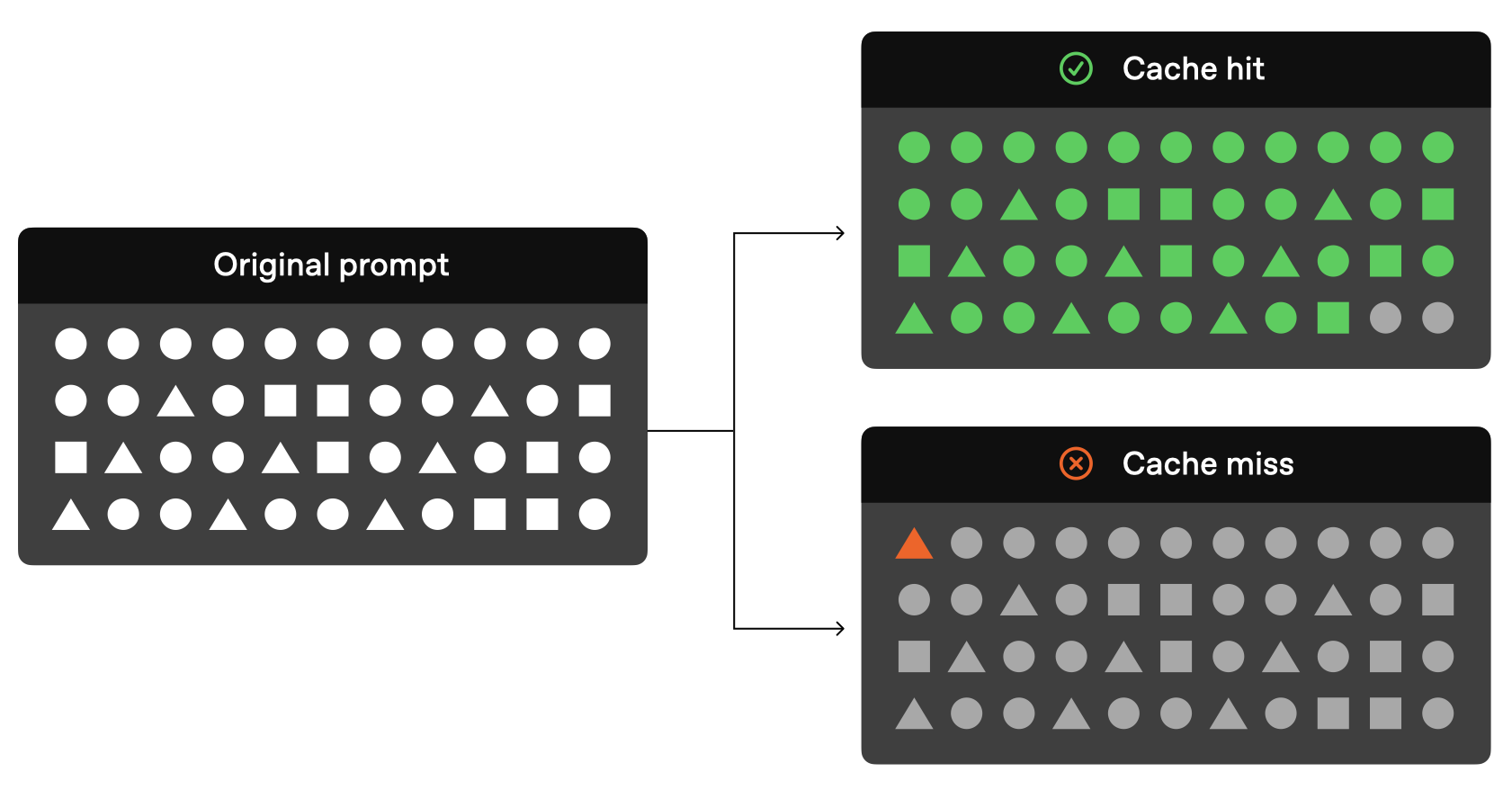

Diffusion models offer the possibility of such a paradigm shift. These models generate output in a "coarse-to-fine" process: as shown in the video, the output starts as pure noise and is progressively refined over several "denoising" steps.

Unlike autoregressive models, diffusion models are not constrained to consider only previously generated output. This makes them better at reasoning and at structuring their responses. In addition, because diffusion models can continuously refine their output, they can correct errors and reduce hallucinations. These advantages are why diffusion models power many of today's leading AI solutions for video, image, and audio generation, such as Sora, Midjourney, and Riffusion. However, attempts to apply diffusion models to discrete data such as text and code had never succeeded. Mercury changes that.

Mercury Coder - 1000+ Tokens/Second, Cutting Edge Intelligence at Your Fingertips

Inception Labs is excited to announce the release of Mercury Coder, the first publicly available dLLM.

Mercury Coder pushes the boundaries of AI capabilities: it is 5-10x faster than current-generation LLMs and delivers high-quality responses at a much lower cost. It was made possible by the groundbreaking research of the Inception Labs founding team, who not only pioneered image diffusion models but also co-invented several core generative AI technologies, including Direct Preference Optimization (DPO), Flash Attention, and Decision Transformers.

dLLMs can serve as drop-in replacements for existing autoregressive LLMs and support all of their application scenarios, including RAG (Retrieval-Augmented Generation), tool use, and agent workflows. When it receives a user query, a dLLM does not generate the answer token by token; instead, it generates the answer in a coarse-to-fine manner, as shown in the animation above. The Transformer model used in Mercury Coder has been trained on a large amount of data to optimize the quality of the answer globally, modifying multiple tokens in parallel so that the result improves continuously.
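The coarse-to-fine idea can be sketched with a toy refinement loop. This is an illustration under stated assumptions, not Mercury's actual algorithm, which the article does not describe: `toy_denoise_step` is a hypothetical denoiser that already knows the answer and corrects up to `per_step` positions per pass. The sketch only shows that, when several tokens are updated per pass, the number of passes can be much smaller than the number of tokens.

```python
import random
from typing import List, Tuple


def toy_denoise_step(draft: List[str], target: List[str], per_step: int) -> List[str]:
    """Hypothetical stand-in for one denoising pass.

    A real denoiser would predict better tokens from the whole draft; this toy
    corrects up to `per_step` wrong positions in a single pass.
    """
    wrong = [i for i, (d, t) in enumerate(zip(draft, target)) if d != t]
    refined = list(draft)
    for i in wrong[:per_step]:
        refined[i] = target[i]
    return refined


def generate_coarse_to_fine(target: List[str], per_step: int, seed: int = 0) -> Tuple[List[str], int]:
    """Start from random tokens ("pure noise") and refine until the draft is clean."""
    if per_step < 1:
        raise ValueError("per_step must be at least 1")
    rng = random.Random(seed)
    vocab = sorted(set(target))
    draft = [rng.choice(vocab) for _ in target]  # noisy initial draft
    steps = 0
    while draft != target:
        draft = toy_denoise_step(draft, target, per_step)
        steps += 1
    return draft, steps


target = "def add ( a , b ) : return a + b".split()
draft, steps = generate_coarse_to_fine(target, per_step=4)
print(f"{len(target)} tokens refined in {steps} passes")
```

With 12 tokens and 4 corrections per pass, the loop needs at most 3 passes, compared with 12 sequential calls for token-by-token generation of the same sequence.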

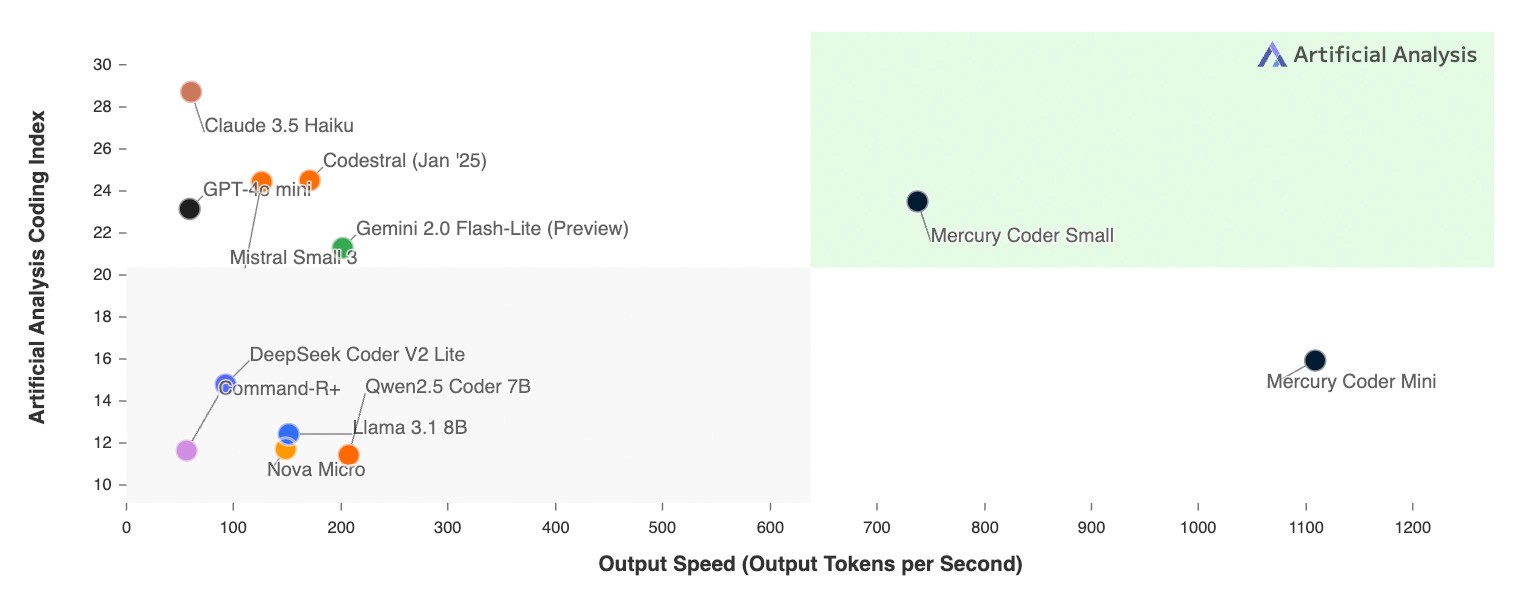

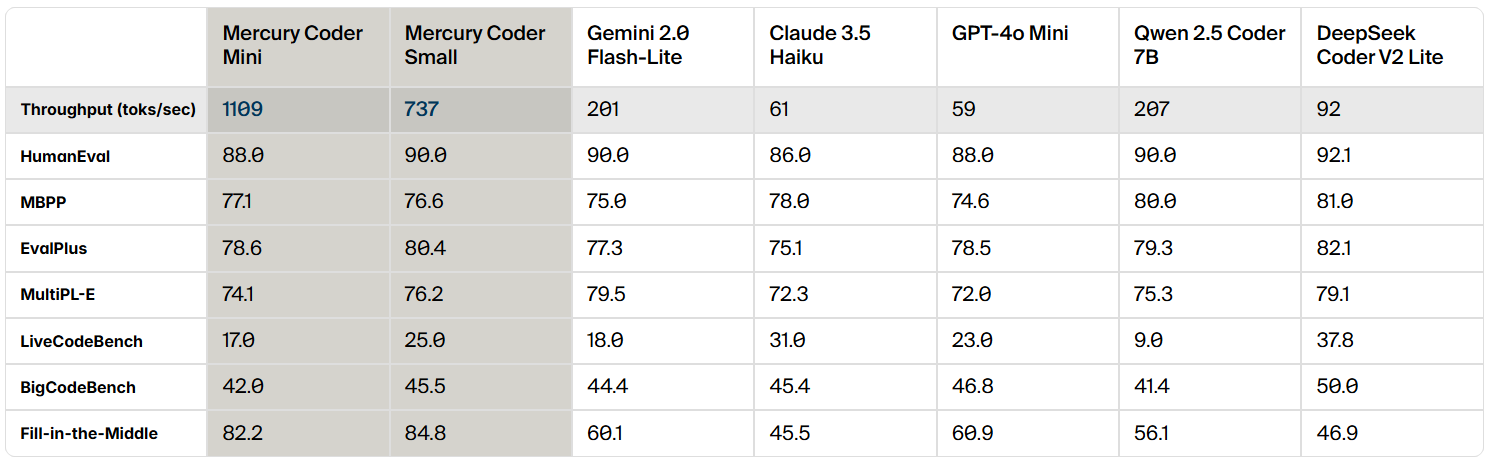

Mercury Coder is a dLLM optimized for code generation. On standard coding benchmarks, it performs well across a wide range of tests, often outperforming speed-optimized autoregressive models such as GPT-4o Mini and Claude 3.5 Haiku while being up to 10x faster.

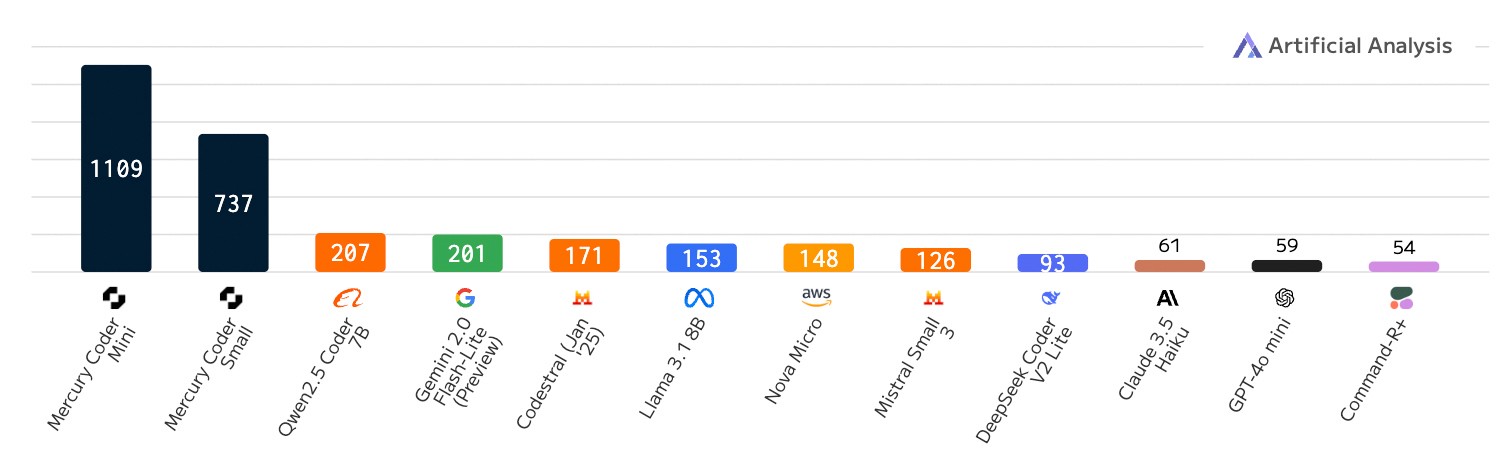

The distinguishing feature of dLLMs is their speed. While even speed-optimized autoregressive models run at no more than 200 tokens/second, Mercury Coder runs at over 1,000 tokens/second on a general-purpose NVIDIA H100 GPU, a 5x speedup. Compared with some frontier models, which can run at less than 50 tokens/second, Mercury Coder is more than 20x faster.
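The ratios quoted above follow from simple division. The sketch below works them out for a hypothetical 2,000-token response; the response length is an assumption chosen for illustration, and the three rates are the boundary figures quoted in the text rather than measurements.

```python
def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a constant output rate."""
    return num_tokens / tokens_per_second


RESPONSE_TOKENS = 2000  # assumption: an illustrative response length

# Boundary figures quoted in the article, in tokens/second.
rates = {
    "Mercury Coder on H100": 1000,
    "speed-optimized autoregressive model": 200,
    "slower frontier model": 50,
}

mercury_rate = rates["Mercury Coder on H100"]
for name, rate in rates.items():
    elapsed = seconds_to_generate(RESPONSE_TOKENS, rate)
    speedup = mercury_rate / rate
    print(f"{name}: {elapsed:.1f} s (Mercury is {speedup:.0f}x this rate)")
```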

Previously, throughput at this level was possible only with specialized hardware from vendors such as Groq, Cerebras, and SambaNova. Mercury Coder's algorithmic improvements complement hardware acceleration, so the speedups would compound on faster chips.

Speed comparison: output tokens per second on coding workloads

Even more exciting, developers prefer Mercury Coder's code completions. On the Copilot Arena benchmark, Mercury Coder Mini tied for second place, outperforming speed-optimized models such as GPT-4o Mini and Gemini-1.5-Flash and even matching the performance of larger models such as GPT-4o. It is also the fastest model there, about 4x faster than GPT-4o Mini.

Inception Labs invites you to experience the power of Mercury Coder for yourself. Inception Labs has partnered with Lambda Labs to provide trial access to Mercury Coder on the playground platform. Experience how Mercury Coder generates high-quality code in a fraction of the time, as shown in the video below.

What does this mean for AI applications?

Early adopters of Mercury dLLMs, including market leaders in customer support, code generation, and enterprise automation, are successfully switching from standard autoregressive base models to Mercury dLLMs as drop-in replacements. This shift translates directly into a better user experience and lower costs. In latency-sensitive applications, partners were often forced to choose smaller, less capable models in order to meet stringent latency requirements. Now, thanks to the superior performance of dLLMs, these partners can use larger, more powerful models while still meeting their original cost and speed requirements.

Inception Labs provides access to the Mercury family of models through both an API and local deployments. Mercury models are fully compatible with existing hardware, datasets, and supervised fine-tuning (SFT) and alignment (RLHF) processes. Fine-tuning is supported for both API and local deployments.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
