Hunyuan Text-to-Video: high-quality video with a realistic, cinematic feel, from Tencent's open source large video generation model

General Introduction

Tencent Hunyuan Text-to-Video (available in the Yuanbao app) is an AI-based video generation platform launched by Tencent. The platform uses the Tencent Hunyuan large model, with its cross-domain knowledge and natural language understanding, to generate high-quality video content from users' text descriptions. Whether the target style is realistic or virtual, Tencent Hunyuan text-to-video can produce it, helping users turn their ideas into vivid video works. The platform suits a variety of creative needs, from personal creation to commercial applications, and provides a convenient, efficient video generation solution.

HunyuanVideo is an open source video generation framework launched by Tencent that aims to provide high-quality video generation capabilities. The project is based on PyTorch and includes pre-trained models, inference code, and sample videos. HunyuanVideo employs a number of key technologies, such as data curation, joint image-video model training, and an efficient infrastructure that supports large-scale model training and inference. With over 13 billion parameters, it is one of the largest video generation models in the open source space. HunyuanVideo outperforms many leading closed-source models in visual quality, motion diversity, text-video alignment, and generation stability.
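To put the parameter count in perspective, the sketch below estimates the memory needed just to hold the weights. It assumes exactly 13 billion parameters (the text says "over 13 billion") and the usual storage sizes of 4 bytes per parameter for 32-bit floats and 2 bytes for 16-bit formats. Activations and any auxiliary components are not counted, so real requirements will be higher.

```python
# Rough weight-memory estimate for a 13B-parameter model.
# Assumption: exactly 13e9 parameters; the real count is "over 13 billion".
PARAMS = 13_000_000_000
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2}


def weight_memory_gib(params: int, bytes_per_param: int) -> float:
    """Return the size of the raw weights in GiB (2**30 bytes)."""
    return params * bytes_per_param / 2**30


for name, size in BYTES_PER_PARAM.items():
    print(f"{name}: {weight_memory_gib(PARAMS, size):.1f} GiB")
```

Under these assumptions the weights alone take roughly 24 GiB in a 16-bit format and about twice that in 32-bit.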

Accelerated version of the Hunyuan video generation model: FastHunyuan. It takes only 6 diffusion steps to generate a high-quality video, which is about 8 times faster than the original version's 50 steps.
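The "8 times" figure follows from the step counts alone. A minimal check, assuming every diffusion step costs about the same and ignoring fixed overhead such as text encoding and video decoding (both are simplifying assumptions, not measurements):

```python
# Step counts quoted in the text above.
ORIGINAL_STEPS = 50
FAST_STEPS = 6


def step_speedup(original_steps: int, fast_steps: int) -> float:
    """Ideal speedup if run time is proportional to the number of steps."""
    if fast_steps <= 0:
        raise ValueError("fast_steps must be positive")
    return original_steps / fast_steps


print(f"{step_speedup(ORIGINAL_STEPS, FAST_STEPS):.1f}x")  # prints "8.3x"
```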

Online Experience: https://video.hunyuan.tencent.com/

Function List

- Text-to-Video: The user inputs a text description and the platform automatically generates the corresponding video content.

- Multi-style support: Support video generation in both realistic and virtual styles to meet different creative needs.

- High quality output: Generates videos with high physical accuracy and scene consistency, providing a theater-quality visual experience.

- Continuous Action Generation: The ability to generate continuous action scenes ensures smooth and natural video.

- Artistic Shots: Supports director-level camera work to give videos an artistic presentation.

- Physical Compliance: The generated video conforms to the laws of physics and reduces the viewer's sense of dissonance.

Usage Guide

Function Operation Guide

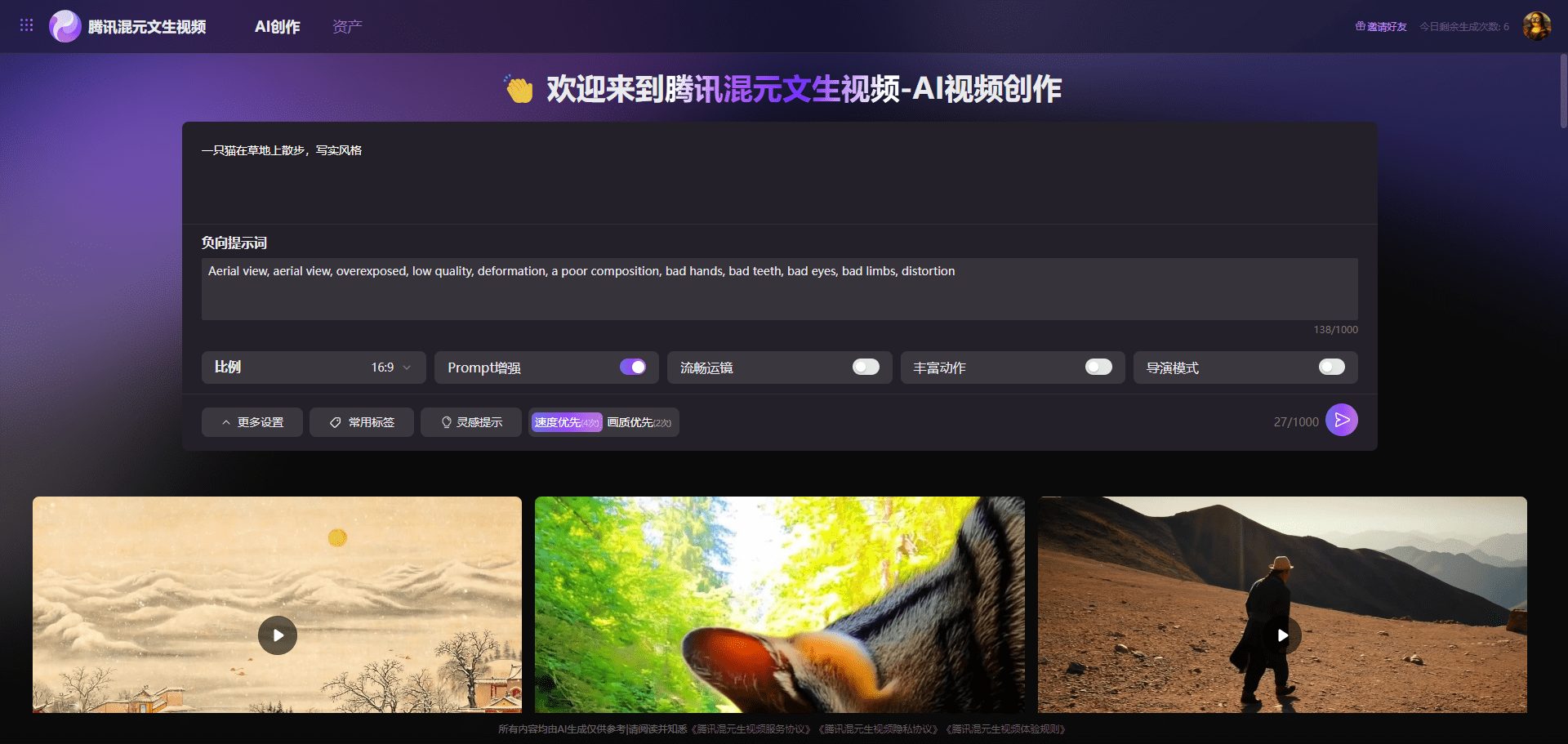

Text-to-Video

- Input text description: Enter the description of the video you want to generate in the text box. For example: "A little girl lights matches in winter, the sky is dark and the ground is covered with a layer of snow".

- Choose a style: Choose the style of video you want, either realistic or virtual.

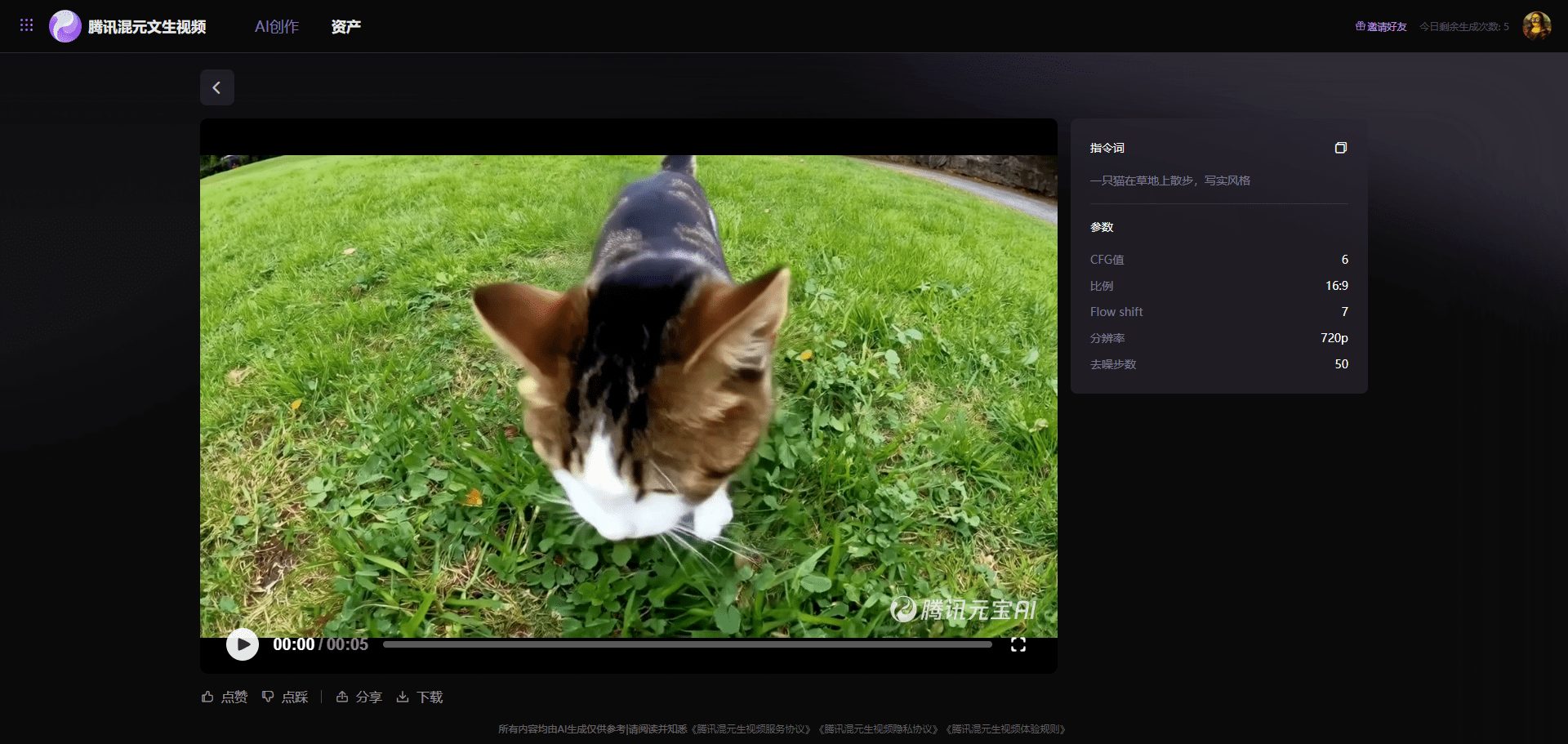

- Generate Video: Click the "Generate" button and the system will automatically generate the corresponding video content.

- Preview & Download: After generating, you can preview the video effect and download and save it when you are satisfied.

Multi-style support

- Style Switching: When generating videos, you can freely switch between realistic and virtual styles to meet different creative needs.

- Style Presets: The platform provides a variety of style presets so that users can choose the right style for video generation as needed.

High quality output

- Cinema-quality experience: Generates videos with high physical accuracy and scene consistency, providing a theater-quality visual experience.

- Detailed adjustments: Users can make detailed adjustments to the generated video to ensure that every frame meets expectations.

Continuous Action Generation

- Input continuous action description: Enter a scene of continuous action in the text description, e.g. "A person running on a treadmill".

- Generate continuous action video: The system will automatically generate videos of continuous movements to ensure smooth and natural movements.

Artistic Shots

- Shot Selection: The platform supports a wide range of shot options, allowing users to choose the appropriate camera shot for generation.

- Artistic Expression: The resulting video uses artistic camera work, providing a more expressive visual effect.

Physical Compliance

- Physical Rules: The generated video conforms to the laws of physics and reduces the viewer's sense of dissonance.

- Scenario Consistency: Ensure consistency and coherence in every scene in the video.

Common Problems

- Video generation failure: Please check that the text description entered meets the requirements, or try to regenerate it.

- Login Issues: If you can't log in, please make sure your cell phone number and verification code are correct, or contact customer service for assistance.

Installation and Deployment Process

- Environment dependencies: Ensure that Python 3.8 or later is installed, then install the required dependency libraries.

pip install -r requirements.txt

- Download pre-trained model: Download the pre-trained model from the project page and place it in the specified directory.

- Run the inference code: Use the following command to run the inference code and generate the video.

python sample_video.py --input_text "description text for the video to generate"
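The install-and-run steps above can be wrapped in a small launcher. This is a sketch, not part of the project: the script name and the `--input_text` flag are copied from the command shown in this article and may differ in the version of the repository you download, and `check_python`, `build_inference_command`, and `run_inference` are hypothetical helper names.

```python
import subprocess
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in this guide


def check_python(version=None) -> bool:
    """Return True if the interpreter meets the stated minimum version."""
    version = sys.version_info if version is None else version
    return tuple(version[:2]) >= MIN_PYTHON


def build_inference_command(prompt: str) -> list:
    """Build the argument list for the inference command shown above.

    Assumption: sample_video.py accepts --input_text, as in this article.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return [sys.executable, "sample_video.py", "--input_text", prompt]


def run_inference(prompt: str) -> None:
    """Run inference; call this from the repository root."""
    if not check_python():
        raise RuntimeError("Python 3.8 or later is required")
    subprocess.run(build_inference_command(prompt), check=True)


# Show the command that would be executed, without running it.
print(build_inference_command("A cat walks on the grass"))
```

Passing the arguments as a list rather than a shell string avoids quoting problems when the prompt contains quotes or commas.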

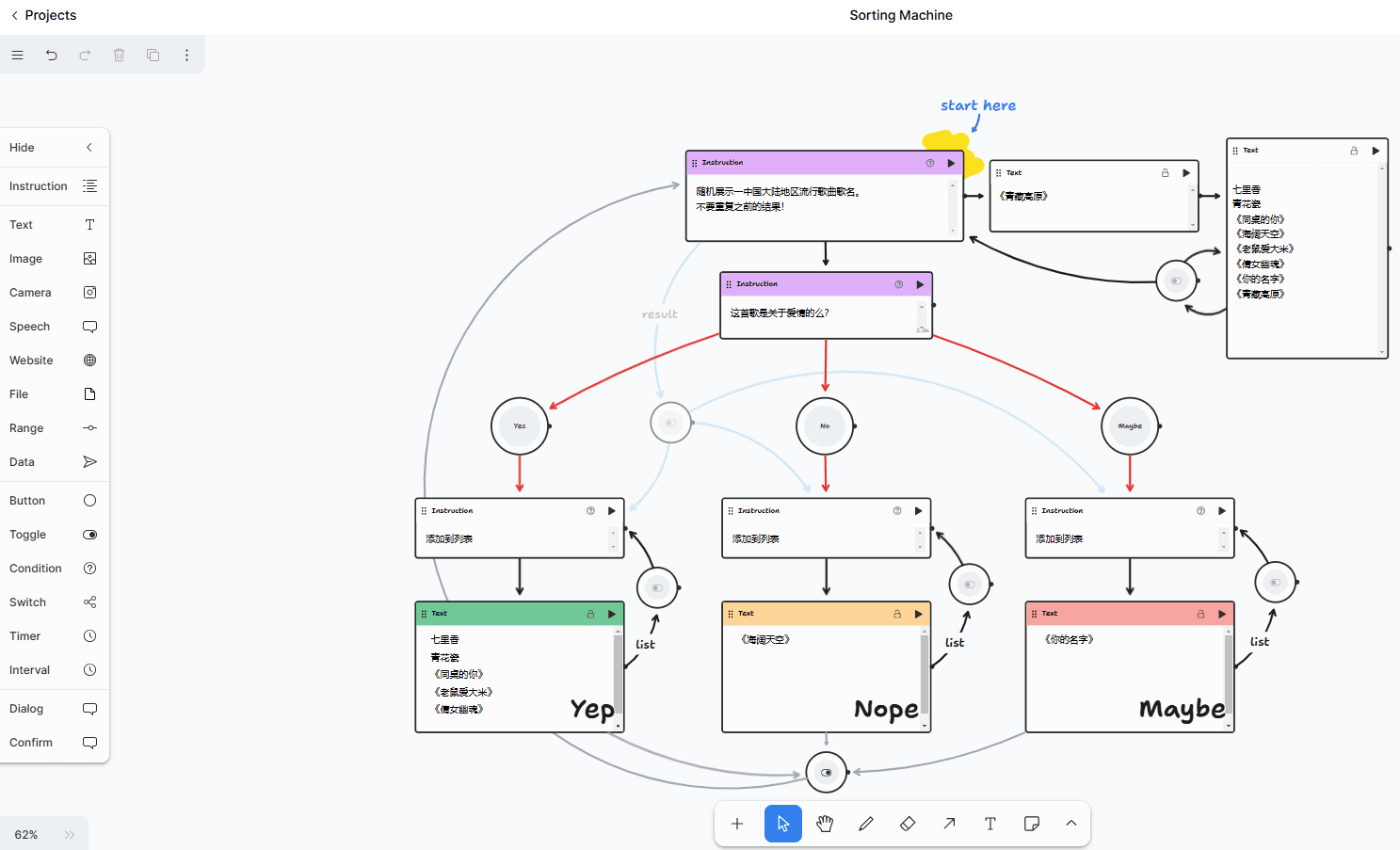

Functional Operation Flow

Text to Video Generation

- Input text description: Enter the description text on the command line or in the Gradio Web Demo.

- Run the inference code: Execute the inference code to generate the corresponding video file.

- View Generated Results: View the generated video file in the output directory.
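To check the results after a run, the generated files can be listed newest first. The sketch assumes the videos are saved as `.mp4` files in a directory named `results`; both are assumptions for illustration, so substitute the output directory your run actually uses.

```python
from pathlib import Path


def list_videos(output_dir: str, suffix: str = ".mp4") -> list:
    """Return video files in output_dir, most recently modified first."""
    directory = Path(output_dir)
    if not directory.is_dir():
        return []
    files = [
        p for p in directory.iterdir()
        if p.is_file() and p.suffix.lower() == suffix
    ]
    return sorted(files, key=lambda p: p.stat().st_mtime, reverse=True)


for video in list_videos("results"):  # "results" is a hypothetical directory name
    size_mib = video.stat().st_size / 2**20
    print(f"{video.name}\t{size_mib:.1f} MiB")
```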

Image to Video Generation

- Input Image File: Provide the path to the input image file.

- Run the inference code: Execute inference code to generate dynamic videos.

- View Generated Results: View the generated video file in the output directory.

Multi-GPU Parallel Inference

- Configure the multi-GPU environment: Ensure that multiple GPUs are installed on the system and that the CUDA environment is configured.

- Run the parallel inference code: Run the multi-GPU parallel inference code using the following command.

python sample_video.py --input_text "description text for the video to generate" --gpus 4
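Before launching a multi-GPU run, it helps to confirm that the requested GPU count does not exceed what the machine has. In this sketch the `--input_text` and `--gpus` flags are taken from the command as shown in this article and may not match the current repository, the helper name is hypothetical, and the number of available GPUs is passed in by the caller (on a machine with PyTorch installed, `torch.cuda.device_count()` can supply it).

```python
import sys


def build_multi_gpu_command(prompt: str, gpus: int, available: int) -> list:
    """Build the multi-GPU command, refusing counts the machine cannot satisfy.

    Assumption: --input_text and --gpus behave as shown in this article.
    """
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    if gpus > available:
        raise ValueError(f"requested {gpus} GPUs but only {available} available")
    return [
        sys.executable, "sample_video.py",
        "--input_text", prompt,
        "--gpus", str(gpus),
    ]


# Example: 4 GPUs requested on a machine that reports 8.
print(build_multi_gpu_command("A person running on a treadmill", gpus=4, available=8))
```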

Gradio Web Demo

- Start the Gradio server: Start the Gradio Web Demo server by running the following command.

python gradio_server.py

- Access the Web Demo: Open the provided URL in your browser and try the text-to-video generation feature.
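If the browser cannot reach the demo, a quick first check is whether anything is listening on the server's port. The sketch below assumes the server runs on the local machine on port 7860, which is Gradio's default; the actual address is the URL printed when `gradio_server.py` starts.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Assumed address: localhost on Gradio's default port.
print("demo reachable:", is_port_open("127.0.0.1", 7860))
```

A successful connection only shows that some process is listening on the port, not that the model has finished loading.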

Pre-trained Model Download

- Visit the project page: Go to HunyuanVideo's GitHub project page.

- Download pre-trained model: Click on the download link for the pre-trained model file.

- Placement of model files: Place the downloaded model file in the specified directory of the project.
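After placing the model files, a quick sanity check can catch an empty or partially downloaded directory. The directory name `ckpts` used here is a placeholder, since this article does not name the target directory; use the path given on the project page. `summarize_model_dir` is a hypothetical helper.

```python
from pathlib import Path


def summarize_model_dir(model_dir: str):
    """Return (file_count, total_bytes, empty_files) for everything under model_dir."""
    root = Path(model_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"model directory not found: {root}")
    files = [p for p in root.rglob("*") if p.is_file()]
    empty = [p for p in files if p.stat().st_size == 0]
    total = sum(p.stat().st_size for p in files)
    return len(files), total, empty


if Path("ckpts").is_dir():  # placeholder path; adjust to your setup
    count, total, empty = summarize_model_dir("ckpts")
    print(f"{count} files, {total / 2**30:.1f} GiB, {len(empty)} empty")
```

Zero-byte files usually indicate an interrupted download and are worth fetching again before running inference.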

With the steps above, users can get started with HunyuanVideo and try out its high-quality video generation.

HunyuanVideo One-Click Integration Pack

Quark: https://pan.quark.cn/s/ae28d498f451

Baidu: https://pan.baidu.com/s/1PgJKZiey98rKWZzPFzT6-w?pwd=pwk8

© Copyright Notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
