GPT4Free: Reverse-engineer AI chat website interfaces and use multiple GPT models for free!

General Introduction

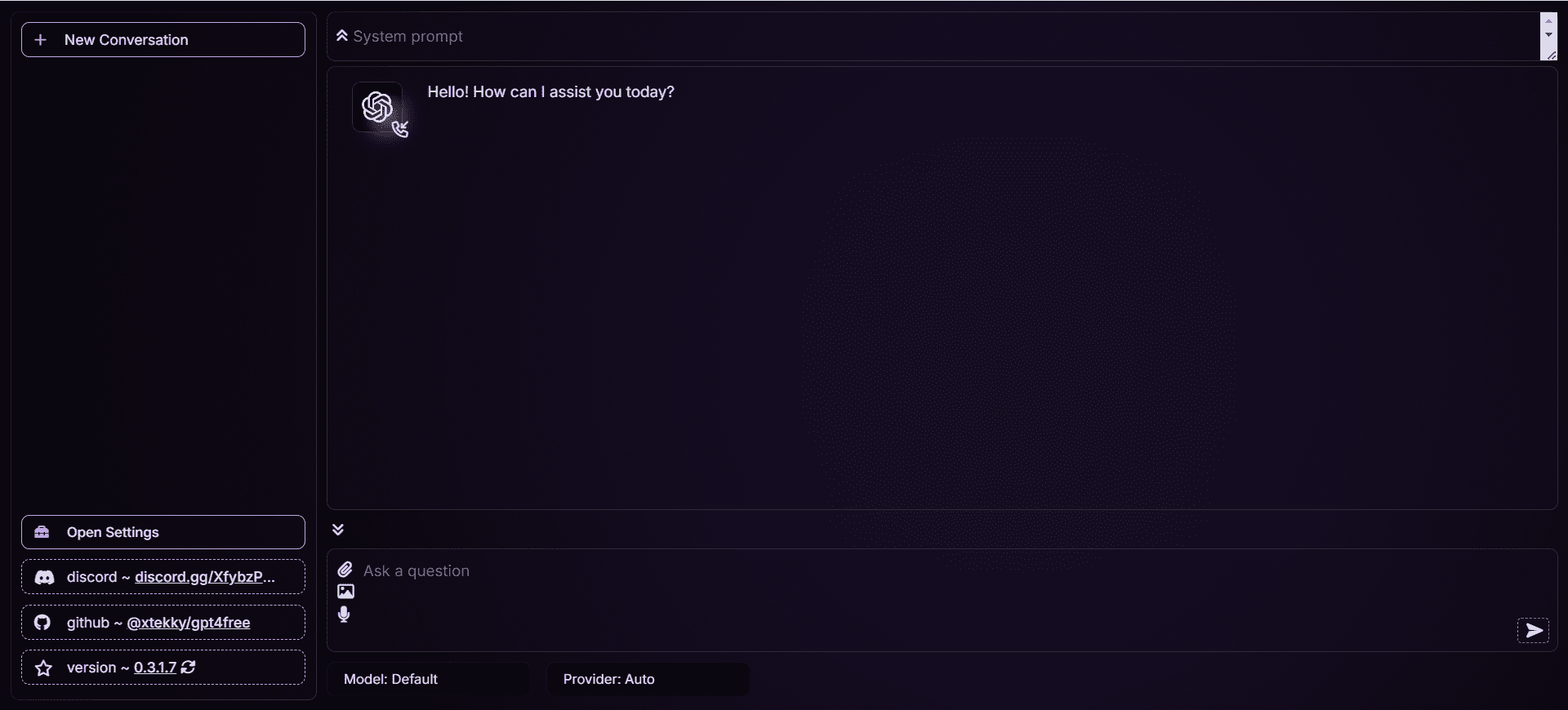

GPT4Free is an open-source project released on GitHub by developer xtekky. It aims to provide free access to a range of powerful language models, including GPT-3.5, GPT-4, Llama, Gemini-Pro, Bard and Claude. By aggregating requests across multiple APIs, the project offers features such as timeouts, load balancing, and flow control. Users can access these advanced language models after a simple installation and configuration.
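The timeout and load-balancing behaviour described above can be illustrated with a small round-robin balancer. This is a minimal sketch, not GPT4Free's actual implementation: each "provider" is modelled as a hypothetical Python callable that takes a prompt and returns a reply, and `RoundRobinBalancer` is an illustrative name. Each request starts at the next provider in turn and fails over to the others if a call raises an error or exceeds the timeout.

```python
import concurrent.futures
from typing import Callable, List, Sequence

# Hypothetical provider: a callable that sends a prompt to one backend and
# returns its reply. This is NOT part of GPT4Free's real API.
Provider = Callable[[str], str]


class RoundRobinBalancer:
    """Rotate requests across providers, failing over on error or timeout."""

    def __init__(self, providers: Sequence[Provider], timeout: float = 10.0):
        if not providers:
            raise ValueError("at least one provider is required")
        self.providers: List[Provider] = list(providers)
        self.timeout = timeout
        self._next = 0  # index of the provider to try first on the next call
        # Worker threads let us stop waiting on a slow provider.
        self._pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)

    def ask(self, prompt: str) -> str:
        n = len(self.providers)
        first = self._next
        self._next = (self._next + 1) % n  # round-robin: advance the start index
        errors = []
        for offset in range(n):
            provider = self.providers[(first + offset) % n]
            future = self._pool.submit(provider, prompt)
            try:
                # Wait at most `timeout` seconds for this provider's reply.
                return future.result(timeout=self.timeout)
            except Exception as exc:  # provider error or TimeoutError
                errors.append(exc)  # remember the failure, try the next provider
        raise RuntimeError(f"all {n} providers failed: {errors!r}")
```

Note that a timed-out call is abandoned rather than cancelled: its worker thread keeps running until the provider returns, so in practice the provider calls themselves should also have their own timeouts (for example, HTTP client timeouts).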

This project depends on a number of third-party services, and its reverse-engineered website interfaces are now outdated. To deploy an up-to-date version, use a fork of the project; https://github.com/xiangsx/gpt4free-ts is recommended. Alternatively, use Sealos one-click deployment.

Online experience: https://gptgod.online/

Feature List

- Multi-model support: Supports multiple language models such as GPT-3.5, GPT-4, Llama, Gemini-Pro, Bard and Claude.

- Open source and free: Fully open source; users can use and modify the code for free.

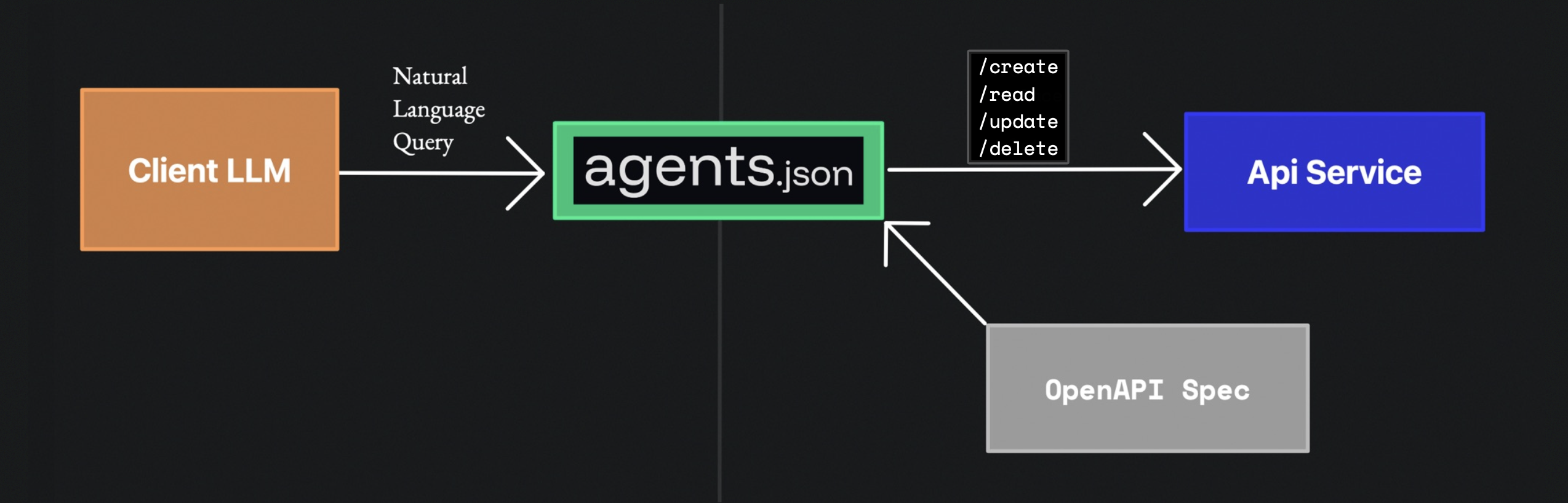

- API Integration: Handles API requests with support for timeouts, load balancing, and flow control.

- Documentation and Tutorials: Detailed documentation and tutorials to help users get started quickly.

- Community Support: An active community where users can discuss the project and get help on GitHub, Telegram, and Discord.
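Flow control is commonly implemented with a token bucket. The sketch below assumes that technique; the article does not say which algorithm GPT4Free uses, and `TokenBucket` and `try_acquire` are hypothetical names. The bucket allows bursts of up to `capacity` requests and refills at `rate` tokens per second; the injectable `clock` makes the behaviour easy to test.

```python
import threading
import time
from typing import Callable


class TokenBucket:
    """Hypothetical token-bucket rate limiter (a generic flow-control sketch)."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate              # tokens added per second
        self.capacity = capacity      # maximum burst size
        self.tokens = float(capacity)  # start full
        self.clock = clock
        self.last = clock()
        self.lock = threading.Lock()  # safe to share across request threads

    def try_acquire(self) -> bool:
        """Take one token if available; return False if the caller must wait."""
        with self.lock:
            now = self.clock()
            # Refill in proportion to elapsed time, capped at capacity.
            self.tokens = min(self.capacity,
                              self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= 1:
                self.tokens -= 1
                return True
            return False
```

A server would call `try_acquire()` before forwarding each request and reject the request (for example with HTTP 429) when it returns `False`.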

How to Use

Installation process

- Download the code:

- Open a terminal and run the following command to clone the project:

```shell
git clone https://github.com/xtekky/gpt4free.git
```


- Install dependencies:

- Go to the project directory and run the following command to install the required dependencies:

```shell
cd gpt4free
pip install -r requirements.txt
```


- Configure the environment:

- Configure environment variables and API keys as needed; refer to the project documentation for the specific steps.
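As an illustration of this configuration step, the sketch below reads settings from environment variables, applies defaults, and validates them. The variable names (`GPT4FREE_HOST`, `GPT4FREE_PORT`, `GPT4FREE_TIMEOUT`, `GPT4FREE_API_KEY`) and the `Settings`/`load_settings` names are hypothetical placeholders, not the project's documented settings; check the project documentation for the real ones. The default port 8000 matches the API example in this article.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    host: str
    port: int
    timeout: float
    api_key: Optional[str]


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read hypothetical GPT4FREE_* variables, falling back to defaults."""
    port = int(env.get("GPT4FREE_PORT", "8000"))
    if not 0 < port < 65536:
        raise ValueError(f"GPT4FREE_PORT out of range: {port}")
    return Settings(
        host=env.get("GPT4FREE_HOST", "127.0.0.1"),
        port=port,
        timeout=float(env.get("GPT4FREE_TIMEOUT", "30")),
        # An empty string is treated the same as "not set".
        api_key=env.get("GPT4FREE_API_KEY") or None,
    )
```

Passing a plain dictionary instead of `os.environ` (for example `load_settings({"GPT4FREE_PORT": "9000"})`) makes the function easy to test without touching the real environment.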

Guidelines for use

- Start the service:

- Run the following command to start the service:

```shell
python main.py
```


- Call the API:

- Send requests to the provided API; a simple example is shown below:

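```python
import requests

# Endpoint of the locally running service
url = "http://localhost:8000/api/v1/gpt4"
payload = {
    "model": "gpt-4",
    "prompt": "Hello, GPT-4!",
    "max_tokens": 100,
}

response = requests.post(url, json=payload, timeout=60)
response.raise_for_status()  # raise an error for 4xx/5xx responses
print(response.json())
```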


- Use Docker:

- If you prefer to use Docker, you can run the following command to start a Docker container:

```shell
docker-compose up -d
```

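Whether the service is started with `python main.py` or `docker-compose up -d`, it can take a moment before it accepts connections. The sketch below waits for the port to open and then sends the same kind of request as the API example, using only the standard library (`urllib.request`) instead of `requests`. The endpoint path and payload fields come from this article's example and are assumptions about your deployment; `wait_for_port` and `ask` are hypothetical helper names, and the reply is returned as parsed JSON because its exact shape is not documented here.

```python
import json
import socket
import time
import urllib.request
from typing import Any

# Endpoint from the article's example; adjust to match your deployment.
API_URL = "http://localhost:8000/api/v1/gpt4"


def wait_for_port(host: str, port: int, deadline_s: float = 30.0) -> bool:
    """Poll until host:port accepts TCP connections or the deadline passes."""
    end = time.monotonic() + deadline_s
    while time.monotonic() < end:
        try:
            # A successful connect means the server is listening.
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.2)  # not up yet; retry shortly
    return False


def ask(prompt: str, model: str = "gpt-4", max_tokens: int = 100,
        url: str = API_URL, timeout: float = 60.0) -> Any:
    """POST the article's payload format as JSON and return the decoded reply."""
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises urllib.error.HTTPError on 4xx/5xx responses.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example: `if wait_for_port("localhost", 8000): print(ask("Hello, GPT-4!"))`.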

Common Problems

- How do I get an API key?

- Refer to the project documentation for detailed instructions on obtaining and configuring API keys.

- What should I do if I encounter an error?

- Check that the dependencies are installed correctly and that the environment variables are configured correctly, or ask for help in the community.
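For the first troubleshooting step, a short script can compare `requirements.txt` against the packages installed in the current environment using the standard library's `importlib.metadata`. This is a simplified sketch with hypothetical helper names (`requirement_names`, `missing_packages`): it reads only package names, does not check version constraints, and skips option lines such as `-r` or `-e`.

```python
import re
from importlib import metadata
from pathlib import Path
from typing import Iterable, List


def requirement_names(lines: Iterable[str]) -> List[str]:
    """Extract bare package names from requirements.txt-style lines.

    Simplified: drops comments, blank lines and option lines (starting
    with "-"), and strips version specifiers, extras and markers.
    """
    names = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            names.append(match.group(0))
    return names


def missing_packages(names: Iterable[str]) -> List[str]:
    """Return the names that are not installed in the current environment."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    path = Path("requirements.txt")
    if path.exists():
        reqs = path.read_text(encoding="utf-8").splitlines()
        print("missing:", missing_packages(requirement_names(reqs)) or "none")
    else:
        print("requirements.txt not found; run this from the gpt4free directory")
```

Any names it reports can be installed again with `pip install -r requirements.txt`.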

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
