F5-TTS: Sample less speech cloning to generate smooth and emotionally rich cloned voices

General Introduction

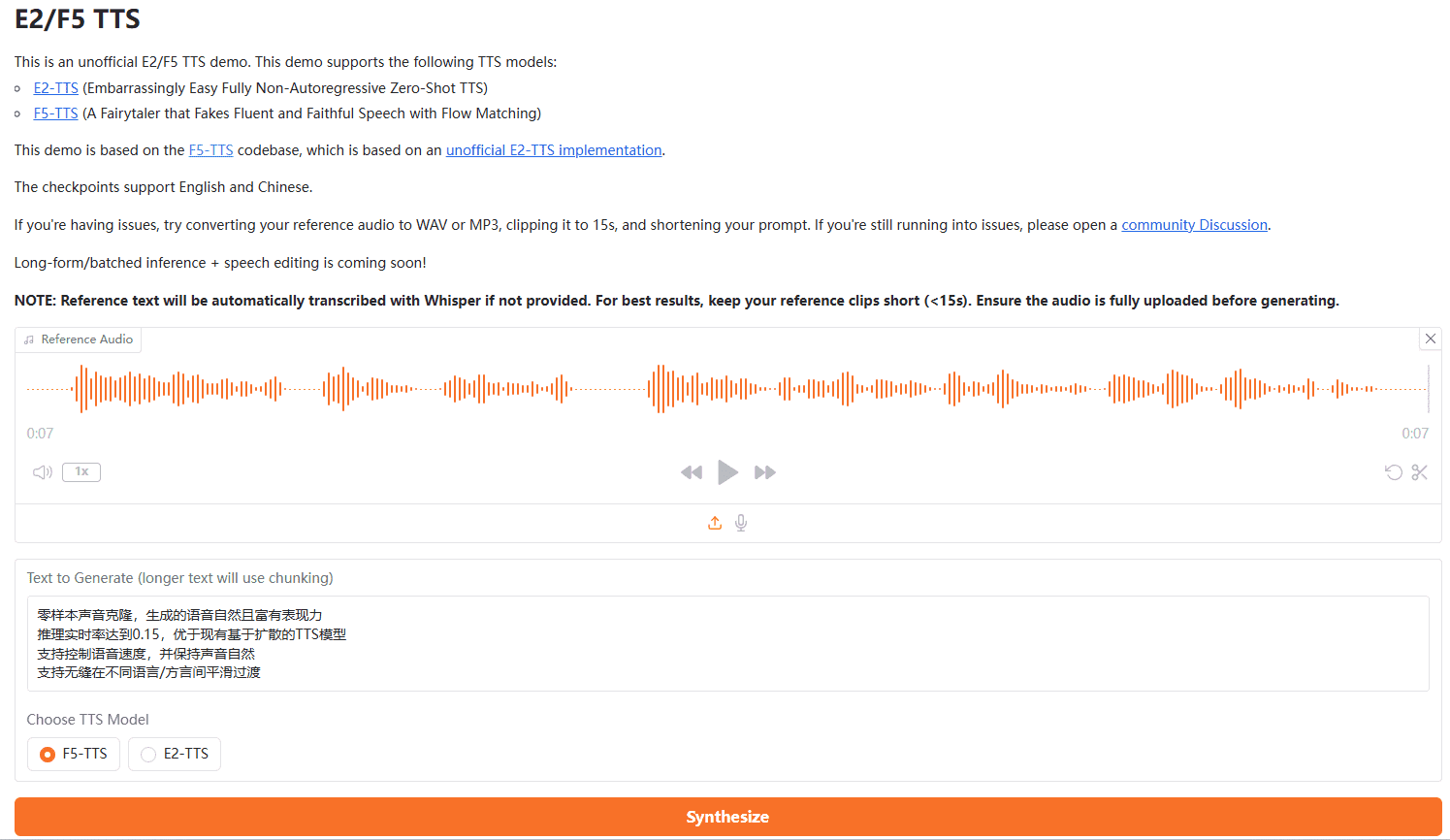

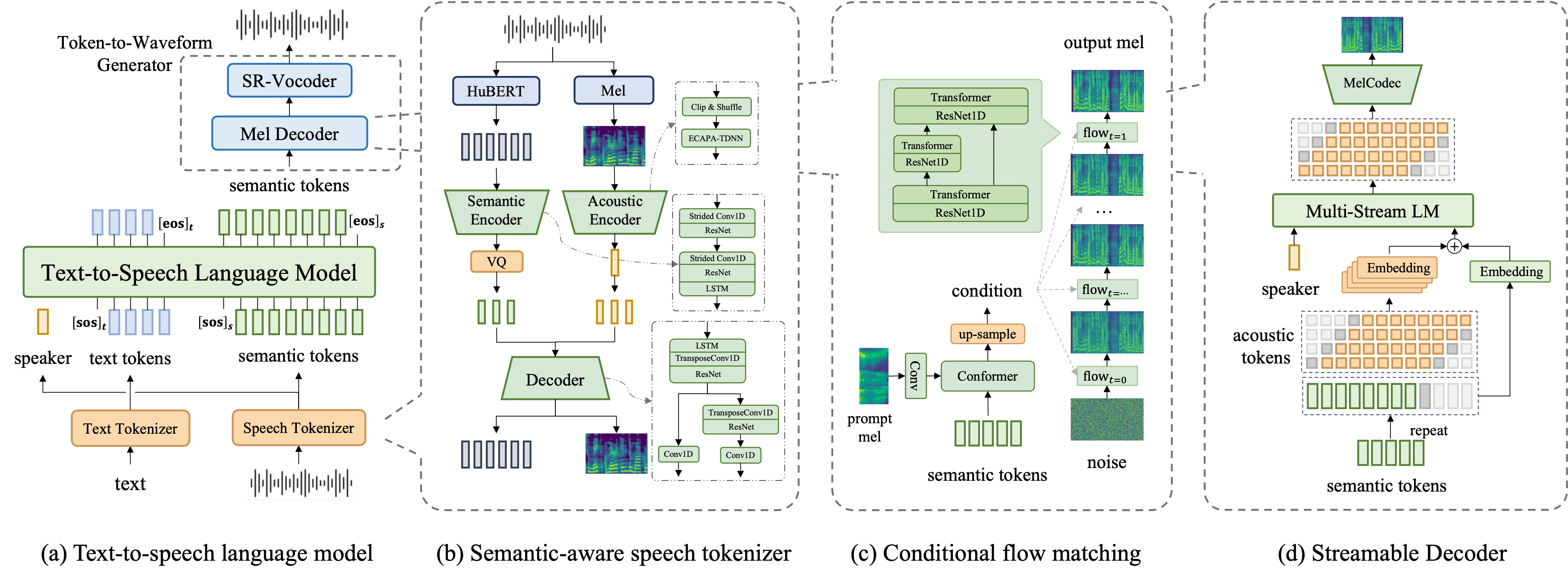

F5-TTS is a novel non-autoregressive text-to-speech (TTS) system based on a stream-matched diffusion converter (Diffusion). TransformerF5-TTS supports training on multi-language datasets with highly natural and efficient synthesis.) The system significantly improves synthesis quality and efficiency by using the ConvNeXt model to optimize the text representation for easier alignment with speech.F5-TTS supports training on multi-language datasets with highly natural and expressive zero-sample capabilities, seamless code switching, and speed-control efficiency. The project is open source and aims to promote community development.

Instead of the complex modules of traditional TTS systems, such as duration modeling, phoneme alignment, and text encoders, this model achieves speech generation by padding the text to the same length as the input speech and applying denoising methods.

One of the major innovations of the F5-TTS is Sway Sampling strategy, which significantly improves the efficiency in the inference phase and enables real-time processing capabilities. This feature is suitable for scenarios requiring fast speech synthesis, such as voice assistants and interactive speech systems.

F5-TTS support zero-sample speech cloningThe program is designed to generate a wide range of voices and accents without the need for large amounts of training data, and also provides a emotional control cap (a poem) Speed Adjustment Features. The system's strong multilingual support makes it particularly suitable for applications that require the generation of diverse audio content, such as audiobooks, e-learning modules and marketing materials.

Function List

- Text-to-Speech Conversion: Convert input text into natural and smooth speech.

- Zero-sample generation: Generate high-quality speech without pre-recorded samples.

- Emotional Reproduction: Support for generating speech with emotions.

- Speed control: the user can control the speed of speech generation.

- Multi-language support: supports speech generation in multiple languages.

- Open source code: complete code and model checkpoints are provided to facilitate community use and development.

Using Help

Installation process

conda create -n f5-tts python=3.10 conda activate f5-tts sudo apt update sudo apt install -y ffmpeg pip uninstall torch torchvision torchaudio transformers # 安装 PyTorch(包含 CUDA 支持) pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118 # 安装 transformers pip install transformers git clone https://github.com/SWivid/F5-TTS.git cd F5-TTS pip install -e . # Launch a Gradio app (web interface) f5-tts_infer-gradio # Specify the port/host f5-tts_infer-gradio --port 7860 --host 0.0.0.0 # Launch a share link f5-tts_infer-gradio --share

F5-TTS One-Click Installation Command

conda create -n f5-tts python=3.10 -y && \ conda activate f5-tts && \ sudo apt update && sudo apt install -y ffmpeg && \ pip uninstall -y torch torchvision torchaudio transformers && \ pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118 transformers && \ git clone https://github.com/SWivid/F5-TTS.git && \ cd F5-TTS && \ pip install -e . && \ f5-tts_infer-gradio --port 7860 --host 0.0.0.0

F5-TTS google Colab running

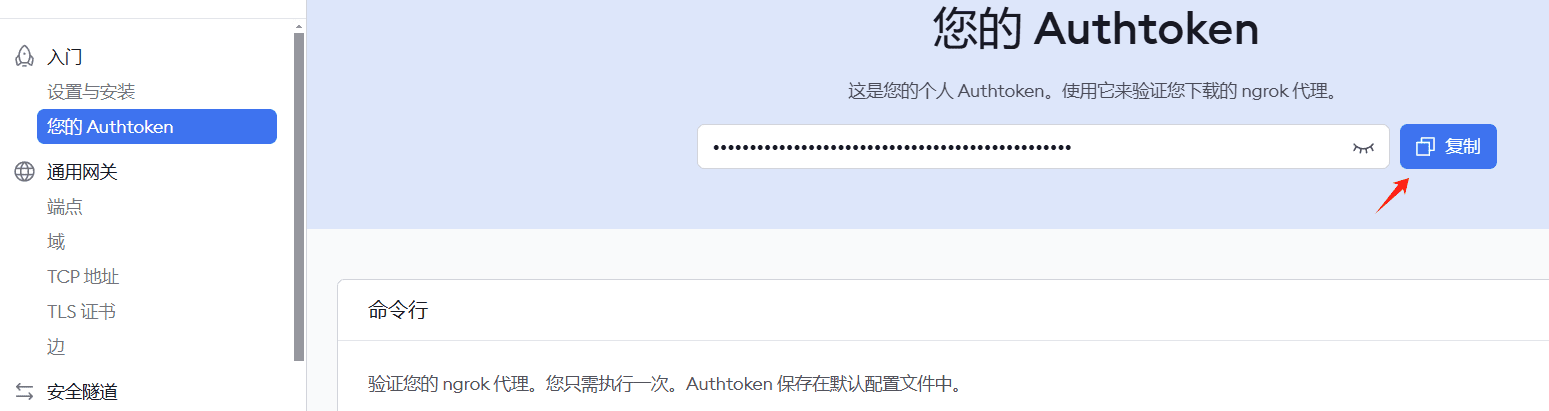

Note: ngrok registration is required to apply for a key to achieve intranet penetration.

!pip install pyngrok transformers gradio

# 导入所需库

import os

from pyngrok import ngrok

!apt-get update && apt-get install -y ffmpeg

!pip uninstall -y torch torchvision torchaudio transformers

!pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118 transformers

# 克隆并安装项目

!git clone https://github.com/SWivid/F5-TTS.git

%cd F5-TTS

!pip install -e .

!ngrok config add-authtoken 2hKI7tLqJVdnbgM8pxM4nyYP7kQ_3vL3RWtqXQUUdwY5JE4nj

# 配置 ngrok 和 gradio

import gradio as gr

from pyngrok import ngrok

import threading

import time

import socket

import requests

def is_port_in_use(port):

with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:

return s.connect_ex(('localhost', port)) == 0

def wait_for_server(port, timeout=60):

start_time = time.time()

while time.time() - start_time < timeout:

if is_port_in_use(port):

try:

response = requests.get(f'http://localhost:{port}')

if response.status_code == 200:

return True

except:

pass

time.sleep(2)

return False

# 确保 ngrok 没有在运行

ngrok.kill()

# 在新线程中启动 Gradio

def run_gradio():

import sys

import f5_tts.infer.infer_gradio

sys.argv = ['f5-tts_infer-gradio', '--port', '7860', '--host', '0.0.0.0']

f5_tts.infer.infer_gradio.main()

thread = threading.Thread(target=run_gradio)

thread.daemon = True

thread.start()

# 等待 Gradio 服务启动

print("等待 Gradio 服务启动...")

if wait_for_server(7860):

print("Gradio 服务已启动")

# 启动 ngrok

public_url = ngrok.connect(7860)

print(f"\n=== 访问信息 ===")

print(f"Ngrok URL: {public_url}")

print("===============\n")

else:

print("Gradio 服务启动超时")

# 保持程序运行

while True:

try:

time.sleep(1)

except KeyboardInterrupt:

ngrok.kill()

break

!f5-tts_infer-cli \

--model "F5-TTS" \

--ref_audio "/content/test.MP3" \

--ref_text "欢迎来到首席AI分享圈,微软发布了一款基于大模型的屏幕解析工具OmniParser.这款工具是专为增强用户界面自动化而设计的它." \

--gen_text "欢迎来到首席AI分享圈,今天将为大家详细演示另一款开源语音克隆项目。"

Usage Process

training model

- Configure acceleration settings, such as using multiple GPUs and FP16:

accelerate config - Initiate training:

accelerate launch test_train.py

inference

- Download pre-trained model checkpoints.

- Single Reasoning:

- Modify the configuration file to meet requirements, such as fixed duration and step size:

python test_infer_single.py

- Modify the configuration file to meet requirements, such as fixed duration and step size:

- Batch reasoning:

- Prepare the test dataset and update the path:

bash test_infer_batch.sh

- Prepare the test dataset and update the path:

Detailed Operation Procedure

- Text-to-speech conversion::

- After entering text, the system will automatically convert it to speech, and users can choose different speech styles and emotions.

- Zero sample generation::

- The user does not need to provide any pre-recorded samples and the system generates high quality speech based on the input text.

- emotional reproduction::

- Users can select different emotion labels and the system will generate a voice with the corresponding emotion.

- speed control::

- Users can control the speed of speech generation by adjusting the parameters to meet the needs of different scenarios.

- Multi-language support::

- The system supports speech generation in multiple languages, and users can choose different languages as needed.

F5 One-Click Installer

Quark: https://pan.quark.cn/s/3a7054a379ce

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...