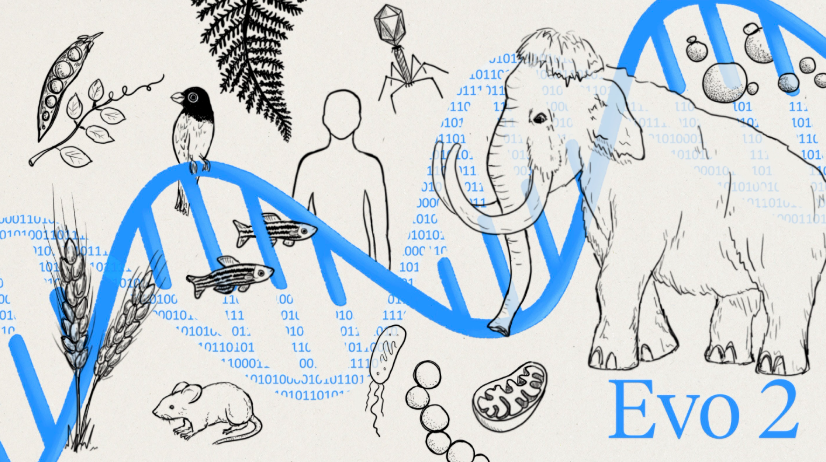

Evo 2: An Open-Source BioAI Tool for Genome Modeling and Design

General Introduction

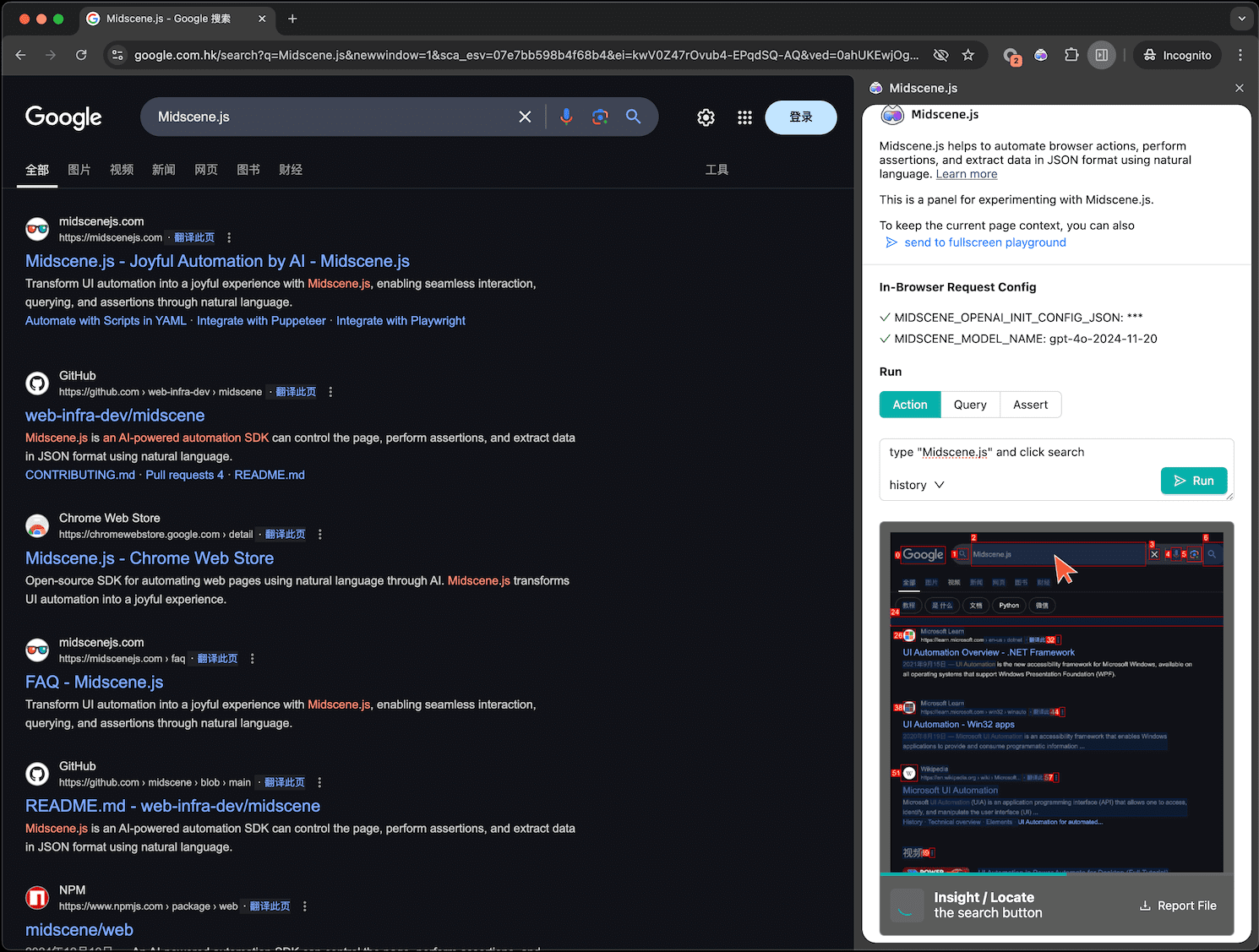

Evo 2 is an open-source project for genome modeling and design. It was developed by the Arc Institute, a non-profit research organization based in Palo Alto, California, and launched in collaboration with partners such as NVIDIA. The project builds biological foundation models that use deep learning to process DNA, RNA, and protein sequences for predictive and generative tasks in the life sciences. Evo 2 is trained on a diverse genomic dataset of more than 9 trillion nucleotides, has up to 40 billion parameters, and supports context lengths of up to 1 million bases. Its code, training data, and model weights are fully open source, and the project is hosted on GitHub with the aim of accelerating bioengineering and medical research. Researchers and developers alike can use it to analyze genomes and design new biological sequences.

Feature List

- Genome modeling across all domains of life: Supports prediction and design on the genomes of bacteria, archaea, and eukaryotes.

- Long-sequence handling: Processes DNA sequences of up to 1 million bases for analysis tasks that need very long context.

- DNA generation and optimization: Generates new DNA sequences with coding-region annotations from input sequences or species prompts.

- Zero-shot variant effect prediction: Predicts the biological impact of a gene variant without additional training, for example the effect of BRCA1 variants.

- Open-source datasets and models: Provides pre-trained models and the OpenGenome2 dataset to support further development and research.

- Multi-GPU parallel computing: Automatically distributes work across multiple GPUs through the Vortex framework to improve large-scale computing efficiency.

- Integration with NVIDIA BioNeMo: Connects to NVIDIA's biocomputing platform to broaden the range of applications.

- Visualization and interpretation tools: Combined with Goodfire's interpretability visualizer, reveals the biological features and patterns the model has learned to recognize.

Usage Guide

Installation process

To use Evo 2 locally, certain computing resources and environment configurations are required. Below are the detailed installation steps:

1. Environment preparation

- Operating system: Linux (e.g. Ubuntu) or macOS is recommended; Windows users need to install WSL2.

- Hardware requirements: At least one NVIDIA GPU with a minimum of 16 GB of video memory (e.g. A100 or RTX 3090). Multiple GPUs are recommended for the 40B model.

- Software dependencies: Make sure Git, Python 3.8+, PyTorch (with CUDA support), and pip are installed.
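The prerequisites listed above can be checked from Python before installing anything. The following is a minimal sketch, not part of Evo 2: the helper name `check_environment` is hypothetical, the Python version default is taken from the list above, and the GPU details are only collected if PyTorch is already importable.

```python
import importlib.util
import shutil
import sys


def check_environment(min_python=(3, 8)):
    """Return a dict describing whether the basic prerequisites are met.

    Hypothetical helper for illustration; it is not part of the evo2 package.
    """
    report = {
        "python_ok": sys.version_info[:2] >= min_python,
        "git_found": shutil.which("git") is not None,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
        "gpus": [],  # list of (device name, total memory in GiB)
    }
    if report["torch_installed"]:
        try:
            import torch  # imported lazily so the check also runs without PyTorch

            report["cuda_available"] = torch.cuda.is_available()
            if report["cuda_available"]:
                for i in range(torch.cuda.device_count()):
                    props = torch.cuda.get_device_properties(i)
                    report["gpus"].append((props.name, round(props.total_memory / 1024**3, 1)))
        except Exception:
            # A broken PyTorch install should not crash the check itself.
            report["torch_installed"] = False
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Note that the memory a driver reports is usually slightly below the nominal card size, so compare it against the 16 GB guideline with some tolerance.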

2. Cloning the code repository

Open a terminal and run the following command to get the Evo 2 source code:

git clone --recurse-submodules git@github.com:ArcInstitute/evo2.git

cd evo2

Note: The --recurse-submodules flag makes sure that all submodules are downloaded as well.

3. Installation of dependencies

Run the following in the project root directory:

pip install .

If you encounter problems, try installing from Vortex (see the GitHub README for details). Once the installation is complete, run the tests to verify:

python -m evo2.test

If the output reports no errors, the installation was successful.

4. Downloading pre-trained models

Evo 2 is available in several model versions (e.g., 1B, 7B, 40B parameters) and can be downloaded from Hugging Face or GitHub Releases. Example:

wget https://huggingface.co/arcinstitute/evo2_7b/resolve/main/evo2_7b_base.pt

Place the model files in a local directory for subsequent loading.
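The same download can be scripted from Python. This is a sketch under assumptions: the repository and file names are copied from the wget command above and have not been verified, the helper names are hypothetical, and interrupted downloads are not resumed.

```python
import os
import urllib.request


def build_resolve_url(repo_id, filename, revision="main"):
    """Build a direct-download URL following the pattern of the wget example."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def download_checkpoint(repo_id, filename, target_dir="models"):
    """Download a checkpoint into target_dir unless the file is already there."""
    os.makedirs(target_dir, exist_ok=True)
    target_path = os.path.join(target_dir, filename)
    if not os.path.exists(target_path):
        urllib.request.urlretrieve(build_resolve_url(repo_id, filename), target_path)
    return target_path


if __name__ == "__main__":
    # Only prints the URL; call download_checkpoint(...) to fetch the large file.
    print(build_resolve_url("arcinstitute/evo2_7b", "evo2_7b_base.pt"))
```

Keeping the URL builder separate from the download step makes it easy to confirm the address before committing to a multi-gigabyte transfer.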

How to use

Once installed, the core functions of Evo 2 can be called from Python scripts. The main functions work as follows:

Function 1: Generating DNA sequences

Evo 2 can generate a continuation sequence from an input DNA fragment. The procedure is as follows:

- Load the model:

      from evo2 import Evo2

      model = Evo2('evo2_7b')  # use the 7B-parameter model

- Enter a prompt and generate:

      prompt = ["ACGT"]  # initial DNA sequence
      output = model.generate(prompt_seqs=prompt, n_tokens=400, temperature=1.0, top_k=4)
      print(output.sequences[0])  # print the generated 400-nucleotide sequence

- Interpreting the results: The generated sequences can be used for downstream biological analysis. The temperature parameter controls randomness, and top_k limits sampling to the k most likely tokens.
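To make the roles of temperature and top_k concrete, here is a small, self-contained sketch of temperature-scaled top-k sampling over a toy set of scores. It illustrates the general technique only and is not Evo 2's internal sampling code; the function name `sample_top_k` and the example logits are made up.

```python
import math
import random

NUCLEOTIDES = ["A", "C", "G", "T"]


def sample_top_k(logits, temperature=1.0, top_k=4, rng=random):
    """Sample an index from logits using temperature scaling and top-k filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Keep only the k highest-scoring candidates.
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Temperature divides the logits: values below 1 sharpen the distribution,
    # values above 1 flatten it.
    scaled = [logits[i] / temperature for i in ranked]
    # Softmax weights, with the maximum subtracted for numerical stability.
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(ranked, weights=weights, k=1)[0]


if __name__ == "__main__":
    toy_logits = [2.0, 0.5, 1.0, -1.0]  # made-up scores for A, C, G, T
    rng = random.Random(0)
    draws = [NUCLEOTIDES[sample_top_k(toy_logits, 0.7, 2, rng)] for _ in range(10)]
    print("".join(draws))
```

With top_k=2 in this toy example, only the two highest-scoring nucleotides (A and G) can ever be drawn; lowering the temperature further makes A increasingly dominant.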

Function 2: Zero-shot variant effect prediction

Using the BRCA1 gene as an example, the biological impact of a variant can be predicted as follows:

- Prepare the data: Put the reference and variant sequences into lists.

- Run the prediction:

      ref_seqs = ["ATCG..."]  # reference sequence
      var_seqs = ["ATGG..."]  # variant sequence
      ref_scores = model.score_sequences(ref_seqs)
      var_scores = model.score_sequences(var_seqs)
      print(f"Reference likelihood: {ref_scores}, Variant likelihood: {var_scores}")

- Analysis: Compare the two scores to assess the variant's potential impact on function.
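The comparison step can be sketched as a simple difference between variant and reference scores. This assumes that `score_sequences` returns one likelihood-style number per sequence, where higher means more plausible to the model; the helper names and the numbers below are invented for illustration.

```python
def delta_scores(ref_scores, var_scores):
    """Return variant-minus-reference score differences, pair by pair."""
    if len(ref_scores) != len(var_scores):
        raise ValueError("reference and variant lists must have the same length")
    return [var - ref for ref, var in zip(ref_scores, var_scores)]


def rank_by_disruption(variant_ids, deltas):
    """Sort variants so the most negative delta (largest drop) comes first."""
    return sorted(zip(variant_ids, deltas), key=lambda pair: pair[1])


if __name__ == "__main__":
    # Made-up numbers standing in for model.score_sequences(...) output.
    ref = [-1.20, -1.20, -1.20]
    var = [-1.45, -1.21, -1.10]
    deltas = delta_scores(ref, var)
    for name, delta in rank_by_disruption(["v1", "v2", "v3"], deltas):
        print(f"{name}: delta = {delta:+.2f}")
```

Under that assumption, a strongly negative delta means the model finds the variant sequence much less likely than the reference, which is a hint (not proof) of a functional effect.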

Function 3: Long Sequence Processing

For very long sequences, Evo 2 supports chunked loading and computation:

- Load the large model:

      model = Evo2('evo2_40b')  # requires multiple GPUs

- Process a long sequence:

      long_seq = "ATCG" * 250000  # simulate a 1-million-base sequence
      output = model.generate([long_seq], n_tokens=1000)
      print(output.sequences[0])

- Caveat: Forward passes over long sequences may currently be slow. Consider a more capable hardware configuration or use teacher prompting.
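As an illustration of what chunked processing means in general, the sketch below splits a long sequence into overlapping windows that could be handled one at a time. It is not how Evo 2 or Vortex split sequences internally; the function name and the window and overlap sizes are arbitrary assumptions.

```python
def chunk_sequence(seq, window=8192, overlap=512):
    """Yield (start, chunk) pairs covering seq with overlapping windows."""
    if window <= 0 or not 0 <= overlap < window:
        raise ValueError("need window > 0 and 0 <= overlap < window")
    step = window - overlap
    start = 0
    while True:
        yield start, seq[start:start + window]
        if start + window >= len(seq):
            break  # the current window already reaches the end of the sequence
        start += step


if __name__ == "__main__":
    long_seq = "ATCG" * 250_000  # 1,000,000 bases
    chunks = list(chunk_sequence(long_seq))
    print(len(long_seq), "bases in", len(chunks), "chunks")
```

Processing chunks independently discards any context beyond the window, so this kind of splitting trades long-range information for lower memory use.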

Function 4: Datasets and further development

- Getting the dataset: Download the OpenGenome2 dataset (in FASTA or JSONL format) from Hugging Face.

- Custom training: Use the Savanna framework to modify the model architecture or fine-tune parameters for specific research needs.
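Once a FASTA file from the dataset is on disk, its records can be read with a few lines of standard-library Python. The reader below is a generic FASTA sketch and assumes nothing about OpenGenome2 beyond the format itself; the function name and the in-memory demo record are made up.

```python
import io


def read_fasta(handle):
    """Yield (header, sequence) pairs from an open FASTA text handle."""
    header, parts = None, []
    for line in handle:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        if line.startswith(">"):
            if header is not None:
                yield header, "".join(parts)
            header, parts = line[1:], []
        elif header is not None:
            parts.append(line.upper())  # normalize soft-masked lowercase bases
    if header is not None:
        yield header, "".join(parts)


if __name__ == "__main__":
    demo = io.StringIO(">seq1 toy record\nACGT\nacgt\n>seq2\nTTGA\n")
    for name, seq in read_fasta(demo):
        print(name, len(seq))
```

Because the function is a generator, it streams one record at a time and does not need to hold a whole genome file in memory.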

Tips and precautions

- Multi-GPU configuration: If you are using the 40B model, make sure Vortex recognizes all GPUs correctly; use nvidia-smi to check resource allocation.

- Performance optimization: For long-sequence processing, reduce the workload by shortening the input or requesting fewer tokens (n_tokens). The temperature value only changes sampling randomness and does not reduce the computational burden.

- Community support: Questions can be posted on the GitHub Issues board, where the Arc Institute team and the community can help.
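The nvidia-smi check mentioned in the tips can also be run from a script. The sketch below calls nvidia-smi with its CSV query options and parses the result; the helper names are hypothetical, and the function returns an empty list when the tool is missing or fails.

```python
import subprocess


def parse_gpu_csv(text):
    """Parse 'index, name, memory.total' CSV rows into dictionaries."""
    gpus = []
    for row in text.strip().splitlines():
        # Split off the first and last fields so a comma in the name is harmless.
        index, rest = row.split(",", 1)
        name, memory = rest.rsplit(",", 1)
        gpus.append({"index": int(index), "name": name.strip(), "memory": memory.strip()})
    return gpus


def list_gpus():
    """Query nvidia-smi; return an empty list if it is missing or fails."""
    cmd = ["nvidia-smi", "--query-gpu=index,name,memory.total", "--format=csv,noheader"]
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    except (FileNotFoundError, subprocess.CalledProcessError):
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = list_gpus()
    print(f"{len(gpus)} GPU(s) visible")
    for gpu in gpus:
        print(gpu)
```

If fewer GPUs appear than expected, check the CUDA_VISIBLE_DEVICES environment variable before loading the 40B model.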

With these steps you can get started with Evo 2 quickly, whether you are generating DNA sequences or analyzing genetic variants.

© Copyright notes

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
