DualPipe: a bi-directional pipelined parallel algorithm to improve the efficiency of large-scale AI model training (DeepSeek Open Source Week Day 4)

General Introduction

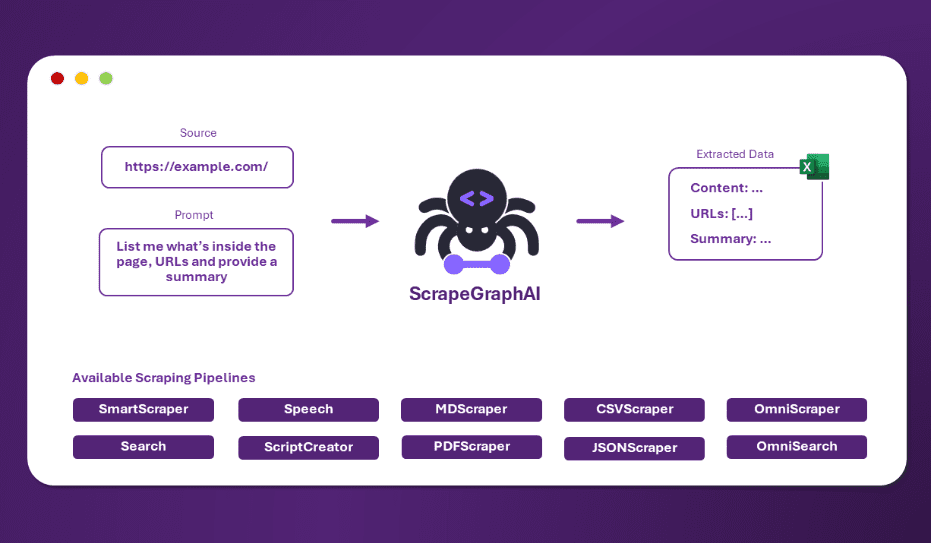

DualPipe is an open-source technology developed by the DeepSeek-AI team to improve the efficiency of large-scale AI model training. It is a bi-directional pipeline-parallel algorithm, used in DeepSeek-V3 and R1 training to fully overlap computation and communication. This reduces "bubbles" (idle waiting time) in the pipeline and thereby accelerates training. Developed by Jiashi Li, Chengqi Deng, and Wenfeng Liang, the project has been open-sourced on GitHub and has attracted the attention of the AI technology community. Its core advantage is that optimized scheduling lets model training run efficiently on multi-node GPU clusters, which makes it suitable for models with hundreds of billions of parameters (DeepSeek-V3 has 671B) and opens new possibilities for AI researchers and developers.

Function List

- Bidirectional pipelined scheduling: Supports simultaneous input of micro batches from both ends of the pipeline, enabling a high degree of overlap between computation and communication.

- Pipeline bubble reduction: Reduces idle waiting time during training through algorithmic optimization.

- Large-scale model training support: Can be adapted to very large models such as DeepSeek-V3 and copes with the training requirements of models with hundreds of billions of parameters.

- Computation and communication overlap: Parallelize computation and communication tasks in forward and backward propagation to improve GPU utilization.

- Open Source Support: A complete Python implementation is provided, which developers are free to download, modify, and integrate.
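To make the bubble reduction listed above more concrete, the sketch below evaluates the bubble formulas given in the comparison table of the DeepSeek-V3 technical report for 1F1B, ZB1P, and DualPipe. Here `F` is the time of a forward chunk, `B` a full backward chunk, `W` a "backward for weights" chunk, and `F&B` two mutually overlapped forward and backward chunks. The timing values are invented for illustration, and `F&B = F + B` is a pessimistic assumption, not a measurement.

```python
# Pipeline bubble estimates using the formulas from the comparison table
# in the DeepSeek-V3 technical report. All timings below are illustrative
# assumptions in arbitrary time units, not measured values.

def bubble_1f1b(pp, f, b):
    """1F1B bubble: (PP - 1) * (F + B)."""
    return (pp - 1) * (f + b)


def bubble_zb1p(pp, f, b, w):
    """ZB1P bubble: (PP - 1) * (F + B - 2W)."""
    return (pp - 1) * (f + b - 2 * w)


def bubble_dualpipe(pp, f_and_b, b, w):
    """DualPipe bubble: (PP / 2 - 1) * (F&B + B - 3W)."""
    return (pp / 2 - 1) * (f_and_b + b - 3 * w)


PP = 8          # number of pipeline ranks
F = 1.0         # forward chunk time (assumed)
B = 2.0         # full backward chunk time (assumed)
W = 1.0         # backward-for-weights chunk time (assumed)
F_AND_B = 3.0   # overlapped forward+backward chunk, assumed equal to F + B

results = {
    "1F1B": bubble_1f1b(PP, F, B),
    "ZB1P": bubble_zb1p(PP, F, B, W),
    "DualPipe": bubble_dualpipe(PP, F_AND_B, B, W),
}

for name, bubble in results.items():
    print(f"{name}: bubble = {bubble}")
```

With these assumed timings the bubble shrinks from 21.0 (1F1B) to 7.0 (ZB1P) to 6.0 (DualPipe). The same report notes the trade-off: DualPipe keeps two copies of the model parameters.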

Usage Guide

DualPipe is an advanced tool for developers, and as a GitHub open source project, it doesn't have a standalone graphical interface, but is available as a code base. Below is a detailed usage guide to help developers get started quickly and integrate it into their AI training projects.

Installation process

Installing DualPipe requires a basic Python and deep learning environment. The steps are:

- Environment preparation

- Make sure that Python 3.8 or later is installed on your system.

- Install Git for downloading code from GitHub.

- It is recommended to use a virtual environment to avoid dependency conflicts, with the following commands:

    python -m venv dualpipe_env
    source dualpipe_env/bin/activate   # Linux/Mac
    dualpipe_env\Scripts\activate      # Windows

- Clone the code repository

Download the DualPipe repository locally by entering the following commands in the terminal:

    git clone https://github.com/deepseek-ai/DualPipe.git
    cd DualPipe

- Install dependencies

DualPipe relies on common deep learning libraries. The specific dependencies are not explicitly listed in the repository, but based on its functionality it is assumed to require a PyTorch environment. You can try the following command to install the base dependencies:

    pip install torch torchvision

If you encounter errors about missing libraries, follow the prompts to install them.

- Verify Installation

Since DualPipe is algorithmic code rather than a standalone application, there is no executable to run as a check. Instead, inspect the code files (e.g., dualpipe.py) to confirm that the download is complete.
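The installation steps above can be checked with a short script. This is a minimal sketch, assuming the repository was cloned into a folder named `DualPipe` in the current directory. The function name `check_environment` and the check names are made up for this example and are not part of DualPipe.

```python
import importlib.util
import shutil
import sys
from pathlib import Path


def check_environment(repo_dir="DualPipe", min_python=(3, 8)):
    """Return a dict mapping each check name to True or False.

    `repo_dir` is an assumed clone location; adjust it to your setup.
    """
    repo = Path(repo_dir)
    return {
        # The interpreter is at least the minimum version named in this guide.
        "python_version_ok": sys.version_info >= min_python,
        # The git executable can be found on PATH.
        "git_available": shutil.which("git") is not None,
        # PyTorch is importable (checked without actually importing it).
        "torch_installed": importlib.util.find_spec("torch") is not None,
        # The clone directory exists and contains Python source files.
        "repo_cloned": repo.is_dir(),
        "has_python_sources": repo.is_dir() and any(repo.rglob("*.py")),
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'OK' if ok else 'MISSING'}")
```

Any line reported as `MISSING` points back to the corresponding installation step.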

Usage

At the heart of DualPipe is a scheduling algorithm that developers need to integrate into existing model training frameworks (such as PyTorch or DeepSpeed). Here's how it works:

1. Understanding code structure

- Browse the DualPipe folder; the main code may be located in dualpipe.py or a similar file.

- Read the code comments and the DeepSeek-V3 technical report (linked in the GitHub repository description) to understand the algorithm logic. The report includes a DualPipe scheduling example (8 pipeline ranks and 20 micro-batches).

2. Integration into the training framework

- Prepare the model and data: This assumes you already have a PyTorch-based model and dataset.

- Modify the training loop: Embed DualPipe's scheduling logic into the training code. Here is a simplified example:

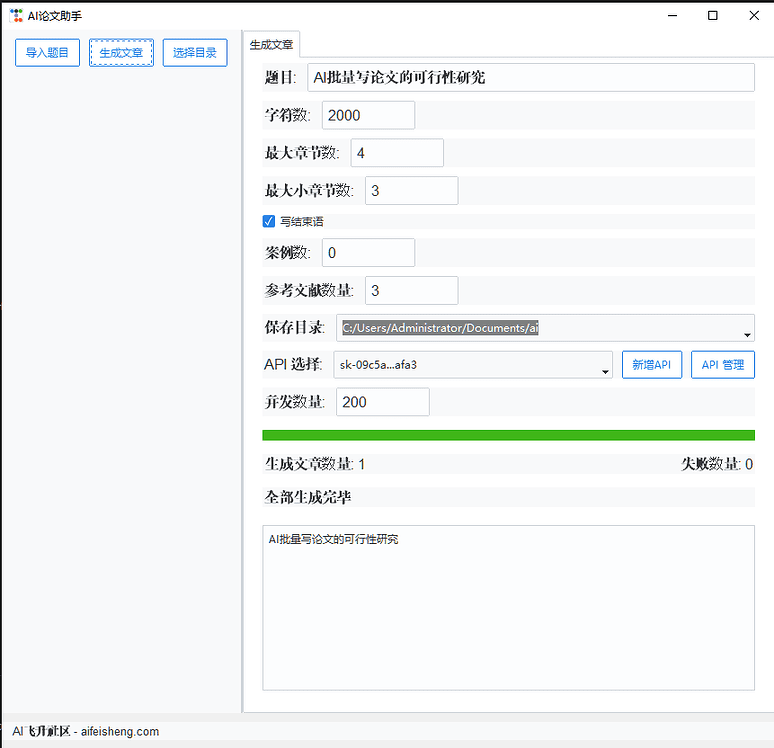

    # Pseudocode example: DualPipeScheduler, MyModel, MyDataLoader and
    # num_epochs are placeholders, not confirmed names from the repository.
    from dualpipe import DualPipeScheduler  # assumed module name
    import torch

    # Initialize the model and data
    model = MyModel().cuda()
    optimizer = torch.optim.Adam(model.parameters())
    data_loader = MyDataLoader()

    # Initialize the DualPipe scheduler
    scheduler = DualPipeScheduler(num_ranks=8, num_micro_batches=20)

    # Training loop
    for epoch in range(num_epochs):
        scheduler.schedule(model, data_loader, optimizer)  # invoke DualPipe scheduling

- The implementation needs to be adapted to the actual code, and it is recommended to refer to the examples in the GitHub repository (if any).

3. Configuring the hardware environment

- DualPipe is designed for multi-node GPU clusters and is recommended for use with at least 8 GPUs (e.g. NVIDIA H800).

- Ensure that the cluster supports InfiniBand or NVLink to take full advantage of communication optimizations.

4. Running and debugging

- Run the training script in the terminal:

    python train_with_dualpipe.py

- Observe the log output and check whether computation and communication overlap successfully. If there is a performance bottleneck, adjust the number of micro-batches or the number of pipeline stages.

Key Feature Operations

Bidirectional pipelined scheduling

- Set num_ranks (number of pipeline stages) and num_micro_batches (number of micro-batches) in the configuration file or code.

- Example configuration: 8 stages and 20 micro-batches; refer to the scheduling diagram in the technical report.
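The bidirectional idea behind `num_ranks` and `num_micro_batches` can be sketched in a few lines of plain Python. This is an illustration of stage placement and micro-batch routing only, not the DualPipe scheduler itself. It assumes that rank `r` holds stage `r` for one direction and stage `num_ranks - 1 - r` for the other (consistent with the technical report's note that DualPipe keeps two copies of the model parameters), and that half of the micro-batches enter from each end. All function names are hypothetical.

```python
def stages_on_rank(rank, num_ranks):
    """Stages held by `rank` under the assumed placement: one per direction."""
    return (rank, num_ranks - 1 - rank)


def split_micro_batches(num_micro_batches):
    """Split micro-batch ids into two halves, one per pipeline direction.

    An even count is required here; the technical report states that
    DualPipe needs the number of micro-batches to be divisible by 2.
    """
    if num_micro_batches % 2 != 0:
        raise ValueError("num_micro_batches must be even")
    half = num_micro_batches // 2
    ids = list(range(num_micro_batches))
    return ids[:half], ids[half:]


def forward_route(direction, num_ranks):
    """Order in which ranks are visited during the forward pass.

    Direction 0 enters at rank 0; direction 1 enters at the last rank.
    """
    ranks = list(range(num_ranks))
    return ranks if direction == 0 else ranks[::-1]


if __name__ == "__main__":
    num_ranks, num_micro_batches = 8, 20  # example configuration from the text
    for rank in range(num_ranks):
        print(f"rank {rank} holds stages {stages_on_rank(rank, num_ranks)}")
    first_half, second_half = split_micro_batches(num_micro_batches)
    print("direction 0:", first_half, "route", forward_route(0, num_ranks))
    print("direction 1:", second_half, "route", forward_route(1, num_ranks))
```

With 8 ranks and 20 micro-batches, each direction carries 10 micro-batches, and every rank holds one stage from each direction.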

Computation-communication overlap

- No manual intervention is needed: DualPipe automatically overlaps forward computation (e.g., F) and backward computation (e.g., B) with the associated communication tasks.

- Check the timestamps in the logs to confirm that communication time is hidden behind computation.
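One way to turn the timestamp check into a number is to compute what fraction of communication time falls inside compute intervals. The sketch below assumes you have already extracted `(start, end)` pairs for compute and communication events from your own logs or profiler. The log format, the function names, and the sample values are all hypothetical.

```python
def merge_intervals(intervals):
    """Merge overlapping (start, end) intervals into a sorted, disjoint list."""
    merged = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            # Extend the previous interval when the new one touches or overlaps it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def hidden_comm_fraction(compute, comm):
    """Fraction of total communication time that overlaps with compute."""
    compute = merge_intervals(compute)
    comm = merge_intervals(comm)
    total = sum(end - start for start, end in comm)
    if total == 0:
        return 1.0  # nothing to hide
    hidden = 0.0
    for c_start, c_end in comm:
        for k_start, k_end in compute:
            # Length of the intersection of the two intervals, if any.
            hidden += max(0.0, min(c_end, k_end) - max(c_start, k_start))
    return hidden / total


if __name__ == "__main__":
    # Illustrative timestamps in milliseconds (assumed, not real log data).
    compute_events = [(0.0, 4.0), (5.0, 9.0)]
    comm_events = [(1.0, 3.0), (4.0, 6.0)]
    print(hidden_comm_fraction(compute_events, comm_events))
```

For the sample data, 3 ms of the 4 ms of communication coincide with compute, so the function returns 0.75. A value close to 1.0 suggests the overlap is working as intended.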

Pipeline bubble reduction

- Find the optimal configuration by adjusting the number of micro-batches (e.g., from 20 to 16) and observing the change in training time.
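The tuning loop described above can be automated with a small timing harness. This is a generic sketch: `run_step` stands for whatever function executes one training step in your own code for a given number of micro-batches, and `fake_step` is only a stand-in so the example runs without a GPU. Neither name comes from DualPipe.

```python
import time


def time_config(run_step, num_micro_batches, repeats=3):
    """Average wall-clock seconds of `run_step(num_micro_batches)`."""
    start = time.perf_counter()
    for _ in range(repeats):
        run_step(num_micro_batches)
    return (time.perf_counter() - start) / repeats


def sweep(run_step, candidates, repeats=3):
    """Time every candidate and return (fastest_candidate, timings)."""
    timings = {m: time_config(run_step, m, repeats) for m in candidates}
    best = min(timings, key=timings.get)
    return best, timings


def fake_step(num_micro_batches):
    """Stand-in for a real training step; pretends 16 is the sweet spot."""
    time.sleep(0.005 * abs(num_micro_batches - 16) + 0.001)


if __name__ == "__main__":
    best, timings = sweep(fake_step, candidates=[12, 16, 20])
    for m, seconds in timings.items():
        print(f"num_micro_batches={m}: {seconds * 1000:.1f} ms/step")
    print("fastest:", best)
```

When timing real GPU work with PyTorch, call `torch.cuda.synchronize()` at the end of each step so that asynchronous kernels are included in the measurement, and discard the first few warm-up iterations.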

Caveats

- Hardware requirements: A single GPU cannot fully exploit DualPipe's advantages, so a multi-GPU environment is recommended.

- Documentation: The GitHub page currently offers limited information, so it is recommended to study it together with the DeepSeek-V3 technical report (arXiv: 2412.19437).

- Community support: Ask questions on the GitHub Issues page, or refer to related discussions on the X platform (e.g., @deepseek_ai's posts).

By following these steps, developers can integrate DualPipe into their projects and significantly improve the efficiency of large-scale model training.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
