DragAnything: Controllable motion video generation for entities in images

General Introduction

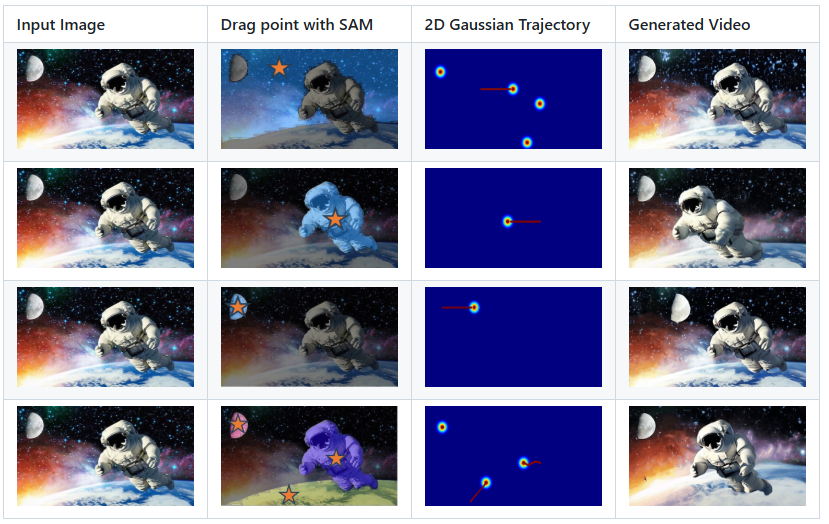

DragAnything is an open-source project that achieves motion control of arbitrary objects through entity representation. It was developed by the Showlab team and accepted at ECCV 2024. The interaction is simple: the user only needs to draw a trajectory line to control how an object moves. The project supports simultaneous motion control of multiple objects, including the foreground, the background and the camera. According to the authors, DragAnything outperforms existing state-of-the-art methods on several metrics, including FVD, FID and user studies, with the clearest gains in object motion control.

Function List

- Entity representation: Uses an open-domain embedding to represent any object.

- Trajectory control: Controls object motion through user-drawn trajectory lines.

- Multi-object control: Supports simultaneous motion control of the foreground, the background and the camera.

- Interactive demo: Provides an interactive demo built with Gradio.

- Dataset support: Supports the VIPSeg and YouTube-VOS datasets.

- High performance: Strong results on FVD, FID and user studies.
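The entity-representation idea can be illustrated with a toy sketch. Our reading of the DragAnything paper is that an object's embedding is built by pooling a diffusion model's feature map inside that object's mask. The sketch below shows only that pooling step on plain Python lists, assuming a (C, H, W) feature map and a boolean mask of the same height and width. The name `entity_embedding` is hypothetical and the code is not taken from the project repository.

```python
from typing import List

# A feature map channel is an H x W grid; a feature map is a list of C channels.
Grid = List[List[float]]


def entity_embedding(features: List[Grid], mask: List[List[bool]]) -> List[float]:
    """Average each channel of a (C, H, W) feature map over the pixels where mask is True.

    Hypothetical helper for illustration only; not taken from the DragAnything code.
    """
    # Collect the (row, col) coordinates covered by the object's mask.
    coords = [(y, x) for y, row in enumerate(mask) for x, inside in enumerate(row) if inside]
    if not coords:
        raise ValueError("mask selects no pixels")
    # One value per channel: the mean feature value inside the mask.
    return [sum(channel[y][x] for y, x in coords) / len(coords) for channel in features]


if __name__ == "__main__":
    # Toy 2-channel, 3x3 feature map: channel 0 holds 0..8, channel 1 is constant 10.
    feats = [
        [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]],
        [[10.0] * 3 for _ in range(3)],
    ]
    # The "object" covers the first two pixels of the top row.
    obj_mask = [[True, True, False], [False, False, False], [False, False, False]]
    print(entity_embedding(feats, obj_mask))  # → [0.5, 10.0]
```

Because the embedding is a single vector per object, it does not depend on the object's category. This is the property that lets one interface address foreground objects and background regions alike.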

How to Use

Installation process

- Clone the project code:

git clone https://github.com/showlab/DragAnything.git

cd DragAnything

- Create and activate a Conda environment:

conda create -n DragAnything python=3.8

conda activate DragAnything

- Install the dependencies:

pip install -r requirements.txt

- Prepare the dataset:

- Download the VIPSeg and YouTube-VOS datasets to ./dataCatalog.

Usage

- Run an interactive demo:

python gradio_run.py

Open your browser and visit the local address provided to get started with the interactive demo.

- Control object motion:

- Draw a trajectory line on the input image and select the object you want to control.

- Run the script to generate the video:

python demo.py --input_image <path_to_image> --trajectory <path_to_trajectory>

- The generated video will be saved in the specified directory.

- Customize the motion trajectory:

- Use the Co-Track tool to process your own motion track annotation files.

- Place the processed files in the specified directory and run the script to generate the video.
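A drawn trajectory line, or a track exported from a point-tracking tool, has to become one position per generated frame before it can drive the video. The sketch below shows one straightforward way to do this. It samples points at equal arc-length intervals along the polyline, so the object moves at constant speed. The function name, the (x, y) pixel convention and the frame count are assumptions for illustration. DragAnything's own scripts and trajectory file format may differ.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in image pixel coordinates (assumed convention)


def resample_trajectory(points: List[Point], num_frames: int) -> List[Point]:
    """Return num_frames points spaced evenly by arc length along a drawn polyline.

    Hypothetical helper for illustration; not the DragAnything trajectory format.
    """
    if num_frames < 1 or not points:
        raise ValueError("need at least one point and one frame")
    # Cumulative length of the polyline up to each vertex.
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]
    # A single click, a single frame, or a zero-length stroke: hold the start point.
    if len(points) == 1 or num_frames == 1 or total == 0.0:
        return [points[0]] * num_frames

    out: List[Point] = []
    seg = 0  # index of the current segment points[seg] -> points[seg + 1]
    for i in range(num_frames):
        target = total * i / (num_frames - 1)  # arc length reached at frame i
        # Move forward to the segment that contains the target arc length.
        while seg < len(points) - 2 and cum[seg + 1] < target:
            seg += 1
        seg_len = cum[seg + 1] - cum[seg]
        t = 0.0 if seg_len == 0.0 else (target - cum[seg]) / seg_len
        t = min(max(t, 0.0), 1.0)  # guard against floating-point overshoot
        (x0, y0), (x1, y1) = points[seg], points[seg + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


if __name__ == "__main__":
    # An L-shaped stroke of total length 20, sampled for 5 frames.
    stroke = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
    print(resample_trajectory(stroke, 5))
    # → [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0), (10.0, 5.0), (10.0, 10.0)]
```

For multi-object control, the same function can be applied to each object's stroke independently, so every object gets its own per-frame path.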

How the main features work

- Entity representation: Represents any object through an open-domain embedding, so users do not need to label objects manually.

- Trajectory control: The user controls an object's motion simply by drawing a trajectory line on the input image.

- Multi-object control: Supports controlling the motion of several objects at once, including the foreground, the background and the camera.

- Interactive demo: Through the Gradio interface, users can try motion control interactively and view the generated videos.

- High performance: Strong results on FVD, FID and user studies, especially for object motion control.
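For reference, FID (Fréchet Inception Distance) fits a Gaussian to Inception-network features of real frames (mean $\mu_r$, covariance $\Sigma_r$) and another to features of generated frames ($\mu_g$, $\Sigma_g$). It then measures the Fréchet distance between the two Gaussians, so lower is better. FVD (Fréchet Video Distance) applies the same distance to features from a video network (I3D), which makes it sensitive to temporal consistency as well as per-frame quality.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$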

© Copyright notice

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
