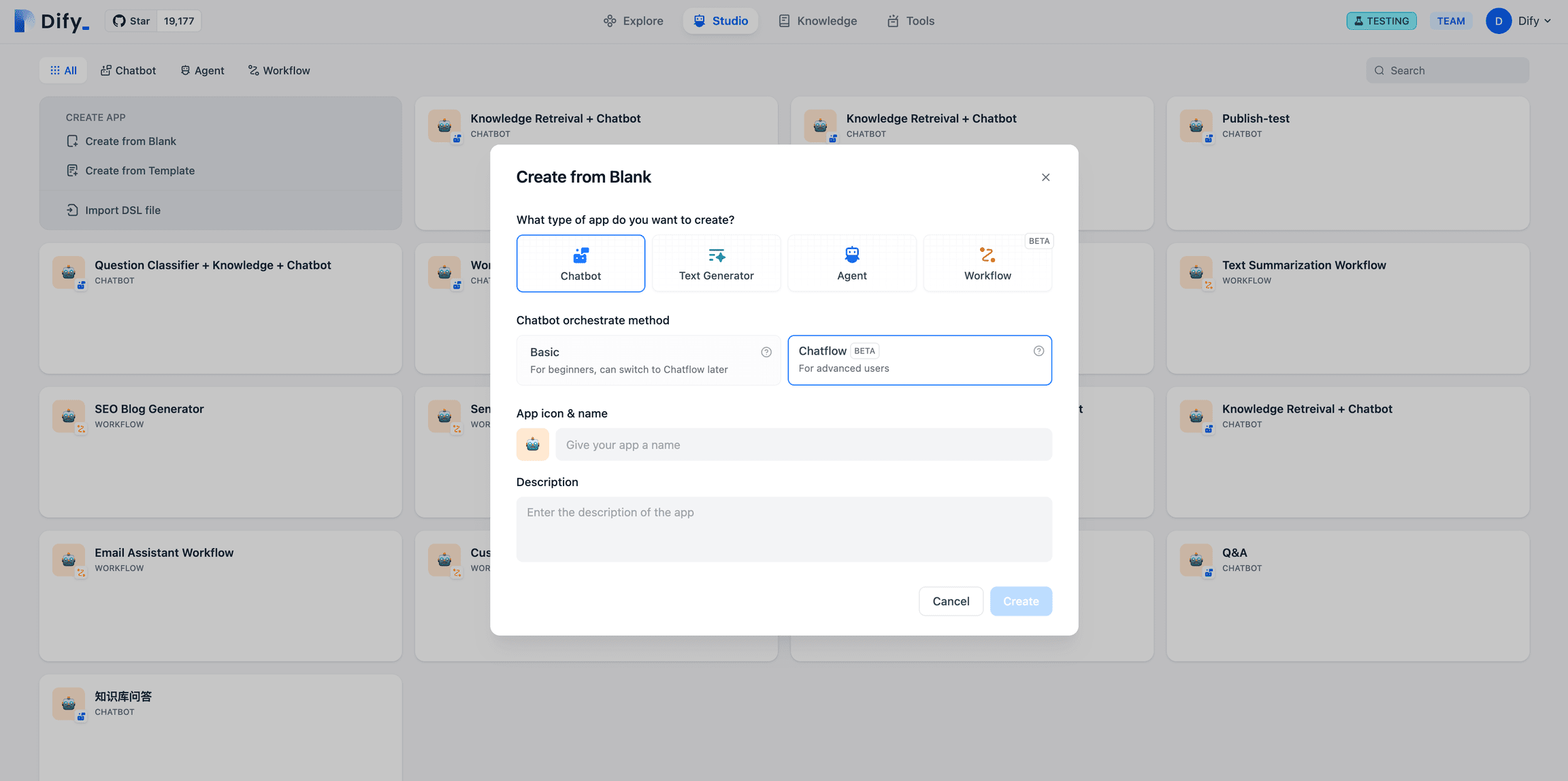

Dify v0.6.9: Publishing Custom Workflows as Tools

Dify v0.6.9 lets you publish AI workflows as reusable tools that can be used in Agents or in other Workflows. A workflow can therefore be integrated into new agents and workflows without duplicating effort. The release also adds two new workflow nodes and improves one existing node:

Iteration: Takes an array as input and processes each item in turn until every item has been handled. For example, to produce a long article you can simply enter a few headings; the workflow then generates one paragraph per heading, with no need for complex prompt orchestration.

Parameter Extractor: Uses a large language model (LLM) to extract structured parameters from natural language, which simplifies calling tools and making HTTP requests inside workflows.

Variable Aggregator: An improved version of the Variable Assigner that supports more flexible variable selection, with better node connectivity for a smoother user experience.
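Conceptually, the Iteration node behaves like a map over an array: the same sub-steps run once per item, in order, and the results are collected. The Python sketch below illustrates only that idea using the headings-to-article example; `iterate` and `generate_paragraph` are hypothetical names, and `generate_paragraph` merely stands in for the LLM node that would sit inside the iteration. It is not Dify's implementation.

```python
from typing import Callable, List


def iterate(items: List[str], step: Callable[[str], str]) -> List[str]:
    """Apply `step` to each item in order, mimicking an Iteration node (sketch)."""
    if not isinstance(items, list):
        raise TypeError("Iteration input must be an array")
    results = []
    for item in items:  # items are processed one at a time, in order
        results.append(step(item))
    return results


def generate_paragraph(heading: str) -> str:
    # Hypothetical placeholder for the LLM call inside the iteration.
    return f"## {heading}\nA paragraph about {heading.lower()}."


headings = ["Background", "Method", "Results"]
article = "\n\n".join(iterate(headings, generate_paragraph))
print(article)
```

Because each item is handled independently, the prompt only ever has to deal with one heading at a time, which is what removes the need for one large, carefully organized prompt.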

Dify workflows come in two types:

Chatflow: conversational applications for dialog-style scenarios, such as customer service, semantic search, and responses built with multi-step logic.

Workflow: geared toward automation and batch processing, suitable for applications such as high-quality translation, data analysis, content generation, and email automation.

Chatflow entry

Workflow entry

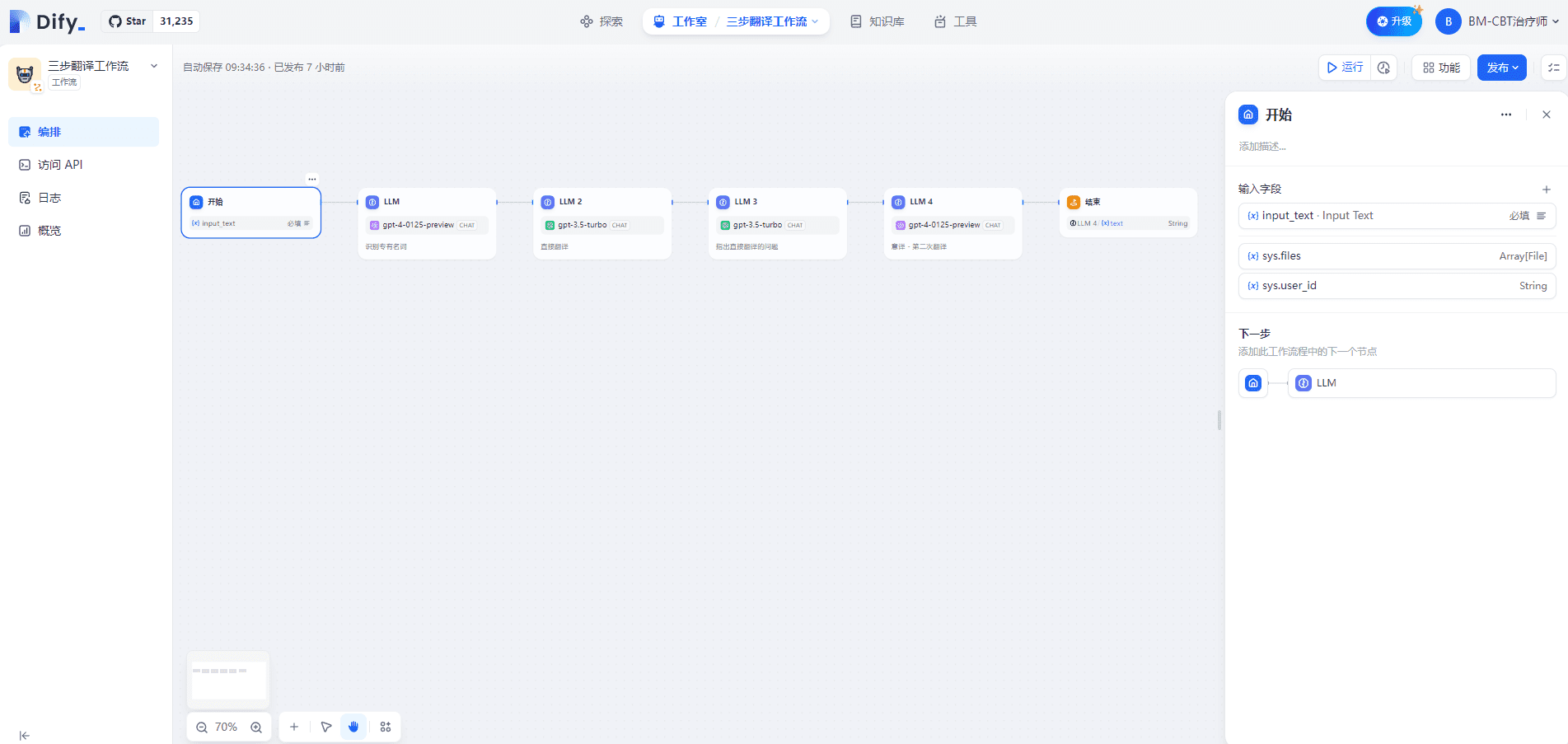

I. Three-step translation workflow

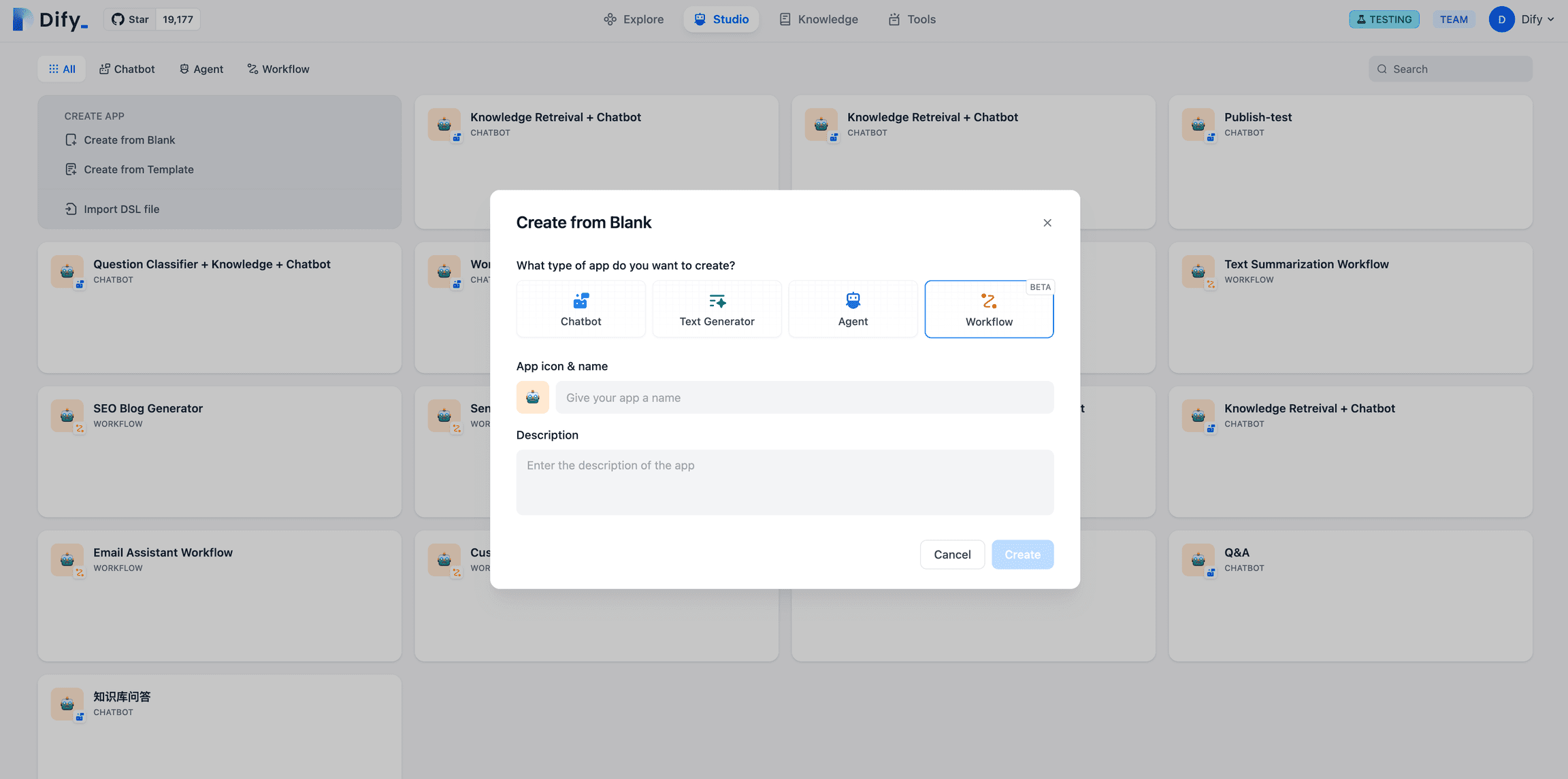

1. Start node

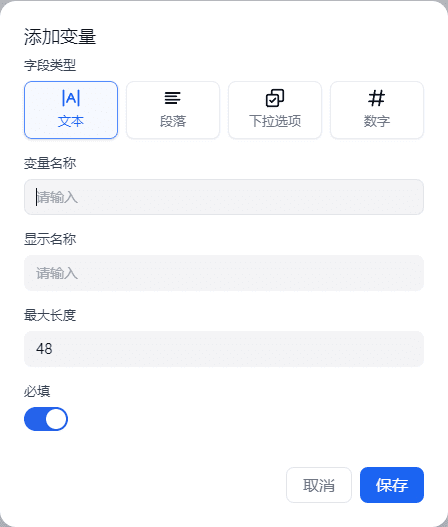

The Start node lets you define input variables of four types: text, paragraph, drop-down options, and number, as shown below:

In Chatflow, the Start node also provides built-in system variables: sys.query and sys.files. sys.query holds the user's question in conversational applications, and sys.files holds files uploaded during the conversation, for example an image whose content should be understood. Using sys.files in that way requires an image-understanding model or a tool that accepts image input.
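To make the four input types concrete, here is a small Python sketch that validates user input against Start-node variable definitions and then adds system variables. The type labels (`text`, `paragraph`, `select`, `number`), the `max_length`/`options` fields, and the `StartVariable`/`build_start_inputs` names are illustrative assumptions, not Dify's internal schema; the merged `sys.files` and `sys.user_id` keys mirror the ones that appear in the run trace in Section IV.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class StartVariable:
    """One user-defined Start-node input (illustrative shape, not Dify's schema)."""
    name: str
    type: str  # assumed labels: "text", "paragraph", "select", "number"
    required: bool = True
    max_length: Optional[int] = None
    options: List[str] = field(default_factory=list)


def validate(var: StartVariable, value: Any) -> Any:
    """Check a raw user value against its variable definition and normalize it."""
    if value is None:
        if var.required:
            raise ValueError(f"{var.name} is required")
        return None
    if var.type in ("text", "paragraph"):
        if not isinstance(value, str):
            raise TypeError(f"{var.name} must be a string")
        if var.max_length is not None and len(value) > var.max_length:
            raise ValueError(f"{var.name} is longer than {var.max_length} characters")
        return value
    if var.type == "select":
        if value not in var.options:
            raise ValueError(f"{var.name} must be one of {var.options}")
        return value
    if var.type == "number":
        return float(value)  # raises ValueError for non-numeric input
    raise ValueError(f"unsupported variable type: {var.type}")


def build_start_inputs(variables: List[StartVariable], user_inputs: Dict[str, Any],
                       user_id: str, files: Optional[list] = None) -> Dict[str, Any]:
    """Validate user inputs, then add the system variables seen in the run trace."""
    inputs = {v.name: validate(v, user_inputs.get(v.name)) for v in variables}
    inputs["sys.files"] = files or []
    inputs["sys.user_id"] = user_id
    return inputs


example = build_start_inputs(
    [StartVariable("input_text", "paragraph")],
    {"input_text": "Transformer是大语言模型的基础。"},
    user_id="7d8864c3-c456-4588-9b0a-9368c94ca377",
)
print(example)
```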

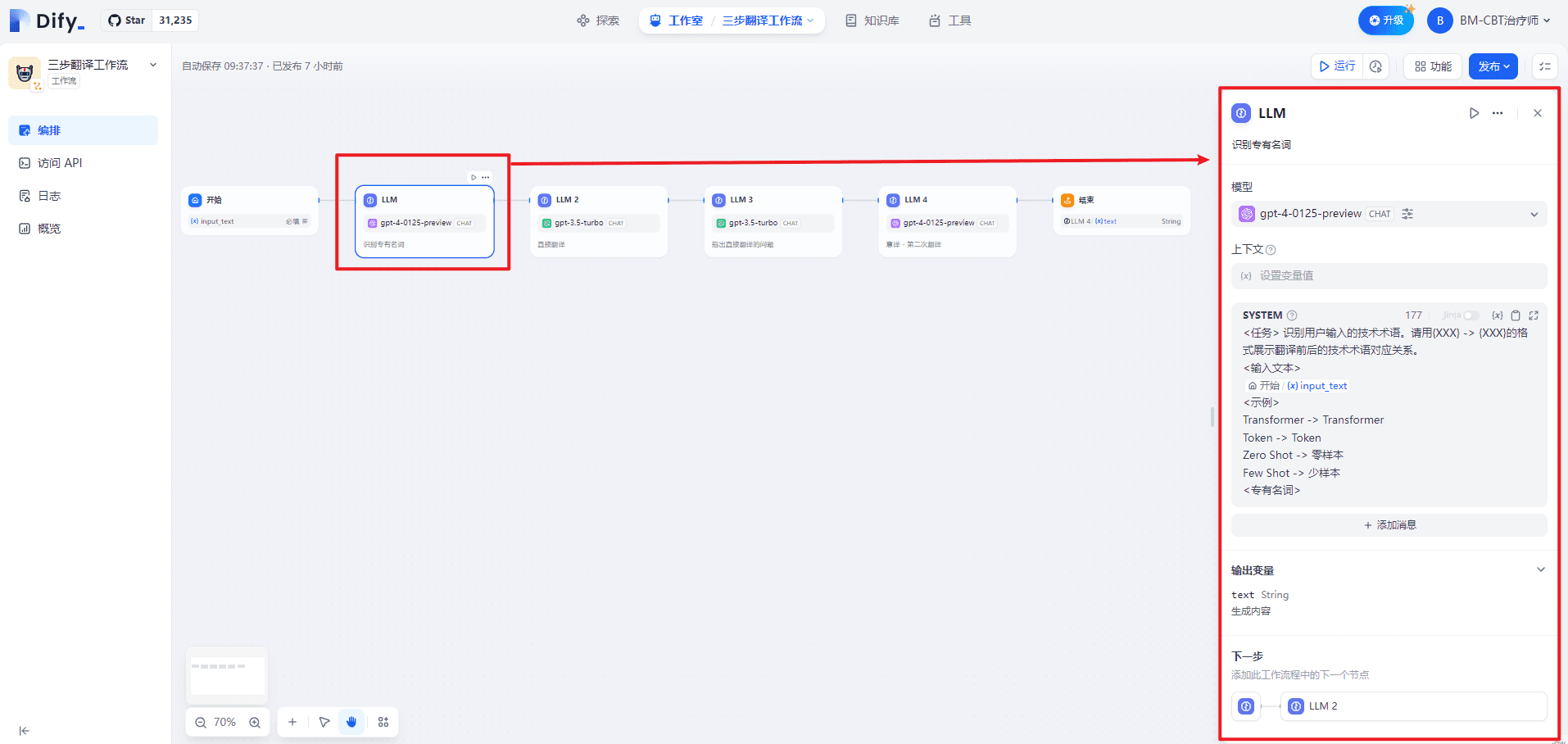

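2. LLM node (identifying proper nouns)

The SYSTEM prompt, which gives the model high-level guidance for the conversation, is as follows (translated from the original Chinese; the run trace in Section IV shows the Chinese version):

```
<Task> Identify the technical terms in the user's input. Show how each term maps from before to after translation, using the format {XXX} -> {XXX}.
<Input text>
{{#1711067409646.input_text#}}
<Examples>
Transformer -> Transformer
Token -> Token
零样本 -> Zero Shot
少样本 -> Few Shot
<Proper nouns>
```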
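Placeholders such as `{{#1711067409646.input_text#}}` reference a variable of an upstream node (here the Start node's `input_text`), and the trace in Section IV shows the prompt after the value has been filled in. A rough Python approximation of that substitution follows; the regex and the `render` helper are assumptions for illustration, not Dify's code. Note that ordinary braces such as `{XXX}` are left untouched.

```python
import re
from typing import Dict

# Assumed selector syntax: {{#<node_id>.<variable>#}}, e.g. {{#1711067409646.input_text#}}
SELECTOR = re.compile(r"\{\{#([A-Za-z0-9_]+)\.([A-Za-z0-9_.]+)#\}\}")


def render(template: str, outputs: Dict[str, Dict[str, object]]) -> str:
    """Replace each selector with the named variable from the named upstream node."""
    def lookup(match: "re.Match[str]") -> str:
        node_id, variable = match.group(1), match.group(2)
        return str(outputs[node_id][variable])  # KeyError if the variable is missing
    return SELECTOR.sub(lookup, template)


template = (
    "<Task> Use the format {XXX} -> {XXX}.\n"
    "<Input text>\n"
    "{{#1711067409646.input_text#}}\n"
    "<Proper nouns>"
)
outputs = {"1711067409646": {"input_text": "Transformer是大语言模型的基础。"}}
print(render(template, outputs))
```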

In the LLM node you can customize the prompts sent to the model. If you select a chat model, you can customize the SYSTEM, USER, and ASSISTANT prompts, as summarized below:

| No. | Prompt type | Description | Role |
|---|---|---|---|
| 1 | SYSTEM (prompt) | Provides high-level guidance for the conversation | Prompt |
| 2 | USER | Provides instructions, queries, or any other text input to the model | User question |
| 3 | ASSISTANT | The model's response to the user's message | Assistant answer |
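With chat models, these three prompt types usually end up as a list of role-tagged messages. The sketch below assumes the common `{"role": ..., "content": ...}` message shape used by OpenAI-compatible chat APIs; `build_messages` is a hypothetical helper, not a Dify function. The translation workflow in this article only uses a SYSTEM prompt, as its trace shows.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def build_messages(system: str,
                   turns: Sequence[Tuple[str, Optional[str]]]) -> List[Dict[str, str]]:
    """Assemble SYSTEM/USER/ASSISTANT prompts into an ordered message list."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in turns:
        messages.append({"role": "user", "content": user_text})
        if assistant_text is not None:  # earlier answers; the last turn awaits the model
            messages.append({"role": "assistant", "content": assistant_text})
    return messages


msgs = build_messages(
    "Provide high-level guidance here.",
    [("What is a token?", "A unit of text."), ("And a Transformer?", None)],
)
print([m["role"] for m in msgs])
```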

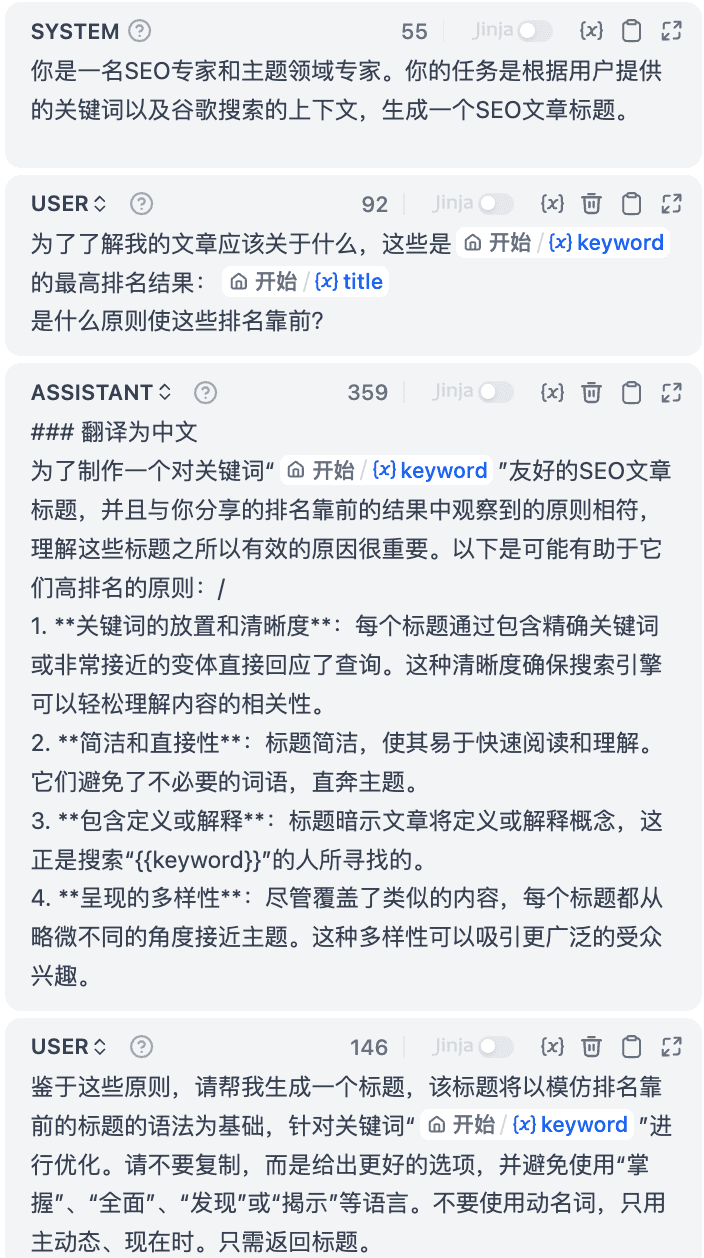

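3. LLM2 node (direct translation)

The SYSTEM prompt is as follows (translated from the original Chinese):

```
<Task> You are a professional translator proficient in English, with an exceptional ability to turn specialized academic papers into accessible popular-science articles. Please help me translate the following Chinese paragraph into English, in a style similar to English popular-science writing.
<Constraints>
Translate directly from the Chinese content, preserve the original format, and do not omit any information.
<Before translation>
{{#1711067409646.input_text#}}
<Direct translation>
```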

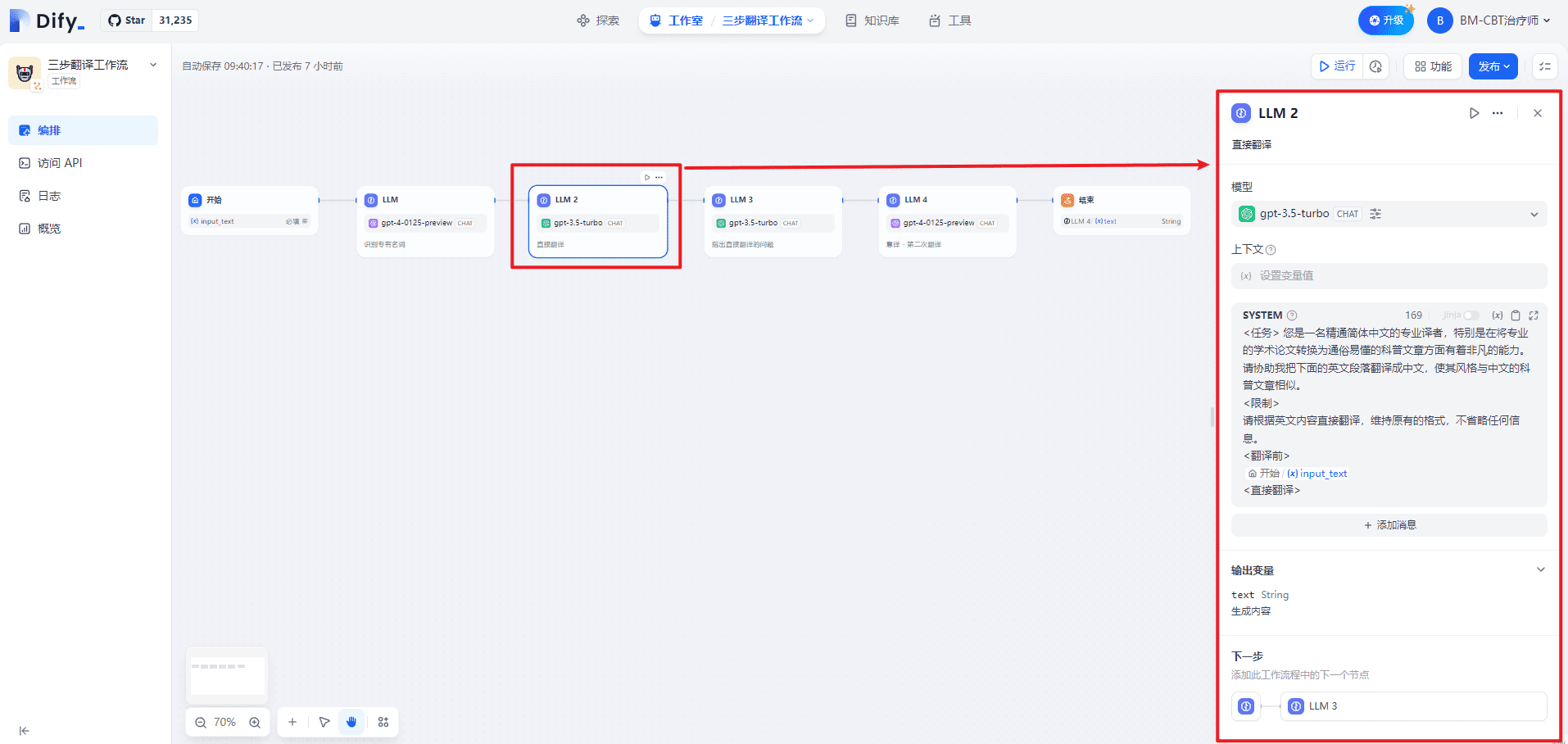

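4. LLM3 node (identifying problems in the direct translation)

The SYSTEM prompt is as follows (translated from the original Chinese):

```
<Task>
Based on the direct translation, point out its specific problems. Describe them precisely, avoid vague wording, and do not add any content or formatting that is not in the original. This includes, but is not limited to:
- Expressions that do not follow English usage: state clearly where they are inappropriate.
- Awkward sentence structure: point out the exact location. There is no need to suggest fixes; we will adjust these in the subsequent free translation.
- Vague or hard-to-understand expressions: if possible, try to explain them.
<Direct translation>
{{#1711067578643.text#}}
<Original text>
{{#1711067409646.input_text#}}
<Problems with the direct translation>
```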

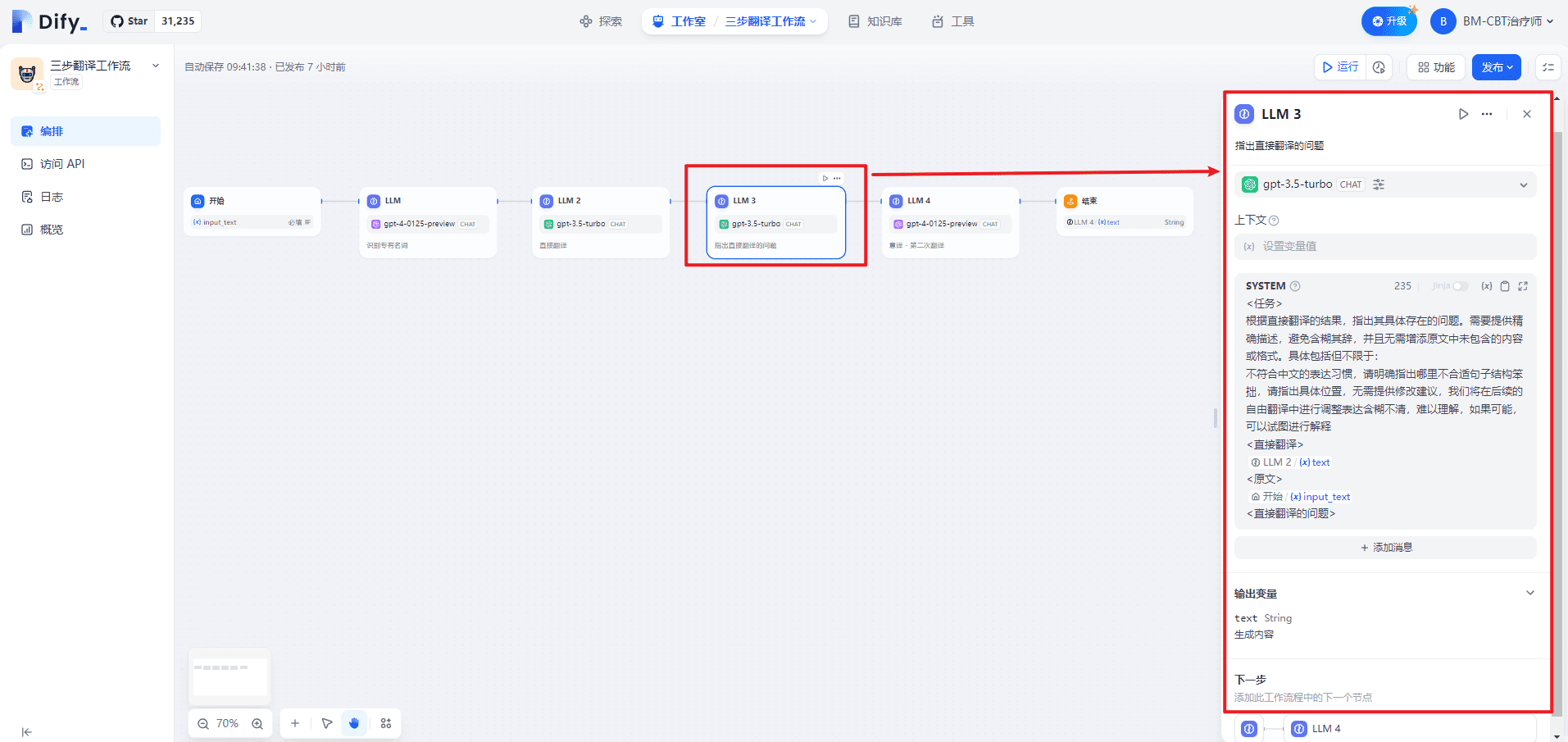

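5. LLM4 node (free translation, i.e. the second translation)

The SYSTEM prompt is as follows (translated from the original Chinese):

```
<Task> Based on the initial direct translation and the problems identified afterward, we will retranslate the text to convey the meaning of the original more accurately. In doing so, we will make sure the content stays faithful to the original while reading more naturally in English and being easier to understand. The original format will be kept unchanged.
<Direct translation>
{{#1711067578643.text#}}
<Problems with the first translation>
{{#1711067817657.text#}}
<Free translation>
```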

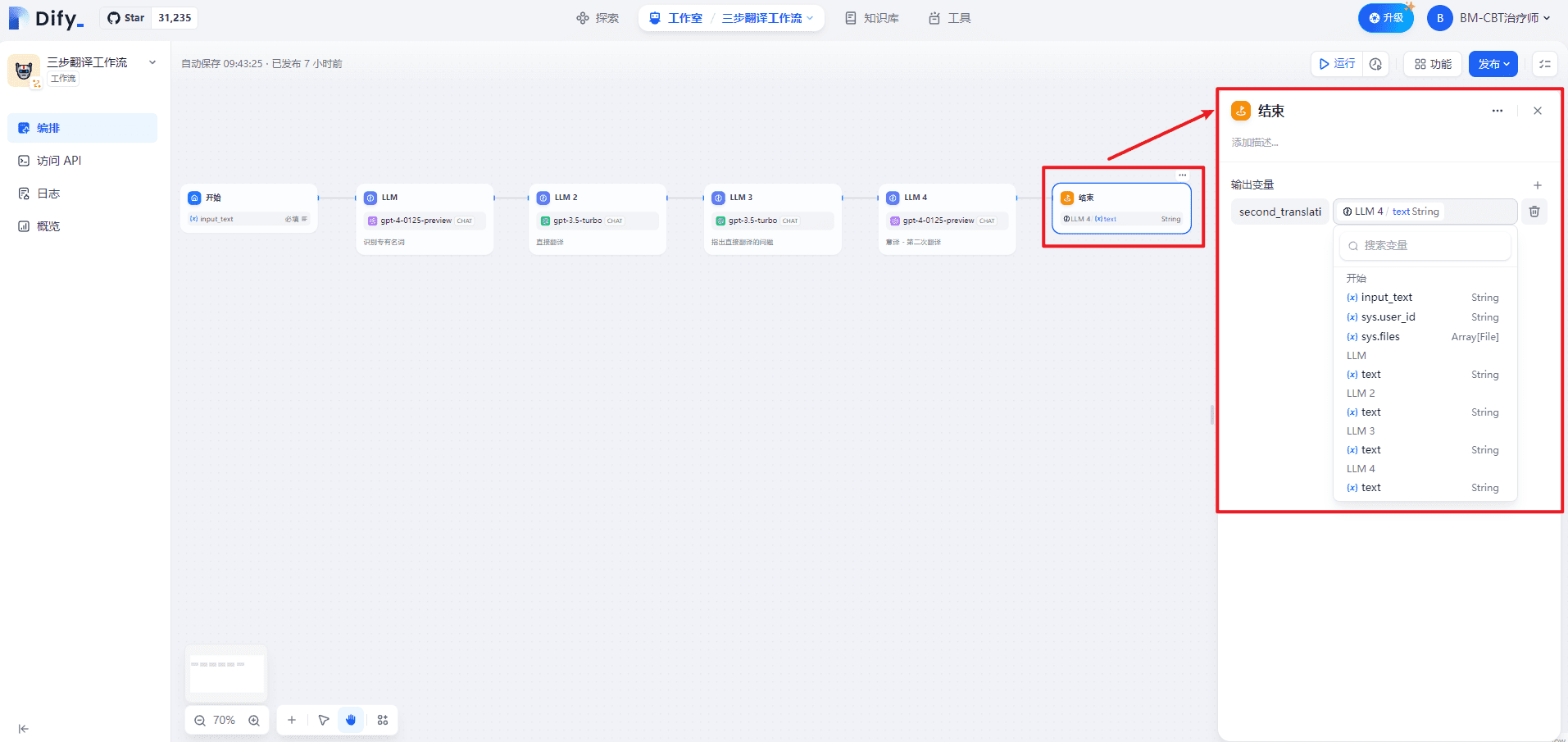

6. End node

Define the output variable name as `second_translation`.
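Putting the nodes together, the data flow can be sketched as plain function calls. This is a simplified model, not how Dify executes workflows: `llm` is a hypothetical callable that takes a prompt and returns text, and the prompt strings are abbreviated versions of the ones above. Judging from the node IDs in the prompts, LLM2 (`1711067578643`) feeds LLM3 and LLM4, LLM3 (`1711067817657`) feeds LLM4, and no later prompt references the first LLM node's term list.

```python
from typing import Callable, Dict, List

# Hypothetical model interface: takes a system prompt, returns the model's reply.
LLM = Callable[[str], str]


def three_step_translate(input_text: str, llm: LLM) -> Dict[str, str]:
    # LLM node: list technical terms (its output is not referenced downstream).
    _terms = llm(f"<Task> Identify the technical terms...\n<Input text>\n{input_text}\n<Proper nouns>")
    # LLM2: direct translation of the original text.
    direct = llm(f"<Task> Translate into English...\n<Before translation>\n{input_text}\n<Direct translation>")
    # LLM3: critique the direct translation against the original text.
    problems = llm(
        f"<Task> Point out problems...\n<Direct translation>\n{direct}\n"
        f"<Original text>\n{input_text}\n<Problems with the direct translation>"
    )
    # LLM4: free translation based on the direct translation and the critique.
    second = llm(
        f"<Task> Retranslate...\n<Direct translation>\n{direct}\n"
        f"<Problems with the first translation>\n{problems}\n<Free translation>"
    )
    # End node: expose a single output variable.
    return {"second_translation": second}


calls: List[str] = []


def fake_llm(prompt: str) -> str:
    """Stub model for a dry run: records each prompt and returns a numbered placeholder."""
    calls.append(prompt)
    return f"out{len(calls)}"


result = three_step_translate("Transformer是大语言模型的基础。", fake_llm)
print(result)
```

The stub makes the wiring visible without calling a real model: each placeholder shows up only in the prompts of the nodes that consume it.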

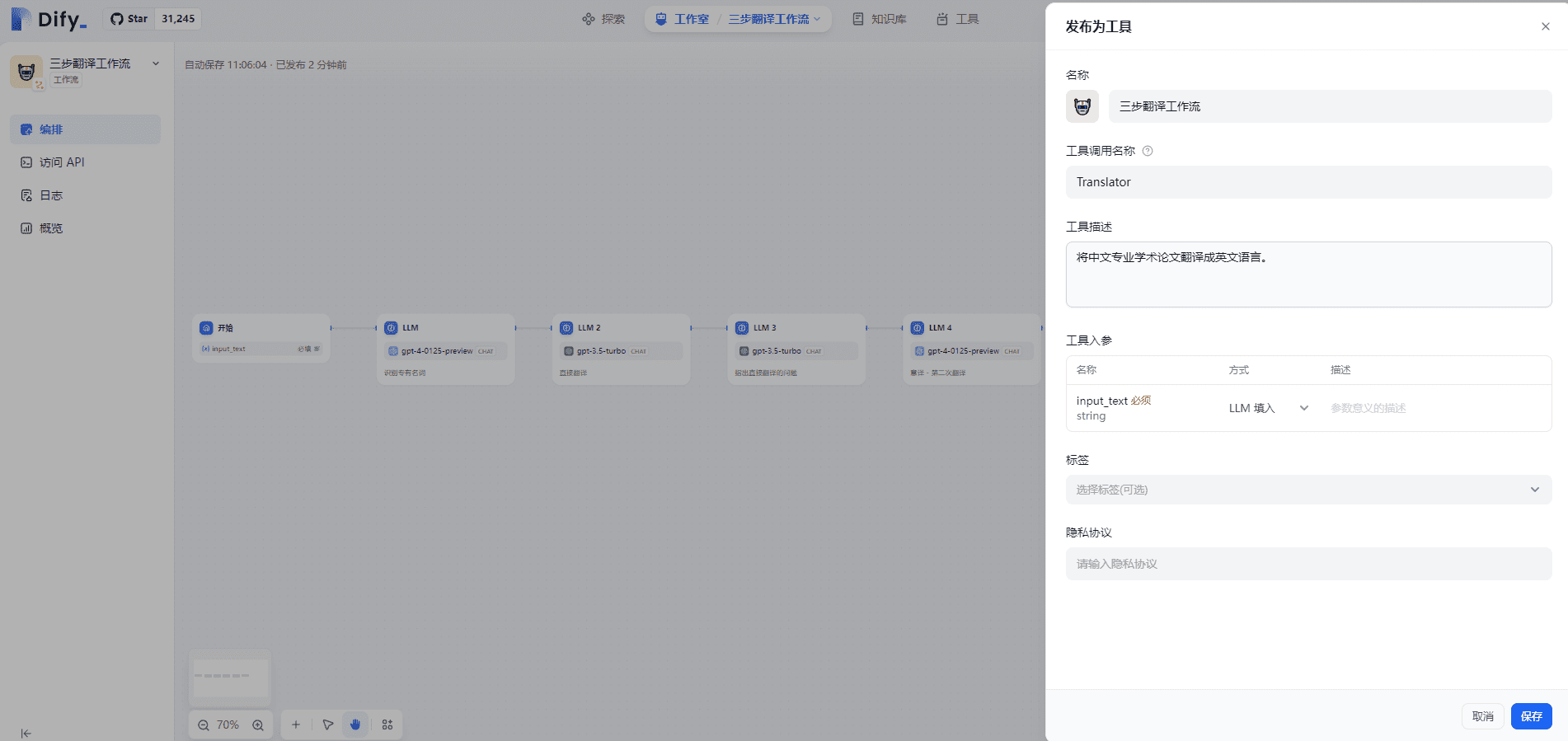

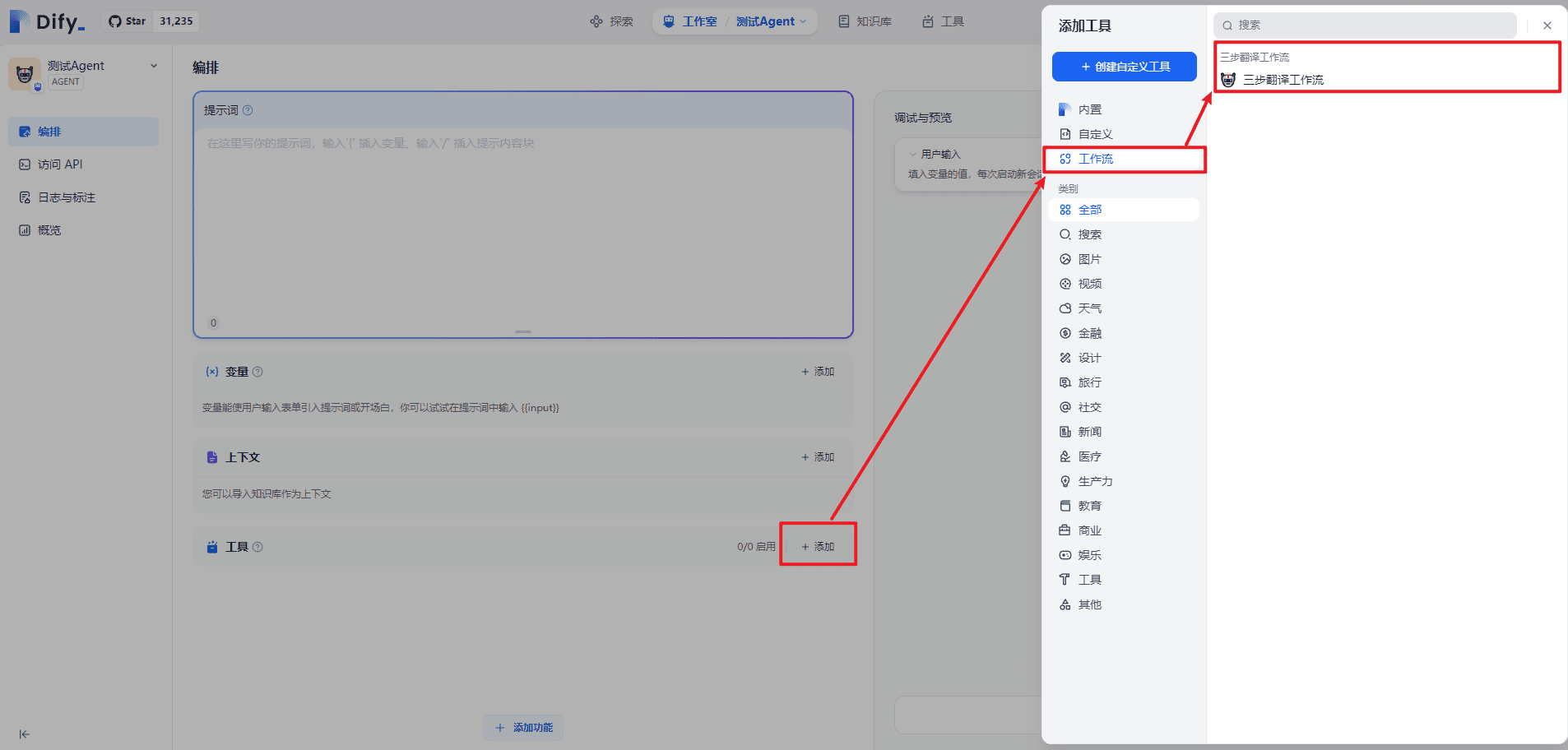

7. Publish the workflow as a tool

Publish the workflow as a tool so that it can be used in an Agent, as shown below:

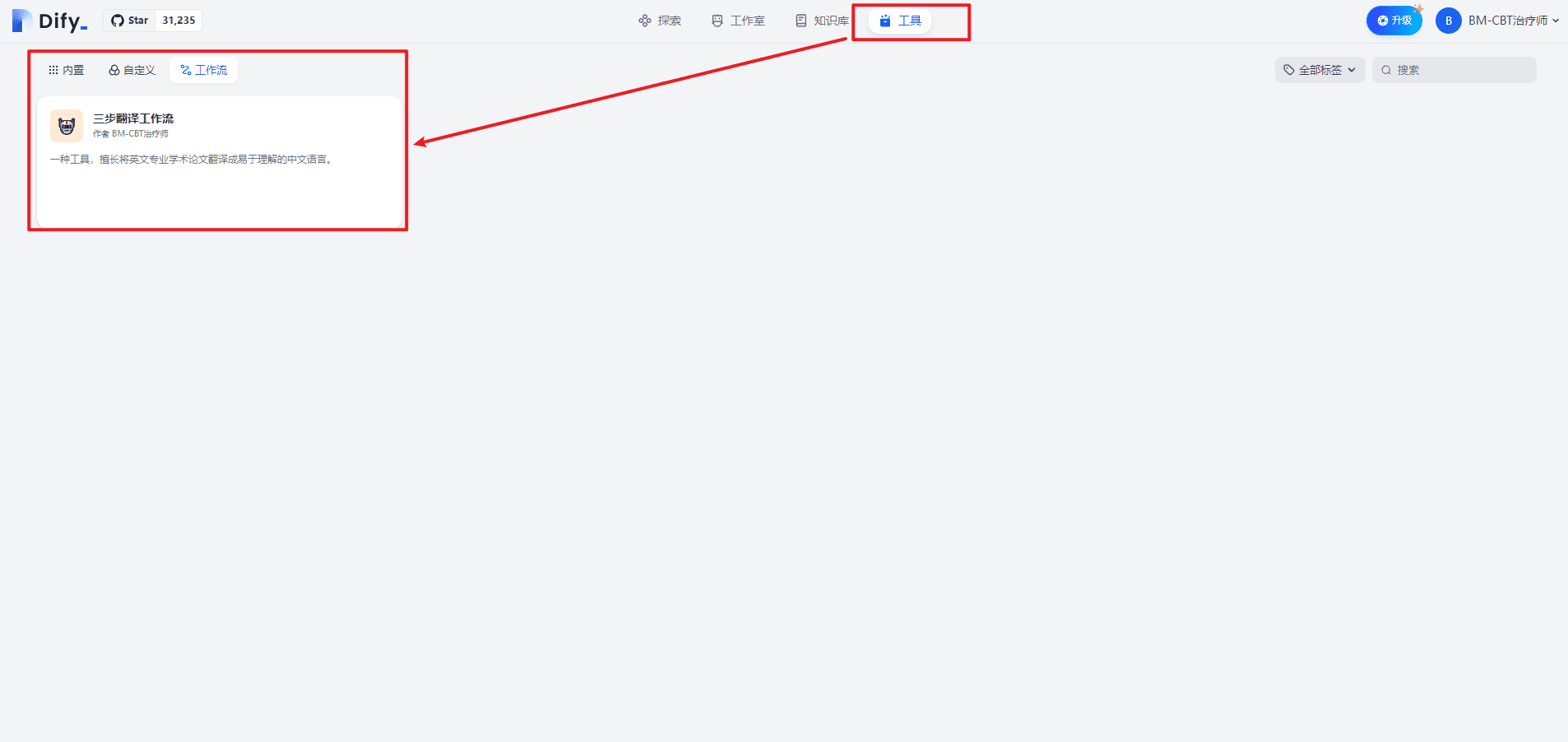

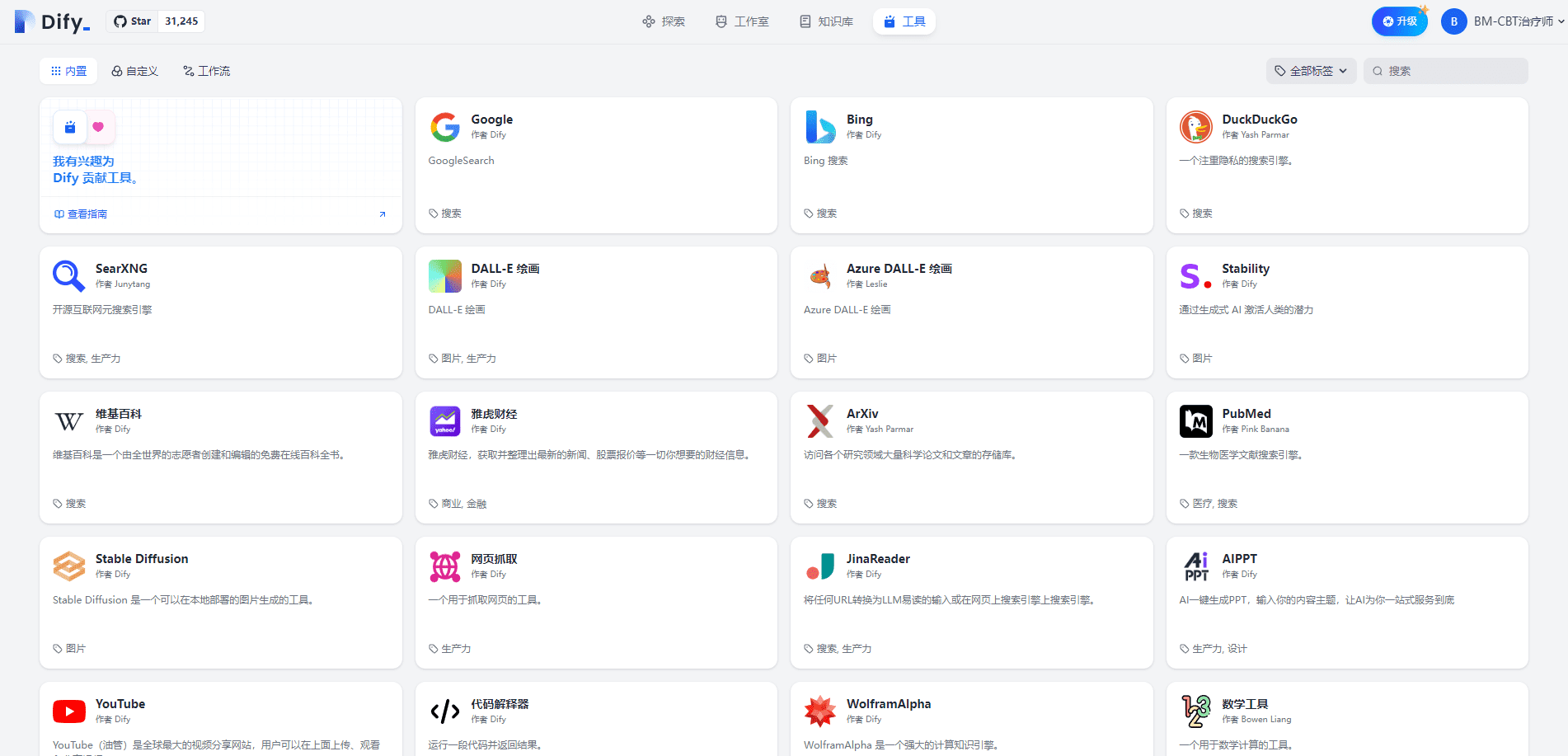

Click through to the Tools page, as shown below:

8. Jinja templates

While writing the prompts, I noticed that Jinja templates are supported; see [3] and [4] for details.

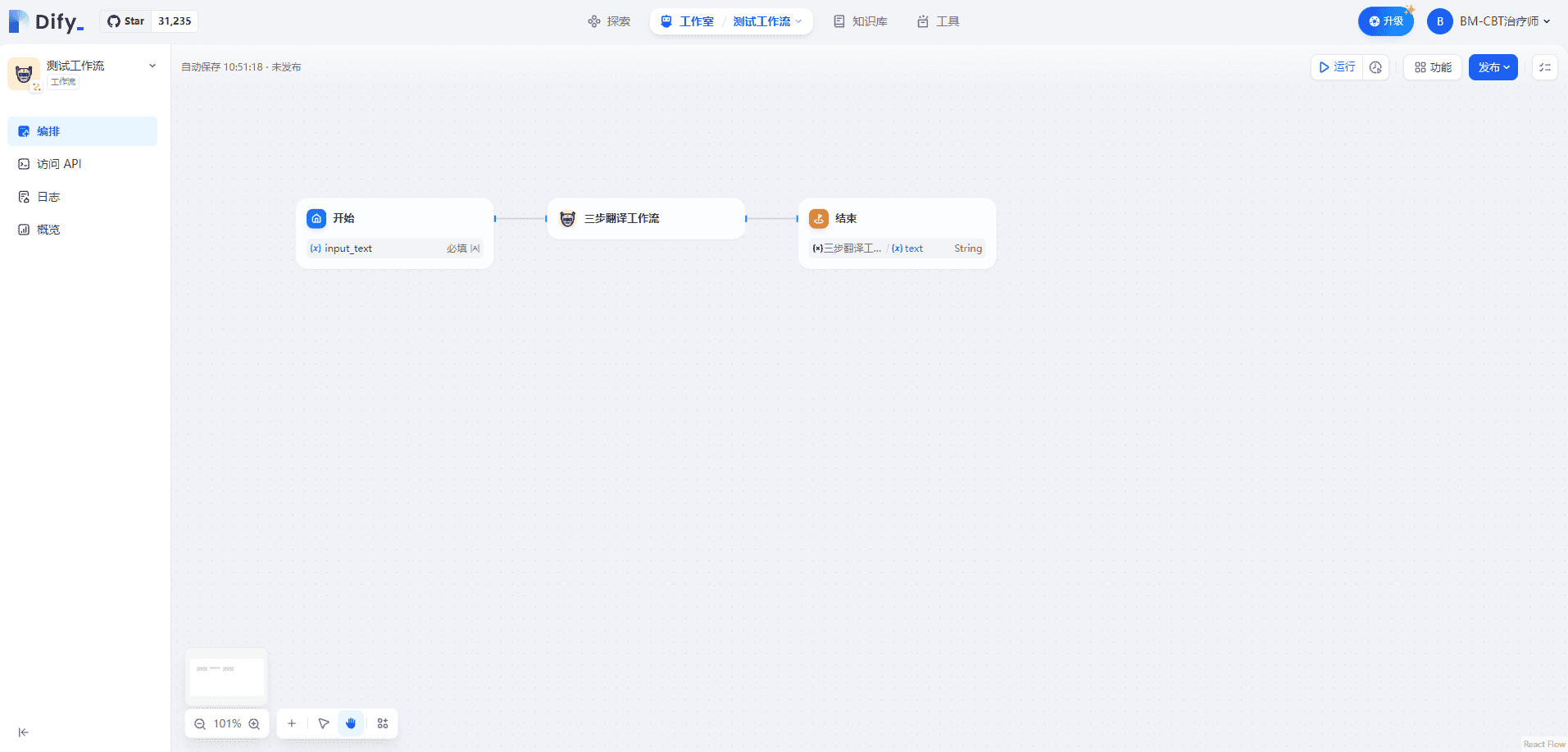

II. Using a workflow inside another workflow

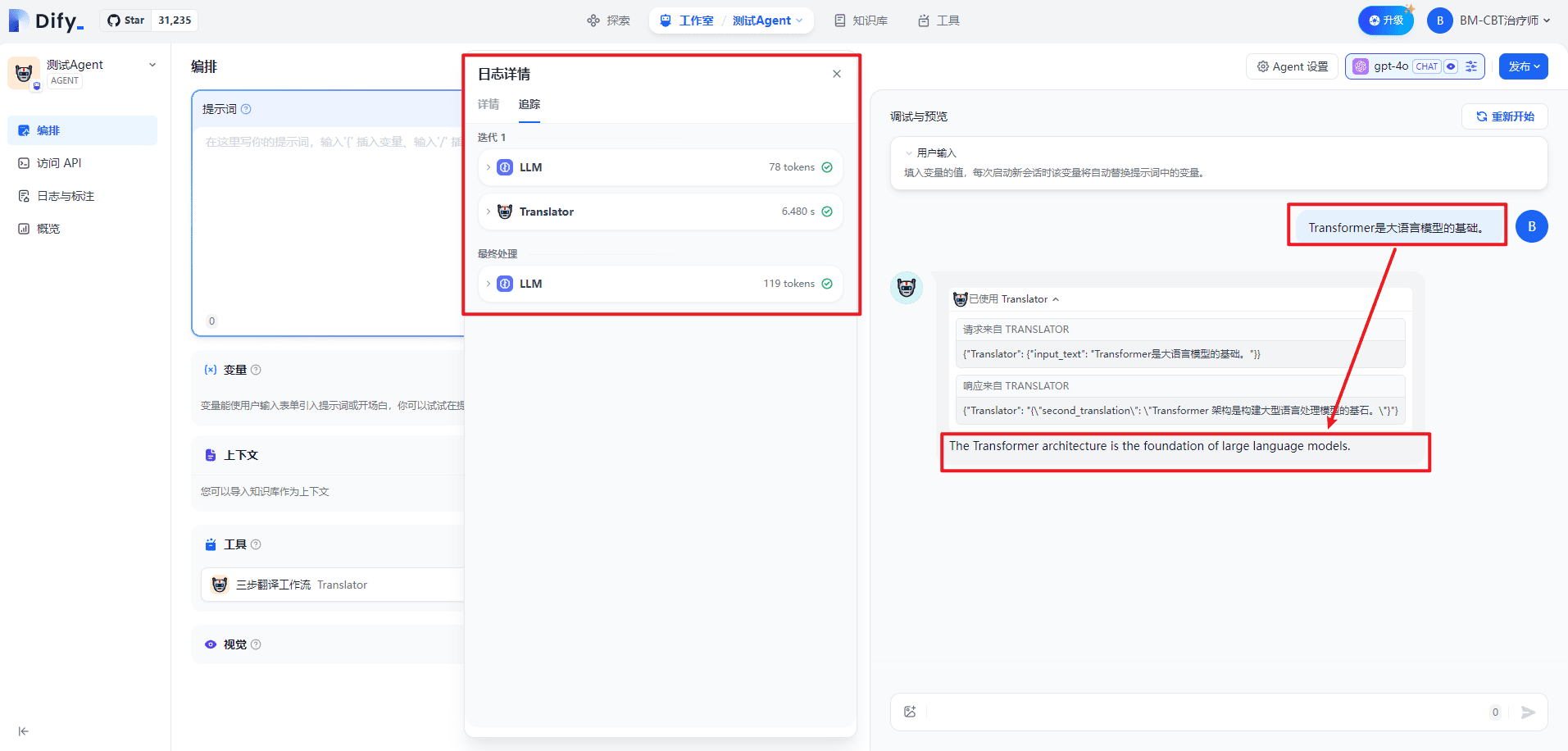

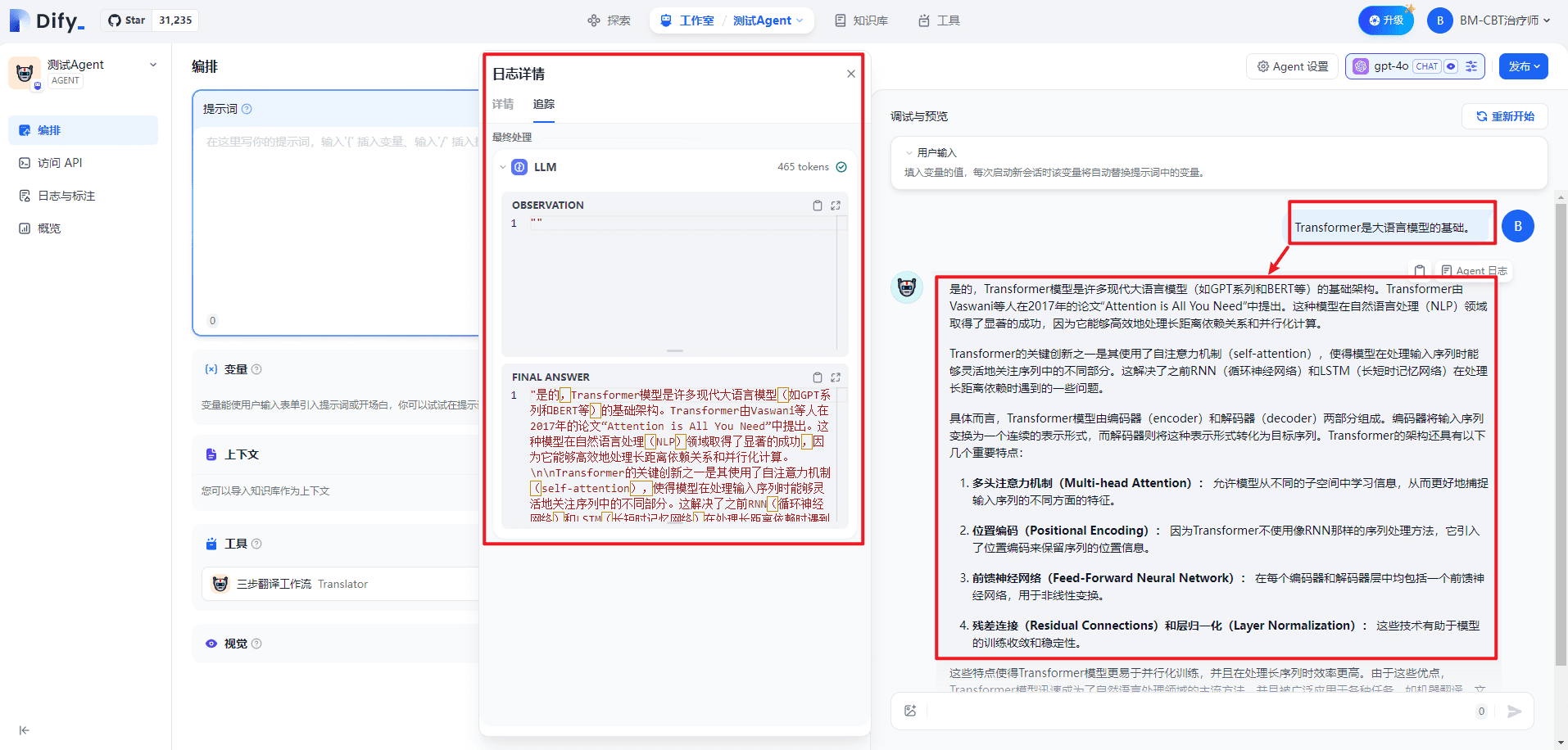

III. Using a workflow in an Agent

In essence, the workflow is treated as a tool that extends the Agent's capabilities, just like other tools such as web search or scientific computing.
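From the Agent's point of view, a published workflow is described like any other tool: a name, a description, and input parameters. The sketch below writes such a description in the JSON Schema style used by OpenAI-compatible function calling. The tool name, the description text, and the assumption that Dify exposes workflow tools to the model in this exact shape are all illustrative; only the `input_text` parameter comes from the workflow above.

```python
import json

translation_tool = {
    "type": "function",
    "function": {
        "name": "three_step_translation",  # hypothetical tool name
        "description": (
            "Translate Chinese text into fluent English via a direct translation, "
            "a critique of that translation, and a free translation."
        ),
        "parameters": {
            "type": "object",
            "properties": {
                # Mirrors the Start node's input variable.
                "input_text": {"type": "string", "description": "The Chinese text to translate."},
            },
            "required": ["input_text"],
        },
    },
}

print(json.dumps(translation_tool, indent=2, ensure_ascii=False))
```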

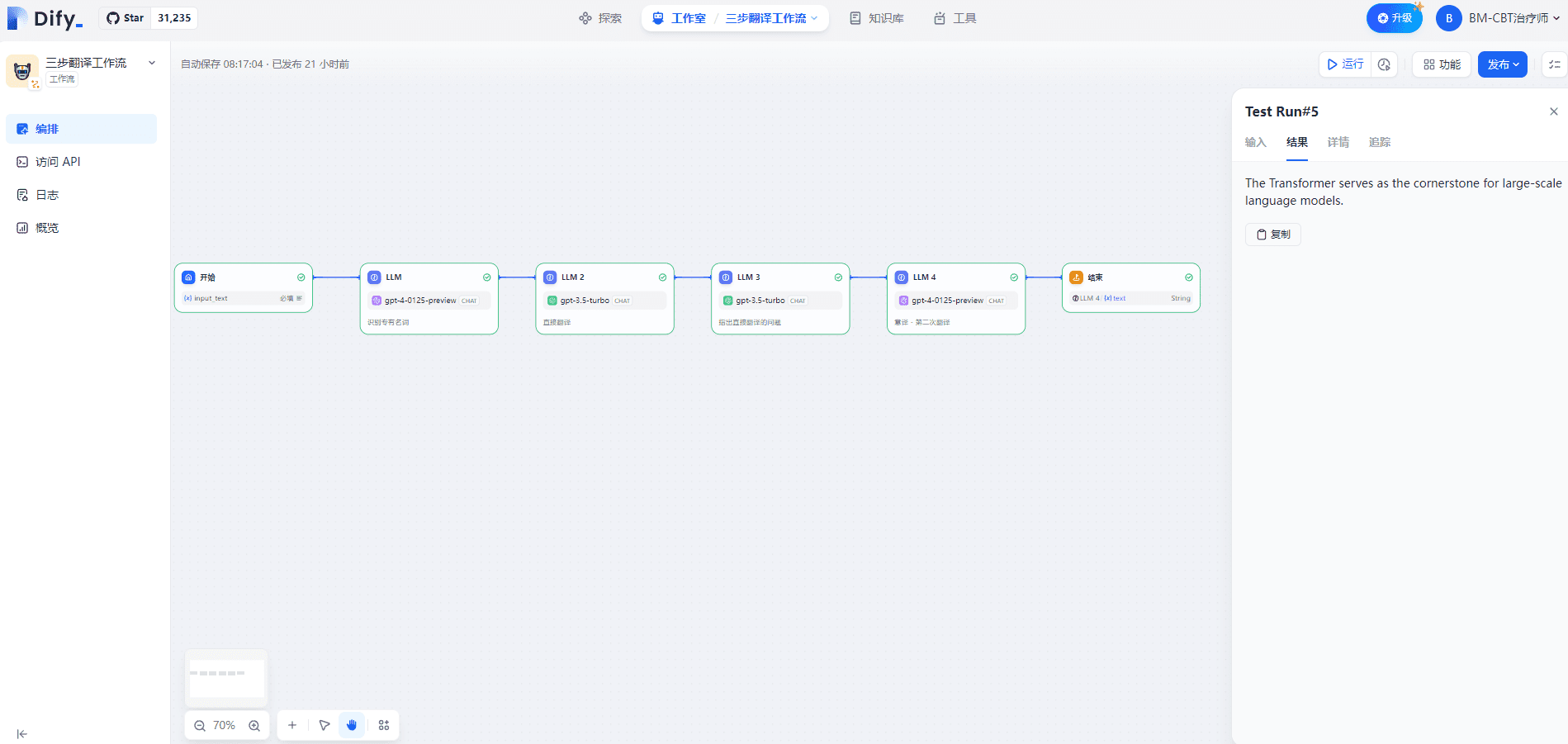

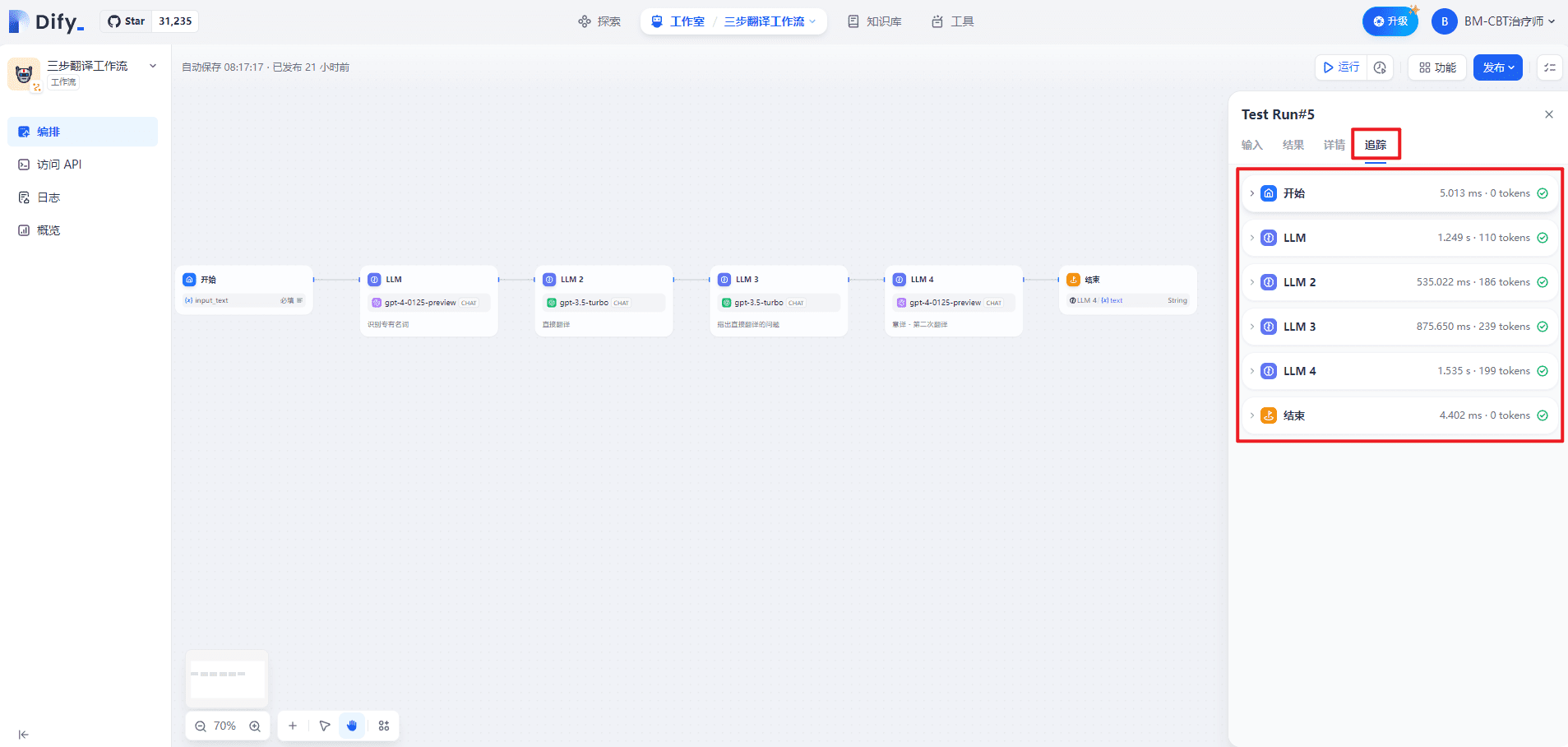

IV. Testing the three-step translation workflow on its own

The input is shown below:

Transformer是大语言模型的基础。 (Chinese for "Transformer is the foundation of large language models.")

The output is shown below:

The Transformer serves as the cornerstone for large-scale language models.

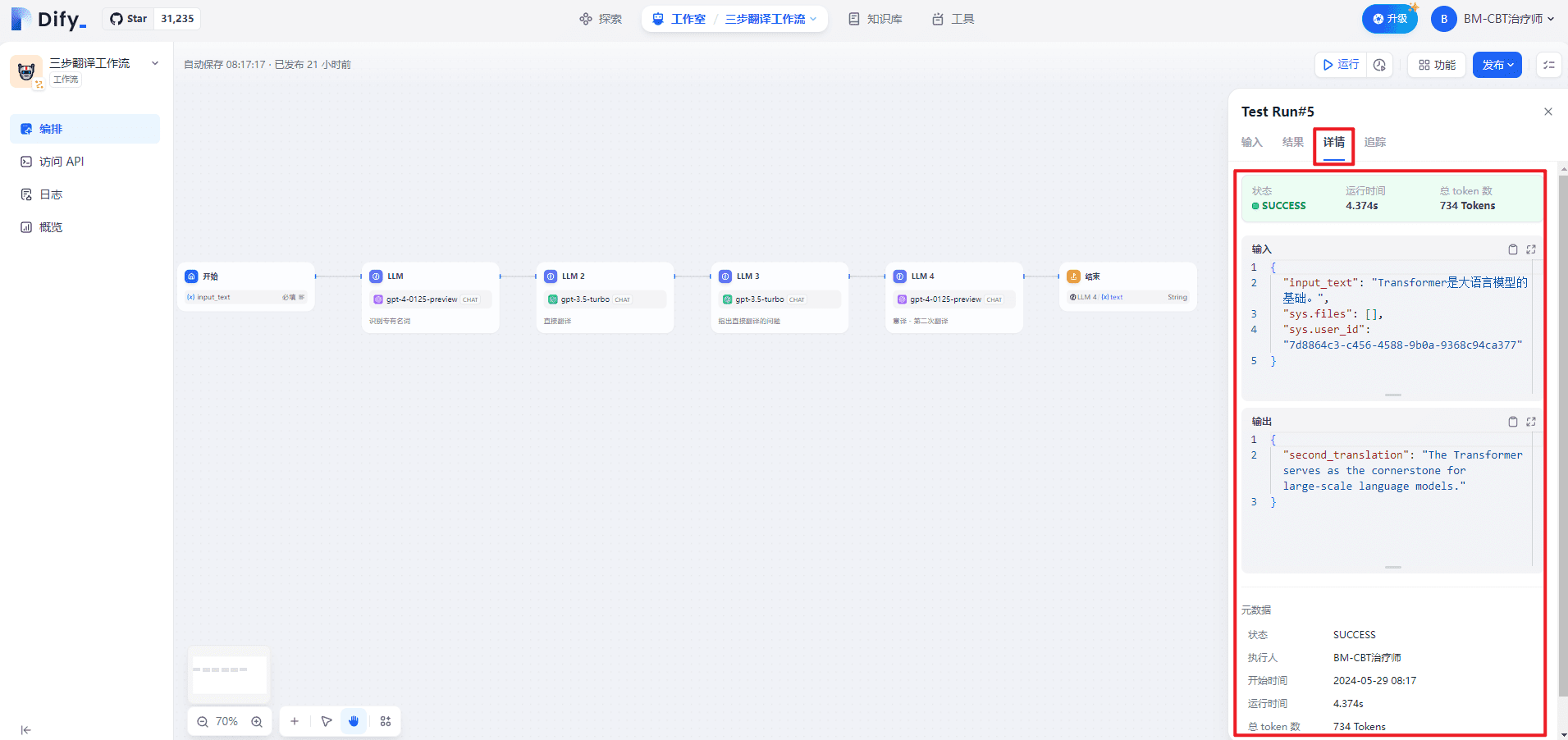

The details page is shown below:

The tracing page is shown below:

1. Start

(1) Input

```json
{
  "input_text": "Transformer是大语言模型的基础。",
  "sys.files": [],
  "sys.user_id": "7d8864c3-c456-4588-9b0a-9368c94ca377"
}
```

(2) Output

```json
{
  "input_text": "Transformer是大语言模型的基础。",
  "sys.files": [],
  "sys.user_id": "7d8864c3-c456-4588-9b0a-9368c94ca377"
}
```

2. LLM

(1) Data processing

```json
{
  "model_mode": "chat",
  "prompts": [
    {
      "role": "system",
      "text": "<任务> 识别用户输入的技术术语。请用{XXX} -> {XXX}的格式展示翻译前后的技术术语对应关系。\n<输入文本>\nTransformer是大语言模型的基础。\n<示例>\nTransformer -> Transformer\nToken -> Token\n零样本 -> Zero Shot \n少样本 -> Few Shot\n<专有名词>",
      "files": []
    }
  ]
}
```

(2) Output

```json
{
  "text": "Transformer -> Transformer",
  "usage": {
    "prompt_tokens": 107,
    "prompt_unit_price": "0.01",
    "prompt_price_unit": "0.001",
    "prompt_price": "0.0010700",
    "completion_tokens": 3,
    "completion_unit_price": "0.03",
    "completion_price_unit": "0.001",
    "completion_price": "0.0000900",
    "total_tokens": 110,
    "total_price": "0.0011600",
    "currency": "USD",
    "latency": 1.0182260260044131
  }
}
```
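The `usage` figures are consistent with a simple formula: price = tokens × unit_price × price_unit, where a `price_unit` of 0.001 suggests the unit price is quoted per 1,000 tokens. This formula is inferred from the logged values rather than taken from Dify's source; the Python check below reproduces this node's numbers, and the same arithmetic also matches the LLM 2, LLM 3, and LLM 4 traces. `Decimal` is used to avoid floating-point rounding noise.

```python
from decimal import Decimal


def token_cost(tokens: int, unit_price: str, price_unit: str) -> Decimal:
    """price = tokens * unit_price * price_unit (formula inferred from the trace)."""
    return tokens * Decimal(unit_price) * Decimal(price_unit)


prompt_price = token_cost(107, "0.01", "0.001")      # prompt_tokens = 107
completion_price = token_cost(3, "0.03", "0.001")    # completion_tokens = 3
total_price = prompt_price + completion_price
print(prompt_price, completion_price, total_price)
```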

3. LLM 2

(1) Data processing

```json
{
  "model_mode": "chat",
  "prompts": [
    {
      "role": "system",
      "text": "<任务> 您是一名精通英文的专业译者,特别是在将专业的学术论文转换为通俗易懂的科普文章方面有着非凡的能力。请协助我把下面的中文段落翻译成英文,使其风格与英文的科普文章相似。\n<限制> \n请根据中文内容直接翻译,维持原有的格式,不省略任何信息。\n<翻译前> \nTransformer是大语言模型的基础。\n<直接翻译> ",
      "files": []
    }
  ]
}
```

(2) Output

```json
{
  "text": "The Transformer is the foundation of large language models.",
  "usage": {
    "prompt_tokens": 176,
    "prompt_unit_price": "0.001",
    "prompt_price_unit": "0.001",
    "prompt_price": "0.0001760",
    "completion_tokens": 10,
    "completion_unit_price": "0.002",
    "completion_price_unit": "0.001",
    "completion_price": "0.0000200",
    "total_tokens": 186,
    "total_price": "0.0001960",
    "currency": "USD",
    "latency": 0.516718350991141
  }
}
```

4. LLM 3

(1) Data processing

```json
{
  "model_mode": "chat",
  "prompts": [
    {
      "role": "system",
      "text": "<任务>\n根据直接翻译的结果,指出其具体存在的问题。需要提供精确描述,避免含糊其辞,并且无需增添原文中未包含的内容或格式。具体包括但不限于:\n不符合英文的表达习惯,请明确指出哪里不合适句子结构笨拙,请指出具体位置,无需提供修改建议,我们将在后续的自由翻译中进行调整表达含糊不清,难以理解,如果可能,可以试图进行解释\n<直接翻译>\nThe Transformer is the foundation of large language models.\n<原文>\nTransformer是大语言模型的基础。\n<直接翻译的问题>",
      "files": []
    }
  ]
}
```

(2) Output

```json
{
  "text": "句子结构笨拙,不符合英文表达习惯。",
  "usage": {
    "prompt_tokens": 217,
    "prompt_unit_price": "0.001",
    "prompt_price_unit": "0.001",
    "prompt_price": "0.0002170",
    "completion_tokens": 22,
    "completion_unit_price": "0.002",
    "completion_price_unit": "0.001",
    "completion_price": "0.0000440",
    "total_tokens": 239,
    "total_price": "0.0002610",
    "currency": "USD",
    "latency": 0.8566757979860995
  }
}
```

5. LLM 4

(1) Data processing

```json
{
  "model_mode": "chat",
  "prompts": [
    {
      "role": "system",
      "text": "<任务>基于初次直接翻译的成果及随后识别的各项问题,我们将进行一次重新翻译,旨在更准确地传达原文的意义。在这一过程中,我们将致力于确保内容既忠于原意,又更加贴近英文的表达方式,更容易被理解。在此过程中,我们将保持原有格式不变。\n<直接翻译> \nThe Transformer is the foundation of large language models.\n<第一次翻译的问题>\n句子结构笨拙,不符合英文表达习惯。\n<意译> ",
      "files": []
    }
  ]
}
```

(2) Output

```json
{
  "text": "The Transformer serves as the cornerstone for large-scale language models.",
  "usage": {
    "prompt_tokens": 187,
    "prompt_unit_price": "0.01",
    "prompt_price_unit": "0.001",
    "prompt_price": "0.0018700",
    "completion_tokens": 12,
    "completion_unit_price": "0.03",
    "completion_price_unit": "0.001",
    "completion_price": "0.0003600",
    "total_tokens": 199,
    "total_price": "0.0022300",
    "currency": "USD",
    "latency": 1.3619857440062333
  }
}
```

6. End

(1) Input

```json
{
  "second_translation": "The Transformer serves as the cornerstone for large-scale language models."
}
```

(2) Output

```json
{
  "second_translation": "The Transformer serves as the cornerstone for large-scale language models."
}
```
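Adding up the four LLM node traces gives the overall cost of this one-sentence test run. The Python sketch below copies `total_tokens`, `total_price`, and `latency` from the traces above. The summed latency only covers time spent in LLM nodes; it is not necessarily the workflow's end-to-end run time.

```python
from decimal import Decimal

# (total_tokens, total_price, latency in seconds), copied from the four LLM node traces above
usages = {
    "LLM": (110, "0.0011600", 1.0182260260044131),
    "LLM 2": (186, "0.0001960", 0.516718350991141),
    "LLM 3": (239, "0.0002610", 0.8566757979860995),
    "LLM 4": (199, "0.0022300", 1.3619857440062333),
}

total_tokens = sum(tokens for tokens, _, _ in usages.values())
total_price = sum(Decimal(price) for _, price, _ in usages.values())
total_latency = sum(lat for _, _, lat in usages.values())
print(total_tokens, total_price, round(total_latency, 2))
```

The two nodes priced at 0.01/0.03 per 1,000 tokens (LLM and LLM 4) account for most of the cost, which is worth keeping in mind when choosing a model for each node.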

V. Testing the three-step translation workflow in an Agent

With the tool enabled:

With the tool disabled:

VI. Open questions

1. When does the Agent invoke the workflow?

As with other tools, the call is triggered based on the tool's description. Exactly how this works can only be confirmed by reading the source code.
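A common pattern for description-driven tool use is sketched below, under the assumption (not verified against Dify's source) that its Agent works similarly: the model sees each tool's name and description, decides whether one fits the request, and returns a tool call with JSON-encoded arguments, which the runtime then dispatches to the matching tool. `TOOLS`, `dispatch`, and `run_translation_workflow` are hypothetical names.

```python
import json
from typing import Any, Callable, Dict


def run_translation_workflow(input_text: str) -> Dict[str, str]:
    # Hypothetical stand-in for invoking the published workflow.
    return {"second_translation": f"<translation of {input_text}>"}


# Registry mapping tool names (as shown to the model) to callables.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "three_step_translation": run_translation_workflow,
}


def dispatch(tool_call: Dict[str, str]) -> Dict[str, Any]:
    """Route a model-issued tool call (name + JSON-encoded arguments) to the matching tool."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise KeyError(f"model requested unknown tool: {name}")
    args = json.loads(tool_call["arguments"])
    return TOOLS[name](**args)


# If the model judges that the tool description matches the request, it might emit:
call = {
    "name": "three_step_translation",
    "arguments": json.dumps({"input_text": "Transformer是大语言模型的基础。"}, ensure_ascii=False),
}
print(dispatch(call))
```

Under this pattern, the quality of the tool description directly determines how reliably the Agent chooses the workflow, which is consistent with the difference observed between enabling and disabling the tool.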

References

[1] Workflow: https://docs.dify.ai/v/zh-hans/guides/workflow

[2] A hands-on guide to connecting Dify to the WeChat ecosystem: https://docs.dify.ai/v/zh-hans/learn-more/use-cases/dify-on-wechat

[3] Jinja official documentation: https://jinja.palletsprojects.com/en/3.0.x/

[4] Jinja templates: https://jinja.palletsprojects.com/en/3.1.x/templates/

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
