DiffRhythm: Generate songs up to 4 minutes and 45 seconds in 10 seconds.

General Introduction

DiffRhythm is an open-source project developed by ASLP-lab (Audio, Speech and Language Processing Group, Northwestern Polytechnical University) that focuses on end-to-end music creation with AI. It is based on a latent diffusion model and can generate a complete song up to 4 minutes and 45 seconds long, including vocals and accompaniment, in as little as 10 seconds. Besides being fast, it is simple to use: you only need to provide lyrics and a style cue to generate high-quality music. DiffRhythm aims to address the pain points of traditional music generation models, namely high complexity, long generation times, and the ability to produce only short fragments. It is suitable for music creators, educators, and users in the entertainment industry.

ComfyUI workflow wrapper: https://github.com/billwuhao/ComfyUI_DiffRhythm

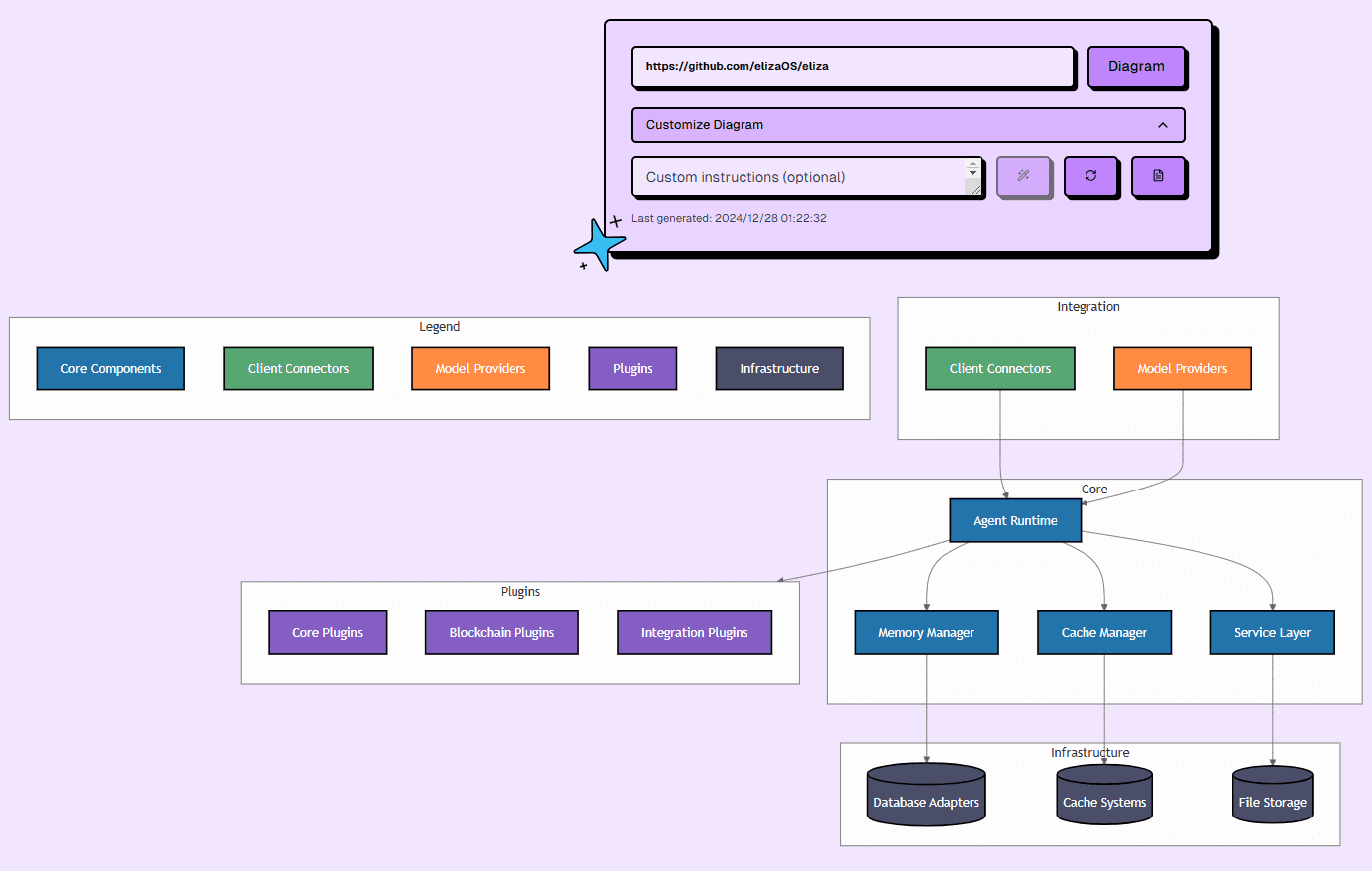

Online demo: https://huggingface.co/spaces/ASLP-lab/DiffRhythm

Function List

- End-to-end song generation: Enter lyrics and style cues to automatically generate complete songs with vocals and backing tracks.

- Fast inference: Generates a song of up to 4 minutes and 45 seconds in as little as 10 seconds.

- Synchronization of lyrics and melody: Ensures that the generated melody naturally follows the syllables and rhythm of the lyrics.

- Style Customization: Supports a variety of music style cues, so the output can be tailored to the user's needs.

- Open Source Support: Source code and models are provided, so users can customize and extend the functionality.

- High quality output: The music generated is of a high standard in terms of sound quality and listenability.
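The speed claim is easier to compare across tools as a real-time factor (seconds of audio produced per second of generation time). A song of 4 minutes 45 seconds is 285 seconds of audio, so generating it in about 10 seconds works out to roughly 28.5 times real time. The minimal sketch below only does that arithmetic; the function name is illustrative, and the 10-second figure is the headline "as little as" number quoted above, not a measurement on any particular hardware.

```python
def realtime_factor(audio_seconds: float, wall_clock_seconds: float) -> float:
    """Seconds of audio produced per second of wall-clock generation time."""
    if wall_clock_seconds <= 0:
        raise ValueError("wall_clock_seconds must be positive")
    return audio_seconds / wall_clock_seconds


# Figures quoted in this article: up to 4 min 45 s of audio in about 10 s.
song_length_s = 4 * 60 + 45  # 285 seconds
print(f"{song_length_s} s of audio at ~{realtime_factor(song_length_s, 10):.1f}x real time")
```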

Usage Guide

Installation process

DiffRhythm is an open-source project hosted on GitHub, and installing and running it requires some programming knowledge. The detailed installation steps are as follows:

- Environment preparation

- Make sure Python 3.8 or later is installed on your computer.

- Install Git to download the code from GitHub.

- A virtual environment (e.g. venv or conda) is recommended to avoid dependency conflicts.
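The three prerequisites above can be checked from Python itself before cloning. This is a hypothetical helper, not part of DiffRhythm: it compares sys.version_info with the 3.8 minimum stated here, looks for git on the PATH, and treats a differing sys.prefix/sys.base_prefix (a venv) or a CONDA_PREFIX variable (an activated conda environment) as a sign that a virtual environment is active.

```python
import os
import shutil
import sys


def check_environment(min_python=(3, 8)):
    """Return a list of human-readable issues; an empty list means all checks passed."""
    issues = []
    if sys.version_info < min_python:
        found = ".".join(map(str, sys.version_info[:3]))
        issues.append(f"Python {min_python[0]}.{min_python[1]}+ required, found {found}")
    if shutil.which("git") is None:
        issues.append("git was not found on PATH")
    # Inside a venv, sys.prefix differs from sys.base_prefix;
    # an activated conda environment sets CONDA_PREFIX.
    in_venv = sys.prefix != sys.base_prefix
    in_conda = "CONDA_PREFIX" in os.environ
    if not (in_venv or in_conda):
        issues.append("no virtual environment detected (venv or conda recommended)")
    return issues


for issue in check_environment():
    print("WARNING:", issue)
```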

- Download the project code

- Open a terminal and run the following command to clone the DiffRhythm repository:

git clone https://github.com/ASLP-lab/DiffRhythm.git

- Change into the project directory:

cd DiffRhythm


- Install dependencies

- The project typically provides a requirements.txt file listing the required Python libraries. Run the following command in the terminal to install them:

pip install -r requirements.txt

- If this file is missing, install core libraries such as PyTorch manually, following the dependency notes on the GitHub page or in the documentation.
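If installation seems to have worked but imports fail later, it can help to check which entries in requirements.txt are actually installed. The sketch below is a rough, hypothetical check built on the standard library's importlib.metadata (available from Python 3.8): it skips comments and pip options, takes the package name before any version specifier, and reports names it cannot find. Entries such as Git URLs, or packages whose installed name is spelled differently, may be reported as missing even when they are present.

```python
import re
from importlib import metadata


def missing_requirements(path="requirements.txt"):
    """Return requirement names from a requirements file that are not installed."""
    missing = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.split("#", 1)[0].strip()  # drop comments and whitespace
            if not line or line.startswith("-"):  # skip blanks and pip options like -r / -e
                continue
            # The name is everything before a version specifier, extra, marker, or URL.
            name = re.split(r"[<>=!~\[;@ ]", line, maxsplit=1)[0]
            try:
                metadata.version(name)
            except metadata.PackageNotFoundError:
                missing.append(name)
    return missing
```

Run it from the project directory, for example print(missing_requirements()), and install anything it lists.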


- Download the pre-trained model

- DiffRhythm's pre-trained models are typically hosted on Hugging Face or other cloud storage platforms.

- Visit ASLP-lab/DiffRhythm-base on Hugging Face and download the model file (e.g. cfm_model.pt).

- Place the downloaded model file in the folder specified in the project directory (usually described in the documentation, e.g. models/).
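The download-and-place step can also be scripted. The sketch below assumes the file name cfm_model.pt and the models/ folder from the example above (check the README for the actual names and location) and uses hf_hub_download from the huggingface_hub library, which is only imported when a download is actually requested. ensure_model is a hypothetical helper name.

```python
from pathlib import Path

# Assumptions taken from the example above; confirm the real names in the README.
REPO_ID = "ASLP-lab/DiffRhythm-base"
MODEL_FILE = "cfm_model.pt"
MODEL_DIR = "models"


def ensure_model(model_dir=MODEL_DIR, filename=MODEL_FILE, download=False):
    """Return the local checkpoint path, downloading it first if requested and missing."""
    path = Path(model_dir) / filename
    if path.is_file():
        return path
    if not download:
        raise FileNotFoundError(f"{path} not found; download it from {REPO_ID}")
    # Imported lazily so the existence check works without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    return Path(hf_hub_download(repo_id=REPO_ID, filename=filename, local_dir=model_dir))
```

Calling ensure_model(download=True) fetches the file into models/ only if it is not already there; without download=True it just reports whether the checkpoint is in place.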

- Verify the installation

- Run a simple test command in the terminal (see the README on GitHub for the exact command), for example:

python main.py --test

- If no errors are reported, the installation was successful.


How to use DiffRhythm

The core function of DiffRhythm is to generate songs by inputting lyrics and style cues. Below is the detailed operation procedure:

1. Preparation of inputs

- Lyrics: Write the lyrics (in Chinese, English, etc.) and save them as a text file (e.g. lyrics.txt), or enter them directly on the command line.

- Style cue: Prepare a short description of the style, e.g. "pop-rock", "classical piano" or "electronic dance music".
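Keeping lyrics in a UTF-8 text file lets Chinese and English lyrics both round-trip safely. The hypothetical helpers below write lyric lines to lyrics.txt and read them back with surrounding whitespace and blank lines removed, failing early on an empty file. This is an illustrative pre-processing step, not part of DiffRhythm; whether the model expects plain lines or a specific lyric format should be confirmed in the README.

```python
from pathlib import Path


def save_lyrics(lines, path="lyrics.txt"):
    """Write lyric lines to a UTF-8 text file, one lyric line per row."""
    path = Path(path)
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path


def load_lyrics(path="lyrics.txt"):
    """Read a lyrics file, dropping blank lines and surrounding whitespace."""
    text = Path(path).read_text(encoding="utf-8")
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines:
        raise ValueError(f"{path} contains no lyrics")
    return lines
```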

2. Song generation

- Open a terminal and enter the DiffRhythm project directory.

- Run the generation command. The script name and parameters below are a sample; check the official documentation for the exact ones:

python generate.py --lyrics "lyrics.txt" --style "pop rock" --output "song.wav"

- Parameter description:

--lyrics: specifies the path to the lyrics file.

--style: specifies the music style cue.

--output: specifies the path and name of the output audio file.

- After about 10 seconds (the exact time depends on your hardware), the program writes a WAV audio file to the specified path.
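When calling the generator from another Python program, passing the arguments as a list avoids shell-quoting problems with multi-word style cues such as "pop rock". This sketch simply reproduces the sample command above; the script name generate.py and the --lyrics, --style and --output flags come from that sample and may not match the current repository, so verify them against the README before relying on it. build_generate_cmd and generate_song are hypothetical helper names.

```python
import subprocess
import sys


def build_generate_cmd(lyrics_path, style, output_path, script="generate.py"):
    """Assemble the sample generation command as an argument list (no shell involved)."""
    # Script name and flags mirror the sample command in this guide; verify in the README.
    return [sys.executable, script,
            "--lyrics", str(lyrics_path),
            "--style", style,
            "--output", str(output_path)]


def generate_song(lyrics_path, style, output_path, cwd="."):
    """Run one generation from the project directory; raises CalledProcessError on failure."""
    cmd = build_generate_cmd(lyrics_path, style, output_path)
    subprocess.run(cmd, cwd=cwd, check=True)
    return output_path
```

For example, generate_song("lyrics.txt", "pop rock", "song.wav", cwd="DiffRhythm") runs the same command as the sample above from inside the cloned repository.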

3. Checking the output

- Once generation is complete, locate the song.wav file and play it with any audio player (such as Windows Media Player or VLC).

- Check that the lyrics are in sync with the melody and that the sound quality meets your expectations.
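Before listening, a quick programmatic check is to read the WAV header and confirm that the duration, sample rate and channel count look sensible (for example, a duration no longer than the 4 minutes 45 seconds maximum). The sketch below uses Python's standard wave module, which only handles PCM WAV files; if the output is written in 32-bit float format, wave will raise an error and a third-party library such as soundfile would be needed instead. wav_info is a hypothetical helper name.

```python
import wave


def wav_info(path):
    """Return (duration_seconds, sample_rate, channels) for a PCM WAV file."""
    with wave.open(str(path), "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        channels = wav.getnchannels()
    return frames / rate, rate, channels
```

For example, duration, rate, channels = wav_info("song.wav") followed by a check such as duration <= 285 catches truncated or empty outputs early.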

Featured Functions

- End-to-end song generation: DiffRhythm generates a complete song in one pass, without splitting it into segments. Users only need to provide lyrics and a style; no additional parameter tuning is required to get a finished track.

- Fast inference: Thanks to its non-autoregressive structure and latent diffusion, generation is very fast. Traditional models often take several minutes to generate a song, so DiffRhythm's roughly 10-second generation is a major efficiency gain.

- Synchronization of lyrics and melody: If the generated melody does not fit the lyrics, try adjusting the number of syllables in the lyrics or adding descriptions such as "clear rhythm" to the style cue.

- Style Customization: Try different style cues, such as "jazz", "folk" or "hip-hop", and observe how the results change. The more specific the style cue, the closer the generated music will be to what you expect.
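To make the syllable adjustment described above less of a guessing game for English lyrics, a crude per-line estimate helps spot lines that are much longer than their neighbours. The heuristic below counts vowel groups and discounts a silent final "e"; it is an illustrative approximation that miscounts some words (for example "rhythm"), and it is not how DiffRhythm aligns lyrics internally. For Chinese lyrics, the character count is a simpler proxy, since each character is one syllable.

```python
import re

VOWEL_GROUPS = re.compile(r"[aeiouy]+")


def estimate_syllables(word):
    """Very rough English syllable estimate based on vowel groups."""
    word = word.lower()
    if not re.search(r"[a-z]", word):
        return 0
    count = len(VOWEL_GROUPS.findall(word))
    # A trailing silent "e" (as in "time") usually adds no syllable; "-le" (as in "little") does.
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def line_syllables(line):
    """Sum the syllable estimates over the words in one lyric line."""
    return sum(estimate_syllables(w) for w in re.findall(r"[A-Za-z']+", line))


for lyric in ["City lights are calling", "I keep on walking through the night"]:
    print(line_syllables(lyric), lyric)  # prints 6 and 8
```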

Tips for use

- Optimize lyrics: Short lyrics are easier to match to a melody than long ones, so avoid overly complex phrasing.

- Batch generation: Write a simple script that calls generate.py in a loop to generate multiple songs in one run.

- Debugging output: If the results are unsatisfactory, check the log files (if any) or adjust the model parameters (e.g., the number of diffusion steps) as described in the GitHub documentation.
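The batch-generation tip could look like the following sketch. It calls the script once per (lyrics file, style cue) pair and derives an output file name from the style. The script name and flags are copied from the sample command earlier in this guide and are assumptions about the current repository; batch_generate is a hypothetical helper, and dry_run=True returns the commands without running them so you can inspect them first.

```python
import re
import subprocess
import sys
from pathlib import Path


def batch_generate(jobs, out_dir="outputs", script="generate.py", dry_run=False):
    """Run the generation script once per (lyrics_path, style) pair.

    Returns the argument lists that were run (or, with dry_run=True, would be run).
    """
    out = Path(out_dir)
    if not dry_run:
        out.mkdir(parents=True, exist_ok=True)
    commands = []
    for i, (lyrics_path, style) in enumerate(jobs, start=1):
        # Turn the style cue into a file-name-safe slug, e.g. "pop rock" -> "pop-rock".
        slug = re.sub(r"[^a-z0-9]+", "-", style.lower()).strip("-") or "song"
        output = out / f"{i:02d}_{slug}.wav"
        cmd = [sys.executable, script,
               "--lyrics", str(lyrics_path),
               "--style", style,
               "--output", str(output)]
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, check=True)  # stops the batch on the first failure
    return commands


# Usage (hypothetical file names):
# batch_generate([("lyrics.txt", "pop rock"), ("lyrics.txt", "jazz")])
```

Because check=True is used, the loop stops at the first failed run; use check=False and inspect the returncode instead if you would rather skip failures and continue.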

Caveats

- Hardware requirements: Generation is computationally demanding, so a computer with a GPU is recommended for faster generation.

- Open-source contributions: If you are comfortable with programming, you can fork the project, improve the code or add new features, and submit a pull request.

- Copyright notice: Generated music may carry copyright risks due to stylistic similarity to existing works, so it is recommended for study or non-commercial use.
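To confirm that a GPU is actually visible before a long batch run, you can ask PyTorch directly. This minimal sketch assumes DiffRhythm runs on PyTorch with CUDA (PyTorch is named as a core dependency in the installation steps) and only checks for CUDA devices; other accelerators are not covered. gpu_available and pick_device are hypothetical helper names.

```python
def gpu_available() -> bool:
    """Return True if PyTorch is installed and reports a usable CUDA device."""
    try:
        import torch
    except ImportError:
        return False
    return torch.cuda.is_available()


def pick_device() -> str:
    """Choose "cuda" when a GPU is visible, otherwise fall back to the slower CPU."""
    return "cuda" if gpu_available() else "cpu"


print("Generation device:", pick_device())
```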

With these steps, you can quickly get started with DiffRhythm and experience the full process of turning lyrics into a complete song!

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
