DeepSeek-RAG-Chatbot: a locally running DeepSeek RAG chatbot

General Introduction

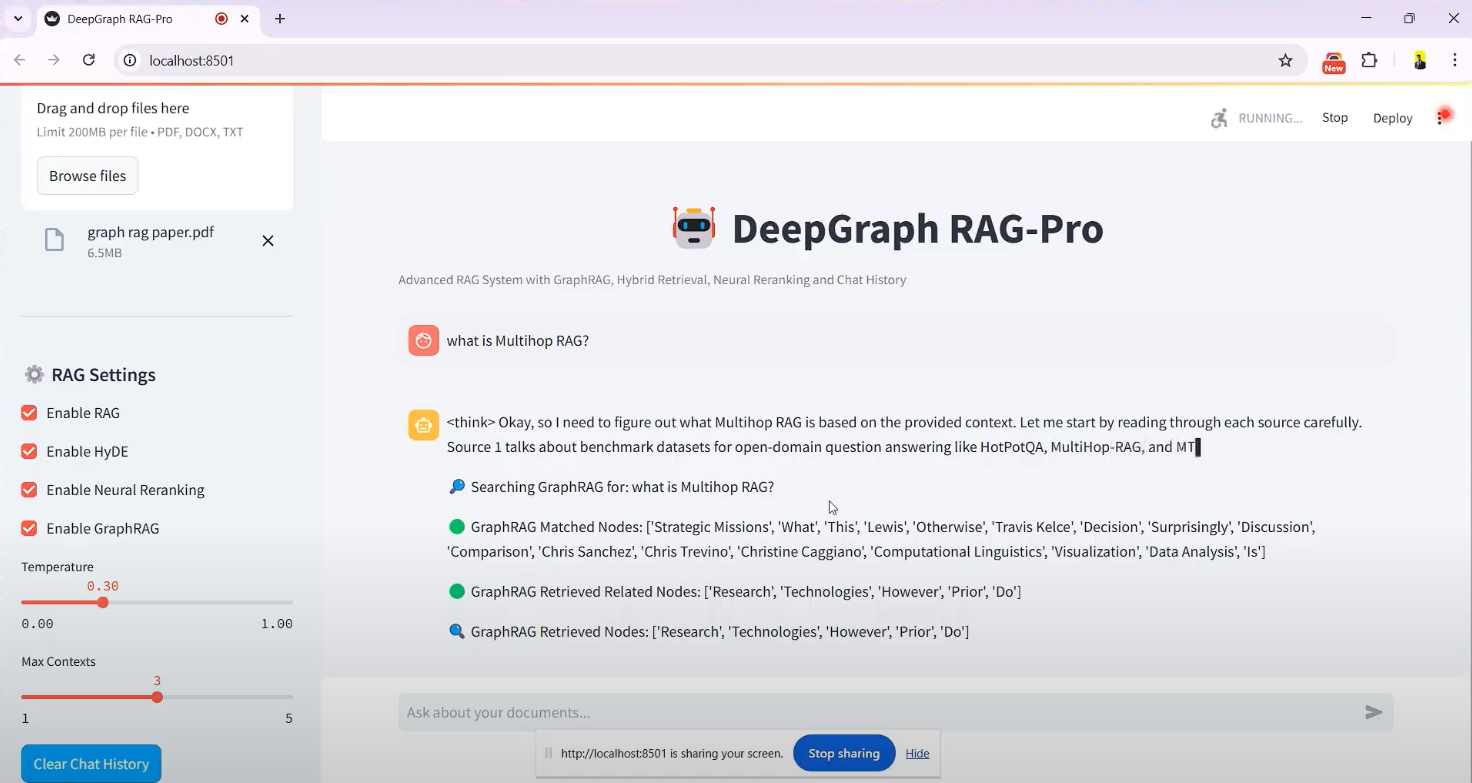

DeepSeek-RAG-Chatbot is an open source chatbot project built on the DeepSeek R1 model, hosted on GitHub and created by developer SaiAkhil066. It uses Retrieval Augmented Generation (RAG) to let users upload documents (such as PDF, DOCX or TXT files) and run document retrieval and question answering entirely on the local machine. The project combines hybrid search (BM25 + FAISS), neural reranking and a knowledge graph (GraphRAG) to extract contextually accurate and relevant information from documents. It suits scenarios that require privacy protection or offline use, such as personal knowledge management and enterprise document processing. The project ships with Docker support and a Streamlit interface, which keeps installation and first use simple.

Function List

- Document Uploading and Processing: Supports uploading PDF, DOCX, TXT and other formats, automatically splitting documents and generating vector embeddings.

- Hybrid search mechanism: Combines BM25 and FAISS to quickly retrieve relevant content from documents.

- Knowledge Graph Support (GraphRAG): Builds a knowledge graph of documents to understand entity relationships and improve the contextual accuracy of answers.

- Neural reranking: Reranks the retrieval results with a cross-encoder model so that the most relevant information is shown first.

- Query Expansion (HyDE): Generates hypothetical answers to expand user queries and improve retrieval recall.

- Local model execution: Uses Ollama to deploy DeepSeek R1 and other models locally, which keeps data private.

- Real-time Response Streaming: Streaming output is provided so that users can see the generated results instantly.

- Docker Support: Simplify installation and operation with Docker containerized deployment.

- Streamlit Interface: Intuitive graphical interface for easy file uploading and interactive chat.

Usage Guide

Installation process

DeepSeek-RAG-Chatbot runs locally and requires some environment setup. The detailed installation steps follow.

Prerequisites

- Operating system: Windows, macOS, or Linux.

- Hardware requirements: At least 8GB of RAM (16GB recommended), plus a CUDA-enabled graphics card if GPU acceleration is used.

- Software dependencies: Python 3.8+, Git, Docker (optional).

Step 1: Clone the project

- Open a terminal and run the following command to clone the GitHub repository:

git clone https://github.com/SaiAkhil066/DeepSeek-RAG-Chatbot.git

- Go to the project directory:

cd DeepSeek-RAG-Chatbot

Step 2: Setting Up the Python Environment

- Create a virtual environment:

python -m venv venv

- Activate the virtual environment:

- Windows:

venv\Scripts\activate

- macOS/Linux:

source venv/bin/activate

- Upgrade pip and install dependencies:

pip install --upgrade pip

pip install -r requirements.txt

Dependencies include streamlit, langchain, and faiss-gpu (if a GPU is available), among others.

Step 3: Install and Configure Ollama

- Download and install Ollama (visit ollama.com for the installation package).

- Pull the DeepSeek R1 model (7B parameters by default; other versions such as 1.5B or 32B are available as needed) and the nomic-embed-text embedding model:

ollama pull deepseek-r1:7b

ollama pull nomic-embed-text

- Ensure that the Ollama service is running. By default it listens on localhost:11434.
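A quick way to confirm this step is to ask the local Ollama service which models it has. The sketch below is not part of the project; it assumes the default address above and uses Ollama's `/api/tags` endpoint, which lists locally available models. The function names and the prefix matching on `:latest` style tags are illustrative assumptions.

```python
import http.client
import json
import urllib.error
import urllib.request
from typing import List, Optional, Sequence

OLLAMA_URL = "http://localhost:11434"  # default address mentioned in the article


def list_ollama_models(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[List[str]]:
    """Return the names of locally available models, or None if Ollama is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (urllib.error.URLError, OSError, ValueError, http.client.HTTPException):
        return None
    return [m.get("name", "") for m in payload.get("models", [])]


def missing_models(
    available: Sequence[str],
    required: Sequence[str] = ("deepseek-r1:7b", "nomic-embed-text"),
) -> List[str]:
    """Return the required models that do not appear in the available list."""

    def present(name: str) -> bool:
        # Assumption: a model pulled without a tag is reported as "name:latest".
        return any(a == name or a.startswith(name + ":") for a in available)

    return [name for name in required if not present(name)]


if __name__ == "__main__":
    models = list_ollama_models()
    if models is None:
        print("Ollama does not appear to be running on", OLLAMA_URL)
    else:
        print("Missing models:", missing_models(models) or "none")
```

If the script reports missing models, rerunning the matching `ollama pull` command should resolve it.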

Step 4: (Optional) Docker Deployment

If you don't want to configure your environment manually, you can use Docker:

- Install Docker (refer to docker.com).

- Run the following in the project root directory:

docker-compose up

- Docker automatically pulls and runs the Ollama and chatbot services. Once they are up, visit http://localhost:8501.

Step 5: Launch the application

- Run Streamlit in a virtual environment:

streamlit run app.py

- The browser automatically opens http://localhost:8501 and shows the chat screen.

Features in Detail

Function 1: Uploading Documents

- Open the interface: Once the app has started, the Streamlit interface shows an "Upload Documents" sidebar on the left side of the screen.

- Select a file: Click the "Browse files" button to select a local PDF, DOCX or TXT file.

- Document processing: Once a file is uploaded, the system automatically splits the document into smaller chunks, generates vector embeddings, and stores them in FAISS. Processing time depends on the file size and usually ranges from a few seconds to a few minutes.

- Confirmation: The message "File processing complete" is displayed in the sidebar.
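The splitting step can be pictured with a minimal sketch. It assumes fixed-size character windows with a small overlap; the function name, chunk size and overlap are hypothetical and are not taken from the project, which may split on different boundaries.

```python
from typing import List


def split_into_chunks(text: str, chunk_size: int = 500, overlap: int = 50) -> List[str]:
    """Split text into overlapping character windows (illustrative values only)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    step = chunk_size - overlap  # how far each new window advances
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunk = text[start:start + chunk_size]
        if chunk.strip():  # skip windows that contain only whitespace
            chunks.append(chunk)
        if start + chunk_size >= len(text):
            break  # the current window already reached the end of the text
    return chunks


if __name__ == "__main__":
    for piece in split_into_chunks("abcdefghij", chunk_size=4, overlap=1):
        print(piece)
```

The overlap keeps a sentence that straddles a boundary visible in both neighbouring chunks, at the cost of storing some text twice.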

Function 2: Questioning and Searching

- Enter a question: Type a question in the chat box in either Chinese or English, e.g. "What is the purpose of GraphRAG as mentioned in the documentation?".

- Retrieval process:

- The system uses BM25 and FAISS to retrieve relevant document fragments.

- GraphRAG analyzes entity relationships between fragments.

- Neural reranking optimizes the order of results.

- HyDE expands the query to cover more relevant content.

- Answer generation: DeepSeek R1 generates an answer from the retrieved results and streams it to the interface, where it appears incrementally.

- Typical example: Asking "What is hybrid search?" might return "Hybrid search combines BM25 and FAISS: BM25 handles keyword matching, while FAISS quickly locates content through vector similarity".
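To make the hybrid retrieval idea concrete, here is a small self-contained sketch that fuses a keyword score with a similarity score. It is not the project's code: BM25 is implemented by hand with commonly used default parameters, a toy bag-of-words cosine similarity stands in for the FAISS embedding search, and the weight `alpha` and all function names are assumptions.

```python
import math
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return text.lower().split()


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Keyword relevance of each document to the query (Okapi BM25)."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n if n else 0.0
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if tf[term] == 0:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            denom = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores


def cosine_scores(query: str, docs: List[str]) -> List[float]:
    """Stand-in for vector search: cosine similarity of word-count vectors."""
    q = Counter(tokenize(query))
    q_norm = math.sqrt(sum(v * v for v in q.values()))
    scores = []
    for doc in docs:
        d = Counter(tokenize(doc))
        d_norm = math.sqrt(sum(v * v for v in d.values()))
        dot = sum(q[t] * d[t] for t in q)
        scores.append(dot / (q_norm * d_norm) if q_norm and d_norm else 0.0)
    return scores


def min_max(scores: List[float]) -> List[float]:
    """Rescale scores to [0, 1] so the two signals are comparable."""
    if not scores:
        return []
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0] * len(scores)
    return [(s - lo) / (hi - lo) for s in scores]


def hybrid_rank(query: str, docs: List[str], alpha: float = 0.5) -> List[int]:
    """Return document indices ordered by the weighted sum of both scores."""
    keyword = min_max(bm25_scores(query, docs))
    vector = min_max(cosine_scores(query, docs))
    fused = [alpha * k + (1 - alpha) * v for k, v in zip(keyword, vector)]
    return sorted(range(len(docs)), key=lambda i: fused[i], reverse=True)


if __name__ == "__main__":
    corpus = [
        "hybrid search combines bm25 and faiss",
        "docker simplifies deployment",
        "streamlit provides the chat interface",
    ]
    print(hybrid_rank("hybrid search", corpus))
```

In a real pipeline the top results of such a fusion would then be passed to the reranking stage before the model generates an answer.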

Function 3: Knowledge Graph Application

- Enable GraphRAG: Enabled by default, no additional action required.

- Ask complex questions: For example, "What is the partnership between Company A and Company B?".

- Results: The system returns not only text but also relational answers based on the knowledge graph, e.g., "Company A and Company B signed a technical cooperation agreement in 2023".
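A relational answer of this kind comes from looking up edges between entities. The sketch below shows the idea with a tiny in-memory graph of (subject, relation, object) triples; the class, its methods and the example triple are hypothetical illustrations, and the project may build and query its graph quite differently.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class TinyKnowledgeGraph:
    """Stores relations between entities and answers 'how are A and B related?'."""

    def __init__(self) -> None:
        # entity -> list of (relation, other entity)
        self._edges: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        # Relations are stored in both directions so either entity can be the start.
        self._edges[subject].append((relation, obj))
        self._edges[obj].append((relation, subject))

    def relations_between(self, a: str, b: str) -> List[str]:
        return [rel for rel, other in self._edges.get(a, []) if other == b]


if __name__ == "__main__":
    kg = TinyKnowledgeGraph()
    kg.add("Company A", "signed a technical cooperation agreement in 2023 with", "Company B")
    print(kg.relations_between("Company A", "Company B"))
```

In a document pipeline, the triples would be extracted from the uploaded text, and the matching relations would be added to the context given to the model.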

Function 4: Adjusting Models and Parameters

- Change the model: In the .env file, modify the MODEL parameter, e.g. change it to deepseek-r1:1.5b.

- Optimize performance: If the hardware supports it, install faiss-gpu. With GPU acceleration enabled, retrieval speeds can increase by up to 3x.
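As an illustration of how a MODEL setting in a .env file can select the model, the sketch below parses .env-style text and falls back to the 7B default mentioned earlier. The parser and function names are hypothetical; how the project actually loads its configuration is not described here.

```python
from typing import Dict

DEFAULT_MODEL = "deepseek-r1:7b"  # default model size named in the article


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, ignoring blank lines and # comments."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def select_model(env_text: str) -> str:
    """Return the configured MODEL, or the default when it is missing or empty."""
    return parse_env(env_text).get("MODEL") or DEFAULT_MODEL


if __name__ == "__main__":
    print(select_model("MODEL=deepseek-r1:1.5b\n"))
    print(select_model("# MODEL not set\n"))
```

A smaller model such as the 1.5B variant trades answer quality for lower memory use, which can help on machines near the 8GB minimum.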

Tips for use

- Multi-Document Support: Multiple files can be uploaded at the same time and the system will integrate the content to answer questions.

- Clear questions: Be as specific as possible when asking questions and avoid vague statements to improve the accuracy of the answers.

- Check logs: If you encounter an error, check the terminal logs to troubleshoot it. Common causes include a model that has not been downloaded or a port that is already in use.
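When the logs suggest a port conflict, a short check can tell whether something is already listening on the ports used in this guide (8501 for Streamlit, 11434 for Ollama). This is a generic sketch using the standard library, with a hypothetical function name; it is not part of the project.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    for name, port in (("Streamlit", 8501), ("Ollama", 11434)):
        state = "in use" if port_in_use(port) else "free"
        print(f"{name} port {port}: {state}")
```

Before launching, port 8501 should be free and port 11434 should be in use by Ollama.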

Caveats

- Privacy: Running locally requires no internet connection, and data is not uploaded to the cloud.

- Resource usage: Large models and high-dimensional vector computations need a lot of memory, so closing unrelated programs is recommended.

- Model updates: Run ollama pull regularly to get the latest version of the DeepSeek model.

After completing the steps above, users can interact with DeepSeek-RAG-Chatbot through a browser and get efficient question answering over their documents.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
