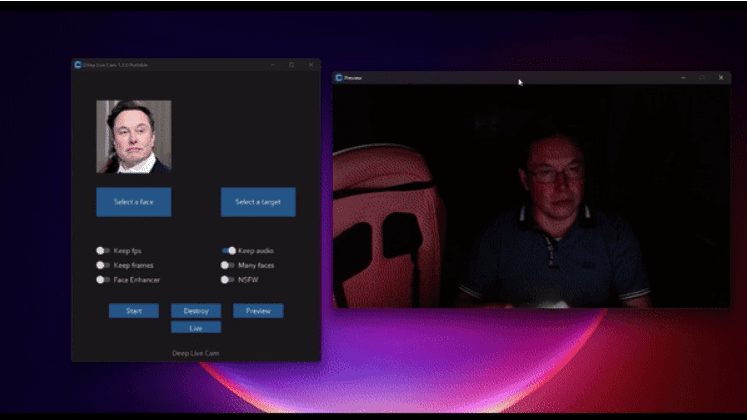

Deep Live Cam: an open source AI tool for real-time face swapping in live video from a single photo

General Introduction

Deep Live Cam is an open source AI tool for real-time face replacement and deepfake video generation from a single photo. Using deep learning models, it can replace a face in real time during live streaming or video calls, which can help protect the user's privacy or simply add entertainment value. Deep Live Cam supports several execution platforms, including CPU, NVIDIA CUDA, and Apple Silicon, and is suited to fields such as entertainment, education, art creation, and advertising.

Function List

- Real-time face replacement: Swaps the face in a video in real time using a single source photo.

- Multi-platform support: compatible with mainstream operating systems and hardware platforms.

- GPU Acceleration: Supports NVIDIA CUDA acceleration for faster processing.

- Content check mechanism: A built-in anti-abuse check helps prevent the technology from being used on inappropriate content.

- Open source code: The project code is hosted on GitHub and can be freely downloaded and modified by users.

Usage Guide

Installation process

- Environment preparation:

- Ensure that Python 3.10 or later is installed.

- Install pip, git, ffmpeg and other development tools.

- For Windows users, you will also need to install the Visual Studio 2022 runtime.
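The prerequisites above can be checked before continuing. The following is a minimal sketch rather than part of Deep Live Cam itself; the executable names `pip`, `git`, and `ffmpeg` are assumed to be the usual command names on your system, and the Visual Studio runtime on Windows is not checked.

```python
import shutil
import sys

# Minimum Python version stated in the installation notes.
MIN_PYTHON = (3, 10)
# Tools named in the article; the executable names are an assumption.
REQUIRED_TOOLS = ("pip", "git", "ffmpeg")


def python_ok(version=None, minimum=MIN_PYTHON):
    """Return True if the (major, minor) version meets the minimum."""
    if version is None:
        version = sys.version_info[:2]
    return tuple(version[:2]) >= tuple(minimum)


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on PATH."""
    return [name for name in tools if which(name) is None]


print("Python version OK:", python_ok())
print("Missing tools:", missing_tools() or "none")
```

If a tool is reported as missing, install it with your system's package manager and run the check again.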

- Cloning and configuration:

- Clone Deep-Live-Cam's GitHub repository to your local environment:

git clone https://github.com/hacksider/Deep-Live-Cam.git

- Download the necessary model files and place them in the directory specified by the project documentation:

- GFPGANv1.4

- inswapper_128_fp16.onnx
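A quick way to confirm that both model files are in place is sketched below. The directory `Deep-Live-Cam/models` is a hypothetical location used only for illustration, and the `.pth` extension on the GFPGAN file is an assumption, since the list above gives the name without one; follow the project documentation for the actual path and file names.

```python
from pathlib import Path

# File names from the list above. GFPGANv1.4 is listed without an
# extension; ".pth" is an assumption made for this sketch.
MODEL_FILES = ("GFPGANv1.4.pth", "inswapper_128_fp16.onnx")


def missing_models(models_dir, names=MODEL_FILES):
    """Return the model files that are absent from models_dir."""
    folder = Path(models_dir)
    return [name for name in names if not (folder / name).is_file()]


# Hypothetical location; use the directory the documentation specifies.
print("Missing model files:", missing_models("Deep-Live-Cam/models") or "none")
```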


- Dependency installation:

- Use pip to install the libraries the project depends on. A virtual environment is recommended to avoid dependency conflicts with other Python projects:

pip install -r requirements.txt
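The recommended virtual environment can be created with Python's standard `venv` module. This is a sketch under assumptions: the environment directory name (for example `.venv`) is arbitrary, and the helper names are introduced here for illustration only.

```python
import sys
import venv
from pathlib import Path


def env_python(env_dir):
    """Path to the interpreter inside a virtual environment."""
    sub = "Scripts/python.exe" if sys.platform == "win32" else "bin/python"
    return Path(env_dir) / sub


def create_env(env_dir):
    """Create a virtual environment that includes pip."""
    venv.create(env_dir, with_pip=True)
    return env_python(env_dir)


def pip_install_command(env_dir, requirements="requirements.txt"):
    """Build the pip command that installs into that environment."""
    return [str(env_python(env_dir)), "-m", "pip", "install", "-r", requirements]
```

From inside the cloned repository, call `create_env(".venv")` once, then pass the result of `pip_install_command(".venv")` to `subprocess.run(..., check=True)` to install the dependencies into the isolated environment.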


- Running the program:

- Start Deep-Live-Cam from the command line:

python run.py

- Select the source image and the target video to observe the real-time face-swapping effect.


- GPU acceleration:

- If you have an NVIDIA GPU, you can speed up face swapping by installing the CUDA Toolkit, configuring the appropriate environment variables, and switching to the GPU build of ONNX Runtime:

pip uninstall onnxruntime onnxruntime-gpu

pip install onnxruntime-gpu==1.16.3

python run.py --execution-provider cuda
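Before passing the CUDA flag, it can help to confirm that the installed ONNX Runtime package actually offers the CUDA provider. The sketch below uses `onnxruntime.get_available_providers()`, which reports the providers built into the installed package; it does not prove that the GPU driver and CUDA libraries load correctly. The helper names are hypothetical.

```python
def available_providers():
    """Return ONNX Runtime's provider names, or [] if it is not installed."""
    try:
        import onnxruntime
    except ImportError:
        return []
    return onnxruntime.get_available_providers()


def run_command(providers):
    """Build the launch command, adding the CUDA flag only when offered."""
    command = ["python", "run.py"]
    if "CUDAExecutionProvider" in providers:
        command += ["--execution-provider", "cuda"]
    return command


print(" ".join(run_command(available_providers())))
```

If the printed command lacks `--execution-provider cuda`, the CPU-only `onnxruntime` package is probably still installed.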


Usage Process

- Select the source image and target video:

- After the program starts, select an image containing the desired face and a target image or video.

- Start processing:

- Click the "Start" button. The program begins processing and displays the face-swap effect in real time.

- View the output:

- Once the processing is complete, open File Explorer and navigate to the selected output directory to view the generated face swap video.

- Camera mode:

- Select a face, click the "Live" button, and wait a few seconds for the preview to appear.

- Stream the preview using a screen recording tool such as OBS.

Notes

- When running the program for the first time, you may need to download a model file of about 300 MB. The download time depends on the speed of your internet connection.

- If you want to change faces, just select another picture and the preview mode will restart.
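As a rough guide to the first-run download mentioned above, the transfer time is the file size in bits divided by the connection speed. The speeds below are illustrative assumptions, and real downloads are usually somewhat slower than this ideal figure.

```python
def download_seconds(size_mb, speed_mbit_per_s):
    """Estimate transfer time: megabytes * 8 bits / megabits per second."""
    return size_mb * 8 / speed_mbit_per_s


# Illustrative connection speeds for a file of about 300 MB.
for speed in (10, 50, 100):
    print(f"{speed} Mbit/s: about {download_seconds(300, speed):.0f} s")
```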

Resources

https://github.com/hacksider/Deep-Live-Cam

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
