Interpreting the key parameters of large models: Token, context length, and output limits

Large language models (LLMs) play an increasingly important role in artificial intelligence. To understand and apply LLMs well, we need a solid grasp of their core concepts. This article focuses on three of them (Token, Maximum Output Length, and Context Length) to help readers clear away common points of confusion and use LLM technology more effectively.

Token: the basic processing unit of an LLM

A token is the basic unit a large language model (LLM) uses to process natural-language text; it can be thought of as the smallest semantic unit the model can recognize and process. Although a token can be loosely compared to a "word" or "phrase", it is more accurately described as the building block on which the model bases its text analysis and generation.
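To make the idea of splitting text into tokens concrete, here is a toy sketch. Production tokenizers are usually learned subword tokenizers such as byte-pair encoding (BPE) with very large vocabularies; the tiny hand-written vocabulary and the greedy longest-match rule below are hypothetical simplifications chosen only for illustration, not how any particular model tokenizes text.

```python
def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split `text` into pieces by greedy longest match against a toy vocabulary.

    Characters not covered by any vocabulary entry fall back to single-character
    tokens, loosely mimicking how real tokenizers never fail on unknown input.
    """
    max_len = max((len(v) for v in vocab), default=1)
    tokens: list[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a match (or one char).
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab or length == 1:
                tokens.append(piece)
                i += length
                break
    return tokens


# Hypothetical vocabulary for this demo only.
toy_vocab = {"token", "ization", "iz", "ation", " ", "is", "fun"}
print(greedy_tokenize("tokenization is fun", toy_vocab))
# ['token', 'ization', ' ', 'is', ' ', 'fun']
```

Note that the word "tokenization" becomes two tokens here, which is why token counts and word counts differ.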

In practice, there is a rough conversion ratio between tokens and characters. Generally speaking:

- 1 English character ≈ 0.3 tokens

- 1 Chinese character ≈ 0.6 tokens

Therefore, as a rough rule of thumb, one Chinese character can usually be treated as about one token.
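The ratios above can be turned into a quick budgeting helper. This is a minimal sketch, assuming the 0.3 and 0.6 ratios quoted above and treating every non-Chinese character (letters, digits, spaces, punctuation) at the English rate; only the model's own tokenizer gives exact counts. The 0.6 ratio yields a lower figure than the one-character-per-token rule of thumb, so the rule of thumb errs on the safe side when budgeting.

```python
def estimate_tokens(text: str) -> float:
    """Very rough token estimate from character counts (a heuristic, not a tokenizer)."""
    # Count characters in the CJK Unified Ideographs block (U+4E00 to U+9FFF).
    cjk = sum(1 for ch in text if "\u4e00" <= ch <= "\u9fff")
    other = len(text) - cjk
    # Assumed ratios: ~0.6 tokens per Chinese character, ~0.3 per other character.
    return 0.6 * cjk + 0.3 * other


print(round(estimate_tokens("Hello"), 2))    # 1.5
print(round(estimate_tokens("你好世界"), 2))  # 2.4
```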

As shown in the figure above, when we input text into an LLM, the model first splits the text into a sequence of tokens and then processes that sequence to generate the desired output. The following figure illustrates the tokenization process:

Maximum output length (output limit): the upper limit on a single generation

Taking the DeepSeek series as an example, we can see that each model sets a limit on its maximum output length.

In the figure above, the deepseek-chat model corresponds to DeepSeek-V3, while the deepseek-reasoner model corresponds to DeepSeek-R1. Both the reasoning model R1 and the chat model V3 have a maximum output length of 8K.

Using the approximation that one Chinese character is roughly one token, a maximum output length of 8K means the model can generate at most about 8,000 Chinese characters in a single interaction.

The concept of maximum output length is relatively intuitive and easy to understand; it limits the maximum amount of text that the model can produce in each response. Once this limit is reached, the model will not be able to continue generating more content.
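The stopping rule can be pictured as a simple decoding loop. The sketch below is a simulation, not a real model: `next_token` is a hypothetical stand-in that returns the next token, or `None` when the model would end its answer on its own. The two return labels mirror the `finish_reason` values (`"stop"` and `"length"`) reported by OpenAI-compatible chat APIs such as DeepSeek's, where `"length"` signals that the reply was cut off by the token limit.

```python
from typing import Callable, Optional


def generate(next_token: Callable[[list[str]], Optional[str]],
             max_tokens: int) -> tuple[list[str], str]:
    """Simulated decoding loop that enforces a maximum output length."""
    output: list[str] = []
    while len(output) < max_tokens:
        token = next_token(output)
        if token is None:          # the model ended its answer naturally
            return output, "stop"
        output.append(token)
    return output, "length"        # hit the cap; the answer may be incomplete


def wants_ten_tokens(so_far: list[str]) -> Optional[str]:
    """Hypothetical model that would like to write exactly 10 tokens."""
    return "w" if len(so_far) < 10 else None


print(generate(wants_ten_tokens, max_tokens=4)[1])   # length
print(generate(wants_ten_tokens, max_tokens=20)[1])  # stop
```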

Context Window: the extent of the model's memory

Context length, also known in the technical field as the Context Window, is a key parameter for understanding LLM capabilities. Let us continue with the DeepSeek models as an example:

As shown in the figure, both the DeepSeek reasoning model and the chat model have a Context Window of 64K. So what exactly does a context length of 64K mean?

To understand context length, we first need to define it. The Context Window is the maximum total number of tokens a Large Language Model (LLM) can process in a single inference. This total consists of two parts:

(1) Input: all user-supplied content, such as the prompt, the conversation history, and any additional documents.

(2) Output: the response the model is currently generating and returning.

In short, a single interaction with an LLM, from the moment we submit a question to the moment the model finishes its response, is called a "single inference". During this inference, the total of all input and output text (counted in tokens) cannot exceed the Context Window limit. For the DeepSeek models this limit is 64K tokens, which corresponds to more than 60,000 Chinese characters.
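Written as a formula, with $T_{\text{in}}$ the input tokens (prompt, history, and documents), $T_{\text{out}}$ the output tokens, and $W$ the Context Window, the constraint for a single inference is:

$$
T_{\text{in}} + T_{\text{out}} \le W, \qquad W_{\text{DeepSeek}} = 64\text{K}
$$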

You may be wondering: is there also a limit on the input? The answer is yes. As mentioned above, the model has a context length of 64K and a maximum output length of 8K. Therefore, if the full 8K output allowance is reserved, the maximum number of input tokens in a single round of conversation is in theory the context length minus the maximum output length, i.e., 64K - 8K = 56K. In summary, in a single question-and-answer interaction, the user can input at most about 56,000 Chinese characters, and the model can output at most about 8,000.
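The subtraction above, and its reverse (how large `max_tokens` can be for a given prompt), can be written as two small helpers. This is a sketch under stated assumptions: "K" is taken to mean 1,024 tokens, and the 64K/8K figures are the DeepSeek values quoted in this article; check your provider's documentation for the exact numbers.

```python
K = 1024  # assumption: "K" = 1,024 tokens

CONTEXT_WINDOW = 64 * K   # DeepSeek context length quoted above
MAX_OUTPUT = 8 * K        # DeepSeek maximum output length quoted above


def max_input_tokens(context_window: int, reserved_output: int) -> int:
    """Largest prompt that still leaves `reserved_output` tokens for the reply."""
    if reserved_output > context_window:
        raise ValueError("reserved output cannot exceed the context window")
    return context_window - reserved_output


def max_output_tokens(context_window: int, max_output: int, input_tokens: int) -> int:
    """Largest usable max_tokens value for a prompt of `input_tokens` tokens."""
    remaining = context_window - input_tokens
    if remaining <= 0:
        raise ValueError("the input alone fills the context window")
    # The reply is bounded both by the space left and by the model's output cap.
    return min(remaining, max_output)


print(max_input_tokens(CONTEXT_WINDOW, MAX_OUTPUT) // K)           # 56
print(max_output_tokens(CONTEXT_WINDOW, MAX_OUTPUT, 60 * K) // K)  # 4
```

The `min` in the second helper reflects that, for a model with an 8K output cap, even a very short prompt cannot receive more than 8K output tokens.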

How context works in multi-round dialogs

In practice, we often have multi-round conversations with LLMs. So how does a multi-round dialog handle context? Take DeepSeek as an example: when a multi-round dialog is initiated, the server does not save the user's conversation context by default. This means that for each new request, the user must concatenate all of the content, including the conversation history, and pass it to the API as input.

To illustrate the mechanics of a multi-round dialog more clearly, here is sample Python code for a multi-round dialog using the DeepSeek API:

```python
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

# Round 1: the conversation so far is just the first question
messages = [{"role": "user", "content": "What's the highest mountain in the world?"}]
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages,
)
# The server keeps no conversation state, so store the reply in our own history
messages.append({"role": "assistant", "content": response.choices[0].message.content})
print(f"Messages Round 1: {messages}")

# Round 2: add the new question after the full history and resend everything
messages.append({"role": "user", "content": "What is the second?"})
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages,
)
messages.append({"role": "assistant", "content": response.choices[0].message.content})
print(f"Messages Round 2: {messages}")
```

In the first round, the messages parameter passed to the API contains:

```json
[
  {"role": "user", "content": "What's the highest mountain in the world?"}
]
```

For the second round of requests, you need to:

(1) Append the model's output from the previous round to the end of the messages list;

(2) Append the user's new question to the end of the messages list as well.

So, in the second round, the messages parameter passed to the API contains:

```json
[
  {"role": "user", "content": "What's the highest mountain in the world?"},
  {"role": "assistant", "content": "The highest mountain in the world is Mount Everest."},
  {"role": "user", "content": "What is the second?"}
]
```

In essence, then, a multi-round dialog works by placing the historical conversation records (both user inputs and model outputs) before the latest user input and submitting the complete concatenated conversation to the LLM in one request.

This means that in a multi-round dialog, the space available in the Context Window is not a constant 64K for every round; it shrinks as the number of rounds grows. For example, if the inputs and outputs of the first round use a total of 32K tokens, only 32K tokens of the Context Window remain available in the second round. The principle is the same as the context length limit analyzed above.
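Because the whole history is resent every round, the free space in the window shrinks round by round. The sketch below tracks it with made-up token counts, assuming no truncation happens; the round sizes are illustrative only.

```python
def free_space_per_round(context_window: int,
                         rounds: list[tuple[int, int]]) -> list[int]:
    """Return the window space still free at the start of each round.

    Each round is (new_input_tokens, output_tokens). The history sent with a
    round is every earlier input and output, so it permanently uses up space.
    """
    used = 0
    free = []
    for new_input, output in rounds:
        free.append(context_window - used)
        used += new_input + output
    return free


# Units are thousands of tokens ("K"); the numbers are hypothetical.
print(free_space_per_round(64, [(16, 16), (8, 8), (4, 4)]))  # [64, 32, 16]
```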

You may still wonder: if this is how it works and each round's inputs and outputs are long, wouldn't just a few rounds be enough to exceed the model's limit? Yet in practice, the model seems to respond normally even after many rounds of dialog.

That is a very good question, and it leads us to another key concept: "context truncation".

Context truncation: a strategy for handling very long conversations

When we use LLM-based products (e.g., DeepSeek, Zhipu Qingyan, etc.), service providers usually do not expose the hard limit of the Context Window directly to users; instead, they apply a context truncation strategy to handle very long text.

For example, suppose the model natively supports a Context Window of 64K. If the user has accumulated 64K, or close to 64K, tokens of input and output over multiple rounds of conversation, a new request (e.g., a new input of 2K tokens) would exceed the Context Window limit. In this case, the server will usually keep the latest 64K tokens (including the most recent input) and discard the earliest part of the conversation history. From the user's point of view, the most recent input is retained, while the earliest inputs (and even outputs) are "forgotten" by the model.
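Providers do not publish their exact truncation logic, so the following is only one plausible sketch. It works at the level of whole messages rather than individual tokens (a common simplification that differs from the token-level description above), and `count_tokens` is a hypothetical callable you would back with a real tokenizer. The oldest messages are dropped until the conversation plus the new input fits the window, and the newest message is never dropped.

```python
from typing import Callable


def truncate_history(history: list[dict], new_message: dict, context_window: int,
                     count_tokens: Callable[[dict], int]) -> list[dict]:
    """Keep the most recent messages that fit in the window, oldest dropped first."""
    kept = history + [new_message]          # new list; the caller's history is untouched
    total = sum(count_tokens(m) for m in kept)
    # Forget from the front ("remember the most recent, forget the oldest"),
    # but always keep the user's latest message.
    while total > context_window and len(kept) > 1:
        total -= count_tokens(kept.pop(0))
    return kept


# Demo only: token counts (in K) are stored in an extra "tokens" key,
# which would have to be stripped before sending messages to a real API.
history = [
    {"role": "user", "content": "first question", "tokens": 30},
    {"role": "assistant", "content": "first answer", "tokens": 30},
    {"role": "user", "content": "second question", "tokens": 4},
]
new = {"role": "user", "content": "third question", "tokens": 2}
kept = truncate_history(history, new, 64, lambda m: m["tokens"])
print([m["content"] for m in kept])
# ['first answer', 'second question', 'third question']
```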

This is why, in multi-round dialogs, the model sometimes exhibits "memory loss" even though it still replies normally. Because the capacity of the Context Window is limited, the model cannot remember the entire conversation history; it can only "remember the most recent and forget the oldest".

It should be emphasized that context truncation is a strategy implemented at the engineering level, not a capability inherent in the model itself. Users typically do not notice the truncation, because the server performs it in the background.

To summarize, we can draw the following conclusions about context length, maximum output length and context truncation:

- The context window (e.g., 64K) is a hard limit on a single request: the total number of input and output tokens must not exceed it.

- The server manages very long text in multi-round conversations through a context truncation strategy, which lets users continue past the Context Window limit over many rounds, but at the expense of the model's long-term memory.

- The context window limits offered by a service are often a provider's strategy to control costs or reduce risk, and are not necessarily identical to the technical capabilities of the model itself.

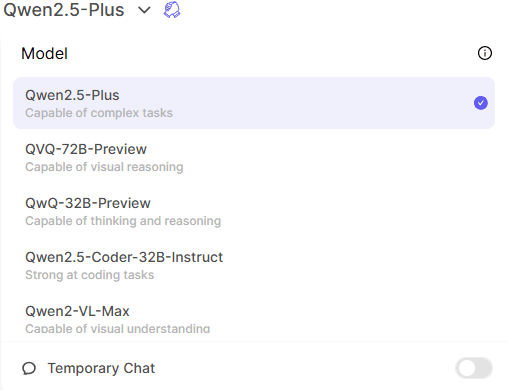

Parameter comparison across models: OpenAI and Anthropic

The parameter settings for maximum output length and context length vary between model vendors. The following figure shows the parameter configurations of some models, using OpenAI and Anthropic as examples:

In the figure, "Context Tokens" represents the context length and "Output Tokens" represents the maximum output length.

Technical principles: reasons behind the restrictions

Why do LLMs set limits on maximum output length and context length? From a technical point of view, these limits stem from constraints of the model architecture and of computational resources. In short, the context window limit is determined by the following key factors:

(1) Range of positional encoding: Transformer models rely on positional encodings (e.g., RoPE, ALiBi) to assign position information to each token, and the range the positional encoding was designed for directly determines the maximum sequence length the model can handle.

(2) Cost of the self-attention computation: when generating each new token, the model must compute attention weights between that token and every previous token (both the input and the output generated so far), so the total sequence length must be strictly bounded. In addition, the memory footprint of the KV Cache grows in proportion to the total sequence length, and exceeding the context window may cause out-of-memory errors or computation errors.
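Both factors can be made concrete with a little arithmetic. The sketch below is illustrative, not any specific model's implementation: `rope` applies the standard rotary position embedding (base 10000) to one vector, showing that positions enter attention as rotation angles whose dot products depend only on relative distance; `causal_attention_pairs` counts query-key scores in a causal sequence; and `kv_cache_bytes` uses the usual estimate for standard multi-head or grouped-query attention with a hypothetical configuration. Models with other attention designs, such as DeepSeek-V3's Multi-head Latent Attention, store a compressed cache, so that formula does not carry over to them directly.

```python
import math


def rope(vec: list[float], pos: int, base: float = 10000.0) -> list[float]:
    """Rotary position embedding: rotate each (even, odd) pair by pos * base**(-i/d)."""
    d = len(vec)
    if d % 2:
        raise ValueError("vector dimension must be even")
    out: list[float] = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)   # i = 2k, so the frequency is base^(-2k/d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[i], vec[i + 1]
        out += [x0 * c - x1 * s, x0 * s + x1 * c]
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def causal_attention_pairs(n: int) -> int:
    """Query-key scores for a causal sequence of n tokens: token t attends to t tokens."""
    return n * (n + 1) // 2


def kv_cache_bytes(seq_len: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """KV cache size: one key and one value vector per token, layer, and KV head."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_elem


q, k = [0.1, 0.2, 0.3, 0.4], [0.5, -0.1, 0.2, 0.7]
# Same relative offset (2) at different absolute positions gives the same score.
print(math.isclose(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 105), rope(k, 103))))  # True

# Hypothetical config: 32 layers, 8 KV heads, head_dim 128, 16-bit values, 64K tokens.
print(kv_cache_bytes(64 * 1024, 32, 8, 128) / 1024**3)  # 8.0 (GiB)
print(causal_attention_pairs(4))  # 10
```

The attention-pair count grows quadratically with length while the KV cache grows linearly, which is why both compute and memory push providers toward a fixed upper bound.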

Typical application scenarios and response strategies

Understanding the concepts of maximum output length and context length, and the technical principles behind them, is crucial. With this knowledge, users can develop appropriate strategies when using large model tools to improve their efficiency and results. Below are several typical application scenarios, each with a corresponding strategy:

- Short Input + Long Output

  - Application scenario: the user enters a small number of tokens (e.g., 1K) and expects the model to generate long-form content, such as articles or stories.

  - Parameter configuration: when calling the API, set the max_tokens parameter to a large value, for example 63,000. Make sure the input tokens plus max_tokens do not exceed the Context Window limit (e.g., 1K + 63K ≤ 64K), and that max_tokens does not exceed the model's maximum output length.

  - Potential risk: the output may be terminated early by quality checks (e.g., excessive repetition or sensitive words).

- Long Input + Short Output

  - Application scenario: the user inputs a long document (e.g., 60K tokens) and asks the model to summarize it, extract information, or otherwise produce a short output.

  - Parameter configuration: set the max_tokens parameter to a small value, for example 4,000 (e.g., 60K + 4K ≤ 64K).

  - Potential risk: if the model actually needs more output tokens than max_tokens allows, the output will be cut off. In that case, compress the input document (e.g., extract the key paragraphs, remove redundant information) to free up room for the output and ensure it is complete.

- Multi-Round Dialog Management

  - Rule: over multiple rounds of dialog, the total number of accumulated input and output tokens must not exceed the Context Window limit (anything beyond it will be truncated).

  - Example:

    (1) Round 1: the user inputs 10K tokens and the model outputs 10K tokens, for a cumulative 20K tokens.

    (2) Round 2: the user inputs 30K tokens and the model outputs 14K tokens, bringing the cumulative total to 64K tokens.

    (3) Round 3: the user inputs 5K tokens; the server truncates the earliest 5K tokens, keeps the latest 59K tokens of history, and adds the new 5K-token input for a total of 64K tokens.
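The three-round example can be replayed with simple token accounting. This sketch tracks only token counts (in K) and assumes, as the example does, that the server drops exactly as many of the oldest tokens as needed. One caveat the example glosses over: a real request must also leave room for the reply, so passing a nonzero output for round 3 shows that more history would have to go.

```python
def replay(context_window: int, rounds: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Replay rounds of (new_input, output) tokens with oldest-first truncation.

    Returns, for each round, (tokens truncated, tokens in the window afterwards).
    """
    history = 0
    report = []
    for new_input, output in rounds:
        if new_input + output > context_window:
            raise ValueError("a single round cannot exceed the context window")
        # Drop just enough of the oldest history for this round to fit.
        truncated = max(0, history + new_input + output - context_window)
        history = history - truncated + new_input + output
        report.append((truncated, history))
    return report


# The example above, in K tokens; round 3's output is left at 0 as in the text.
print(replay(64, [(10, 10), (30, 14), (5, 0)]))  # [(0, 20), (0, 64), (5, 64)]
# If round 3 also produced a 3K reply, 8K of old history would be dropped instead.
print(replay(64, [(10, 10), (30, 14), (5, 3)]))  # [(0, 20), (0, 64), (8, 64)]
```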

By understanding the three core concepts of Token, Maximum Output Length, and Context Length, and by formulating a reasonable strategy based on specific application scenarios, we can more effectively harness LLM technology and fully utilize its potential.

© Copyright notice

This article is copyright of AI Sharing Circle. Please do not reproduce it without permission.
