Chain-of-Thought (CoT) and related advanced prompting variants explained

Note: While basic prompting techniques (e.g., zero-shot/few-shot examples or instruction prompts) are very effective, more sophisticated prompts can work better on complex problems (e.g., math, programming, or problems requiring multi-step logical reasoning). Because large language models (LLMs) inherently struggle with such problems (their reasoning ability does not increase monotonically with model size), most research on prompt design has focused on improving reasoning and complex problem solving. Simple prompts are sufficient for most other problems.

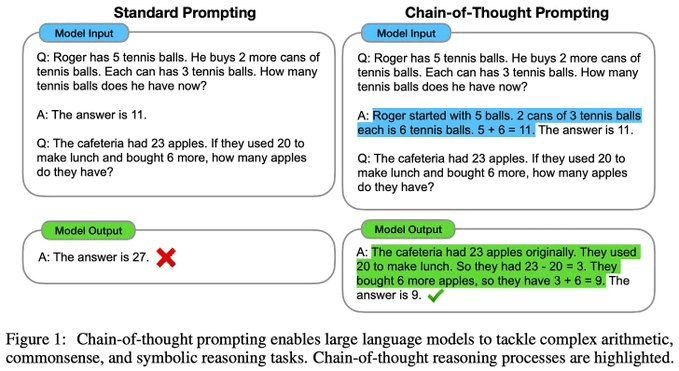

Chain-of-Thought (CoT) prompting [1] activates the reasoning capabilities of an LLM by adding a series of intermediate reasoning steps to the prompt. When each few-shot example includes a chain of thought, the model learns (through in-context learning) to generate a similar chain of thought before giving its answer. The study in [1] shows that explicitly spelling out the reasoning process for solving a problem effectively improves the model's reasoning performance.

Input:

The odd numbers in this set add up to an even number: 4, 8, 9, 15, 12, 2, 1.

A: Add all the odd numbers (9, 15, 1) to get 25. The answer is False.

The odd numbers in this set add up to an even number: 17, 10, 19, 4, 8, 12, 24.

A: Add all the odd numbers (17, 19) to get 36. The answer is True.

The odd numbers in this set add up to an even number: 16, 11, 14, 4, 8, 13, 24.

A: Add all the odd numbers (11, 13) to get 24. The answer is True.

The odd numbers in this set add up to an even number: 17, 9, 10, 12, 13, 4, 2.

A: Add all the odd numbers (17, 9, 13) to get 39. The answer is False.

The odd numbers in this set add up to an even number: 15, 32, 5, 13, 82, 7, 1.

A:

Output:

Add all the odd numbers (15, 5, 13, 7, 1) to get 41. The answer is False.
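The few-shot prompt above can also be assembled programmatically. The following is a minimal sketch, assuming the demonstrations are generated from number sets. The helper names (`odd_sum_is_even`, `make_demo`, `build_cot_prompt`) are hypothetical and only illustrate the layout: each demonstration pairs a question with a worked rationale, and the final question ends with a bare "A:" for the model to complete.

```python
from typing import List, Tuple

QUESTION = "The odd numbers in this set add up to an even number: "


def odd_sum_is_even(numbers: List[int]) -> Tuple[List[int], int, bool]:
    """Return the odd numbers, their sum, and whether that sum is even."""
    odds = [n for n in numbers if n % 2 == 1]
    total = sum(odds)
    return odds, total, total % 2 == 0


def make_demo(numbers: List[int]) -> str:
    """Format one demonstration: the question plus a chain-of-thought answer."""
    odds, total, is_even = odd_sum_is_even(numbers)
    question = QUESTION + ", ".join(map(str, numbers)) + "."
    rationale = (
        f"A: Add all the odd numbers ({', '.join(map(str, odds))}) "
        f"to get {total}. The answer is {is_even}."
    )
    return question + "\n" + rationale


def build_cot_prompt(demo_sets: List[List[int]], query: List[int]) -> str:
    """Join the demonstrations and append the unanswered query."""
    demos = [make_demo(numbers) for numbers in demo_sets]
    final = QUESTION + ", ".join(map(str, query)) + ".\nA:"
    return "\n\n".join(demos + [final])


prompt = build_cot_prompt(
    [[4, 8, 9, 15, 12, 2, 1], [17, 10, 19, 4, 8, 12, 24]],
    [15, 32, 5, 13, 82, 7, 1],
)
print(prompt)
```

Computing the rationale from the numbers, rather than typing it by hand, keeps the demonstrations arithmetically consistent, which matters because the model imitates whatever the examples show.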

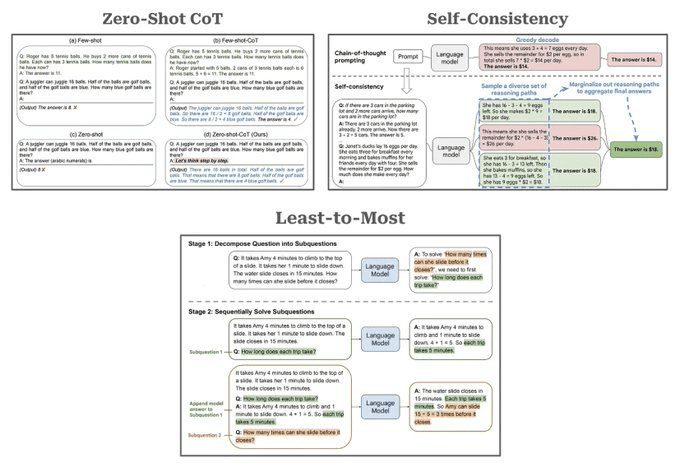

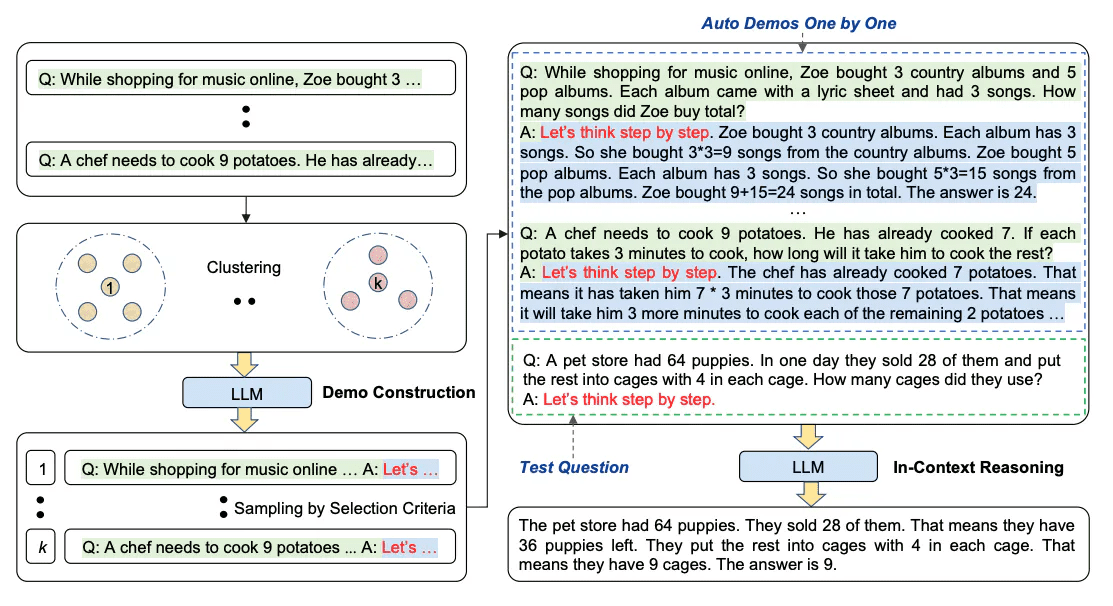

Automatic Chain of Thought (Auto-CoT) [8]: CoT prompting with demonstrations requires hand-crafting effective and diverse examples, and this manual effort can lead to sub-optimal results. [8] proposes eliminating the manual effort by prompting an LLM with "Let's think step by step" to generate the reasoning chains for the demonstrations one by one. The automatically generated chains may still contain errors; to mitigate their effect, diversity among the demonstrations is important. Auto-CoT therefore samples a diverse set of questions and generates a reasoning chain for each to construct the demonstrations.

Auto-CoT consists of two main stages:

Stage 1: Question clustering: partition the given questions into several clusters.

Stage 2: Demonstration sampling: select a representative question from each cluster and generate its reasoning chain using Zero-Shot-CoT with simple heuristics.

The simple heuristics can be the length of the question (e.g., 60 tokens) and the number of steps in the rationale (e.g., 5 reasoning steps). This encourages the model to use simple and accurate demonstrations.
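Stage 2 can be sketched as follows. This is a simplified illustration under stated assumptions, not the implementation from [8]: the clusters are assumed to be precomputed (Stage 1) and ordered so that the most representative question comes first, `generate` is a hypothetical stand-in for an LLM call, whitespace splitting stands in for real tokenization, and each non-empty line of the rationale is counted as one reasoning step.

```python
from typing import Callable, Dict, List

ZERO_SHOT_TRIGGER = "Let's think step by step."


def build_demonstrations(
    clusters: List[List[str]],
    generate: Callable[[str], str],
    max_question_tokens: int = 60,
    max_steps: int = 5,
) -> List[Dict[str, str]]:
    """Pick one demonstration per cluster using the simple heuristics."""
    demos: List[Dict[str, str]] = []
    for questions in clusters:
        # Assumption: questions are sorted by distance to the cluster center.
        for question in questions:
            # Heuristic 1: skip questions that are too long.
            if len(question.split()) > max_question_tokens:
                continue
            # Zero-Shot-CoT: let the model write the reasoning chain itself.
            rationale = generate(f"Q: {question}\nA: {ZERO_SHOT_TRIGGER}")
            steps = [line for line in rationale.splitlines() if line.strip()]
            # Heuristic 2: keep only rationales with few reasoning steps.
            if len(steps) <= max_steps:
                demos.append({"question": question, "rationale": rationale})
                break  # one demonstration per cluster
    return demos


def fake_llm(prompt: str) -> str:
    """Hypothetical stub so the sketch runs without a model."""
    return "There are 3 cats.\nEach cat has 4 legs.\n3 * 4 = 12."


clusters = [
    ["word " * 70, "How many legs do 3 cats have?"],
    ["What is 2 + 3?"],
]
for demo in build_demonstrations(clusters, fake_llm):
    print(demo["question"])
```

Because every cluster contributes one demonstration, a single bad reasoning chain affects only one example rather than a group of similar ones.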

The process is shown below:

CoT variants: Given the effectiveness and popularity of CoT prompting, several derivative techniques have been proposed:

- Zero-shot CoT [2]: removes the few-shot examples and instead appends "Let's think step by step." to the end of the prompt, which encourages the model to generate the reasoning needed to solve the problem.

Input:

I went to the market and bought 10 apples. I gave 2 apples to my neighbor and 2 apples to the repairman. Then I went and bought 5 apples and ate 1. How many apples did I have left?

Let's think step by step.

Output:

First, you start with 10 apples.

You gave your neighbor and the repairman 2 apples each, so you have 6 apples left.

You then buy 5 apples, so now you have 11 apples.

In the end, you eat 1 apple, so you have 10 apples left.
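As a small sketch of the zero-shot CoT example above, the hypothetical helper `zero_shot_cot` below appends the trigger phrase to a question, and the arithmetic from the model's output is replayed in plain Python to confirm the final count.

```python
def zero_shot_cot(question: str, trigger: str = "Let's think step by step.") -> str:
    """Append the zero-shot CoT trigger phrase to a question."""
    return f"{question.strip()}\n\n{trigger}"


question = (
    "I went to the market and bought 10 apples. I gave 2 apples to my neighbor "
    "and 2 apples to the repairman. Then I went and bought 5 apples and ate 1. "
    "How many apples did I have left?"
)
print(zero_shot_cot(question))

# Replay the reasoning steps from the output above.
apples = 10   # bought 10 apples
apples -= 2   # gave 2 to the neighbor
apples -= 2   # gave 2 to the repairman
apples += 5   # bought 5 more
apples -= 1   # ate 1
print(apples)
```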

- Self-Consistency (SC) [3]: improves the robustness of the reasoning process by independently sampling multiple solutions and taking a majority vote over the final answers.

Input:

Q: There are 15 trees in the forest. Forestry workers will plant trees in the forest today. When finished, there will be 21 trees. How many trees have the forest workers planted today?

A: We started with 15 trees. Later we had 21 trees. The difference must be the number of trees they planted. Therefore, they must have planted 21-15 = 6 trees. The answer is 6.

Q: There were 3 cars in the parking lot and 2 more came in, how many cars were in the parking lot?

A: There are already 3 cars in the parking lot. Another 2 cars have come in. Now there are 3 + 2 = 5 cars. The answer is 5.

Q: Leah has 32 pieces of chocolate and her sister has 42 pieces. If they ate 35 pieces, how many pieces do they have left in total?

A: Leah has 32 pieces of chocolate and Leah's sister has 42 pieces. This means that initially there were 32 + 42 = 74 pieces of chocolate. 35 pieces have been eaten. Therefore, they have a total of 74 - 35 = 39 chocolates left. The answer is 39.

Q: Jason has 20 lollipops. He gives Denny some lollipops. Now Jason has only 12 lollipops. How many lollipops did Jason give Denny?

A: Jason had 20 lollipops. Since he only has 12 now, he must have given the rest to Denny. The number of lollipops he gave Denny must be 20 - 12 = 8 lollipops. The answer is 8.

Q: Shawn has five toys. For Christmas, he got two toys each from his mom and dad. How many toys does he have now?

A: He has 5 toys. He got 2 from his mom, so after that he had 5 + 2 = 7 toys. Then he got 2 from his dad, so in total he has 7 + 2 = 9 toys. The answer is 9.

Q: There are 9 computers in the server room. From Monday through Thursday, 5 computers are installed each day. How many computers are in the server room now?

A: There were 4 days from Monday through Thursday. Each day 5 computers were added. This means that a total of 4 * 5 = 20 computers were added. There were 9 computers at the beginning, so now there are 9 + 20 = 29 computers. The answer is 29.

Q: Michael has 58 golf balls. On Tuesday, he lost 23 golf balls. On Wednesday, he lost 2 more. How many golf balls did he have left at the end of Wednesday?

A: Michael initially had 58 balls. On Tuesday he lost 23, so after that he had 58-23 = 35 balls. On Wednesday he lost 2 more, so now he has 35-2 = 33 balls. The answer is 33.

Q: Olivia has $23. She bought five bagels for $3 each. How much money does she have left?

A: She bought 5 bagels for $3 each. This means she spent 15 dollars. She has 8 dollars left.

Q: When I was 6 years old, my sister was half my age. Now that I'm 70, how old is my sister?

A:

Output 1:

When I was 6, my sister was half my age, or 3. Now I'm 70, so she's 70-3 = 67. The answer is 67.

Output 2:

When the narrator was 6 years old, his sister was half his age, or 3 years old. Now that the narrator is 70, his sister would be 70-3 = 67. The answer is 67.

Output 3:

When I was 6, my sister was half my age, or 3. Now I'm 70, so she's 70/2 = 35. The answer is 35.
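The voting step can be sketched as follows, using the three outputs above. This is a minimal illustration that assumes every completion ends with the phrase "The answer is N." as in the demonstrations; the function names are hypothetical, and sampling the completions from a model (typically with a non-zero temperature) is outside the sketch.

```python
import re
from collections import Counter
from typing import List, Optional

# Assumption: completions follow the "The answer is N." convention.
ANSWER_PATTERN = re.compile(r"The answer is\s+(-?\d+(?:\.\d+)?)")


def extract_answer(completion: str) -> Optional[str]:
    """Return the last stated answer in a completion, or None if absent."""
    matches = ANSWER_PATTERN.findall(completion)
    return matches[-1] if matches else None


def majority_vote(completions: List[str]) -> Optional[str]:
    """Return the most frequent final answer across sampled completions."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


outputs = [
    "When I was 6, my sister was half my age, or 3. Now I'm 70, so she's 70-3 = 67. The answer is 67.",
    "When the narrator was 6 years old, his sister was half his age, or 3 years old. "
    "Now that the narrator is 70, his sister would be 70-3 = 67. The answer is 67.",
    "When I was 6, my sister was half my age, or 3. Now I'm 70, so she's 70/2 = 35. The answer is 35.",
]
print(majority_vote(outputs))
```

Two of the three chains agree on 67, so the single faulty chain (70/2 = 35) is outvoted.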

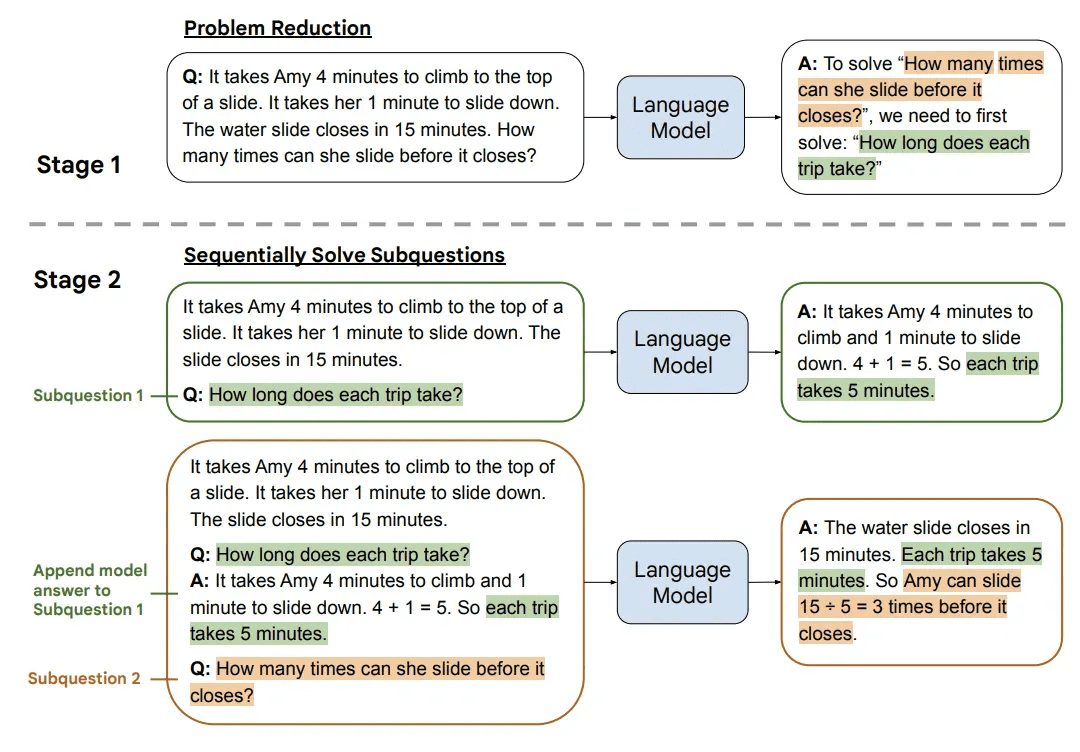

- Least-to-Most (LTM) [4]: breaks the problem into sub-problems and solves them one by one, using the answer to each sub-problem as context for solving the next.

Input:

Customer inquiry:

I purchased a t-shirt from your Arnold line on March 1st. I saw that it was on sale, so I bought a shirt that was 60% off the original price of $30. I noticed that you now have a new t-shirt discount, 50% off. I was wondering if I can return it and still have enough in-store credit to purchase two of your t-shirts?

Instructions:

You are a customer service representative responsible for responding to customer inquiries in a friendly manner. Returns are allowed within 30 days. Today is March 29th. There is currently a 50% discount on all shirts. Your in-store shirts range in price from $18-$100. Please do not make up any information about the discount policy.

What sub-questions must be addressed before this question can be answered?

Output:

1. The date the customer purchased the shirt;

2. The current discounts;

3. The price range of shirts in the store;

4. Whether the customer has enough store credit;

5. The policy on customer returns.

Input:

Customer inquiry:

I purchased a t-shirt from your Arnold line on March 1st. I saw that it was on sale, so I bought a shirt that was 60% off the original price of $30. I noticed that you now have a new t-shirt discount, 50% off. I was wondering if I can return it and still have enough in-store credit to purchase two of your t-shirts?

Instructions:

You are a customer service representative responsible for responding to customer inquiries in a friendly manner. Returns are allowed within 30 days. Today is March 29th. There is currently a 50% discount on all shirts. Your in-store shirts range in price from $18-$100. Please do not make up any information about the discount policy.

Determine if the customer is within the 30-day return period. Let's take it one step at a time.

Output:

Dear Customer, Thank you very much for your support of the Arnold range! We are pleased to offer our services to you. Our return policy is that customers can return items within 30 days of purchase. Since you purchased your item on March 1st, you are still within the return period and can return it.

Additionally, we are currently holding a 50% sale where you can use your store credit to purchase two t-shirts from the Arnold collection. Our shirts range from $18-$100, so you can choose the right shirt for your needs.

If you have any other questions, please feel free to contact us. We will be happy to serve you!
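The two-phase flow above (first ask for sub-questions, then answer them in order) can be sketched as a simple loop. This is an illustrative sketch only: `ask` is a hypothetical stand-in for an LLM call, the sub-questions are assumed to have been obtained already from the decomposition prompt, and the "Q:/A:" layout is an assumption rather than a prescribed format.

```python
from typing import Callable, List, Tuple


def least_to_most(
    context: str,
    subquestions: List[str],
    ask: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Answer sub-questions in order, feeding earlier answers into later prompts."""
    solved: List[Tuple[str, str]] = []
    for sub in subquestions:
        # Earlier question/answer pairs become context for the next sub-question.
        history = "".join(f"Q: {q}\nA: {a}\n\n" for q, a in solved)
        prompt = f"{context}\n\n{history}Q: {sub}\nA:"
        solved.append((sub, ask(prompt)))
    return solved


def fake_llm(prompt: str) -> str:
    """Hypothetical stub: reports how many earlier answers it was shown."""
    return f"(answer given {prompt.count('A: ')} earlier answers)"


steps = least_to_most(
    "Returns are allowed within 30 days. Today is March 29th.",
    [
        "Is the customer within the 30-day return period?",
        "Does the customer have enough store credit for two shirts?",
    ],
    fake_llm,
)
for sub, answer in steps:
    print(sub, "->", answer)
```

Ordering the sub-questions from easiest to hardest lets each later prompt build on facts that were already established.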

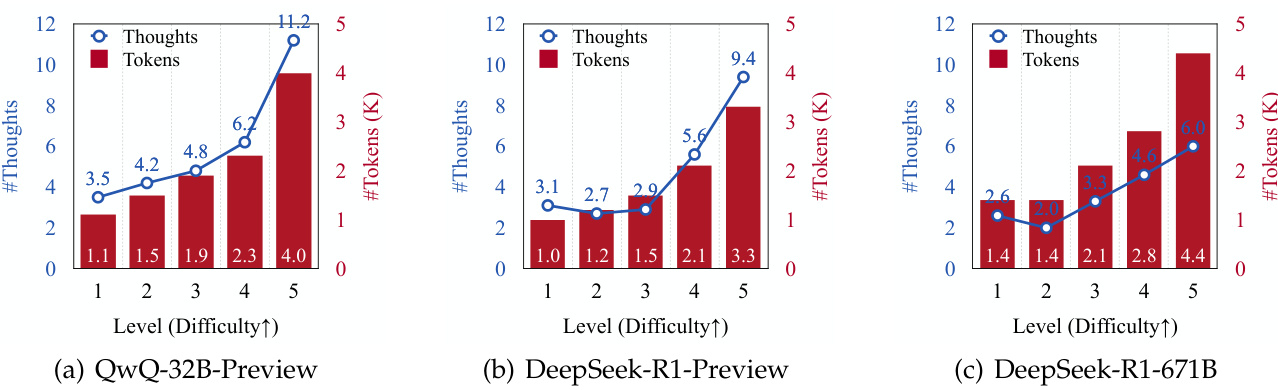

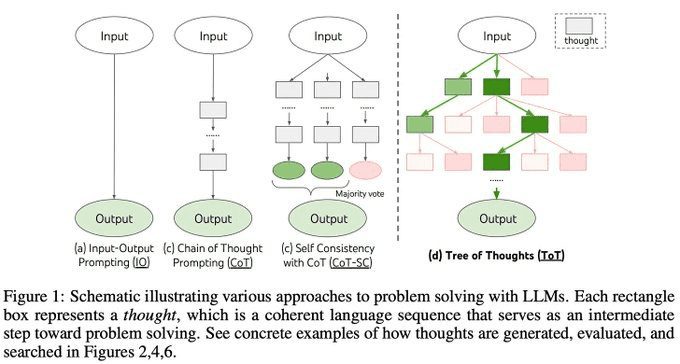

Tree of Thoughts (ToT) prompting [5]: CoT prompting falls short on problems that require planning, strategic lookahead, backtracking, and exploring multiple solutions in parallel. ToT prompting breaks a complex problem into a series of simpler steps (or "thoughts"). The LLM generates many thoughts and continuously evaluates, in natural language, their progress toward a final solution. Using the model's self-assessment of progress, we can apply widely used search algorithms (e.g., breadth-first or depth-first search) to guide exploration and to allow lookahead and backtracking when solving a problem.

Imagine three different experts are answering this question.

All experts write down the first step of their thinking and then share it with the group.

All experts then write down the next step of their thinking and share it.

And so on, until all experts have written down all the steps of their thinking.

If any expert realizes at any point that their step is wrong, that expert leaves the group.

The question is ...
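The search-based form of ToT described above can be sketched as a breadth-first search that keeps only the best few partial solutions at each level. This is a toy illustration under stated assumptions: in real ToT both `propose` (generate next thoughts) and `score` (self-evaluate progress) would be LLM calls, whereas here they are deterministic stand-ins for a small puzzle (build a three-digit string from the digits 1 to 3 whose digits sum to 6).

```python
from typing import Callable, List


def tree_of_thoughts_bfs(
    root: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    depth: int,
    beam_width: int,
) -> str:
    """Breadth-first search over thoughts, keeping the best `beam_width` states."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        # Keep the most promising partial solutions; the rest are pruned.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


TARGET = 6


def propose(state: str) -> List[str]:
    """Stand-in for the LLM proposing next thoughts: append one digit."""
    return [state + digit for digit in "123"]


def score(state: str) -> float:
    """Stand-in for LLM self-evaluation: closeness of the digit sum to TARGET."""
    return -abs(TARGET - sum(int(ch) for ch in state))


wide = tree_of_thoughts_bfs("", propose, score, depth=3, beam_width=2)
greedy = tree_of_thoughts_bfs("", propose, score, depth=3, beam_width=1)
print(wide, greedy)
```

With a beam width of 1 the search commits to the locally best thought at every step and overshoots the target, while a beam width of 2 keeps an alternative branch alive that turns out to reach it. That is the kind of exploration a single linear chain of thought cannot perform.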

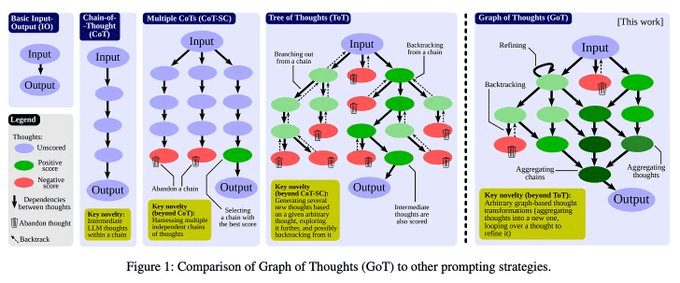

Graph of Thoughts (GoT) prompting [6, 7]: Subsequent research has generalized ToT prompting to graph-based reasoning strategies. These techniques are similar to ToT prompting but do not assume that the path of thoughts leading to a solution is linear: thoughts can be reused, and a sequence of thoughts can even be revisited recursively when deriving a solution. Although a variety of graph-based prompting strategies have been proposed, these techniques, like ToT prompting, have been criticized as impractical: solving a single reasoning problem with GoT prompting may require the LLM to perform a large number of inference steps.
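What distinguishes a graph from a tree is that a thought may have several parents, so partial results can be combined. The toy sketch below illustrates only that structural idea and is not taken from [6] or [7]: the "thoughts" are computed by plain Python instead of an LLM, and the node names and the function `graph_of_thoughts_sort` are hypothetical. A list is split into chunks, each chunk is sorted as its own thought, and a single aggregation node merges all of them.

```python
import heapq
from typing import Dict, List, Tuple


def graph_of_thoughts_sort(
    values: List[int], chunk_size: int = 3
) -> Tuple[List[int], Dict[str, List[str]]]:
    """Sort by branching into chunk thoughts and aggregating them in one node.

    Returns the merged result and the graph as a node -> parents mapping.
    """
    thoughts: Dict[str, List[int]] = {"input": list(values)}
    parents: Dict[str, List[str]] = {"input": []}

    # Branch: every chunk becomes an independent thought derived from the input.
    chunk_nodes: List[str] = []
    for start in range(0, len(values), chunk_size):
        node = f"sorted_chunk_{start // chunk_size}"
        thoughts[node] = sorted(values[start:start + chunk_size])
        parents[node] = ["input"]
        chunk_nodes.append(node)

    # Aggregate: one thought with several parents, which a tree cannot express.
    thoughts["merged"] = list(heapq.merge(*(thoughts[n] for n in chunk_nodes)))
    parents["merged"] = chunk_nodes
    return thoughts["merged"], parents


result, graph = graph_of_thoughts_sort([15, 32, 5, 13, 82, 7, 1])
print(result)
print(graph["merged"])
```

In an LLM-backed version every node would cost at least one model call, which gives a sense of why these strategies are criticized as expensive.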

Links to the papers cited above:

[1] https://arxiv.org/abs/2201.11903

[2] https://arxiv.org/abs/2205.11916

[3] https://arxiv.org/abs/2203.11171

[4] https://arxiv.org/abs/2205.10625

[5] https://arxiv.org/abs/2305.10601

[6] https://arxiv.org/abs/2308.09687

[7] https://arxiv.org/abs/2305.16582

[8] https://arxiv.org/abs/2210.03493

© Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
