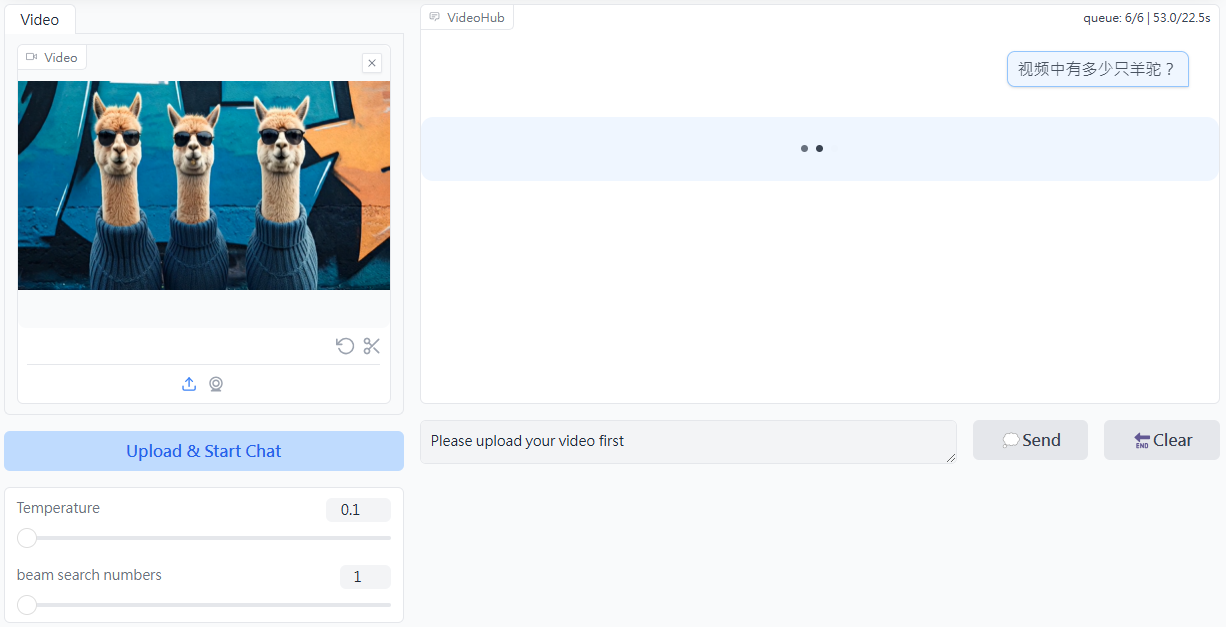

CogVLM2: An Open-Source Multimodal Model with Video Comprehension and Multi-Round Dialogue Support

General Introduction

CogVLM2 is an open-source multimodal model developed by the Tsinghua University Data Mining Research Group (THUDM). It is built on Llama3-8B and is designed to deliver performance comparable to, or better than, GPT-4V. The model supports image understanding, multi-round dialogue, and video understanding, handles content up to 8K in length, and accepts image resolutions up to 1344x1344. The CogVLM2 family includes several sub-models optimized for different tasks, such as text Q&A, document Q&A, and video Q&A. The models support both Chinese and English, and are available through online demos and several deployment methods for users to test and apply.


Function List

- Image understanding: Supports understanding and processing of high-resolution images.

- Multi-round dialogue: Handles multiple rounds of dialogue, making it suitable for complex interaction scenarios.

- Video comprehension: Supports comprehension of videos up to 1 minute long by extracting keyframes.

- Multi-language support: Supports both Chinese and English.

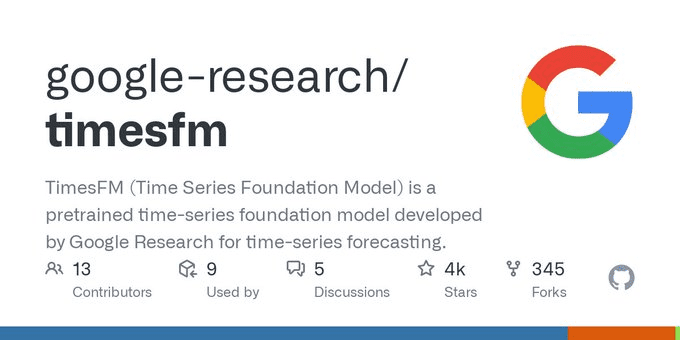

- Open source: Full source code and model weights are provided to facilitate secondary development.

- Online Experience: Provides an online demo platform where users can directly experience the model functionality.

- Multiple deployment options: Supports Hugging Face, ModelScope, and other platforms.

Usage Guide

Installation and Deployment

- Clone the repository:

git clone https://github.com/THUDM/CogVLM2.git

cd CogVLM2

- Install dependencies:

pip install -r requirements.txt

- Download model weights: Download the appropriate model weights as needed and place them in the specified directory.
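
Once the weights are in place, a quick check can catch an incomplete download before loading is attempted. The following is a minimal sketch and is not part of the CogVLM2 repository: the helper name `check_weights_dir` is hypothetical, and the expected file names (`config.json` plus at least one `.safetensors` or `.bin` file) are assumptions based on the usual Hugging Face checkpoint layout.

```python
from pathlib import Path


def check_weights_dir(weights_dir, required=("config.json",),
                      weight_suffixes=(".safetensors", ".bin")):
    """Return a list of problems; an empty list means the directory looks usable.

    The required names and suffixes are assumptions (typical Hugging Face
    layout), not something the CogVLM2 project specifies.
    """
    root = Path(weights_dir)
    if not root.is_dir():
        return [f"{root} is not a directory"]

    # Report every required file that is absent.
    problems = [f"missing {name}" for name in required
                if not (root / name).is_file()]

    # At least one file must carry a known weight-file suffix.
    has_weights = any(p.is_file() and p.suffix in weight_suffixes
                      for p in root.iterdir())
    if not has_weights:
        problems.append("no weight files found")
    return problems
```

Calling `check_weights_dir('path_to_model_weights')` and printing the result before loading gives a readable list of what is missing instead of a failure deep inside the loader.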

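Usage Examples

Image understanding

- Load the model (the snippets in this section are simplified pseudocode; the `cogvlm2` module and its `load` and `predict` methods are illustrative, and the repository's own demo scripts load the model through Hugging Face `transformers`):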

from cogvlm2 import CogVLM2

model = CogVLM2.load('path_to_model_weights')

- Process an image:

# load_image is a placeholder for your own image-loading helper; it is not defined here

image = load_image('path_to_image')

result = model.predict(image)

print(result)
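
Because the model accepts images up to 1344x1344, it can be useful to work out in advance how a larger image would have to be scaled. The sketch below is an illustration only: `fit_within` is a hypothetical helper, and downscaling with the aspect ratio preserved is an assumed preprocessing choice, not necessarily what the model's own image processor does.

```python
def fit_within(width, height, max_side=1344):
    """Return (new_width, new_height) that fits in max_side x max_side.

    Images already within the limit are returned unchanged; larger ones are
    scaled down with the aspect ratio preserved. Integer arithmetic avoids
    floating-point rounding surprises.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if width <= max_side and height <= max_side:
        return width, height
    if width >= height:
        # Width is the limiting side.
        return max_side, max(1, height * max_side // width)
    # Height is the limiting side.
    return max(1, width * max_side // height), max_side


print(fit_within(2688, 1344))  # (1344, 672)
print(fit_within(800, 600))    # (800, 600)
```

The returned size can then be handed to whatever image library is in use for the actual resize.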

Multi-round dialogue

- Start a conversation:

conversation = model.start_conversation()

- Ask a question:

response = conversation.ask('Your question')

print(response)
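
Multi-round dialogue comes down to passing earlier turns back to the model with each new question. The sketch below shows that bookkeeping in isolation. Everything in it is hypothetical: the `Conversation` class, the `generate` callable standing in for the model, and the `max_turns` cap, which is a crude stand-in for staying inside the 8K context limit.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, str]]  # (question, answer) pairs, oldest first


class Conversation:
    """Keeps dialogue history and feeds it to a caller-supplied model function."""

    def __init__(self, generate: Callable[[str, History], str], max_turns: int = 8):
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.generate = generate      # stand-in for the real model call
        self.max_turns = max_turns    # crude proxy for a context-length budget
        self.history: History = []

    def ask(self, question: str) -> str:
        # The model sees the new question plus a copy of all retained turns.
        answer = self.generate(question, list(self.history))
        self.history.append((question, answer))
        # Drop the oldest turns once the cap is exceeded.
        del self.history[:-self.max_turns]
        return answer


def echo_model(question: str, history: History) -> str:
    """Dummy model used only to demonstrate the flow."""
    return f"turn {len(history) + 1}: {question}"


chat = Conversation(echo_model, max_turns=2)
print(chat.ask("What is in the image?"))  # turn 1: What is in the image?
print(chat.ask("What color is it?"))      # turn 2: What color is it?
```

Capping by turn count is the simplest policy; a real deployment would more likely count tokens, since turns vary widely in length.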

Video comprehension

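- Load a video and run inference:

# load_video is a placeholder for your own video-loading helper; it is not defined here

video = load_video('path_to_video')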

result = model.predict(video)

print(result)
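
The feature list notes that video comprehension works by extracting keyframes from clips of up to one minute. One simple way to choose frames is uniform sampling over the first minute. The sketch below is an assumption about how such sampling could work, not the model's actual frame-selection code; `sample_frame_indices` and its default of 24 frames are illustrative.

```python
def sample_frame_indices(total_frames, fps, num_frames=24, max_seconds=60):
    """Pick evenly spaced frame indices from the first max_seconds of a video."""
    if total_frames <= 0 or fps <= 0 or num_frames <= 0:
        raise ValueError("total_frames, fps and num_frames must be positive")

    # Ignore everything past the duration limit.
    usable = min(total_frames, int(fps * max_seconds))
    if usable <= num_frames:
        return list(range(usable))
    if num_frames == 1:
        return [0]

    # Spread indices from the first usable frame to the last, inclusive.
    last = usable - 1
    return [i * last // (num_frames - 1) for i in range(num_frames)]


print(sample_frame_indices(total_frames=300, fps=30, num_frames=5))
# [0, 74, 149, 224, 299]
```

The indices can be passed to any video decoder to fetch the corresponding frames; frames beyond the one-minute mark are never selected.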

Online Experience

Users can access the CogVLM2 online demo platform to experience the model's functionality online without local deployment.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
