CodeFormer: Image and Video Face Restoration and Old Photo Restoration, with a One-Click Deployment Version

CodeFormer General Introduction

CodeFormer is a codebase for robust blind face restoration. It was developed by researchers at S-Lab, Nanyang Technological University, and presented at NeurIPS 2022. The project is built on the Codebook Lookup Transformer and aims to improve face restoration in images, especially low-quality or degraded ones. CodeFormer offers several features, including face restoration, colorization, and inpainting, to cover a range of image processing needs. The project also supports video input and provides an easy-to-use online demo, pre-trained models, and detailed usage instructions.

Follow the usage instructions closely, otherwise the tool will not work properly. If you want to offer old photo restoration as a paid service, you will also need basic Photoshop skills; otherwise you cannot match the results shown in the online demo.

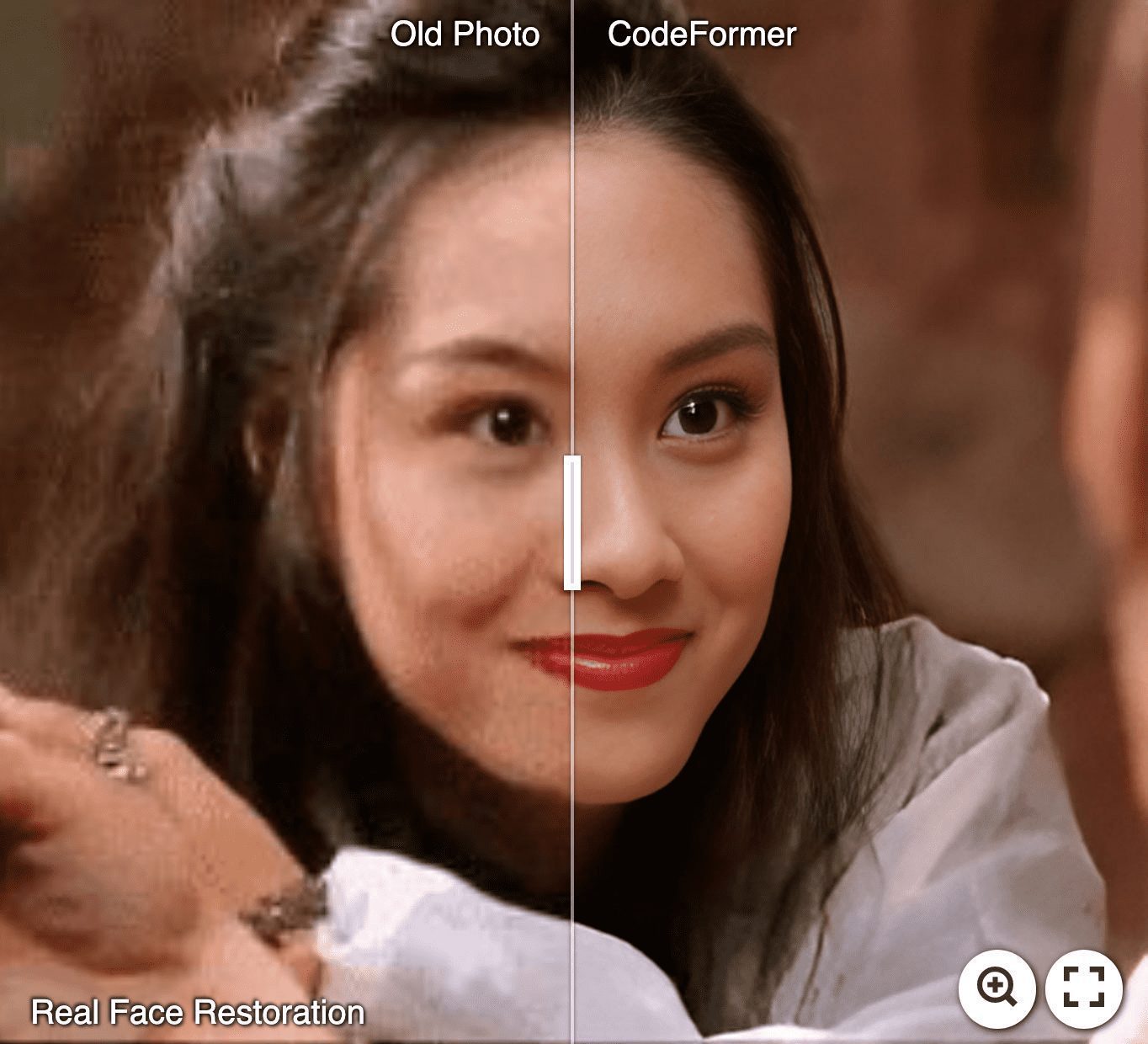

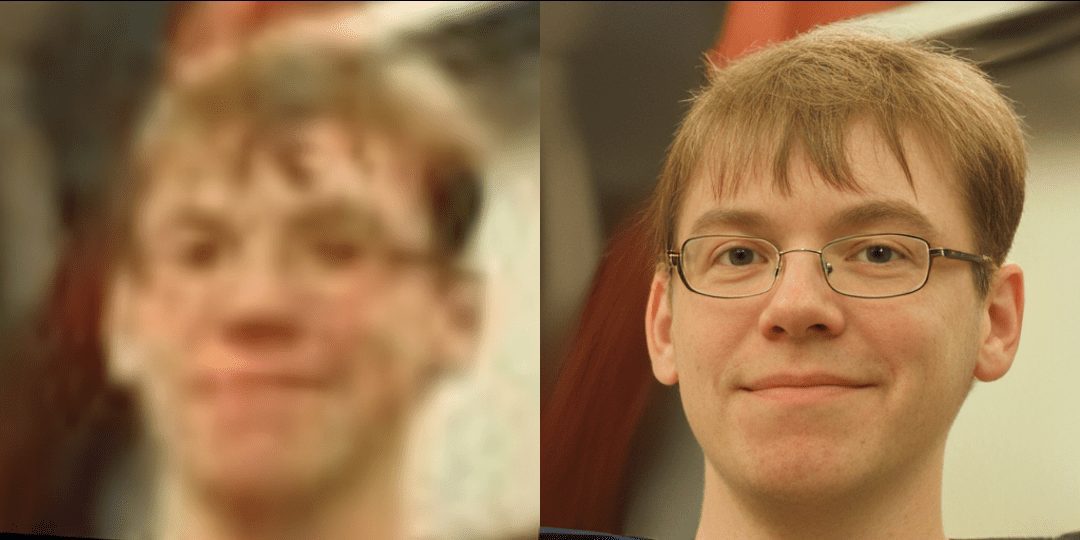

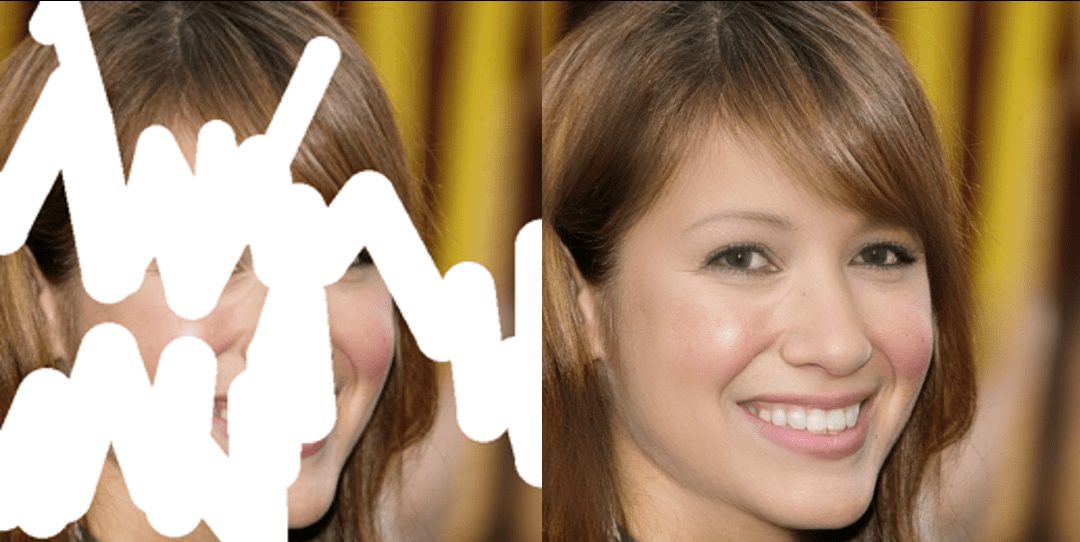

CodeFormer Tries to Enhance Old Photos / Fix AI Portraits

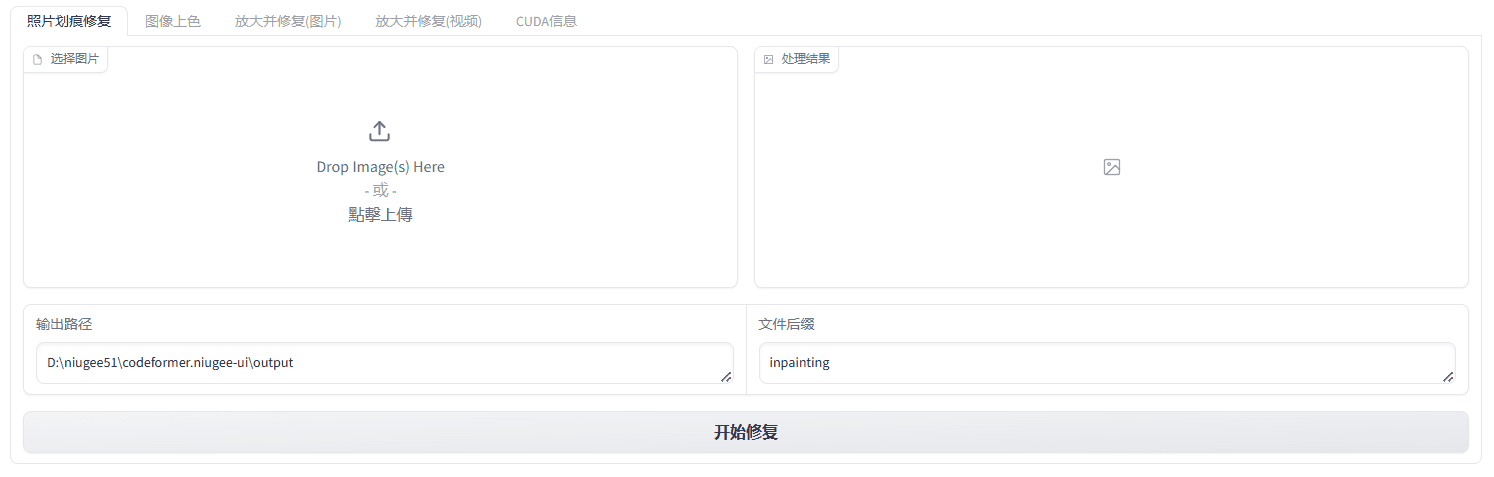

CodeFormer Face Repair

CodeFormer Facial Color Enhancement and Restoration

CodeFormer Face Repair

CodeFormer Feature List

- Face restoration: Enhances the clarity and detail of low-quality or degraded face images using the Codebook Lookup Transformer.

- Image colorization: Adds natural color to black-and-white or faded images.

- Image inpainting: Fills in missing or masked parts of an image to make it complete.

- Video processing: Restores and enhances faces in videos.

- Online demo: Lets users try the restoration directly in the browser.

CodeFormer Help

Installation process

- Clone the repository:

```shell
git clone https://github.com/sczhou/CodeFormer
cd CodeFormer
```

- Create and activate a virtual environment:

```shell
conda create -n codeformer python=3.8 -y
conda activate codeformer
```

- Install the dependencies:

```shell
pip install -r requirements.txt
python basicsr/setup.py develop
conda install -c conda-forge dlib
```

- Download the pre-trained models:

```shell
python scripts/download_pretrained_models.py facelib
python scripts/download_pretrained_models.py dlib
python scripts/download_pretrained_models.py CodeFormer
```
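Before running inference, you may want to confirm that the downloads actually landed. The helper below is a minimal sketch. Its name, check_weights, is hypothetical. It assumes the download script saves each model group under weights/<name>/ as .pth files, so verify that layout against your own checkout. dlib is left out because it is only needed for the dlib face detector.

```shell
#!/bin/sh
# Hypothetical helper: report which model folders are missing or contain no .pth files.
# ASSUMPTION: weights live under <root>/weights/<name>/ (check your checkout).
check_weights() {
  root="${1:-.}"
  status=0
  for name in CodeFormer facelib; do
    dir="$root/weights/$name"
    # ls fails when the glob matches nothing, i.e. no .pth file exists in $dir
    if ls "$dir"/*.pth >/dev/null 2>&1; then
      echo "ok: $name"
    else
      echo "missing: $name"
      status=1
    fi
  done
  return "$status"
}
```

Run check_weights . from the repository root. A non-zero exit status means at least one download should be repeated.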

Usage Process

- Prepare test data: Place the test images in the inputs/TestWhole folder. If you want to test cropped and aligned face images, place them in the inputs/cropped_faces folder.

- Run the inference code:

```shell
python inference_codeformer.py --input_path inputs/TestWhole --output_path results
```

This command processes all the images in the inputs/TestWhole folder and saves the results in the results folder.
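If your photos are scattered in another folder, a small copy step can stage them for the command above. This is a sketch with a hypothetical helper named prepare_inputs. Restricting it to .jpg, .jpeg, and .png files is an assumption about which formats you want to feed in, not a documented CodeFormer limit.

```shell
#!/bin/sh
# Hypothetical helper: copy .jpg/.jpeg/.png files from a source folder into the
# input folder (inputs/TestWhole by default) and print how many were copied.
prepare_inputs() {
  src="$1"
  dest="${2:-inputs/TestWhole}"
  mkdir -p "$dest"
  count=0
  for f in "$src"/*; do
    [ -f "$f" ] || continue            # skip subfolders and an unmatched glob
    case "$f" in
      *.jpg|*.jpeg|*.png|*.JPG|*.JPEG|*.PNG)
        cp "$f" "$dest"/
        count=$((count + 1))
        ;;
    esac
  done
  echo "$count"
}
```

For example, prepare_inputs ~/old_photos copies the matching images from ~/old_photos into inputs/TestWhole and prints the number copied.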

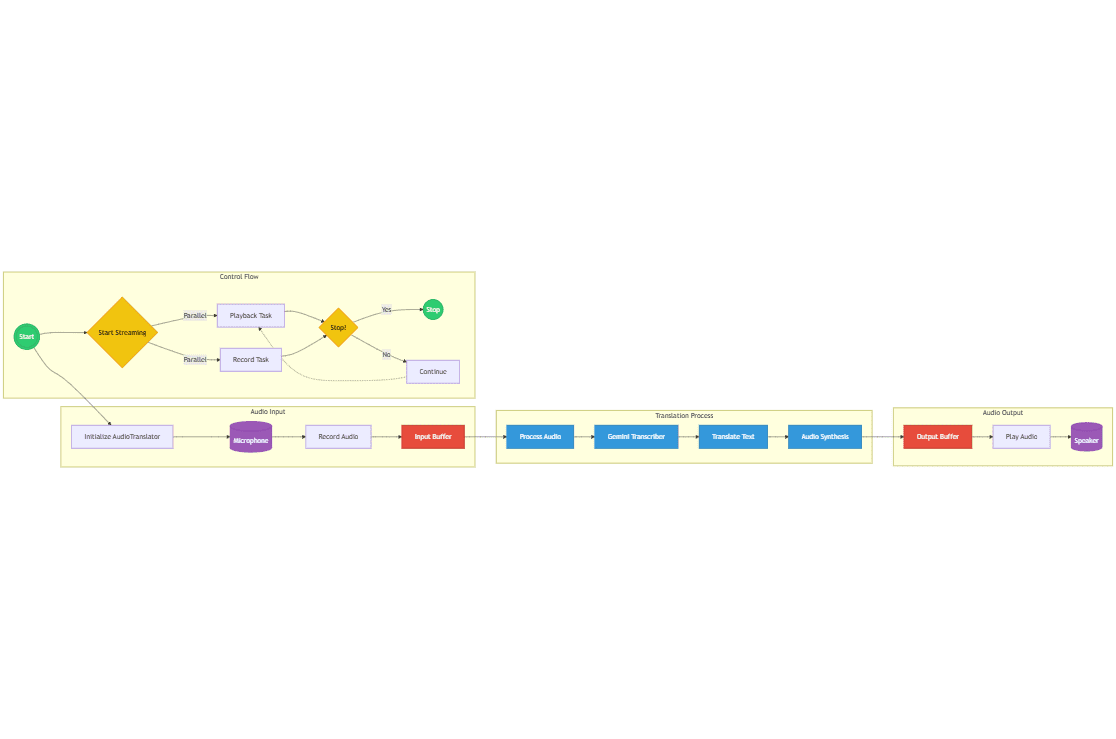

Functional operation flow

- Face restoration:
  - Place the images to be restored into the inputs/TestWhole folder.
  - Run the inference code to generate the restored images.

- Image colorization:
  - Place black-and-white images into the inputs/TestWhole folder.
  - Run the inference_colorization.py script to colorize them.

- Image inpainting:
  - Place the images to be inpainted into the inputs/TestWhole folder.
  - Run the inference_inpainting.py script to fill in the missing regions.

- Video processing:
  - Place the video file in a folder of your choice.
  - Run the inference code with --input_path pointing to the video to process the faces in it.
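The flow above maps each task to a script. The sketch below expresses that mapping as a hypothetical helper named run_task. It prints the command instead of running it; remove the leading echo to execute it. One assumption here: the source only shows --input_path for inference_codeformer.py, and the helper assumes the colorization and inpainting scripts accept the same flag.

```shell
#!/bin/sh
# Hypothetical helper: pick the script for a task and print the command.
# ASSUMPTION: inference_colorization.py and inference_inpainting.py also accept --input_path.
run_task() {
  task="$1"
  input="$2"
  case "$task" in
    restore)  script=inference_codeformer.py ;;
    colorize) script=inference_colorization.py ;;
    inpaint)  script=inference_inpainting.py ;;
    *) echo "unknown task: $task" >&2; return 2 ;;
  esac
  echo python "$script" --input_path "$input"
}
```

For example, run_task colorize inputs/TestWhole prints python inference_colorization.py --input_path inputs/TestWhole.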

Face restoration (cropped and aligned faces)

```shell
# For cropped and aligned faces
python inference_codeformer.py -w 0.5 --has_aligned --input_path [input folder]
```

Overall Image Enhancement

```shell
# For whole image
# Add '--bg_upsampler realesrgan' to enhance the background regions with Real-ESRGAN
# Add '--face_upsample' to further upsample restored face with Real-ESRGAN
python inference_codeformer.py -w 0.7 --input_path [image folder/image path]
```
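The comments above describe two optional Real-ESRGAN flags. The sketch below shows how they compose with the base command. The helper name whole_image_cmd and the BG and FACE toggles are illustrative only; they are not CodeFormer options. The helper prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper: build the whole-image command and optionally append the
# Real-ESRGAN flags. BG=1 adds --bg_upsampler realesrgan; FACE=1 adds --face_upsample.
whole_image_cmd() {
  w="$1"
  input="$2"
  cmd="python inference_codeformer.py -w $w --input_path $input"
  if [ "${BG:-0}" = 1 ]; then
    cmd="$cmd --bg_upsampler realesrgan"
  fi
  if [ "${FACE:-0}" = 1 ]; then
    cmd="$cmd --face_upsample"
  fi
  echo "$cmd"
}
```

For example, BG=1 FACE=1 whole_image_cmd 0.7 inputs/TestWhole prints the command with both flags appended. The printed string does not quote paths, so paths containing spaces need manual quoting.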

Video Enhancement

```shell
# For video clips
python inference_codeformer.py --bg_upsampler realesrgan --face_upsample -w 1.0 --input_path [video path]
```
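The video command above takes a single --input_path, so a folder of clips needs one run per file. The sketch below uses a hypothetical helper named video_cmds. It prints one command per .mp4 file; collecting only .mp4 is an assumption, so extend the pattern for other containers. Remove the echo to run the commands.

```shell
#!/bin/sh
# Hypothetical helper: print one video-enhancement command per .mp4 file in a folder.
# ASSUMPTION: only .mp4 files are collected.
video_cmds() {
  dir="$1"
  for v in "$dir"/*.mp4; do
    [ -f "$v" ] || continue   # the glob stays literal when no .mp4 file exists
    echo python inference_codeformer.py --bg_upsampler realesrgan --face_upsample -w 1.0 --input_path "$v"
  done
}
```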

The fidelity weight w lies in [0, 1]. In general, a smaller w tends to produce higher-quality results, while a larger w produces higher-fidelity results.
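Because the quality/fidelity trade-off depends on the photo, it can help to run one input at several values of w and compare the outputs. The sketch below uses a hypothetical helper named sweep_cmds. It prints one command per weight, using only the -w, --input_path, and --output_path flags shown earlier. The results/w_<value> folder naming is an illustrative choice, not a CodeFormer default.

```shell
#!/bin/sh
# Hypothetical helper: print one command per fidelity weight, each writing to its own folder.
# Usage: sweep_cmds <input> <w1> [w2 ...]
sweep_cmds() {
  input="$1"
  shift
  for w in "$@"; do
    echo python inference_codeformer.py -w "$w" --input_path "$input" --output_path "results/w_$w"
  done
}
```

For example, sweep_cmds inputs/TestWhole 0.5 0.7 1.0 prints three commands. The second one writes to results/w_0.7.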

The results will be saved in the results folder.

CodeFormer One-Click Deployment Kit

CodeFormer WebUI (Password niugee51)

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
