This guide describes how to use Claude's advanced natural language understanding capabilities to classify customer support work orders at scale based on customer intent, urgency, prioritization, customer profile, and other factors.

Decide whether to use Claude for work order routing

Here are some key indicators that you should use an LLM (large language model) like Claude instead of traditional machine learning approaches for your classification task:

You have limited labeled training data available

Traditional machine learning processes require large labeled datasets. Claude's pre-trained model can classify work orders effectively with just a few dozen labeled examples, significantly reducing data preparation time and cost.

Your classification categories are likely to change or evolve over time

Once a traditional machine learning approach has been established, changing it is a time-consuming and data-intensive process. As your product or customer needs evolve, Claude can easily adapt to changed category definitions or new categories without extensive relabeling of training data.

You need to handle complex unstructured text inputs

Traditional machine learning models often struggle with unstructured data and require extensive feature engineering. Claude's advanced language understanding allows for accurate classification based on content and context, rather than relying on rigid ontology structures.

Your classification rules are based on semantic understanding

Traditional machine learning approaches often rely on bag-of-words models or simple pattern matching. When categories are defined by conditions rather than examples, Claude excels at understanding and applying the underlying rules.

You need interpretable reasoning for categorical decisions

Many traditional machine learning models provide little insight into their decision-making process. Claude can provide human-readable explanations for its classification decisions, building trust in the automated system and making it easy to adapt the approach if needed.

You want to handle edge cases and ambiguous work orders more effectively

Traditional machine learning systems often struggle with outliers and ambiguous inputs, frequently misclassifying them or defaulting to a "catch-all" category. Claude's natural language processing capabilities allow it to better interpret the context and nuance in support work orders, potentially reducing the number of misrouted or unclassified work orders that require manual intervention.

You need to provide multi-language support without maintaining multiple models

Traditional machine learning approaches typically require maintaining separate models or running extensive translation processes for each supported language. Claude's multilingual capabilities allow it to classify work orders in many languages without separate models or extensive translation, simplifying support for a global customer base.

Build and deploy your LLM support workflow

Understand your current support approach

Before diving into automation, it's critical to first understand your existing work order system. Start by investigating how your support team currently handles work order routing.

The following questions may be considered:

- What criteria are used to determine which SLA/service offering applies?

- Is work order routing used to determine which support level or product specialist the work order should go to?

- Are there any automated rules or workflows already in place? Under what circumstances do they fail?

- How are edge cases or ambiguous work orders handled?

- How do teams prioritize work orders?

The more you know about how humans handle particular cases, the better you will be able to work with Claude on the task.

Define user intent categories

A well-defined list of user intent categories is critical to accurately classifying support work orders with Claude. Claude's ability to route work orders effectively within your system is directly proportional to how well-defined your system's categories are.

Here are some examples of user intent categories and subcategories.

Technical issues

- Hardware issues

- Software bugs

- Compatibility issues

- Performance issues

Account management

- Password reset

- Account access issues

- Billing inquiries

- Subscription changes

Product information

- Feature inquiries

- Product compatibility questions

- Pricing information

- Availability inquiries

User guidance

- How-to questions

- Feature usage help

- Best practices advice

- Troubleshooting guidance

Feedback

- Bug reports

- Feature requests

- General feedback or suggestions

- Complaints

Order related

- Order status inquiries

- Shipping information

- Returns and exchanges

- Order modifications

Service requests

- Installation assistance

- Upgrade requests

- Maintenance scheduling

- Service cancellation

Security concerns

- Data privacy inquiries

- Suspicious activity reports

- Security feature assistance

Compliance and legal

- Regulatory compliance questions

- Terms of service inquiries

- Legal documentation requests

Emergency support

- Critical system failures

- Urgent security issues

- Time-sensitive problems

Training and education

- Product training requests

- Documentation requests

- Webinar and workshop information

Integration and API

- Integration assistance

- API usage questions

- Third-party compatibility checks
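One way to keep category definitions in a single place is to store the taxonomy as data and render it into the prompt. The sketch below assumes a plain Python dict holding a subset of the categories above; the names `INTENT_TAXONOMY`, `render_intent_list`, and `valid_labels`, and the `<category>` tag format, are hypothetical choices for illustration, not part of any API.

```python
# Hypothetical taxonomy holding a subset of the categories listed above.
INTENT_TAXONOMY = {
    "Technical issues": ["Hardware issues", "Software bugs", "Compatibility issues", "Performance issues"],
    "Account management": ["Password reset", "Account access issues", "Billing inquiries", "Subscription changes"],
    "Order related": ["Order status inquiries", "Shipping information", "Returns and exchanges", "Order modifications"],
}


def render_intent_list(taxonomy: dict[str, list[str]]) -> str:
    """Render the taxonomy as a nested, tagged list that can be pasted into a prompt."""
    lines = []
    for category, subcategories in taxonomy.items():
        lines.append(f'<category name="{category}">')
        for sub in subcategories:
            lines.append(f"  <intent>{category} > {sub}</intent>")
        lines.append("</category>")
    return "\n".join(lines)


def valid_labels(taxonomy: dict[str, list[str]]) -> set[str]:
    """Return every 'Category > Subcategory' label, useful for validating model output."""
    return {f"{category} > {sub}" for category, subs in taxonomy.items() for sub in subs}


if __name__ == "__main__":
    print(render_intent_list(INTENT_TAXONOMY))
```

Keeping the labels in one structure means the prompt and any output validation cannot drift apart when a category is renamed.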

In addition to user intent, work order routing and prioritization can be influenced by other factors, such as urgency, customer type, SLAs, or language. Be sure to consider other routing criteria when building an automated routing system.
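As a minimal sketch of combining intent with other routing criteria, the function below maps a classified category plus urgency and customer tier to a queue and priority. The queue names, tier values, and priority rules are hypothetical placeholders; a real routing table would come from the answers to the questions about your current support approach.

```python
from dataclasses import dataclass

# Hypothetical queues keyed by top-level intent category.
QUEUE_BY_CATEGORY = {
    "Technical issues": "tier2-technical",
    "Account management": "billing-and-accounts",
    "Emergency support": "on-call",
}
DEFAULT_QUEUE = "general-support"


@dataclass
class RoutingDecision:
    queue: str
    priority: int  # 1 is the most urgent


def route(category: str, urgent: bool, customer_tier: str) -> RoutingDecision:
    """Combine the classified intent with urgency and customer tier (illustrative rules only)."""
    queue = QUEUE_BY_CATEGORY.get(category, DEFAULT_QUEUE)
    priority = 3
    if customer_tier == "enterprise":  # e.g., customers on a stricter SLA
        priority = 2
    if urgent or category == "Emergency support":
        priority = 1
    return RoutingDecision(queue=queue, priority=priority)


print(route("Technical issues", urgent=False, customer_tier="enterprise"))
# RoutingDecision(queue='tier2-technical', priority=2)
```

Keeping these rules outside the prompt lets Claude focus on intent while deterministic code handles SLA-driven factors.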

Establish success criteria

Work with your support team to define clear success criteria with measurable benchmarks, thresholds, and goals.

Here are some standard criteria and benchmarks when using LLMs for work order routing:

Classification consistency

This metric assesses how consistently Claude classifies similar work orders over time. It is crucial for maintaining routing reliability. Measure it by periodically testing the model with a standard set of inputs, aiming for a consistency rate of 95% or higher.
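A minimal sketch of one way to compute this metric, assuming you have already collected several classification runs per test input (for example, by re-running the same fixed test set on a schedule). Here consistency is defined as the share of runs that agree with the most common label for each input; that definition is an assumption, and stricter variants (such as requiring all runs to agree) are equally reasonable.

```python
from collections import Counter


def consistency_rate(runs_per_input: dict[str, list[str]]) -> float:
    """Fraction of all runs whose label matches the most common label for that input.

    runs_per_input maps a test input ID to the intents returned across repeated runs.
    """
    agreeing = 0
    total = 0
    for labels in runs_per_input.values():
        if not labels:
            continue
        _, modal_count = Counter(labels).most_common(1)[0]
        agreeing += modal_count
        total += len(labels)
    return agreeing / total if total else 0.0


runs = {
    "ticket-1": ["Order tracking", "Order tracking", "Order tracking"],
    "ticket-2": ["Refunds/exchanges", "Order tracking", "Refunds/exchanges"],
}
rate = consistency_rate(runs)
print(f"Consistency: {rate:.1%}")  # 5 of 6 runs agree with their input's most common label
meets_target = rate >= 0.95
```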

Adaptation speed

This metric measures how quickly Claude adapts to new categories or changing work order patterns. This is done by introducing new work order types and measuring the time it takes for the model to achieve satisfactory accuracy (e.g., >90%) in these new categories. The goal is to achieve adaptation within 50-100 sample work orders.

Multilingual handling

This metric evaluates how well Claude routes work orders written in different languages. It measures routing accuracy across languages, with a goal of no more than a 5-10% drop in accuracy for non-primary languages.

Edge case handling

This metric evaluates Claude's performance on unusual or complex work orders. Create a test set of edge cases and aim for a routing accuracy of at least 80% on these challenging inputs.

Bias mitigation

This metric measures the fairness of Claude's routing across different customer groups. Regularly audit routing decisions for potential bias, aiming for consistent routing accuracy (within 2-3%) across all customer groups.
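Both this criterion and the multilingual one compare accuracy across groups. A minimal sketch, assuming each evaluation record carries a group label (a customer segment or a language code) and a correctness flag; that record shape is an assumption, not a format defined elsewhere in this guide.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, bool]]) -> dict[str, float]:
    """records: (group, was_correct) pairs, e.g. ("en", True) or ("smb", False)."""
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for group, was_correct in records:
        totals[group] += 1
        correct[group] += int(was_correct)
    return {group: correct[group] / totals[group] for group in totals}


def max_accuracy_gap(records: list[tuple[str, bool]]) -> float:
    """Largest accuracy difference between any two groups (0.03 means 3 points)."""
    accuracies = accuracy_by_group(records)
    return max(accuracies.values()) - min(accuracies.values()) if accuracies else 0.0


records = (
    [("smb", True)] * 48 + [("smb", False)] * 2
    + [("enterprise", True)] * 47 + [("enterprise", False)] * 3
)
print(accuracy_by_group(records))  # {'smb': 0.96, 'enterprise': 0.94}
print(max_accuracy_gap(records) <= 0.03)  # True: within a 3-point spread
```

For the multilingual criterion, the same functions apply with language codes as the group labels, comparing each language against the primary one.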

Prompt efficiency

This metric evaluates Claude's performance with minimal context, where fewer tokens are required. It measures routing accuracy with varying amounts of context provided, aiming for 90% or higher accuracy when only a work order title and a short description are provided.

Explainability score

This metric assesses the quality and relevance of Claude's explanations for its routing decisions. Human raters can score the explanations on a scale (e.g., 1-5), with the goal of achieving an average score of 4 or higher.

Here are some common success criteria that apply whether or not you use an LLM:

Routing accuracy

Routing accuracy measures whether a work order is assigned to the right team or individual on the first try. It is typically measured as the percentage of correctly assigned work orders out of all work orders. Industry benchmarks often target 90-95% accuracy, though this depends on the complexity of the support structure.

Time to assignment

This metric tracks how quickly a work order is assigned after it is submitted. Faster assignment times generally lead to quicker resolutions and higher customer satisfaction. Best-in-class systems often achieve average assignment times under 5 minutes, and many aim for near-instant routing (which is possible with LLMs).

Rerouting rate

The rerouting rate indicates how often work orders need to be reassigned after the initial routing. A lower rate indicates more accurate initial routing. Aim for a rerouting rate below 10%, with top-performing systems achieving 5% or less.
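Rerouting rate can be computed from each work order's assignment history; escalation rate follows the same pattern with tier changes instead of queue changes. A minimal sketch, assuming you can export, per work order, the ordered list of queues it was assigned to; that export format and the queue names are hypothetical.

```python
def rerouting_rate(assignment_histories: dict[str, list[str]]) -> float:
    """Share of work orders reassigned at least once after the initial assignment.

    assignment_histories maps a work order ID to the queues it was assigned to, in order.
    """
    if not assignment_histories:
        return 0.0
    rerouted = sum(1 for queues in assignment_histories.values() if len(queues) > 1)
    return rerouted / len(assignment_histories)


history = {
    "WO-1001": ["billing-and-accounts"],
    "WO-1002": ["general-support", "tier2-technical"],
    "WO-1003": ["tier2-technical"],
    "WO-1004": ["on-call"],
}
rate = rerouting_rate(history)
print(f"Rerouting rate: {rate:.0%}")  # 1 of 4 work orders was reassigned
print("Meets <10% goal" if rate < 0.10 else "Above 10% goal")
```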

First contact resolution rate

This metric measures the percentage of work orders resolved during the customer's first interaction. Higher rates indicate efficient routing and well-prepared support teams. Industry benchmarks typically range from 70-75%, with top performers achieving 80% or higher.

Average handling time

Average handling time measures how long it takes to resolve a work order from start to finish. Efficient routing can significantly reduce this time. Benchmarks vary widely by industry and complexity, but many organizations aim to keep average handling time under 24 hours for non-critical issues.

Customer satisfaction scores

Often measured through post-interaction surveys, these scores reflect customers' overall satisfaction with the support process. Effective routing contributes to higher satisfaction. Aim for a customer satisfaction (CSAT) score of 90% or higher, with top performers achieving 95% or higher.

Escalation rate

This metric measures how often work orders need to be escalated to a higher tier of support. Lower escalation rates often indicate more accurate initial routing. Aim for an escalation rate below 20%, with best-in-class systems achieving 10% or less.

Employee productivity

This metric measures how many work orders support staff can handle effectively after the routing solution is implemented. Improved routing should increase productivity. It is measured by tracking work orders resolved per agent per day or hour, with a goal of a 10-20% improvement after implementing a new routing system.

Self-service deflection rate

This metric measures the percentage of potential work orders resolved through self-service options before they enter the routing system. Higher deflection rates indicate effective pre-routing triage. Aim for a deflection rate of 20-30%, with top performers reaching 40% or higher.

Cost per work order

This metric calculates the average cost to resolve each support work order. Efficient routing should help reduce this cost over time. While benchmarks vary widely, many organizations aim to reduce cost per work order by 10-15% after implementing an improved routing system.

Selecting the right Claude model

The choice of model depends on the trade-off between cost, accuracy and response time.

Many customers have found claude-3-haiku-20240307 an ideal model for work order routing, as it is the fastest and most cost-effective model in the Claude 3 family while still delivering excellent results. If your classification problem requires deep subject matter expertise or complex reasoning over a large number of intent categories, you may opt for the larger Sonnet model.

Build a strong prompt

Work order routing is a type of classification task. Claude analyzes the content of a support work order and classifies it into predefined categories based on the issue type, urgency, required expertise, or other relevant factors.

Let's write a work order classification prompt. Our initial prompt should contain the contents of the user request and return both the reasoning and the intent.

Try the prompt generator on the Anthropic Console to have Claude write a first draft for you.

Below is an example of a work order routing categorization prompt:

```python
def classify_support_request(ticket_contents):
    # Define the prompt for the classification task
    classification_prompt = f"""You will be acting as a customer support work order classification system. Your task is to analyze customer support requests and output the appropriate classification intent for each request, along with your reasoning.

Here is the customer support request you need to classify:

<request>{ticket_contents}</request>

Please carefully analyze the above request to determine the customer's core intent and needs. Consider what the customer is asking for and what concerns they have.

First, write out your reasoning and analysis of how to classify this request inside <reasoning> tags.

Then, output the appropriate classification label for the request inside <intent> tags. The valid intents are:

Support, Feedback, Complaints
Order tracking
Refunds/exchanges

A request may have ONLY ONE applicable intent. Include only the intent that is most applicable to the request.

As an example, consider the following request:
<request>Hello! I had my high-speed fiber optic internet installed on Saturday and the installer, Kevin, was fantastic! Where can I submit my positive review? Thank you for your help!</request>

Here is an example of how your output should be formatted (for the above example request):
<reasoning>The user wants to leave positive feedback.</reasoning>
<intent>Support, Feedback, Complaints</intent>

Here are a few more examples:

<example 2>
Example 2 Input:
<request>I wanted to write and thank you for the care you gave my family at my father's funeral last weekend. Your staff was very considerate and helpful, which took a lot of weight off our shoulders. The memorial booklet was beautiful. We will never forget the care you gave us, and we are so grateful for how smoothly the whole process went. Thank you again, Amarantha Hill on behalf of the Hill family.</request>

Example 2 Output:
<reasoning>The user left a positive review of their experience.</reasoning>
<intent>Support, Feedback, Complaints</intent>
</example 2>

...

</example 8>

<example 9>
Example 9 Input:
<request>Your website keeps popping up ad windows that block the entire screen. It took me twenty minutes to find the number to call to complain. How can I possibly access my account information with all these pop-ups? Can you help me access my account, since your website is broken? I need to know the address on file.</request>

Example 9 Output:
<reasoning>The user requests help accessing their web account information.</reasoning>
<intent>Support, Feedback, Complaints</intent>
</example 9>

Remember to always include your classification reasoning before your actual intent output. The reasoning should be enclosed in <reasoning> tags and the intent in <intent> tags. Return only the reasoning and the intent.
"""
```

Let's break down the key components of this prompt:

- We use a Python f-string to create the prompt template, allowing ticket_contents to be inserted into the <request> tags.

- We give Claude a clearly defined role as a classification system that carefully analyzes the work order content to determine the customer's core intent and needs.

- We instruct Claude on the proper output format: first its reasoning and analysis inside <reasoning> tags, then the appropriate classification label inside <intent> tags.

- We specify the valid intent categories: "Support, Feedback, Complaints", "Order tracking", and "Refunds/exchanges".

- We include a few examples (a.k.a. few-shot prompting) to illustrate how the output should be formatted, which improves accuracy and consistency.

We want Claude to split the response into separate XML tag sections so that we can use regular expressions to extract reasoning and intent separately. This allows us to create targeted subsequent steps in the work order routing workflow, such as using only the intent to determine who to route the work order to.
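To illustrate how the tagged output can be split apart and how only the intent drives routing, here is a minimal sketch. The `INTENT_TO_QUEUE` mapping, the queue names, and the `manual-triage` fallback are hypothetical, and the sample response is hand-written rather than real model output.

```python
import re

# Hypothetical mapping from the three intents in the prompt to team queues.
INTENT_TO_QUEUE = {
    "Support, Feedback, Complaints": "customer-care",
    "Order tracking": "fulfillment",
    "Refunds/exchanges": "returns",
}


def parse_response(text: str) -> tuple[str, str]:
    """Extract the contents of the <reasoning> and <intent> tags (empty string if missing)."""
    reasoning = re.search(r"<reasoning>(.*?)</reasoning>", text, re.DOTALL)
    intent = re.search(r"<intent>(.*?)</intent>", text, re.DOTALL)
    return (
        reasoning.group(1).strip() if reasoning else "",
        intent.group(1).strip() if intent else "",
    )


def queue_for(intent: str, fallback: str = "manual-triage") -> str:
    """Route on the intent alone; unrecognized or missing intents go to a human."""
    return INTENT_TO_QUEUE.get(intent, fallback)


sample = (
    "<reasoning>The user wants to leave positive feedback.</reasoning>\n"
    "<intent>Support, Feedback, Complaints</intent>"
)
reasoning, intent = parse_response(sample)
print(queue_for(intent))  # customer-care
```

The non-greedy `(.*?)` with `re.DOTALL` lets each tag's contents span multiple lines without swallowing the text that follows.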

Deploy your prompt

It's hard to know how well your prompt works without deploying it in a test production setting and running evaluations.

Let's build the deployment structure. Start by defining the method signature that wraps our call to Claude. We'll take the method we've already begun writing, which has ticket_contents as input, and now return a tuple of reasoning and intent as output. If you have an existing automation using traditional machine learning, follow that method's signature instead.

```python
import re

import anthropic

# Create an instance of the Anthropic API client
client = anthropic.Anthropic()

# Set the default model
DEFAULT_MODEL = "claude-3-haiku-20240307"


def classify_support_request(ticket_contents):
    # Define the prompt for the classification task
    classification_prompt = f"""You will be acting as a customer support work order classification system.
...
...The reasoning should be enclosed in <reasoning> tags and the intent in <intent> tags. Return only the reasoning and the intent.
"""

    # Send the prompt to the API to classify the support request
    message = client.messages.create(
        model=DEFAULT_MODEL,
        max_tokens=500,
        temperature=0,
        messages=[{"role": "user", "content": classification_prompt}],
        stream=False,
    )
    reasoning_and_intent = message.content[0].text

    # Use Python's regular expressions library to extract `reasoning`
    reasoning_match = re.search(
        r"<reasoning>(.*?)</reasoning>", reasoning_and_intent, re.DOTALL
    )
    reasoning = reasoning_match.group(1).strip() if reasoning_match else ""

    # Similarly, extract the `intent`
    intent_match = re.search(r"<intent>(.*?)</intent>", reasoning_and_intent, re.DOTALL)
    intent = intent_match.group(1).strip() if intent_match else ""

    return reasoning, intent
```

This code:

- Imports the Anthropic library and creates a client instance using your API key.

- Defines a classify_support_request function that takes a ticket_contents string.

- Sends the ticket_contents to Claude for classification using the classification_prompt.

- Returns the model's reasoning and intent extracted from the response.

Since we need to wait for the entire reasoning and intent text to be generated before parsing, we set stream=False (the default).

Evaluate your prompt

Prompting often requires testing and optimization before it is production ready. To determine whether your solution is ready, evaluate its performance against the success criteria and thresholds you established earlier.

To run an evaluation, you need test cases to run it on. The rest of this guide assumes you have already developed your test cases.

Build an evaluation function

The example evaluation in this guide measures Claude's performance along three key metrics:

- Accuracy

- Response time

- Cost per classification

Depending on which factors are important to you, you may need to evaluate Claude along other dimensions.

In order to perform the evaluation, first we need to modify the previous script by adding a function that compares the predicted intent with the actual intent and calculates the percentage of correct predictions. We also need to add costing and time measurement functions.

```python
import re

import anthropic

# Create an instance of the Anthropic API client
client = anthropic.Anthropic()

# Set the default model
DEFAULT_MODEL = "claude-3-haiku-20240307"


def classify_support_request(request, actual_intent):
    # Define the prompt for the classification task
    classification_prompt = f"""You will be acting as a customer support ticket classification system.
...
...The reasoning should be enclosed in <reasoning> tags and the intent in <intent> tags. Return only the reasoning and the intent.
"""

    message = client.messages.create(
        model=DEFAULT_MODEL,
        max_tokens=500,
        temperature=0,
        messages=[{"role": "user", "content": classification_prompt}],
    )
    # Usage statistics for the API call, including input and output token counts
    usage = message.usage
    reasoning_and_intent = message.content[0].text

    # Use Python's regular expressions library to extract `reasoning`
    reasoning_match = re.search(
        r"<reasoning>(.*?)</reasoning>", reasoning_and_intent, re.DOTALL
    )
    reasoning = reasoning_match.group(1).strip() if reasoning_match else ""

    # Similarly, extract the `intent`
    intent_match = re.search(r"<intent>(.*?)</intent>", reasoning_and_intent, re.DOTALL)
    intent = intent_match.group(1).strip() if intent_match else ""

    # Check whether the model's prediction is correct
    correct = actual_intent.strip() == intent.strip()

    # Return the reasoning, intent, correctness, and usage
    return reasoning, intent, correct, usage
```

The changes we made to the code are as follows:

- We added the actual_intent from our test cases to the classify_support_request method and set up a comparison to assess whether Claude's intent classification matches our golden intent classification.

- We extracted usage statistics for the API call to calculate cost based on the input and output tokens used.
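The `usage` object returned by the Messages API exposes `input_tokens` and `output_tokens`. A minimal sketch of turning those counts into a per-classification cost follows. The per-million-token prices are placeholders passed in as parameters (substitute the current published pricing for your model), and `SimpleNamespace` objects stand in for real usage objects so the example runs without an API call.

```python
from types import SimpleNamespace


def classification_cost(usage, input_price_per_mtok: float, output_price_per_mtok: float) -> float:
    """Dollar cost of one API call, given prices per million tokens (assumed inputs)."""
    return (
        usage.input_tokens * input_price_per_mtok
        + usage.output_tokens * output_price_per_mtok
    ) / 1_000_000


def average_cost(usages, input_price_per_mtok: float, output_price_per_mtok: float) -> float:
    """Mean cost per classification across an evaluation run."""
    costs = [classification_cost(u, input_price_per_mtok, output_price_per_mtok) for u in usages]
    return sum(costs) / len(costs) if costs else 0.0


# Stand-in usage objects; in the evaluation these come from `message.usage`.
usages = [
    SimpleNamespace(input_tokens=2_000, output_tokens=100),
    SimpleNamespace(input_tokens=1_800, output_tokens=80),
]
# Placeholder prices only; look up the current rates for your model.
print(f"${average_cost(usages, input_price_per_mtok=1.0, output_price_per_mtok=5.0):.6f}")
```

Output tokens are usually priced higher than input tokens, which is one reason the prompt asks for concise reasoning and a `max_tokens` cap.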

Run your evaluation

A well-developed evaluation requires clear thresholds and benchmarks to determine what constitutes a good result. The script above will provide us with runtime values for accuracy, response time, and cost per classification, but we still need clearly set thresholds. Example:

- Accuracy: 95% (100 tests)

- Cost per classification: Average reduction of 50% (100 tests) compared to current routing methods
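A minimal sketch of an evaluation loop that aggregates results and checks them against thresholds like these. It takes the classifier as a parameter so it can wrap the evaluation version of `classify_support_request` (which returns reasoning, intent, correctness, and usage). The stub classifier, flat placeholder price, and `baseline_cost_per_ticket` value are hypothetical, so the example runs offline.

```python
from types import SimpleNamespace
from typing import Callable, Iterable


def run_evaluation(
    test_cases: Iterable[tuple[str, str]],
    classify: Callable,  # e.g., the evaluation version of classify_support_request
    cost_of: Callable,  # maps a usage object to a dollar cost
    baseline_cost_per_ticket: float,  # cost of your current routing method (assumed known)
    accuracy_target: float = 0.95,
    cost_reduction_target: float = 0.50,
) -> dict:
    """Aggregate accuracy and cost over the test set and compare them to the thresholds."""
    correct_count, total_cost, n = 0, 0.0, 0
    for request, actual_intent in test_cases:
        _reasoning, _intent, correct, usage = classify(request, actual_intent)
        correct_count += int(correct)
        total_cost += cost_of(usage)
        n += 1
    accuracy = correct_count / n if n else 0.0
    avg_cost = total_cost / n if n else 0.0
    cost_reduction = 1 - avg_cost / baseline_cost_per_ticket if baseline_cost_per_ticket else 0.0
    return {
        "accuracy": accuracy,
        "avg_cost": avg_cost,
        "cost_reduction": cost_reduction,
        "passes": accuracy >= accuracy_target and cost_reduction >= cost_reduction_target,
    }


# Stub classifier for an offline demo; it always predicts "Order tracking".
def fake_classify(request, actual_intent):
    intent = "Order tracking"
    usage = SimpleNamespace(input_tokens=1_000, output_tokens=50)
    return "stub reasoning", intent, intent == actual_intent, usage


cases = [("Where is my package?", "Order tracking")] * 19 + [("I want a refund", "Refunds/exchanges")]
report = run_evaluation(
    cases,
    fake_classify,
    cost_of=lambda u: (u.input_tokens + u.output_tokens) / 1_000_000,  # placeholder flat price
    baseline_cost_per_ticket=0.01,  # hypothetical cost of the current routing method
)
print(report)
```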

Setting these thresholds lets you quickly and easily tell, at scale and with impartial empirical data, which approach works best for you and what changes may be needed to better meet your requirements.

Improve performance

In complex scenarios, it may help to consider additional strategies beyond standard prompt engineering techniques and guardrail implementation strategies. Here are some common scenarios:

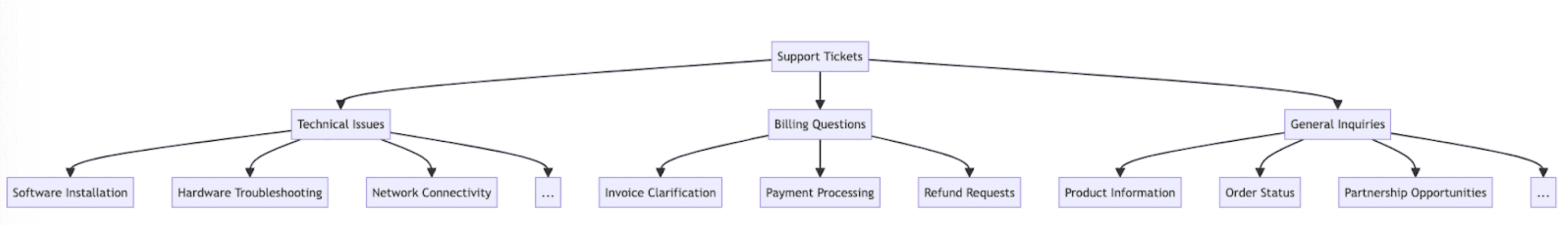

Use a taxonomic hierarchy for cases with 20+ intent categories

As the number of categories grows, so does the number of examples required, which can make the prompt unwieldy. As an alternative, consider implementing a hierarchical classification system that uses a mixture of classifiers.

- Organize your intents in a taxonomic tree structure.

- Create a series of classifiers at each level of the tree, enabling a cascading routing approach.

For example, you may have a top-level classifier that broadly categorizes work orders into "Technical Issues", "Billing Issues", and "General Inquiries". Each of these categories can have its own sub-classifier to further refine the categorization.

- Benefits - Greater nuance and accuracy: You can create different prompts for each parent path, allowing for more targeted and context-specific categorization. This improves accuracy and allows for more granular handling of customer requests.

- Cons - Increased latency: Note that multiple classifiers may result in increased latency, so we recommend implementing this method when using our fastest model, Haiku.
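A minimal sketch of the cascading approach: one call picks a top-level branch, then a second call picks the leaf intent from that branch only. `classify_with_options` is a hypothetical stand-in for a Claude call whose prompt lists only the given options; a keyword-matching stub is used so the control flow runs offline. Each level is a separate call, which is where the extra latency comes from.

```python
from typing import Callable

# Hypothetical two-level taxonomy, using the top-level categories from the example above.
TAXONOMY = {
    "Technical issues": ["Hardware issues", "Software bugs", "Performance issues"],
    "Billing issues": ["Billing inquiries", "Subscription changes"],
    "General inquiries": ["Feature inquiries", "General feedback or suggestions"],
}


def classify_hierarchically(
    ticket: str, classify_with_options: Callable[[str, list[str]], str]
) -> tuple[str, str]:
    """Cascade down the tree: one classifier call per level, each seeing only that level's options."""
    top = classify_with_options(ticket, list(TAXONOMY))
    if top not in TAXONOMY:
        return top, ""  # unexpected top-level label: stop and leave it to a fallback or a human
    return top, classify_with_options(ticket, TAXONOMY[top])


# Offline stand-in for a Claude call: keyword matching against each option.
KEYWORDS = {
    "Billing issues": ["invoice", "charge", "plan"],
    "Billing inquiries": ["invoice", "charge"],
    "Subscription changes": ["plan", "upgrade", "downgrade"],
}


def keyword_stub(ticket: str, options: list[str]) -> str:
    text = ticket.lower()
    for option in options:
        if any(word in text for word in KEYWORDS.get(option, [])):
            return option
    return options[0]  # default to the first option when nothing matches


print(classify_hierarchically("Please downgrade my plan", keyword_stub))
# ('Billing issues', 'Subscription changes')
```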

Use vector databases and similarity search retrieval to handle highly variable work orders

Although providing examples is the most effective way to improve performance, it can be difficult to include enough examples in a single prompt if support requests are highly variable.

In this scenario, you can use a vector database to run similarity searches over a dataset of examples and retrieve the examples most relevant to a given query.

This approach, outlined in detail in our classification recipe, has been shown to improve performance from 71% accuracy to 93% accuracy.
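A minimal sketch of retrieving the most similar labeled examples for a new work order and formatting them as few-shot examples. To stay self-contained it uses a toy bag-of-words vector and cosine similarity in memory; in practice you would substitute a real embedding model and a vector database, neither of which is shown here. The example data is invented for illustration.

```python
import math
import re
from collections import Counter

# Invented labeled examples; a real system would store embeddings in a vector database.
EXAMPLES = [
    ("Where is my package? It has been two weeks.", "Order tracking"),
    ("I need to return these shoes for a larger size.", "Refunds/exchanges"),
    ("Your installer was great, where can I leave a review?", "Support, Feedback, Complaints"),
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k_examples(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k labeled examples most similar to the query."""
    q = embed(query)
    ranked = sorted(EXAMPLES, key=lambda ex: cosine(q, embed(ex[0])), reverse=True)
    return ranked[:k]


def format_examples(examples: list[tuple[str, str]]) -> str:
    """Render retrieved examples in the same tag format the prompt uses."""
    return "\n".join(
        f"<request>{text}</request>\n<intent>{intent}</intent>" for text, intent in examples
    )


print(format_examples(top_k_examples("My package still has not arrived", k=1)))
```

The formatted string would be inserted into the prompt in place of a fixed example list, so each request sees the examples closest to it.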

Account specifically for expected edge cases

Here are some scenarios where Claude may misclassify work orders (there may be others unique to your situation). In these scenarios, consider providing explicit instructions or examples in the prompt on how Claude should handle the edge case:

Customers make implicit requests

Customers often express needs indirectly. For example, "I've been waiting for my package for more than two weeks" may be an indirect request for order status.

- Solution: Provide Claude with some real customer examples of these kinds of requests, along with their underlying intent. You can get even better results by including a classification rationale for particularly nuanced work order intents, so that Claude can better generalize the logic to other work orders.

Claude prioritizes emotion over intent

When customers express dissatisfaction, Claude may prioritize addressing the emotion over resolving the underlying problem.

- Solution: Give Claude directions on when to prioritize customer sentiment and when not to. This can be as simple as "Ignore all customer sentiment. Focus only on analyzing the intent of the customer's request and what information the customer may be asking for."

Multiple issues cause confusion about which to prioritize

When a customer raises multiple issues in a single interaction, Claude may have difficulty identifying the primary concern.

- Solution: Clarify the prioritization of intents so that Claude can better rank the extracted intents and identify the primary concern.
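If you ask Claude to list every intent it detects (for example, one `<intent>` tag per intent), a fixed priority order can then select the primary concern deterministically. A minimal sketch; both the priority list and the multi-intent output format are assumptions, since the single-intent prompt earlier in this guide does not produce multiple tags.

```python
import re

# Hypothetical priority order: earlier entries win when several intents are present.
INTENT_PRIORITY = [
    "Emergency support",
    "Security concerns",
    "Refunds/exchanges",
    "Order tracking",
    "Support, Feedback, Complaints",
]


def primary_intent(model_output: str) -> str:
    """Pick the highest-priority intent among all <intent> tags in the output."""
    found = [m.strip() for m in re.findall(r"<intent>(.*?)</intent>", model_output, re.DOTALL)]
    ranked = [intent for intent in INTENT_PRIORITY if intent in found]
    if ranked:
        return ranked[0]
    return found[0] if found else ""  # unknown intents: keep the first one the model listed


output = "<intent>Support, Feedback, Complaints</intent>\n<intent>Refunds/exchanges</intent>"
print(primary_intent(output))  # Refunds/exchanges
```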

Integrating Claude into your larger support workflow

Proper integration requires deciding how your Claude-based work order routing script fits into the architecture of your larger work order routing system. There are two ways to do this:

- Push-based: The support work order system you use (e.g., Zendesk) triggers your code by sending a webhook event to your routing service, which then classifies the intent and routes the work order.

  - This approach is more web-scalable, but requires you to expose an endpoint.

- Pull-based: Your code pulls the latest work orders on a given schedule and routes them as they are pulled.

  - This approach is easier to implement, but may make unnecessary calls to the support work order system if it polls too often, or may be too slow if it polls too rarely.

For both methods, you need to wrap your scripts in a service. Which method you choose depends on what APIs your support work order system provides.
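A minimal sketch of the pull-based option: poll on a schedule, classify new work orders, and hand each one to a routing step. `fetch_new_work_orders`, `classify`, and `assign` are hypothetical callables you would implement against your support system's API and the `classify_support_request` function; none of them is a real library call. In-memory stubs are used so the loop runs offline.

```python
import time
from typing import Callable, Iterable, Optional


def poll_and_route(
    fetch_new_work_orders: Callable[[float], Iterable[dict]],
    classify: Callable[[str], tuple[str, str]],
    assign: Callable[[str, str], None],
    interval_seconds: float = 60.0,
    max_cycles: Optional[int] = None,
) -> None:
    """Poll on a fixed schedule, classify new work orders, and route each one."""
    last_poll = 0.0
    cycles = 0
    while max_cycles is None or cycles < max_cycles:
        started = time.time()  # record first so work orders created mid-cycle are fetched next time
        for work_order in fetch_new_work_orders(last_poll):
            _reasoning, intent = classify(work_order["description"])
            assign(work_order["id"], intent)
        last_poll = started
        cycles += 1
        if max_cycles is None or cycles < max_cycles:
            time.sleep(interval_seconds)


# Offline demo: in-memory stubs stand in for the support system and for Claude.
pending = [{"id": "WO-1", "description": "Where is my order?"}]
routed = []


def fake_fetch(since: float) -> list[dict]:
    items = list(pending)
    pending.clear()
    return items


poll_and_route(
    fake_fetch,
    classify=lambda text: ("stub reasoning", "Order tracking"),
    assign=lambda wo_id, intent: routed.append((wo_id, intent)),
    interval_seconds=0,
    max_cycles=2,
)
print(routed)  # [('WO-1', 'Order tracking')]
```

The `interval_seconds` value is the knob behind the trade-off described above: shorter intervals mean more calls to the support system, longer ones mean slower routing.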