Claude Common Use Case Guide: Customer Support Agents

This guide describes how to use Claude's advanced conversational capabilities to handle customer inquiries in real time, provide 24/7 support, reduce wait times, and manage high volumes of support requests with accurate responses and proactive interactions.

Before building with Claude

Decide whether to use Claude for support chat

Here are some key indicators that you should use an LLM like Claude to automate some of your customer support processes:

Large number of repetitive queries

Claude excels at efficiently handling large numbers of similar questions, freeing human agents to tackle more complex issues.

The need for rapid information integration

Claude is able to quickly retrieve, process, and integrate information from a vast knowledge base, whereas a human agent may need time to conduct research or consult multiple sources.

24/7 availability requirements

Claude can provide 24/7 support without tiring, whereas providing continuous coverage with human agents can be both expensive and challenging.

Rapid scaling during peak periods

Claude can handle sudden increases in query volume without having to hire and train additional staff.

Consistent brand voice

You can instruct Claude to consistently represent your brand's tone and values, while human agents may have different communication styles.

Some factors to consider when choosing Claude over other LLMs:

- You prioritize natural and nuanced conversations: Claude's fine-grained language comprehension allows for more natural, context-aware conversations that are closer to human communication than chats with other LLMs.

- You often receive complex and open-ended questions: Claude can handle a wide range of topics and inquiries without generating canned responses or requiring you to write out extensive permutations of how users might phrase things.

- You need scalable multilingual support: Claude's multilingual capabilities make it possible to converse in more than 200 languages without having to set up a separate chatbot for each supported language or go through an extensive translation process.

Defining the ideal chat interaction

Outline the ideal customer interaction to define how and when you expect your customers to interact with Claude. This overview will help determine the technical requirements for your solution.

Below is a sample chat interaction for auto insurance customer support:

- Customer: Initiates the support chat experience

- Claude: Warmly greets the customer and starts the conversation

- Customer: Asks about insurance for their new electric car

- Claude: Provides information about electric vehicle insurance

- Customer: Asks questions related to the unique needs of electric vehicle insurance

- Claude: Responds with accurate, useful answers and links to relevant sources

- Customer: Asks off-topic questions unrelated to insurance or cars

- Claude: Clarifies that it does not discuss unrelated topics and steers the customer back to auto insurance

- Customer: Expresses interest in an insurance quote

- Claude: Asks a series of questions to determine an appropriate quote, adapting them to the customer's answers

- Claude: Sends a request to the Quote Generation API tool with the necessary information gathered from the customer

- Claude: Receives the response from the API tool, synthesizes it into a natural answer, and presents the quote to the customer

- Customer: Asks follow-up questions

- Claude: Answers follow-up questions as needed

- Claude: Guides the customer to the next steps in the insurance process and closes the conversation

When you map out your own use case, you may find it helpful to write out the actual words of the dialogue so that you also get a feel for Claude's ideal tone, response length, and level of detail.

Breaking down interactions into discrete tasks

Customer support chat consists of several different tasks, such as answering questions, retrieving information, and taking action on requests, all wrapped into a single customer interaction. Before you start building, break the ideal customer interaction down into every task Claude needs to perform. This ensures that you can prompt and evaluate Claude on each task, and it gives you a good sense of the range of interactions to account for when writing test cases.

Some customers find it helpful to visualize this as an interaction flowchart showing the possible branching points of the conversation depending on user requests.

Here are the key tasks involved in the example insurance interaction above:

- Greeting and general guidance

  - Warmly greet the customer and start the conversation

  - Provide general information about the company and the interaction

- Product information

  - Provide information about EV coverage. This requires that Claude have the necessary information in its context, which may mean a RAG integration is needed.

  - Answer questions related to the unique needs of EV insurance

  - Answer follow-up questions about the quote or insurance details

  - Offer links to relevant sources where appropriate

- Conversation management

  - Stay on topic (auto insurance)

  - Redirect off-topic questions back to relevant subjects

- Quote generation

  - Ask appropriate questions to determine quote eligibility

  - Adapt questions based on customer responses

  - Submit the collected information to the Quote Generation API

  - Present the generated quote to the customer

Establishing success criteria

Work with your support team to define clear success criteria, and write detailed evaluations with measurable benchmarks and targets.

The following are the criteria and benchmarks for evaluating Claude's success in performing the defined tasks:

Query comprehension accuracy

This metric assesses how accurately Claude understands customer inquiries across a variety of topics. Measure it by reviewing a sample of conversations and assessing whether Claude correctly identifies customer intent, critical next steps, what a successful resolution looks like, and so on. Aim for a comprehension accuracy of 95% or higher.

Response relevance

This assesses how well Claude's responses address the customer's specific question or issue. Evaluate a set of conversations and rate the relevance of each response (using LLM-based grading to evaluate at scale). Aim for a relevance score of 90% or higher.

Response accuracy

Assess the accuracy of the general company and product information Claude gives the customer, based on the information provided to Claude in its context. Aim for 100% accuracy on this introductory information.

Citation relevance

Track how often links or sources are offered and how relevant they are. Aim to provide relevant sources in 80% of interactions where additional information would be helpful.

Topic adherence

Measure how well Claude stays on topic during the conversation, for example on auto insurance in our example implementation. Aim for 95% of responses to be directly related to auto insurance or the customer's specific query.

Content generation effectiveness

Measure how well Claude determines when to generate informational content and how relevant that content is. For example, in our implementation, we would assess whether Claude understands when to generate a quote and how accurate that quote is. Aim for 100% accuracy, since this is critical information for successful customer interactions.

Escalation efficiency

This measures Claude's ability to recognize queries that need human intervention and escalate them when appropriate. Track the percentage of conversations that were correctly escalated versus those that should have been escalated but were not. Aim for an escalation accuracy of 95% or higher.
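Escalation accuracy reduces to a simple ratio over a labeled sample. As a minimal sketch, assuming a reviewer has tagged each sampled conversation with whether it should have been escalated (the `LabeledConversation` fields are hypothetical, not a prescribed log format), the accuracy and both kinds of mistakes could be computed like this:

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LabeledConversation:
    # Hypothetical labels: a reviewer's judgment vs. what the bot actually did
    should_escalate: bool
    was_escalated: bool


def escalation_report(convs: List[LabeledConversation]) -> Dict[str, float]:
    """Escalation accuracy plus counts of missed and unnecessary escalations."""
    if not convs:
        raise ValueError("need at least one labeled conversation")
    correct = sum(c.should_escalate == c.was_escalated for c in convs)
    missed = sum(c.should_escalate and not c.was_escalated for c in convs)
    unnecessary = sum(c.was_escalated and not c.should_escalate for c in convs)
    return {"accuracy": correct / len(convs), "missed": missed, "unnecessary": unnecessary}


sample = [
    LabeledConversation(should_escalate=True, was_escalated=True),
    LabeledConversation(should_escalate=False, was_escalated=False),
    LabeledConversation(should_escalate=True, was_escalated=False),  # a missed escalation
    LabeledConversation(should_escalate=False, was_escalated=False),
]
report = escalation_report(sample)
print(report)  # {'accuracy': 0.75, 'missed': 1, 'unnecessary': 0}
print("meets 95% target:", report["accuracy"] >= 0.95)  # False
```

Reporting missed and unnecessary escalations separately matters because they have different costs: a missed escalation leaves a customer stuck, while an unnecessary one only costs agent time.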

The following are criteria and benchmarks for assessing the business impact of using Claude for support:

Sentiment maintenance

Assess Claude's ability to maintain or improve customer sentiment over the course of the conversation. Use a sentiment analysis tool to measure sentiment at the beginning and end of each conversation. Aim for sentiment to be maintained or improved in 90% of interactions.

Deflection rate

The percentage of customer inquiries the chatbot handles successfully without human intervention. A typical target is a 70-80% deflection rate, depending on the complexity of the inquiries.

Customer satisfaction score

Measures how satisfied customers are with their chatbot interactions, usually collected through a post-interaction survey. Aim for a customer satisfaction score of 4 out of 5 or higher.

Average handle time

The average time it takes the chatbot to resolve an inquiry. This varies widely with the complexity of the issue, but the overall goal is an average handle time shorter than that of human agents.
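The business metrics above can likewise be computed from per-conversation logs. The sketch below assumes hypothetical fields (a resolution flag, sentiment scores on an arbitrary -1 to 1 scale from whatever sentiment tool you use, an optional 1-5 survey score, and handle time in seconds); it illustrates the arithmetic rather than a required schema.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ConversationOutcome:
    # Hypothetical per-conversation fields pulled from logs and surveys
    resolved_without_human: bool
    sentiment_start: float  # e.g. -1.0 (negative) to 1.0 (positive)
    sentiment_end: float
    csat: Optional[int]     # 1-5 survey score, None if the customer skipped the survey
    handle_seconds: float


def share(flags) -> float:
    """Fraction of True values in an iterable of booleans."""
    flags = list(flags)
    return sum(flags) / len(flags)


def business_metrics(outcomes: List[ConversationOutcome]) -> dict:
    surveyed = [o.csat for o in outcomes if o.csat is not None]
    return {
        "deflection_rate": share(o.resolved_without_human for o in outcomes),
        "sentiment_maintained": share(o.sentiment_end >= o.sentiment_start for o in outcomes),
        "avg_csat": sum(surveyed) / len(surveyed) if surveyed else None,
        "avg_handle_seconds": sum(o.handle_seconds for o in outcomes) / len(outcomes),
    }


outcomes = [
    ConversationOutcome(True, 0.1, 0.5, 5, 120.0),
    ConversationOutcome(True, -0.2, 0.3, 4, 200.0),
    ConversationOutcome(False, 0.0, -0.4, None, 600.0),  # escalated, sentiment dropped
    ConversationOutcome(True, 0.4, 0.4, 4, 80.0),
]
metrics = business_metrics(outcomes)
print(metrics)
```

Note that CSAT is averaged only over customers who answered the survey, while the other metrics use every conversation; mixing the two denominators is a common source of misleading dashboards.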

How to implement Claude as a customer service agent

Selecting the right Claude model

The choice of model depends on the trade-off between cost, accuracy and response time.

For customer support chat, claude-3-5-sonnet-20240620 is well suited to balancing intelligence, latency, and cost. However, if your conversation flow involves multiple prompts (including RAG, tool use, and/or long-context prompts), claude-3-haiku-20240307 may be better suited for optimizing latency.
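The chatbot code later in this guide imports a `MODEL` constant from config.py. One way to define it is sketched below with a hypothetical `pick_model` helper that encodes the rule of thumb above: Sonnet by default, Haiku once a single customer message fans out into several model calls. The cut-off value is an arbitrary assumption to tune against measured latency.

```python
# config.py (excerpt): a hypothetical helper encoding the rule of thumb above
SONNET = "claude-3-5-sonnet-20240620"
HAIKU = "claude-3-haiku-20240307"


def pick_model(calls_per_turn: int, max_sonnet_calls: int = 2) -> str:
    """Pick a model based on how many Claude calls one customer message triggers.

    calls_per_turn counts every call made while answering a single message
    (e.g. retrieval query rewriting, tool-use follow-ups, intent classification).
    max_sonnet_calls is an arbitrary cut-off; tune it against measured latency.
    """
    if calls_per_turn < 1:
        raise ValueError("calls_per_turn must be at least 1")
    return SONNET if calls_per_turn <= max_sonnet_calls else HAIKU


# The quote flow in this guide makes at most two calls per turn
# (the first reply plus the follow-up after the tool result), so it stays on Sonnet.
MODEL = pick_model(calls_per_turn=2)
```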

Building a strong prompt

Using Claude for customer support requires giving Claude enough direction and context to respond appropriately, while leaving it enough flexibility to handle a wide range of customer inquiries.

Let's start by writing the elements of a strong prompt, beginning with the system prompt:

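IDENTITY = """You are Eva, a friendly and knowledgeable AI assistant for Acme Insurance Company. Your role is to warmly welcome customers and provide information on Acme's insurance offerings, which include car insurance and electric car insurance. You can also help customers get quotes for their insurance needs."""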

While you may be tempted to put all of your information in the system prompt as a way to separate instructions from the user conversation, Claude actually works best with the bulk of its prompt content written in the first User turn (the only exception being role prompting). Read more at Giving Claude a role with a system prompt.
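A minimal sketch of that layout, mirroring how the Streamlit app later in this guide seeds its conversation: the system prompt carries only the role (`IDENTITY`), while the bulk of the instructions goes into the first user turn, followed by a short assistant acknowledgement. The `build_request` helper and the abbreviated strings are illustrative only.

```python
def build_request(identity: str, instructions: str, user_message: str) -> dict:
    """Assemble Messages API arguments: role in `system`, everything else in turn one."""
    return {
        "system": identity,  # role prompt only
        "messages": [
            {"role": "user", "content": instructions},       # bulk of the prompt
            {"role": "assistant", "content": "Understood"},  # short acknowledgement
            {"role": "user", "content": user_message},       # the customer's actual question
        ],
    }


request = build_request(
    "You are Eva, a friendly AI assistant for Acme Insurance Company.",
    "<static_context>...</static_context> Please adhere to the following guardrails: ...",
    "What are your business hours?",
)
print([m["role"] for m in request["messages"]])
```

With the Anthropic Python SDK, the resulting dict can be splatted into a call such as `client.messages.create(model=MODEL, max_tokens=1024, **request)`, since `system` and `messages` are both keyword arguments of that method.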

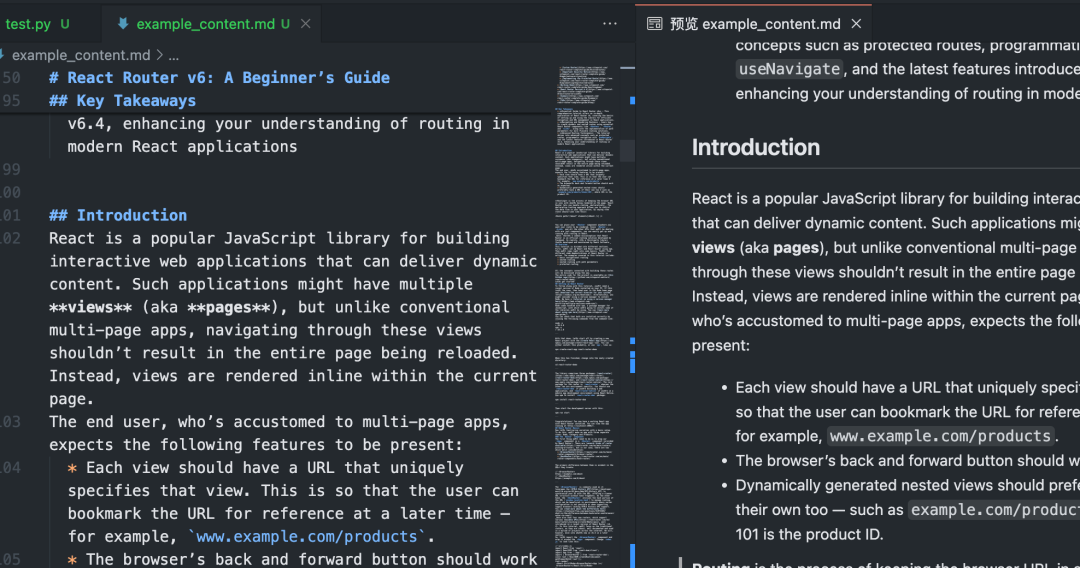

It's best to break complex prompts into subsections and write them one part at a time. For each task, you may get better results by working step by step to define the parts of the prompt Claude needs to do that task well. For this auto insurance customer support example, we'll write the prompt piece by piece, starting with the 'Greeting and general guidance' task. This also makes debugging easier, since you can adjust individual parts of the overall prompt more quickly.

We'll put all of these pieces in a file called config.py.

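STATIC_GREETINGS_AND_GENERAL = """
<static_context>
Acme Auto Insurance: Your Trusted Companion on the Road

About:
At Acme Insurance, we understand that your vehicle is more than just a mode of transportation; it's your ticket to life's adventures. Since 1985, we've been crafting auto insurance policies that give drivers the confidence to explore, commute, and travel.
Whether you're navigating city streets or embarking on a cross-country road trip, Acme is there to protect you and your vehicle.
Our innovative auto insurance policies are designed to adapt to your unique needs, covering everything from fender benders to major collisions.
With Acme's award-winning customer service and swift claims resolution, you can focus on the joy of driving while we handle the rest.
We're not just an insurance provider; we're your co-pilot on life's journeys.
Choose Acme Auto Insurance and experience the peace of mind that comes with superior coverage and genuine care. Because at Acme, we don't just insure your car; we fuel your adventures on the open road.

Note: We also offer specialized coverage for electric vehicles, ensuring that drivers of all types of cars can benefit from our protection.

Acme Insurance offers the following products:
- Car insurance
- Electric car insurance
- Two-wheeler insurance

Business hours: Monday-Friday, 9 AM - 5 PM EST
Customer service number: 1-800-123-4567
</static_context>
"""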

We'll do the same for our car insurance and electric car insurance information.

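STATIC_CAR_INSURANCE = """
<static_context>
Car Insurance Coverage:
Acme's car insurance policies typically cover:
1. Liability coverage: Pays for bodily injury and property damage you cause to others.
2. Collision coverage: Pays for damage to your car in an accident.
3. Comprehensive coverage: Pays for damage to your car from non-collision incidents.
4. Medical payments coverage: Pays for medical expenses after an accident.
5. Uninsured/underinsured motorist coverage: Protects you if you're hit by a driver with insufficient insurance.

Optional coverages include:
- Rental car reimbursement
- Roadside assistance
- New car replacement
</static_context>
"""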

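STATIC_ELECTRIC_CAR_INSURANCE = """
<static_context>
Electric Car Insurance:
Our specialized electric car insurance goes beyond traditional auto coverage, offering tailored protection for your high-tech investment. In addition to standard coverages, we offer:
- Safeguarding expensive battery systems
- Covering home charging equipment
- Adapting to the rapidly evolving world of electric vehicles

Whether you're driving a Rivian, Nissan Leaf, Chevrolet Bolt, or any other electric vehicle, our knowledgeable agents are ready to craft a policy that lets you enjoy every electron-powered mile with peace of mind.
</static_context>
"""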

Now that we have our static content, let's add at least 4-5 examples of "good" interactions to guide Claude's responses. These examples should represent your ideal customer interactions and can include guardrails, tool calls, and so on.

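EXAMPLES = """
Here are a few examples of how you can interact with customers:

<example 1>
H: Hi, do you offer commercial insurance for small businesses?

A: Ah! Unfortunately, we don't offer commercial insurance at this time.
However, we do provide a range of personal insurance products, including car insurance and electric car insurance.
Would you like to know more about these offerings?
</example 1>

<example 2>
H: Hi, what are your business hours?

A: Our business hours are Monday to Friday, 9 AM to 5 PM EST.
If you have any questions or need assistance, feel free to reach out during those times.
Is there anything else I can help you with today?
</example 2>

<example 3>
H: I'm shopping for car insurance. What does Acme offer?

A: Great choice! Acme's car insurance policies typically include:
- Liability coverage: Protects you if you're at fault in an accident
- Collision coverage: Pays for damage to your car in an accident
- Comprehensive coverage: Covers non-collision incidents like theft or vandalism
- Medical payments coverage: Pays for medical expenses after an accident
- Uninsured/underinsured motorist coverage: Protects you from drivers with insufficient insurance

We also offer optional coverages like rental car reimbursement, roadside assistance, and new car replacement.
Would you like more details on any of these coverages, or do you have specific questions about insuring your vehicle?
</example 3>

<example 4>
H: I'd like to hear about your electric car insurance.

A: Certainly! Our electric car insurance is specially designed to meet the unique needs of electric vehicle owners.
In addition to all the standard coverages we offer for regular cars, our electric car policies include:
- Battery coverage: Protection against damage to or degradation of your vehicle's expensive battery system
- Tech package: Covers repair or replacement of advanced electronics and onboard computer systems
- Charging station coverage: Protects both home and portable charging equipment
- Cybersecurity protection: Coverage against hacking or digital threats
- Specialized roadside assistance: Includes flatbed towing to protect the vehicle's undercarriage

We cover a wide range of electric vehicles, including popular models from Tesla, Rivian, Nissan, Chevrolet, and more.
Is there a specific electric vehicle you're looking to insure, or would you like more details on any of these coverages?
</example 4>

<example 5>
H: I'd like to get a quote for my car insurance.

A: Certainly! I'd be happy to help you get a car insurance quote.
To provide an accurate quote, I'll need to collect some information about your vehicle and the primary driver.
Let's start with the basics:
1. What is the make and model of your vehicle?
2. What year was it manufactured?
3. Approximately how many miles has it been driven?
4. What is the age of the primary driver?

Once you provide this information, I'll use our quoting tool to generate a personalized insurance quote for you.
</example 5>
"""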

You'll also want to include any important instructions outlining the do's and don'ts of how Claude should interact with customers. These may be drawn from brand guardrails or support policies.

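ADDITIONAL_GUARDRAILS = """Please adhere to the following guardrails:
1. Only provide information about insurance types listed in our offerings.
2. If asked about a type of insurance we don't offer, politely state that we don't provide that service.
3. Do not speculate about future product offerings or company plans.
4. Don't make promises or enter into agreements you're not authorized to make. You only provide information and guidance.
5. Do not mention any competitor's products or services.
"""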

Now let's combine all of these sections into a single string to use as our prompt.

TASK_SPECIFIC_INSTRUCTIONS = ' '.join([
    STATIC_GREETINGS_AND_GENERAL,
    STATIC_CAR_INSURANCE,
    STATIC_ELECTRIC_CAR_INSURANCE,
    EXAMPLES,
    ADDITIONAL_GUARDRAILS,
])

Adding dynamic and agentic capabilities with tool use

Claude can take actions and retrieve information dynamically using client-side tool use. Start by listing any external tools or APIs the prompt should use.

In this example, we'll start with a tool for calculating quotes.

Note that the tool does not perform the actual calculation; it only signals to the application that a tool should be used, along with the arguments Claude specified.

Example insurance quote calculator:

TOOLS = [{
    "name": "get_quote",
    "description": "Calculate the insurance quote based on user input. The returned value is the monthly premium.",
    "input_schema": {
        "type": "object",
        "properties": {
            "make": {"type": "string", "description": "The make of the vehicle."},
            "model": {"type": "string", "description": "The model of the vehicle."},
            "year": {"type": "integer", "description": "The year the vehicle was manufactured."},
            "mileage": {"type": "integer", "description": "The mileage on the vehicle."},
            "driver_age": {"type": "integer", "description": "The age of the primary driver."}
        },
        "required": ["make", "model", "year", "mileage", "driver_age"]
    }
}]

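import time

def get_quote(make, model, year, mileage, driver_age):
    """Returns the monthly premium in USD"""
    # You can call an HTTP endpoint or a database to get the quote.
    # Here, we simulate a 1-second delay and return a fixed quote of 100.
    time.sleep(1)
    return 100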
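Because the tool definition only signals intent, the application has to map each `tool_use` block Claude returns onto a local function and send the result back as a `tool_result` block. The ChatBot class in the next section does this with an if-statement; as an alternative sketch, a small dispatch table scales more cleanly once you add tools. The `run_tool` helper and the stand-in `get_quote` are illustrative, and `SimpleNamespace` fakes the `id`, `name`, and `input` attributes of the SDK's tool-use block so the sketch runs offline.

```python
from types import SimpleNamespace


def get_quote(make, model, year, mileage, driver_age):
    # Stand-in for the quote function above: a fixed monthly premium in USD
    return 100


# Map each tool name Claude may request to the local function that implements it
TOOL_HANDLERS = {"get_quote": get_quote}


def run_tool(block) -> dict:
    """Execute one tool_use block and wrap the output as a tool_result content block."""
    handler = TOOL_HANDLERS.get(block.name)
    if handler is None:
        # Report the problem back to Claude instead of crashing the app
        return {"type": "tool_result", "tool_use_id": block.id,
                "content": f"Unknown tool: {block.name}", "is_error": True}
    output = handler(**block.input)
    return {"type": "tool_result", "tool_use_id": block.id, "content": str(output)}


# Offline stand-in for the SDK's tool_use block (it exposes .id, .name and .input)
fake_block = SimpleNamespace(
    type="tool_use", id="toolu_01", name="get_quote",
    input={"make": "Tesla", "model": "Model 3", "year": 2022, "mileage": 12000, "driver_age": 35},
)
print(run_tool(fake_block))
```

The returned dict goes into the `content` list of the next `user` message, exactly as the ChatBot class does with its hand-built `tool_result`.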

Deploying your prompts

It's hard to know how well your prompt works without deploying it in a test production environment and running evaluations. So let's build a small application using our prompt, the Anthropic SDK, and Streamlit for the user interface.

In a file named chatbot.py, start by setting up the ChatBot class, which encapsulates the interactions with the Anthropic SDK.

The class should have two main methods: generate_message and process_user_input.

from anthropic import Anthropic
# MODEL is expected to be defined in config.py, e.g. MODEL = "claude-3-5-sonnet-20240620"
from config import IDENTITY, TOOLS, MODEL, get_quote
from dotenv import load_dotenv

load_dotenv()  # loads ANTHROPIC_API_KEY from a .env file


class ChatBot:
    def __init__(self, session_state):
        self.anthropic = Anthropic()
        self.session_state = session_state

    def generate_message(
        self,
        messages,
        max_tokens,
    ):
        try:
            response = self.anthropic.messages.create(
                model=MODEL,
                system=IDENTITY,
                max_tokens=max_tokens,
                messages=messages,
                tools=TOOLS,
            )
            return response
        except Exception as e:
            # Return a plain dict so callers can tell failures apart from Message objects
            return {"error": str(e)}

    def process_user_input(self, user_input):
        self.session_state.messages.append({"role": "user", "content": user_input})

        response_message = self.generate_message(
            messages=self.session_state.messages,
            max_tokens=2048,
        )

        if isinstance(response_message, dict) and "error" in response_message:
            return f"An error occurred: {response_message['error']}"

        if response_message.content[-1].type == "tool_use":
            # Claude asked for a tool: run it locally and send the result back
            tool_use = response_message.content[-1]
            func_name = tool_use.name
            func_params = tool_use.input
            tool_use_id = tool_use.id

            result = self.handle_tool_use(func_name, func_params)
            self.session_state.messages.append(
                {"role": "assistant", "content": response_message.content}
            )
            self.session_state.messages.append({
                "role": "user",
                "content": [{
                    "type": "tool_result",
                    "tool_use_id": tool_use_id,
                    "content": f"{result}",
                }],
            })

            # Second call: Claude turns the tool result into a natural-language answer
            follow_up_response = self.generate_message(
                messages=self.session_state.messages,
                max_tokens=2048,
            )

            if isinstance(follow_up_response, dict) and "error" in follow_up_response:
                return f"An error occurred: {follow_up_response['error']}"

            response_text = follow_up_response.content[0].text
            self.session_state.messages.append(
                {"role": "assistant", "content": response_text}
            )
            return response_text

        elif response_message.content[0].type == "text":
            response_text = response_message.content[0].text
            self.session_state.messages.append(
                {"role": "assistant", "content": response_text}
            )
            return response_text

        else:
            raise Exception("An error occurred: unexpected response type")

    def handle_tool_use(self, func_name, func_params):
        if func_name == "get_quote":
            premium = get_quote(**func_params)
            return f"Quote generated: ${premium:.2f} per month"

        raise Exception("An unexpected tool was used")

Building the User Interface

Test-deploy this code with Streamlit using a main method. This main() function sets up a Streamlit-based chat interface.

We'll do this in a file called app.py.

import streamlit as st
from chatbot import ChatBot
from config import TASK_SPECIFIC_INSTRUCTIONS


def main():
    st.title("Chat with Eva, Acme Insurance Company's Assistant🤖")

    if "messages" not in st.session_state:
        # Seed the conversation with the task instructions in the first user turn
        st.session_state.messages = [
            {'role': "user", "content": TASK_SPECIFIC_INSTRUCTIONS},
            {'role': "assistant", "content": "Understood"},
        ]

    chatbot = ChatBot(st.session_state)

    # Display user and assistant messages, skipping the first two (the seeded instructions)
    for message in st.session_state.messages[2:]:
        # Skip tool-use content blocks; only plain-text messages are rendered
        if isinstance(message["content"], str):
            with st.chat_message(message["role"]):
                st.markdown(message["content"])

    if user_msg := st.chat_input("Type your message here..."):
        st.chat_message("user").markdown(user_msg)

        with st.chat_message("assistant"):
            with st.spinner("Eva is thinking..."):
                response_placeholder = st.empty()
                full_response = chatbot.process_user_input(user_msg)
                response_placeholder.markdown(full_response)


if __name__ == "__main__":
    main()

Use the following command to run the program:

streamlit run app.py

Evaluate your prompts

Prompts often require testing and optimization before they are production ready. To determine whether your solution is ready, evaluate the chatbot's performance using a systematic process that combines quantitative and qualitative methods. Creating a strong empirical evaluation based on your defined success criteria will allow you to optimize your prompts.

The Anthropic Console now features an evaluation tool that lets you test your prompts under various scenarios.
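Alongside the Console's evaluation tool, a small scripted harness can catch regressions whenever you edit the prompt. This sketch uses plain substring checks as a stand-in for richer grading (such as the LLM-based relevance scoring described earlier); the `EvalCase` fields and `fake_bot` are hypothetical, and in practice `respond` would wrap a call into your chatbot with a fresh conversation per case.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class EvalCase:
    user_input: str
    must_include: List[str] = field(default_factory=list)      # phrases a good answer contains
    must_not_include: List[str] = field(default_factory=list)  # e.g. competitor names, promises


def run_eval(cases: List[EvalCase], respond: Callable[[str], str]) -> Tuple[float, List[Tuple[str, bool]]]:
    """Run every case through `respond` and return (pass rate, per-case results)."""
    results = []
    for case in cases:
        answer = respond(case.user_input).lower()
        passed = all(p.lower() in answer for p in case.must_include) and not any(
            p.lower() in answer for p in case.must_not_include
        )
        results.append((case.user_input, passed))
    return sum(ok for _, ok in results) / len(results), results


def fake_bot(text: str) -> str:
    # Hypothetical canned replies so the harness runs without an API key
    if "hours" in text.lower():
        return "Our business hours are Monday to Friday, 9 AM to 5 PM EST."
    return "Unfortunately, we don't offer commercial insurance."


cases = [
    EvalCase("What are your business hours?", must_include=["9 AM", "5 PM"]),
    EvalCase("Do you insure small businesses?", must_include=["don't offer"],
             must_not_include=["we offer commercial"]),
    EvalCase("Do you cover boats?", must_include=["boat"]),  # the fake bot fails this one
]
rate, results = run_eval(cases, fake_bot)
print(f"pass rate: {rate:.0%}")
for question, ok in results:
    print("PASS" if ok else "FAIL", question)
```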

Improve performance

In complex scenarios, it may help to consider strategies for improving performance beyond standard prompt engineering and guardrail implementation. Here are some common scenarios:

Reducing Long Context Latency with RAG

When dealing with large amounts of static and dynamic context, including all of the information in the prompt can lead to high costs, slower response times, and hitting context window limits. In this scenario, implementing Retrieval-Augmented Generation (RAG) techniques can significantly improve performance and efficiency.

By using embedding models like Voyage to convert information into vector representations, you can build a more scalable and responsive system. This approach retrieves relevant information dynamically based on the current query, instead of including all possible context in every prompt.

Implementing RAG for support use cases has been shown to improve accuracy, reduce response times, and lower API costs in systems with extensive context requirements.
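To make the retrieval step concrete, here is a minimal sketch of ranking knowledge-base chunks by cosine similarity and injecting only the top matches into the prompt. The three-dimensional vectors are hand-made toy values standing in for real embedding-model output (for example from Voyage), and `retrieve`/`build_context` are hypothetical helpers; a production system would typically use a vector database rather than sorting a list.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, index: List[Tuple[str, List[float]]], k: int = 2) -> List[str]:
    """Return the k chunks whose embeddings are most similar to the query embedding."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_context(chunks: List[str]) -> str:
    # Only the retrieved chunks go into the prompt, not the whole knowledge base
    return "<static_context>\n" + "\n\n".join(chunks) + "\n</static_context>"


# Toy 3-d "embeddings"; a real system would get these from an embedding model such as Voyage
index = [
    ("EV policies cover battery systems and home charging equipment.", [0.9, 0.1, 0.0]),
    ("Business hours: Monday-Friday, 9 AM - 5 PM EST.", [0.0, 0.2, 0.9]),
    ("Collision coverage pays for damage to your car in an accident.", [0.5, 0.8, 0.1]),
]
query_vec = [1.0, 0.0, 0.0]  # pretend embedding of "Is my EV battery covered?"
top_chunks = retrieve(query_vec, index, k=2)
print(build_context(top_chunks))
```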

Integrating real-time data with tool use

When handling queries that require real-time information, such as account balances or policy details, embedding-based RAG approaches are not sufficient. Instead, you can use tools to significantly improve your chatbot's ability to give accurate, real-time answers. For example, you can use tools to look up customer information, retrieve order details, and cancel orders on the customer's behalf.

This approach, outlined in our Tool Use: Customer Service Agent recipe, lets you seamlessly integrate live data into Claude's responses and deliver a more personalized and efficient customer experience.
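A hedged sketch of the tool set that paragraph describes. The tool names, schemas, and in-memory `CUSTOMERS`/`ORDERS` tables are hypothetical stand-ins for your CRM and order APIs; the point is the shape: each tool gets a JSON schema for Claude and a Python handler the application runs when Claude asks for it.

```python
import json

# Hypothetical in-memory stand-ins for a CRM and an order system
CUSTOMERS = {"C1": {"name": "Jane Doe", "email": "jane@example.com"}}
ORDERS = {"O1": {"customer_id": "C1", "status": "processing"},
          "O2": {"customer_id": "C1", "status": "shipped"}}


def _tool(name: str, description: str, param: str) -> dict:
    """Build a tool definition with a single required string parameter."""
    return {
        "name": name,
        "description": description,
        "input_schema": {
            "type": "object",
            "properties": {param: {"type": "string", "description": f"The {param.replace('_', ' ')}."}},
            "required": [param],
        },
    }


TOOLS = [
    _tool("get_customer_info", "Look up a customer's profile by customer ID.", "customer_id"),
    _tool("get_order_details", "Get the current status of an order.", "order_id"),
    _tool("cancel_order", "Cancel an order that has not shipped yet.", "order_id"),
]


def get_customer_info(customer_id):
    return CUSTOMERS.get(customer_id, {"error": "customer not found"})


def get_order_details(order_id):
    return ORDERS.get(order_id, {"error": "order not found"})


def cancel_order(order_id):
    order = ORDERS.get(order_id)
    if order is None:
        return {"error": "order not found"}
    if order["status"] == "shipped":
        return {"error": "shipped orders cannot be cancelled"}
    order["status"] = "cancelled"
    return {"order_id": order_id, "status": "cancelled"}


HANDLERS = {f.__name__: f for f in (get_customer_info, get_order_details, cancel_order)}


def handle_tool_use(name: str, params: dict) -> str:
    """Run the requested tool and return JSON text for the tool_result block."""
    return json.dumps(HANDLERS[name](**params))


print(handle_tool_use("cancel_order", {"order_id": "O1"}))
print(handle_tool_use("cancel_order", {"order_id": "O2"}))
```

Returning structured errors (rather than raising) lets Claude explain to the customer why, for example, a shipped order can't be cancelled.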

Strengthening input and output guardrails

When deploying a chatbot, especially in customer service scenarios, it is crucial to guard against misuse, out-of-scope queries, and inappropriate responses. While Claude is inherently resilient to such scenarios, here are additional steps to strengthen your chatbot's guardrails:

- Reduce hallucinations: Implement fact-checking mechanisms and citations to ground responses in the provided information.

- Cross-check information: Verify that the agent's responses align with company policies and known facts.

- Avoid contractual commitments: Ensure the agent does not make promises or enter into agreements it is not authorized to make.

- Mitigate jailbreaks: Use methods such as harmlessness screens and input validation to prevent users from exploiting model vulnerabilities to generate inappropriate content.

- Avoid mentioning competitors: Implement a competitor-mention filter to keep the focus on your brand and avoid mentioning any competitor's products or services.

- Keep Claude in character: Prevent Claude from drifting from its established style, even during long, complex interactions.

- Remove personally identifiable information (PII): Unless explicitly required and authorized, strip all PII from responses.
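Two of these guardrails, competitor filtering and PII removal, can be partly enforced in code on Claude's output before it reaches the customer. This is a deliberately simple sketch: the competitor names are hypothetical, the regexes only catch common email and North American phone formats, and the company's own support number is allowlisted so it isn't redacted. Treat it as a backstop to the prompt-level guardrails, not a replacement.

```python
import re

COMPETITORS = ["Globex Insurance", "Initech Mutual"]  # hypothetical competitor names
ALLOWED_NUMBERS = {"1-800-123-4567"}  # Acme's own support line stays visible
FALLBACK = "I'm sorry, I can only help with questions about Acme Insurance products."

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# Optional leading "1", then 3-3-4 digits separated by "-", "." or whitespace
PHONE_RE = re.compile(r"\b(?:1[-.\s]?)?\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")


def mentions_competitor(text: str) -> bool:
    lowered = text.lower()
    return any(name.lower() in lowered for name in COMPETITORS)


def redact_pii(text: str) -> str:
    text = EMAIL_RE.sub("[EMAIL]", text)
    # Keep allowlisted numbers, replace every other phone-like match
    return PHONE_RE.sub(lambda m: m.group(0) if m.group(0) in ALLOWED_NUMBERS else "[PHONE]", text)


def guard_output(text: str) -> str:
    """Apply output checks before a reply is shown to the customer."""
    if mentions_competitor(text):
        return FALLBACK
    return redact_pii(text)


print(guard_output("Call 1-800-123-4567, or I can email jane@example.com or text 555-867-5309."))
print(guard_output("Globex Insurance might be cheaper for you."))
```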

Reducing perceived response time with streaming

Implementing streaming can significantly increase user engagement and satisfaction when dealing with potentially long responses. In this case, users receive answers gradually, rather than waiting for the entire response to be generated.

Here is how to implement streaming:

- Use the Anthropic Streaming API to support streaming responses.

- Set up your frontend to handle incoming chunks of text.

- Display each chunk as it arrives, simulating real-time typing.

- Implement a mechanism to save the full response, so users can view it if they navigate away and come back.

In some cases, streaming allows the use of advanced models with higher base latencies, as progressive display mitigates the effects of longer processing times.
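The streaming steps above can be sketched with the Anthropic Python SDK's `messages.stream()` helper, whose `text_stream` yields text deltas as they arrive. `stream_reply` accumulates the chunks, redraws the UI after each one, and returns the full reply so it can be saved to the conversation history. Both helper names are hypothetical, and handling of `tool_use` blocks during streaming is omitted for brevity.

```python
from typing import Callable, Iterable, Iterator, List


def stream_reply(chunks: Iterable[str], on_update: Callable[[str], None]) -> str:
    """Show text as it arrives and return the complete reply for saving."""
    parts: List[str] = []
    for chunk in chunks:
        parts.append(chunk)
        on_update("".join(parts))  # redraw with everything received so far
    return "".join(parts)


def claude_text_chunks(client, model: str, system: str, messages: list,
                       max_tokens: int = 1024) -> Iterator[str]:
    """Yield text deltas using the Anthropic Python SDK's streaming helper."""
    with client.messages.stream(
        model=model, max_tokens=max_tokens, system=system, messages=messages
    ) as stream:
        for text in stream.text_stream:
            yield text


# Offline demo: a list of chunks stands in for claude_text_chunks(...)
frames = []
full = stream_reply(["Our business hours ", "are Monday to Friday, ", "9 AM to 5 PM EST."], frames.append)
print(full)
```

In the Streamlit app, `on_update` could be the `markdown` method of an `st.empty()` placeholder, and the returned string is what you would append to `st.session_state.messages` so the reply survives a page rerun.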

Scaling your chatbot

As your chatbot grows more complex, its application architecture can evolve with it. Before adding more layers to the architecture, consider the following simpler options:

- Make sure you are getting the most out of your prompts and optimizing them through prompt engineering. Use our prompt engineering guides to write the most effective prompts.

- Add more tools to the prompt (which can include prompt chains) and see whether you can achieve the required functionality.

If your chatbot handles very diverse tasks, consider adding a separate intent classifier to route the initial customer query. For the existing application, this would involve creating a decision tree that routes customer queries through the classifier to specialized conversations (each with its own tools and system prompt). Note that this approach requires an additional call to Claude, which can add latency.
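A rough sketch of that routing layer. Each route bundles its own system prompt and tools; `classify_intent` here is a keyword stand-in so the example runs offline, whereas the approach described above would replace it with a separate Claude call that returns one of the route labels. The route names and prompts are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Route:
    system_prompt: str
    tool_names: List[str] = field(default_factory=list)  # tools exposed on this branch


# Hypothetical specialized conversations, each with its own prompt and tools
ROUTES = {
    "quote": Route("You are Eva. Collect vehicle and driver details, then request a quote.", ["get_quote"]),
    "policy_info": Route("You are Eva. Answer questions about Acme's coverage options."),
    "other": Route("You are Eva. Politely steer the customer back to auto insurance topics."),
}


def classify_intent(text: str) -> str:
    """Keyword stand-in for a separate Claude classification call returning a route label."""
    t = text.lower()
    if any(w in t for w in ("quote", "price", "premium")):
        return "quote"
    if any(w in t for w in ("cover", "insurance", "policy", "claim")):
        return "policy_info"
    return "other"


def route(first_message: str) -> Tuple[str, Route]:
    label = classify_intent(first_message)
    # Fall back to the generic branch if the classifier returns an unknown label
    return label, ROUTES.get(label, ROUTES["other"])


for msg in ["Can I get a quote for my Leaf?", "Does my policy cover theft?", "Tell me a joke"]:
    label, chosen = route(msg)
    print(f"{msg!r} -> {label}: {chosen.tool_names}")
```

Only the first message is classified here; later turns stay in the chosen branch, which keeps the extra classification latency to a single call per conversation.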

Integrating Claude into Support Workflows

While our examples focus on Python functions called within a Streamlit environment, deploying Claude as a real-time support chatbot requires an API service.

Here's how you can approach this:

- Create an API wrapper: Develop a simple API wrapper around your classification function. For example, you can use Flask or FastAPI to wrap your code in an HTTP service. That service can accept user input and return the assistant's complete response. The service can then have the following characteristics:

  - Server-Sent Events (SSE): SSE lets the server stream responses to the client in real time, which is crucial for a smooth, interactive experience with an LLM.

  - Caching: Caching can significantly improve responsiveness and reduce unnecessary API calls.

  - Context retention: Maintaining context is important for conversation continuity when a user navigates away and later returns.

- Build a web interface: Implement a user-friendly web UI for interacting with the Claude-powered agent.
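Framework-agnostic building blocks for the three service characteristics above are sketched below: formatting Server-Sent Events, keeping per-session history for context retention, and caching answers to repeated, context-free questions. All class and function names are hypothetical. In Flask you could wrap a generator of `sse_event(...)` strings in `Response(..., mimetype="text/event-stream")`; in FastAPI, `StreamingResponse(..., media_type="text/event-stream")` plays the same role.

```python
import json
from typing import Callable, Dict, List, Optional


def sse_event(data: dict, event: Optional[str] = None) -> str:
    """Format one Server-Sent Events message in the text/event-stream wire format."""
    head = f"event: {event}\n" if event else ""
    return f"{head}data: {json.dumps(data)}\n\n"


class SessionStore:
    """In-memory conversation history keyed by session ID (swap for Redis or a DB in production)."""

    def __init__(self) -> None:
        self._sessions: Dict[str, List[dict]] = {}

    def history(self, session_id: str) -> List[dict]:
        return self._sessions.setdefault(session_id, [])

    def append(self, session_id: str, role: str, content: str) -> None:
        self.history(session_id).append({"role": role, "content": content})


class ResponseCache:
    """Cache complete answers to repeated, context-free questions such as business hours."""

    def __init__(self) -> None:
        self._answers: Dict[str, str] = {}

    @staticmethod
    def _key(question: str) -> str:
        return " ".join(question.lower().split())  # normalize case and whitespace

    def get_or_compute(self, question: str, compute: Callable[[str], str]) -> str:
        key = self._key(question)
        if key not in self._answers:
            self._answers[key] = compute(question)
        return self._answers[key]


store = SessionStore()
store.append("abc123", "user", "What are your business hours?")
print(len(store.history("abc123")))
print(sse_event({"text": "Our business hours are"}, event="delta"), end="")
```

Caching only makes sense for answers that do not depend on the conversation so far; anything personalized (quotes, order status) should bypass the cache.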

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
