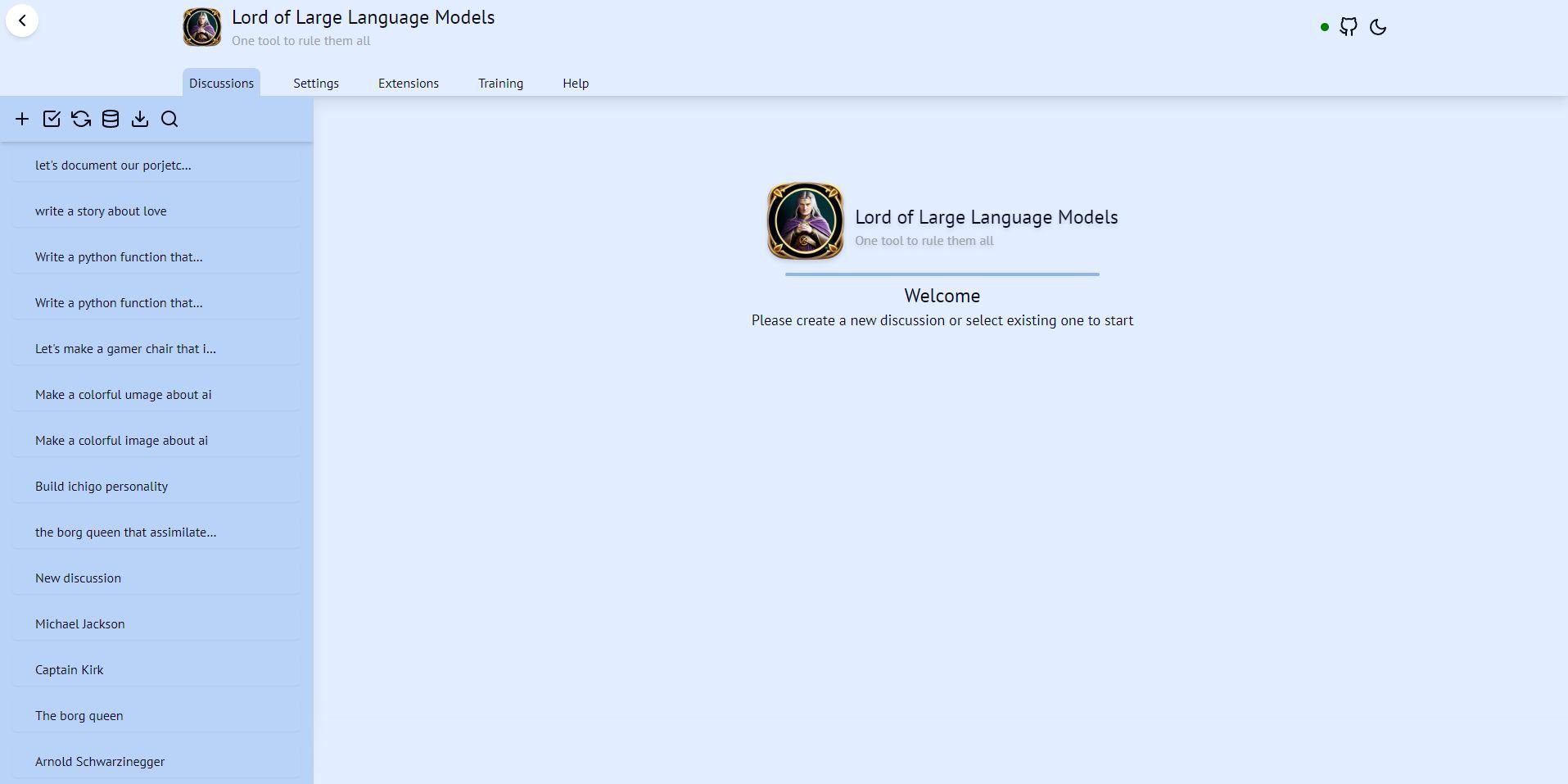

Chonkie: a lightweight RAG text chunking library

General Introduction

Chonkie is a lightweight, efficient text chunking library for RAG (Retrieval-Augmented Generation) that helps developers split text into chunks quickly and easily. It supports a range of chunking methods, including token-based, word-based, sentence-based and semantic-similarity-based chunking, which makes it suitable for many text processing and natural language processing tasks. The default installation requires only 21MB (compared with 80-171MB for similar libraries) while still supporting all major chunkers.

Function List

- TokenChunker: Splits text into fixed-size token chunks.

- WordChunker: Splits text into chunks based on words.

- SentenceChunker: Splits text into chunks based on sentences.

- SemanticChunker: Splits text into chunks based on semantic similarity.

- SDPMChunker: Splits text using a Semantic Double-Pass Merge (SDPM) approach.
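To make the simplest of these strategies concrete, here is a minimal, dependency-free sketch of fixed-size token chunking with optional overlap, the idea behind TokenChunker. It assumes whitespace-separated words as stand-in tokens (a real setup would use a tokenizer such as GPT-2's), and `token_chunks` is a hypothetical helper, not Chonkie's API.

```python
from typing import List


def token_chunks(tokens: List[str], chunk_size: int, overlap: int = 0) -> List[List[str]]:
    """Split a token sequence into windows of at most chunk_size tokens.

    Consecutive windows share `overlap` tokens (hypothetical helper, not Chonkie's API).
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far each new window advances
    chunks: List[List[str]] = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):  # last window reached the end
            break
    return chunks


# Whitespace "tokens" stand in for the output of a real tokenizer.
tokens = "one two three four five six seven".split()
for chunk in token_chunks(tokens, chunk_size=3, overlap=1):
    print(chunk)
```

Overlap lets neighbouring chunks share a little context, at the cost of storing some tokens twice.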

Usage Guide

Installation

To install Chonkie, simply run the following command:

```bash
pip install chonkie
```

Chonkie keeps its default installation minimal: install the extra dependencies only for the chunkers you need, or, if you would rather not think about dependencies, install everything (not recommended):

```bash
pip install chonkie[all]
```
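To confirm that the installation worked, you can query the installed package metadata from Python. This is a minimal sketch using the standard library's `importlib.metadata`; the helper name `installed_version` is our own and is not part of Chonkie.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


v = installed_version("chonkie")
print(f"chonkie {v}" if v else "chonkie is not installed; run: pip install chonkie")
```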

Usage

Here is a basic example to help you get started quickly:

The example imports a chunker, loads a tokenizer (AutoTokenizers, TikToken and AutoTikTokenizer are also supported), initializes the chunker, chunks a piece of text and prints the results:

```python
# Import the chunker you want from Chonkie
from chonkie import TokenChunker
# Import your preferred tokenizer library and load a tokenizer
from tokenizers import Tokenizer
tokenizer = Tokenizer.from_pretrained("gpt2")

# Initialize the chunker
chunker = TokenChunker(tokenizer)
# Chunk the text
chunks = chunker("Woah! Chonkie, the chunking library is so cool! I love the tiny hippo hehe.")

# Access the chunking results
for chunk in chunks:
    print(f"Chunk: {chunk.text}")
    print(f"Tokens: {chunk.token_count}")
```
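The loop in the example relies only on each chunk exposing `text` and `token_count`. To make that result shape concrete without installing anything, here is a dependency-free sketch of word-based chunking that returns objects with the same two attributes. The `Chunk` dataclass and `word_chunks` function are hypothetical illustrations, not Chonkie's WordChunker, and token counts here come from whitespace splitting rather than a real tokenizer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Chunk:
    """Hypothetical stand-in for a chunk object exposing .text and .token_count."""
    text: str
    token_count: int


def word_chunks(text: str, max_tokens: int,
                count_tokens: Callable[[str], int] = lambda s: len(s.split())) -> List[Chunk]:
    """Greedily pack whole words into chunks of at most max_tokens tokens.

    A single word that alone exceeds max_tokens still gets a chunk of its own.
    """
    chunks: List[Chunk] = []
    current: List[str] = []
    for word in text.split():
        candidate = " ".join(current + [word])
        if current and count_tokens(candidate) > max_tokens:
            # Adding this word would overflow the budget: close the current chunk.
            piece = " ".join(current)
            chunks.append(Chunk(piece, count_tokens(piece)))
            current = [word]
        else:
            current.append(word)
    if current:
        piece = " ".join(current)
        chunks.append(Chunk(piece, count_tokens(piece)))
    return chunks


text = "Woah! Chonkie, the chunking library is so cool! I love the tiny hippo hehe."
for chunk in word_chunks(text, max_tokens=5):
    print(f"Chunk: {chunk.text}")
    print(f"Tokens: {chunk.token_count}")
```

Passing a real tokenizer's counting function as `count_tokens` would make the budget match a model's context limits instead of a word count.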

Supported Methods

Chonkie offers a range of chunkers for efficiently splitting text for RAG applications. The chunkers currently available are the five described under Function List: TokenChunker, WordChunker, SentenceChunker, SemanticChunker and SDPMChunker.
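The idea behind semantic chunking, splitting where the meaning shifts, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Chonkie's SemanticChunker: toy bag-of-words vectors stand in for a real embedding model, each sentence is compared only with its immediate predecessor, and the 0.2 threshold is arbitrary.

```python
import math
import re
from collections import Counter
from typing import List


def embed(sentence: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real setup would use a sentence-embedding model."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(sentences: List[str], threshold: float = 0.2) -> List[List[str]]:
    """Start a new chunk whenever adjacent sentences are less similar than `threshold`."""
    if not sentences:
        return []
    chunks = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if cosine(embed(prev), embed(cur)) >= threshold:
            chunks[-1].append(cur)  # same topic: extend the current chunk
        else:
            chunks.append([cur])  # topic shift: open a new chunk
    return chunks


sentences = [
    "Hippos live in rivers.",
    "Hippos spend the day in rivers to stay cool.",
    "Chunking splits text for retrieval.",
]
for group in semantic_chunks(sentences):
    print(group)
```

The first two sentences share several words and stay together, while the third shares none and starts a new chunk.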

Benchmarks

Chonkie performs well in several benchmarks:

- Size: The default installation is only 9.7MB (compared with 80-171MB for alternatives), and even with semantic chunking included it remains lighter than the competition.

- Speed: Token chunking is 33x faster than the slowest alternative, sentence chunking is nearly 2x faster than competitors, and semantic chunking is 2.5x faster than competing libraries.
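Speed ratios like these depend on the hardware, the input text and the libraries being compared, so it is worth timing candidates on your own data. Below is a minimal harness sketch using `time.perf_counter`; `time_chunker` and `naive_word_chunker` are hypothetical names, and this is not the methodology behind the figures above.

```python
import time
from typing import Callable, List


def time_chunker(chunk_fn: Callable[[str], List[str]], text: str, repeats: int = 5) -> float:
    """Return the best wall-clock time in seconds over `repeats` runs of chunk_fn(text)."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        chunk_fn(text)
        best = min(best, time.perf_counter() - start)
    return best


def naive_word_chunker(text: str, size: int = 50) -> List[str]:
    """Hypothetical baseline: fixed windows of `size` whitespace-separated words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


sample = "the tiny hippo chunks text " * 20_000
print(f"naive_word_chunker: {time_chunker(naive_word_chunker, sample) * 1000:.2f} ms")
```

Taking the best of several runs reduces noise from other processes; timing two chunkers with the same harness and the same text gives a like-for-like ratio.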

Detailed Operation Procedure

- Install: Install Chonkie and the required tokenizer library via pip.

- Import libraries: Import Chonkie and the tokenizer library in your Python script.

- Initialize the chunker: Select and initialize the chunker that fits your needs.

- Chunk the text: Pass the text to the initialized chunker.

- Process the results: Iterate over the returned chunks for further processing or analysis.
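For the final step, a common form of further processing is attaching an identifier and character offsets to each chunk before storing it, for example in a vector index. This is a minimal sketch, assuming the chunk texts (such as `[c.text for c in chunks]` from the usage example) appear verbatim and in order in the source text; `to_records` and its record fields are hypothetical names, not part of Chonkie.

```python
from typing import Dict, List


def to_records(source: str, chunk_texts: List[str]) -> List[Dict[str, object]]:
    """Attach an id and character offsets in `source` to each chunk's text.

    Assumes chunks appear in `source` verbatim and in order; overlapping chunks are allowed.
    """
    records: List[Dict[str, object]] = []
    cursor = 0
    for i, text in enumerate(chunk_texts):
        start = source.find(text, cursor)
        if start == -1:
            raise ValueError(f"chunk {i} not found in source text")
        end = start + len(text)
        records.append({"id": i, "text": text, "start": start, "end": end})
        cursor = start + 1  # advance past this chunk's start so overlaps still match
    return records


source = "Woah! Chonkie is cool. I love the tiny hippo."
for record in to_records(source, ["Woah! Chonkie is cool.", "I love the tiny hippo."]):
    print(record)
```

Keeping offsets lets a retrieval system highlight or expand a matched chunk in the original document later.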

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
