ChatOllama Notes | Productionizing Advanced RAG with a Redis-based Document Store

ChatOllama is an open-source chatbot based on LLMs. For a detailed description of ChatOllama, see the link below.

ChatOllama | Ollama-based 100% Local RAG Application

ChatOllama's initial goal was to provide users with a 100% local RAG application.

As the project has grown, more and more users have come forward with valuable feature requests. `ChatOllama` now supports multiple language models.

With ChatOllama, users can:

- Manage Ollama models (pull/delete)

- Manage knowledge bases (create/delete)

- Chat freely with LLMs

- Manage their personal knowledge bases

- Chat with LLMs grounded in their personal knowledge bases

In this post, I look at how to put advanced RAG into production. I have already covered the basic and advanced techniques of RAG, but many issues still need attention before RAG can run in production. I will share the work done in ChatOllama and the preparations made to bring RAG to production.

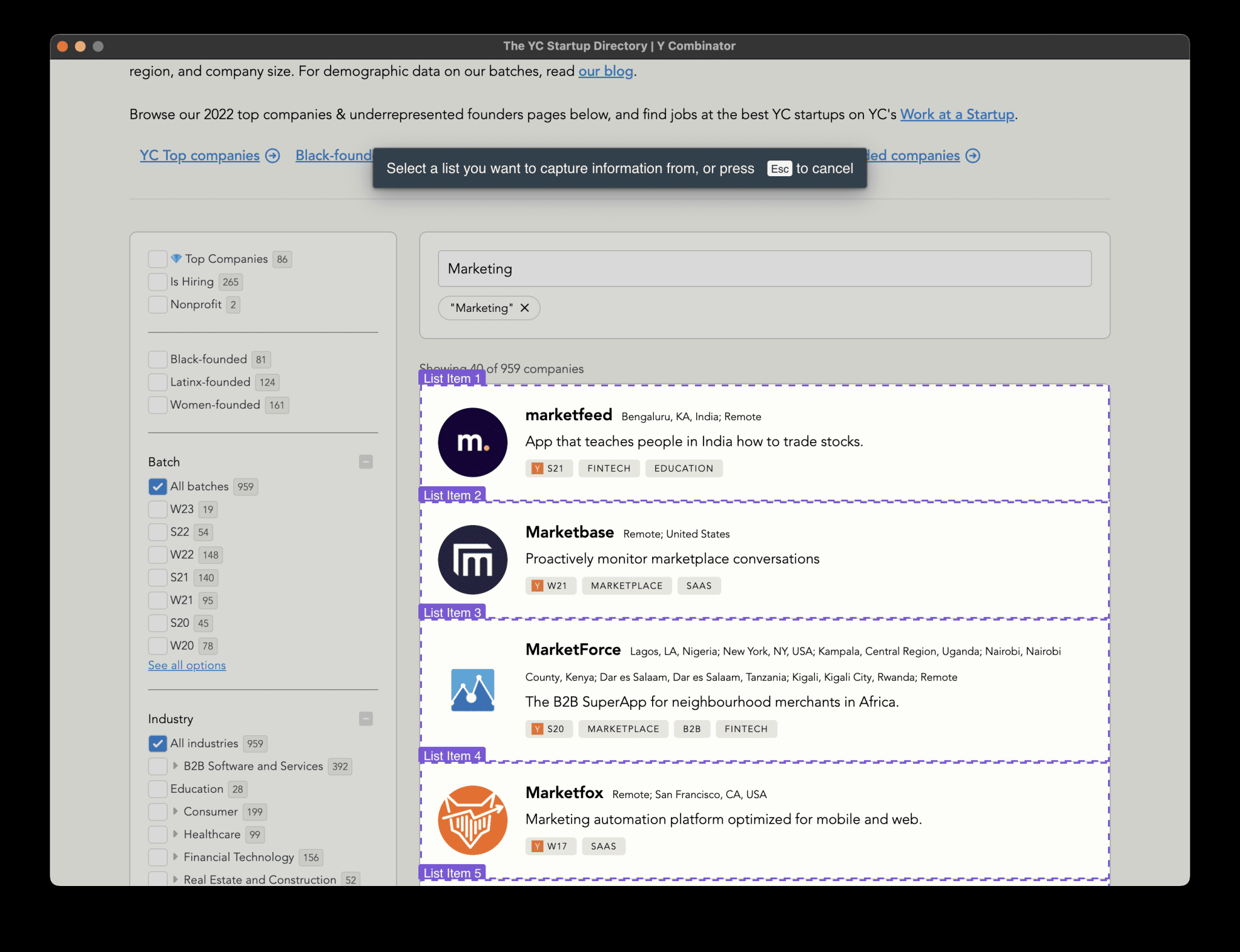

ChatOllama | Building and Running RAG

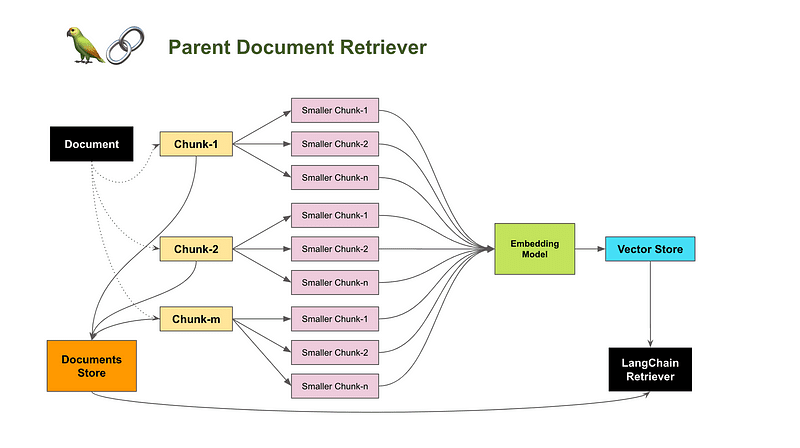

On the ChatOllama platform, we use LangChain.js to orchestrate the RAG process. ChatOllama's knowledge base goes beyond naive RAG by using a parent document retriever. Let's take a deep dive into its architecture and implementation details. We hope you find it enlightening.

Using the Parent Document Retriever

To make the parent document retriever work in a real product, we need to understand its core principles and choose storage components suited to production.

Core Principles of the Parent Document Retriever

Splitting documents for retrieval often involves conflicting requirements:

- You want chunks to be small, so that their embeddings represent their meaning as accurately as possible. If a chunk is too long, its embedding can dilute that meaning.

- You also want chunks to be long enough that each one keeps sufficient surrounding context to be understood on its own.

LangChain.js's `ParentDocumentRetriever` strikes this balance by splitting documents into small chunks. At retrieval time, it first retrieves the matching small chunks. It then looks up the parent document of each chunk and returns these larger documents instead. The parent document is linked to its chunks by a doc id. We'll explain how this works in more detail later.

Note that "parent document" here refers to a chunk of the source document produced by the parent splitter, not the entire source document.

Architecture

Let's take a look at the overall architecture diagram of the parent document retriever.

Each small chunk is embedded into vector data and stored in the vector database. These chunks are retrieved exactly as in a standard RAG setup.

Each parent document (block-1, block-2, ..., block-m) is split into smaller parts. Note that two different text splitters are used here: a larger one for parent documents and a smaller one for the small chunks. Each parent document is assigned a document ID, and this ID is recorded as metadata in each of its chunks. Any chunk can therefore locate its parent document through the ID stored in its metadata.
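The following self-contained TypeScript sketch makes the ID linking concrete on the indexing side. It is not LangChain's implementation. `splitText` is a hypothetical fixed-size character splitter standing in for a real text splitter such as `RecursiveCharacterTextSplitter`. `indexDocument` is a hypothetical helper. The `doc_id` metadata key and the `randomUUID` IDs are illustrative choices.

```typescript
import { randomUUID } from "node:crypto";

interface Chunk {
  pageContent: string;
  metadata: Record<string, string>;
}

// Hypothetical fixed-size splitter with overlap (stand-in for a real text splitter).
function splitText(text: string, chunkSize: number, chunkOverlap: number): string[] {
  if (chunkOverlap >= chunkSize) throw new Error("overlap must be smaller than chunk size");
  const chunks: string[] = [];
  const step = chunkSize - chunkOverlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    if (start + chunkSize >= text.length) break; // this window reached the end of the text
  }
  return chunks;
}

// Split a source text into large parents and small children.
// Each child records its parent's ID in metadata.doc_id (hypothetical key name).
function indexDocument(text: string) {
  const parents = new Map<string, string>(); // docstore: doc ID -> parent text
  const children: Chunk[] = [];               // these would be embedded into the vector store
  for (const parentText of splitText(text, 1000, 200)) {
    const docId = randomUUID();
    parents.set(docId, parentText);
    for (const childText of splitText(parentText, 200, 50)) {
      children.push({ pageContent: childText, metadata: { doc_id: docId } });
    }
  }
  return { parents, children };
}

const { parents, children } = indexDocument("lorem ipsum ".repeat(300));
console.log(parents.size, children.length); // prints "5 31"
```

Only the children go to the vector store, while the parents go to a key-value store keyed by their IDs. That split is why the two kinds of data end up in different storage systems.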

Retrieving parent documents works differently. Instead of a similarity search, the document ID is used to look up the corresponding parent document, so a key-value store is sufficient for the job.
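The retrieval side can be sketched the same way, under stated assumptions. A toy word-overlap score stands in for embedding similarity, and a `Map` stands in for the key-value docstore. `retrieveParents` is a hypothetical helper, and its `childK`/`parentK` parameters only mirror the options of the same name on LangChain.js's `ParentDocumentRetriever`. The point is that step 3 is a plain key lookup, not a similarity search.

```typescript
interface Chunk {
  pageContent: string;
  metadata: { doc_id: string };
}

// Toy relevance score standing in for vector similarity: count words shared with the query.
function score(query: string, text: string): number {
  const queryWords = new Set(query.toLowerCase().split(/\W+/).filter(Boolean));
  return text.toLowerCase().split(/\W+/).filter((w) => queryWords.has(w)).length;
}

function retrieveParents(
  query: string,
  children: Chunk[],
  docstore: Map<string, string>,
  childK = 20,
  parentK = 5,
): string[] {
  // Step 1: "similarity search" over the small child chunks.
  const topChildren = [...children]
    .sort((a, b) => score(query, b.pageContent) - score(query, a.pageContent))
    .slice(0, childK);
  // Step 2: collect unique parent IDs from the children's metadata, keeping rank order.
  const parentIds = [...new Set(topChildren.map((c) => c.metadata.doc_id))];
  // Step 3: key-value lookup of each parent by ID.
  return parentIds
    .map((id) => docstore.get(id))
    .filter((p): p is string => p !== undefined)
    .slice(0, parentK);
}

const docstore = new Map([
  ["p1", "Redis is an in-memory key-value store. It supports key prefixes."],
  ["p2", "Chroma is a vector database that stores embeddings in collections."],
]);
const children: Chunk[] = [
  { pageContent: "Redis is an in-memory key-value store.", metadata: { doc_id: "p1" } },
  { pageContent: "It supports key prefixes.", metadata: { doc_id: "p1" } },
  { pageContent: "Chroma is a vector database", metadata: { doc_id: "p2" } },
  { pageContent: "that stores embeddings in collections.", metadata: { doc_id: "p2" } },
];
console.log(retrieveParents("vector database", children, docstore, 1, 1));
```

Several children can share one parent, so deduplicating the IDs before the lookup keeps the same parent from being returned twice.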

Parent Document Retriever

Storage

There are two types of data that need to be stored:

- Vector data for the small chunks

- The text of each parent document, keyed by its document ID

Any major vector database works for the vector data. For `ChatOllama`, I chose Chroma.

For parent document data, I chose Redis, one of the most popular and highly scalable key-value stores available.

What's missing from LangChain.js

LangChain.js provides a number of byte-store and document-store integrations, including one backed by `IORedis` (`RedisByteStore`).

What `RedisByteStore` lacks is a `collection` mechanism.

When processing documents from different knowledge bases, each knowledge base is organized as a `collection`. The documents in one knowledge base are converted to vector data and stored in a single `collection` of a vector database such as Chroma.

What if we want to delete a knowledge base? We can simply delete its `collection` in Chroma. But how do we clean up the document store for that knowledge base alone? Components such as `RedisByteStore` do not support `collection` functionality, so I had to implement it myself.

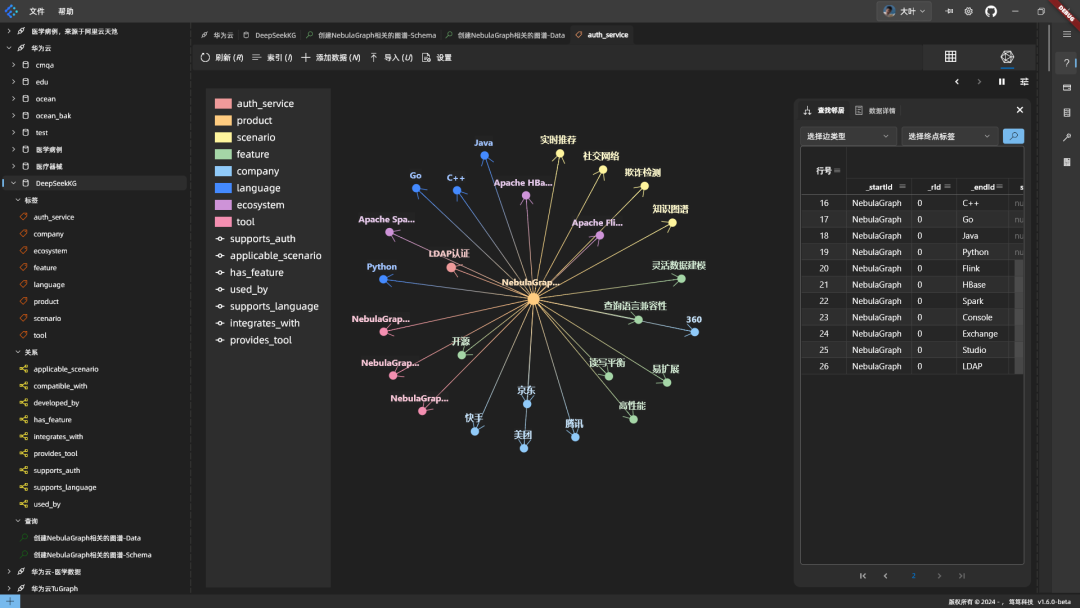

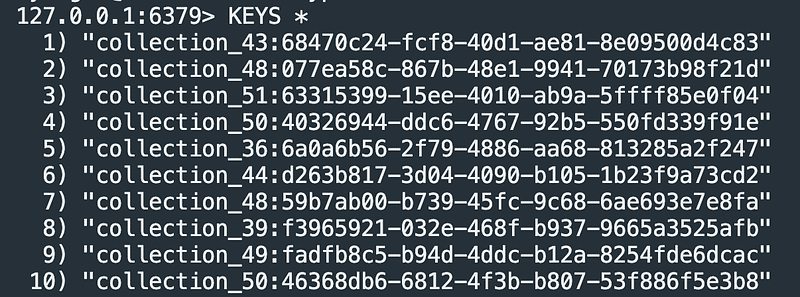

ChatOllama RedisDocStore

Redis has no built-in `collection` feature. Developers usually emulate collections by adding a prefix to every key. The following figure shows how prefixes can identify different collections:

Redis key representation with prefixes
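The prefix convention can be illustrated without a Redis server. In the sketch below, a `Map` plays the role of the Redis keyspace, and `PrefixedStore` is a hypothetical class, not part of Redis, ioredis, or LangChain. Keys are stored as `namespace:key`. Deleting a collection means deleting every key that starts with `namespace:`, which a `SCAN ... MATCH namespace:*` loop followed by `DEL` does against a real server.

```typescript
// In-memory stand-in for a Redis keyspace (hypothetical; no server needed).
const keyspace = new Map<string, string>();

class PrefixedStore {
  constructor(private readonly namespace: string) {}

  // Every key is prefixed with "<namespace>:" to emulate a collection.
  private key(id: string): string {
    return `${this.namespace}:${id}`;
  }

  set(id: string, value: string): void {
    keyspace.set(this.key(id), value);
  }

  get(id: string): string | undefined {
    return keyspace.get(this.key(id));
  }

  // Emulates SCAN MATCH "<namespace>:*" followed by DEL on every match.
  deleteAll(): number {
    const prefix = `${this.namespace}:`;
    let deleted = 0;
    for (const k of [...keyspace.keys()]) {
      if (k.startsWith(prefix)) {
        keyspace.delete(k);
        deleted++;
      }
    }
    return deleted;
  }
}

const kbA = new PrefixedStore("kb-a");
const kbB = new PrefixedStore("kb-b");
kbA.set("doc1", "parent text from knowledge base A");
kbB.set("doc1", "parent text from knowledge base B");
kbA.deleteAll(); // drop knowledge base A only
console.log([...keyspace.keys()]); // prints [ 'kb-b:doc1' ]
```

The trailing colon in the match prefix matters. Matching only `kb*` would also delete the keys of any collection whose name merely starts with `kb`.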

Now let's look at how the Redis-backed document store is implemented.

Key points:

- Each `RedisDocstore` is initialized with a `namespace` parameter. (The naming of namespaces and collections is not yet fully consistent.)

- For both get and set operations, each key is first prefixed with the `namespace`.

```typescript
import { Document } from "@langchain/core/documents";
import { BaseStoreInterface } from "@langchain/core/stores";
import { Redis } from "ioredis";
import { createRedisClient } from "@/server/store/redis";

export class RedisDocstore implements BaseStoreInterface<string, Document> {
  _namespace: string;

  _client: Redis;

  constructor(namespace: string) {
    this._namespace = namespace;
    this._client = createRedisClient();
  }

  serializeDocument(doc: Document): string {
    return JSON.stringify(doc);
  }

  deserializeDocument(jsonString: string): Document {
    const obj = JSON.parse(jsonString);
    return new Document(obj);
  }

  // Prefix every key with the namespace so each knowledge base gets its own "collection".
  getNamespacedKey(key: string): string {
    return `${this._namespace}:${key}`;
  }

  // Collect all (namespaced) keys that belong to this namespace.
  getKeys(): Promise<string[]> {
    return new Promise((resolve, reject) => {
      const stream = this._client.scanStream({ match: `${this._namespace}:*` });
      const keys: string[] = [];
      stream.on("data", (resultKeys: string[]) => {
        keys.push(...resultKeys);
      });
      stream.on("end", () => {
        resolve(keys);
      });
      stream.on("error", (err) => {
        reject(err);
      });
    });
  }

  async addText(key: string, value: string): Promise<void> {
    await this._client.set(this.getNamespacedKey(key), value);
  }

  async search(search: string): Promise<Document> {
    const result = await this._client.get(this.getNamespacedKey(search));
    if (!result) {
      throw new Error(`ID ${search} not found.`);
    }
    return this.deserializeDocument(result);
  }

  /**
   * Adds new documents to the store.
   * @param texts An object where the keys are document IDs and the values are the documents themselves.
   * @returns Void
   */
  async add(texts: Record<string, Document>): Promise<void> {
    for (const [key, value] of Object.entries(texts)) {
      console.log(`Adding ${key} to the store: ${this.serializeDocument(value)}`);
    }
    // getKeys() returns namespaced keys, so compare against namespaced IDs.
    const existing = new Set(await this.getKeys());
    const overlapping = Object.keys(texts).filter((x) =>
      existing.has(this.getNamespacedKey(x))
    );
    if (overlapping.length > 0) {
      throw new Error(`Tried to add ids that already exist: ${overlapping}`);
    }
    for (const [key, value] of Object.entries(texts)) {
      await this.addText(key, this.serializeDocument(value));
    }
  }

  async mget(keys: string[]): Promise<Document[]> {
    return Promise.all(keys.map((key) => this.search(key)));
  }

  async mset(keyValuePairs: [string, Document][]): Promise<void> {
    await Promise.all(
      keyValuePairs.map(([key, value]) => this.add({ [key]: value }))
    );
  }

  async mdelete(_keys: string[]): Promise<void> {
    throw new Error("Not implemented.");
  }

  // eslint-disable-next-line require-yield
  async *yieldKeys(_prefix?: string): AsyncGenerator<string> {
    throw new Error("Not implemented");
  }

  // Delete every key in this namespace, i.e. drop the whole "collection".
  async deleteAll(): Promise<void> {
    let cursor = "0";
    do {
      const [nextCursor, keys] = await this._client.scan(
        cursor,
        "MATCH",
        `${this._namespace}:*`
      );
      if (keys.length > 0) {
        await this._client.del(...keys);
      }
      cursor = nextCursor;
    } while (cursor !== "0"); // SCAN signals a full iteration by returning cursor "0"
  }
}
```

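This component plugs directly into `ParentDocumentRetriever`:

```typescript
import { ParentDocumentRetriever } from "langchain/retrievers/parent_document";
import { RecursiveCharacterTextSplitter } from "langchain/text_splitter";

// chromaClient: the LangChain Chroma vector store for this knowledge base's collection;
// collectionName: the knowledge base's collection name (both defined elsewhere).
const retriever = new ParentDocumentRetriever({
  vectorstore: chromaClient,
  docstore: new RedisDocstore(collectionName), // one Redis namespace per collection
  // Larger chunks, returned as context
  parentSplitter: new RecursiveCharacterTextSplitter({
    chunkOverlap: 200,
    chunkSize: 1000,
  }),
  // Smaller chunks, embedded and searched
  childSplitter: new RecursiveCharacterTextSplitter({
    chunkOverlap: 50,
    chunkSize: 200,
  }),
  childK: 20, // number of child chunks fetched from the vector store
  parentK: 5, // maximum number of parent documents returned
});
```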

We now have a scalable storage solution for an advanced RAG technique, the Parent Document Retriever, built on `Chroma` and `Redis`.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
