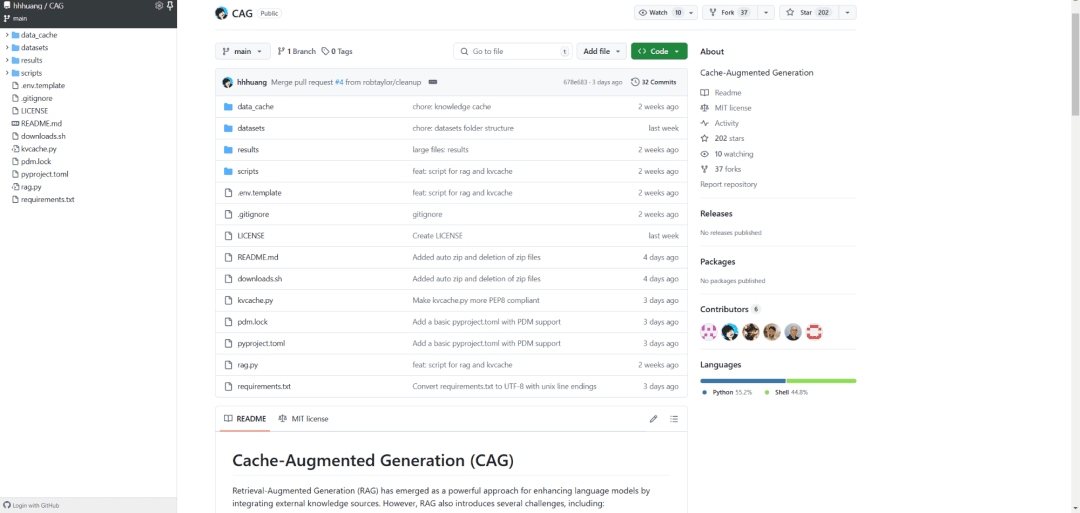

CAG: A cache-augmented generation method that is 40 times faster than RAG

CAG (Cache-Augmented Generation) is reportedly 40 times faster than RAG (Retrieval-Augmented Generation). It changes how a model gets its knowledge: instead of retrieving external data at query time, it preloads all of the knowledge into the model's context. It is like condensing a large library into a portable handbook that you can flip through whenever you need it. The implementation has three steps:

- First, preprocess the documents so that they fit within the LLM's context window.

- Next, encode the processed content into a key-value (KV) cache.

- Finally, store this cache in memory or on disk so that it can be reloaded at any time.
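The three steps above can be sketched in a few lines of standard-library Python. This is a toy illustration, not the reference implementation: it assumes a single attention head with made-up embeddings and projection matrices and no positional encoding, and every name in it (`preprocess`, `build_kv_cache`, `answer_with_cache`, and so on) is hypothetical. A real system would store the LLM's actual per-layer KV tensors instead.

```python
import math
import pickle
import random
import tempfile
from pathlib import Path

DIM = 4  # toy embedding width (assumption, real models use thousands)

def make_matrix(seed: int) -> list[list[float]]:
    """Fixed pseudo-random DIM x DIM projection matrix (stand-in for trained weights)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(DIM)]

W_Q, W_K, W_V = make_matrix(1), make_matrix(2), make_matrix(3)

def embed(token: str) -> list[float]:
    """Deterministic toy embedding: seed a PRNG with the token text."""
    rng = random.Random(token)
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]

def project(vec: list[float], matrix: list[list[float]]) -> list[float]:
    return [sum(v * w for v, w in zip(vec, row)) for row in matrix]

def preprocess(documents: list[str], max_tokens: int) -> list[str]:
    """Step 1: normalise the documents and check they fit the context budget."""
    tokens = " ".join(documents).lower().split()
    if len(tokens) > max_tokens:
        raise ValueError("knowledge base does not fit in the context window")
    return tokens

def build_kv_cache(tokens: list[str]) -> dict:
    """Step 2: compute keys and values once for every knowledge token."""
    embeddings = [embed(t) for t in tokens]
    return {
        "keys": [project(e, W_K) for e in embeddings],
        "values": [project(e, W_V) for e in embeddings],
    }

def save_cache(cache: dict, path: Path) -> None:
    """Step 3: persist the cache so later sessions can skip step 2."""
    path.write_bytes(pickle.dumps(cache))

def load_cache(path: Path) -> dict:
    # Only unpickle files you created yourself; pickle is not safe for untrusted data.
    return pickle.loads(path.read_bytes())

def attend(query_token: str, keys: list, values: list) -> list[float]:
    """Scaled dot-product attention of one query token over the given keys/values."""
    q = project(embed(query_token), W_Q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(DIM) for k in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(DIM)]

def answer_with_cache(cache: dict, query_token: str) -> list[float]:
    """Reuse cached keys/values; only the new query token is projected."""
    e = embed(query_token)
    keys = cache["keys"] + [project(e, W_K)]
    values = cache["values"] + [project(e, W_V)]
    return attend(query_token, keys, values)

def answer_from_scratch(tokens: list[str], query_token: str) -> list[float]:
    """Baseline: recompute keys/values for the whole context on every query."""
    full = build_kv_cache(tokens + [query_token])
    return attend(query_token, full["keys"], full["values"])

documents = [
    "CAG preloads   knowledge into the context.",
    "The KV cache is reused for every query.",
]
tokens = preprocess(documents, max_tokens=32)
cache = build_kv_cache(tokens)

with tempfile.TemporaryDirectory() as tmp:
    cache_path = Path(tmp) / "cag_toy_cache.pkl"
    save_cache(cache, cache_path)
    restored = load_cache(cache_path)

cached_out = answer_with_cache(restored, "cache")
scratch_out = answer_from_scratch(tokens, "cache")
matches = all(math.isclose(a, b) for a, b in zip(cached_out, scratch_out))
print("cached result equals recomputed result:", matches)
```

In this sketch the cached path projects only the query token, while the baseline re-projects every knowledge token on each query. That repeated work is what a precomputed cache is meant to avoid, and the saving grows with the size of the preloaded knowledge.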

The reported results are compelling: on benchmark datasets such as HotPotQA and SQuAD, CAG is not only 40 times faster but also significantly more accurate and coherent. Because the model sees the entire knowledge base at once, it is not exposed to retrieval errors or incomplete retrieved data.

In practice, the technique looks promising in areas such as medical diagnosis, financial analysis, and customer service. It lets AI systems maintain high performance without the maintenance burden of a complex architecture.

Ultimately, CAG's innovation is to replace "fetch it when you need it" with "carry it with you", which improves efficiency and opens up new possibilities for AI deployment. It may become a standard for the next generation of AI architectures.

References:

[1] https://github.com/hhhuang/CAG

[2] https://arxiv.org/abs/2412.15605

[3] Long-context LLMs Struggle with Long In-context Learning: https://arxiv.org/pdf/2404.02060v2

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
