AI College of Engineering: 2.5 RAG System Evaluation

Overview

Evaluation is a key component in developing and optimizing Retrieval-Augmented Generation (RAG) systems. It covers every stage of the RAG pipeline, from retrieval effectiveness to response generation, and measures performance, accuracy, and quality, including the relevance and faithfulness of the responses.

Importance of RAG Evaluation

Effective evaluation of a RAG system is important because it:

- Helps identify strengths and weaknesses in the retrieval and generation process.

- Guides the improvement and optimization of the entire RAG pipeline.

- Ensures that the system meets quality standards and user expectations.

- Facilitates comparison of different RAG implementations or configurations.

- Helps detect problems such as hallucinations, biases, or irrelevant responses.

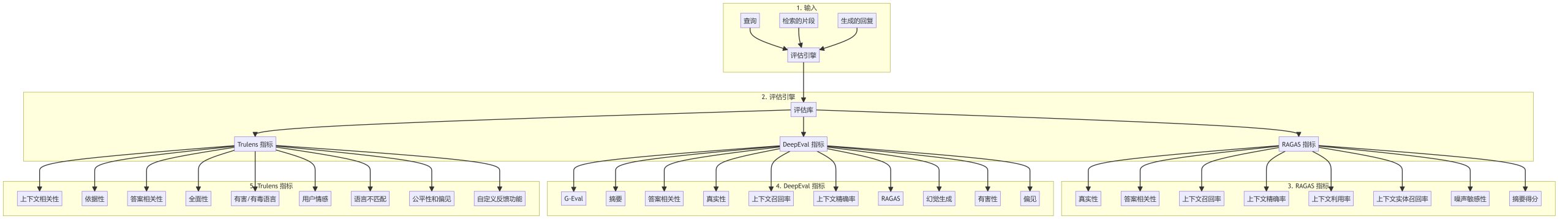

RAG Evaluation Process

Evaluating a RAG system typically involves the following steps:

Core Evaluation Metrics

RAGAS Metrics

- Faithfulness: Measures how factually consistent the generated response is with the retrieved context.

- Answer Relevancy: Evaluates how relevant the response is to the query.

- Context Recall: Evaluates whether the retrieved chunks cover the information needed to answer the query.

- Context Precision: Measures the proportion of relevant chunks among the retrieved chunks.

- Context Utilization: Evaluates how effectively the generated response makes use of the provided context.

- Context Entity Recall: Assesses whether the important entities in the reference answer are present in the retrieved context.

- Noise Sensitivity: Measures how robust the system is to irrelevant or noisy retrieved information.

- Summarization Score: Assesses the quality of summaries in the response.
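To make the faithfulness idea concrete: Ragas scores faithfulness as the number of claims in the response that are supported by the retrieved context, divided by the total number of claims. The sketch below is a minimal, framework-free illustration of that ratio, not the Ragas implementation. Ragas uses an LLM to extract and verify claims; here, sentence splitting and a simple word-overlap check stand in for both steps. The names `split_claims`, `lexical_judge`, `faithfulness`, the stopword list, and the `min_overlap` threshold of 0.6 are all hypothetical.

```python
import re
from typing import Callable, List, Set

# A judge decides whether a single claim is supported by the retrieved context.
# In Ragas this step is done by an LLM; here it is a pluggable callable.
Judge = Callable[[str, str], bool]

# Tiny illustrative stopword list (an assumption, not taken from any library).
STOPWORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "in", "on", "and", "to"}


def split_claims(answer: str) -> List[str]:
    """Naive stand-in for claim extraction: one sentence = one claim."""
    parts = re.split(r"(?<=[.!?])\s+", answer.strip())
    return [p for p in parts if p]


def content_words(text: str) -> Set[str]:
    """Lower-cased alphanumeric tokens, minus stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def lexical_judge(claim: str, context: str, min_overlap: float = 0.6) -> bool:
    """Stand-in for LLM verification: a claim counts as supported when at least
    `min_overlap` of its content words also appear in the context."""
    words = content_words(claim)
    if not words:
        return True  # nothing checkable in this claim
    return len(words & content_words(context)) / len(words) >= min_overlap


def faithfulness(answer: str, context: str, judge: Judge = lexical_judge) -> float:
    """Supported claims divided by total claims; 0.0 for an empty answer."""
    claims = split_claims(answer)
    if not claims:
        return 0.0
    supported = sum(judge(claim, context) for claim in claims)
    return supported / len(claims)
```

For example, with the context "Paris is the capital of France. The Eiffel Tower was completed in 1889." the answer "Paris is the capital of France. The Eiffel Tower opened in 1920." scores 0.5: the first claim is supported and the second is not. Swapping `lexical_judge` for an LLM-backed verifier with the same `(claim, context) -> bool` shape leaves the scoring logic unchanged; word overlap is only a rough proxy and will miss paraphrases.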

DeepEval Metrics

- G-Eval: A general-purpose, LLM-based metric for evaluating text generation against custom criteria.

- Summarization: Assesses the quality of text summaries.

- Answer Relevancy: Measures how well the response answers the query.

- Faithfulness: Assesses whether the response is factually consistent with the source information.

- Contextual Recall and Precision: Measure how effectively relevant context is retrieved.

- Hallucination: Identifies false or inaccurate information in a response.

- Toxicity: Detects potentially harmful or offensive content in the response.

- Bias: Identifies unfair preferences or tendencies in generated content.
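The retrieval metrics above can be illustrated once each retrieved chunk has been labeled relevant or not (DeepEval delegates that judgment to an LLM). The following sketch assumes those labels are already available as booleans in retrieval order. It computes a rank-aware contextual precision, averaging precision@k over the positions that hold a relevant chunk, and contextual recall as the fraction of expected-answer statements that the retrieved context supports. The function names are hypothetical and are not DeepEval's API.

```python
from typing import Sequence


def contextual_precision(relevance: Sequence[bool]) -> float:
    """Rank-aware precision over chunks listed in retrieval order.

    For every position k holding a relevant chunk, take precision@k (relevant
    chunks seen so far / k) and average those values; relevant chunks that
    appear late in the ranking therefore pull the score down.
    """
    hits = 0
    total = 0.0
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            total += hits / k
    return total / hits if hits else 0.0


def contextual_recall(attributable: Sequence[bool]) -> float:
    """Share of expected-answer statements that the retrieved context supports."""
    if not attributable:
        return 0.0
    return sum(attributable) / len(attributable)
```

With labels `[True, False, True]` the precision is 5/6, while `[True, True, False]` scores 1.0: the same number of relevant chunks, ranked higher, earns a higher score. This is why a rank-aware precision rewards retrievers that put relevant chunks first, whereas a plain proportion would score both rankings identically.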

TruLens Metrics

- Context Relevance: Evaluates how well the retrieved context matches the query.

- Groundedness: Measures whether the response is supported by the retrieved information.

- Answer Relevance: Evaluates how well the response answers the query.

- Comprehensiveness: Measures the completeness of the response.

- Harmful/Offensive Language: Identifies potentially offensive or dangerous content.

- User Sentiment: Analyzes the emotional tone of user interactions.

- Language Mismatch: Detects inconsistencies in language between the query and the response.

- Fairness and Bias: Assesses whether the system treats different groups fairly.

- Custom Feedback Functions: Let developers define their own evaluation metrics for specific use cases.
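Conceptually, a custom feedback function maps parts of an interaction (such as the query and the response) to a score, typically between 0 and 1. The plain-Python sketch below illustrates that idea without using the TruLens API. `language_match` is a hypothetical, crude language-mismatch check that compares the dominant Unicode script of the query and the response. `brevity` is a hypothetical domain-specific metric. The `Feedback` type, `run_feedback`, and the 50-word limit are likewise assumptions for illustration.

```python
import unicodedata
from collections import Counter
from typing import Callable, Dict

# Hypothetical shape of a custom feedback function: (query, response) -> score in [0, 1].
Feedback = Callable[[str, str], float]


def dominant_script(text: str) -> str:
    """Most frequent Unicode script prefix among letters, e.g. 'LATIN' or 'CJK'."""
    scripts = Counter(
        unicodedata.name(ch, "UNKNOWN").split()[0] for ch in text if ch.isalpha()
    )
    return scripts.most_common(1)[0][0] if scripts else "UNKNOWN"


def language_match(query: str, response: str) -> float:
    """Crude language-mismatch check: 1.0 if both texts share a dominant script."""
    return 1.0 if dominant_script(query) == dominant_script(response) else 0.0


def brevity(query: str, response: str, max_words: int = 50) -> float:
    """Hypothetical domain-specific metric: full score up to max_words, then decays."""
    n_words = len(response.split())
    return 1.0 if n_words <= max_words else max_words / n_words


def run_feedback(fns: Dict[str, Feedback], query: str, response: str) -> Dict[str, float]:
    """Apply every registered feedback function to one query/response pair."""
    return {name: fn(query, response) for name, fn in fns.items()}
```

Because it compares scripts rather than languages, `language_match` flags a Chinese query answered in English but not a French query answered in English. A real check would need a language-identification model plugged in behind the same signature.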

Best Practices for RAG Evaluation

- Holistic evaluation: Combine multiple metrics to assess different aspects of the RAG system.

- Regular benchmarking: Re-evaluate the system continuously as the pipeline changes.

- Human-in-the-loop review: Combine manual assessment with automated metrics for a more comprehensive analysis.

- Domain-specific metrics: Develop custom metrics tailored to specific use cases or domains.

- Error analysis: Analyze patterns in low-scoring responses to identify areas for improvement.

- Comparative evaluation: Benchmark your RAG system against baseline models and alternative implementations.
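Several of these practices (holistic evaluation, error analysis, and comparative evaluation) come down to simple bookkeeping once per-question scores exist. The sketch below assumes a hypothetical layout in which each configuration's results map a question id to metric scores in [0, 1], produced by whichever framework you use. It averages each metric, lists low-scoring questions for error analysis, and reports per-metric differences between a baseline and a candidate configuration. The 0.5 threshold and all function names are illustrative assumptions.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical layout: question id -> metric name -> score in [0, 1].
Scores = Dict[str, Dict[str, float]]


def summarize(scores: Scores) -> Dict[str, float]:
    """Average every metric across all questions (a multi-metric overview)."""
    metrics = sorted({m for row in scores.values() for m in row})
    return {m: mean(row[m] for row in scores.values() if m in row) for m in metrics}


def low_scoring(scores: Scores, metric: str, threshold: float = 0.5) -> List[str]:
    """Question ids scoring below `threshold` on `metric`, worst first."""
    flagged = [
        (row[metric], qid)
        for qid, row in scores.items()
        if metric in row and row[metric] < threshold
    ]
    return [qid for _, qid in sorted(flagged)]


def compare(baseline: Scores, candidate: Scores) -> Dict[str, float]:
    """Per-metric change in the mean score (candidate minus baseline)."""
    base, cand = summarize(baseline), summarize(candidate)
    return {m: cand[m] - base[m] for m in base if m in cand}
```

For example, if a baseline's faithfulness scores are 0.9, 0.4, and 0.3 for questions `q1` to `q3`, `low_scoring(baseline, "faithfulness")` returns `["q3", "q2"]`, worst case first; those are the responses to inspect by hand before changing the pipeline and re-running `compare`.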

Conclusion

A robust evaluation framework is critical to developing and maintaining a high-quality RAG system. By using a diverse set of metrics and following these best practices, developers can ensure that their RAG system delivers accurate, relevant, and trustworthy responses while continuously improving its performance.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
