Generally Capable Agents in Open-Ended Worlds [S62816]

1. Reflection

- The ability of a model to check and revise its own generated code or content, and to improve it iteratively.

- Self-reflection and revision can produce higher-quality results.

- It is a robust and effective technique suitable for a wide range of applications.

2. Tool use

- The ability to use a variety of tools to gather information, analyze data, and take action, expanding the range of applications.

- Early research on tool use came mainly from computer vision, because language models could not yet process images.

3. Planning

- Agents can show a surprising ability to devise and execute plans autonomously, based on the task objective.

- If a step fails, the agent can reroute around the failure, showing a degree of autonomy and adaptability.

- It's not entirely reliable yet, but when it works, it's pretty amazing.

4. Multi-agent collaboration

- Agents with different roles can work together on a task, and the group can perform better than a single agent.

- Multiple agents can hold extended dialogues and divide the labor to accomplish complex development or creative tasks.

- Multiple agents can also debate one another, which is an effective way to improve model performance.

I'm looking forward to sharing what I've learned about AI agents. I think this is an exciting trend that anyone involved in AI development should keep an eye on. I'm also looking forward to all the other talks on future trends.

So let's talk about AI agents. Today, most of us use large language models (LLMs) through a non-agentic workflow: we type in a prompt and the model generates an answer. It's a bit like asking someone to write an essay on a topic by sitting down at the keyboard and typing it from start to finish in one go, with no backspace allowed. Despite how hard this is, LLMs do it remarkably well.

An agentic workflow, in contrast, might look like this:

- Have the AI, or LLM, write an essay outline.
- Check whether it needs to look anything up online. If so, do the research.
- Write a first draft.
- Read the draft and decide which parts need changing.
- Revise the draft, and keep going.

This workflow is iterative. The LLM does some thinking, revises the essay, thinks some more, and so on. What few people realize is that this approach delivers much better results. I've been surprised myself by how well these agentic workflows work.
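The essay workflow above can be sketched as a short loop. This is a minimal sketch, not any product's implementation. Several pieces are assumptions made for illustration:

- the `llm` and `search` callables;
- the prompt wording;
- the YES/NO research check;
- the fixed number of revision rounds.

A scripted fake model stands in for a real one, so the control flow can be run offline.

```python
from typing import Callable

# Hypothetical signatures: an LLM maps a prompt to text; a search tool maps a query to text.
LLM = Callable[[str], str]
Search = Callable[[str], str]

def agentic_essay(topic: str, llm: LLM, search: Search, rounds: int = 2) -> str:
    """Outline -> optional web research -> first draft -> (critique -> revise) x rounds."""
    outline = llm(f"Write an outline for an essay on: {topic}")
    # Let the model decide whether research is needed (the YES/NO convention is an assumption).
    decision = llm(f"Does this outline need web research? Answer YES or NO.\n{outline}")
    notes = search(topic) if decision.strip().upper().startswith("YES") else ""
    draft = llm(f"Write a first draft.\nOutline:\n{outline}\nResearch notes:\n{notes}")
    for _ in range(rounds):
        # Reread the draft, decide what needs changing, then revise.
        critique = llm(f"Read this draft and list what should change:\n{draft}")
        draft = llm(f"Revise the draft.\nDraft:\n{draft}\nRequested changes:\n{critique}")
    return draft

# Demo with a scripted stand-in for a real model, so the control flow can be checked offline.
prompts: list[str] = []

def fake_llm(prompt: str) -> str:
    prompts.append(prompt)
    return "YES" if prompt.startswith("Does this outline") else f"text-{len(prompts)}"

searches: list[str] = []

def fake_search(query: str) -> str:
    searches.append(query)
    return "notes"

essay = agentic_essay("agentic workflows", fake_llm, fake_search, rounds=2)
```

To try it for real, replace `fake_llm` with a function that calls an actual model. The `rounds` parameter bounds the cost, since each round adds two model calls.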

Let me walk through a case study. My team analyzed some data using a coding benchmark called HumanEval, released by OpenAI a few years ago. It consists of coding problems such as: given a non-empty list of integers, return the sum of all the odd elements that are in even positions. The answer might be a code snippet like this.

Today, many of us use zero-shot prompting. That means we ask the AI to write the code and run it on the first pass. Who codes like this? Nobody writes code in one pass and then just runs it. Maybe you can. I can't.
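For concreteness, here is one plausible answer to the example problem, read as "sum the odd elements that sit at even positions". This is our own sketch rather than the benchmark's reference solution, and it assumes positions are counted from 0.

```python
def solution(lst: list[int]) -> int:
    """Return the sum of the odd elements at even (0-based) positions of a non-empty list."""
    # enumerate gives (index, value); keep values at even indices that are odd.
    # Python's % returns a non-negative result for a positive modulus, so -3 % 2 == 1.
    return sum(x for i, x in enumerate(lst) if i % 2 == 0 and x % 2 == 1)

example = solution([5, 8, 7, 1])  # positions 0 and 2 hold 5 and 7, both odd
```

A zero-shot answer has to get a one-liner like this right on the first try. That includes the off-by-one question of which positions count as even.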

In fact, GPT-3.5 with zero-shot prompting gets 48% of these problems right. GPT-4 does much better, at 67%. But if you build an agentic workflow on top of GPT-3.5, it does even better than GPT-4, and applying the same workflow to GPT-4 also gives very good results. So GPT-3.5 with an agentic workflow actually outperforms GPT-4 used zero-shot. I think that will have a big impact on how we build applications.

"AI agents" is a widely discussed term, and there are many consulting reports about AI agents, the future of AI, and so on. I'd like to be more concrete and share some of the common design patterns I see in AI agents. It's a messy, confusing field with tons of research and tons of open-source projects, and a lot is going on. I'll try to give a more concrete overview of it.

Reflection is a tool I think most of us should use. It really works, and I think it should be more widely used. It's a very robust technique: when I use it, I can pretty much always get it to work.

Planning and multi-agent collaboration, I think, are more of an emerging field. When I use them, I'm sometimes surprised at how well they work. But at least for now, I can't always get them to work reliably.

Let me go over these four design patterns in more detail in the next few slides. If you go back and try them yourselves, or have your engineers use them, I think you'll see productivity gains quickly.

Here's an example of reflection. Say I ask a system to write code for a task. We have a coder agent, which is just an LLM that we prompt to write the code, for example "define a function that does this task."

For the self-reflection step, you can prompt the LLM like this:

- Start with "Here's code intended for this task."
- Show it the exact same code it just generated.
- Ask it to check the code carefully for correctness, efficiency, and good structure.

It turns out that the same LLM you prompted to write the code may be able to spot a problem, such as a bug on line 5, and suggest a fix. If you then feed its own feedback back to it, it may produce a second version of the code that works better than the first. There are no guarantees, but this works often enough to be worth trying in many applications.

To foreshadow tool use: you can also let it run unit tests. If the code fails a test, you can ask it why. Through that conversation it may figure out the cause, change something, and generate a v3 of the code.

By the way, I'm very excited about these techniques. For those of you who want to learn more, I've included some recommended reading at the bottom of the slides for each part of this talk.
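The reflection loop with unit tests described above might be sketched like this. It assumes a hypothetical `llm` callable (prompt in, text out). The names `run_tests` and `reflect_and_fix` are illustrative, and the scripted replies simulate a model whose first version has a bug. Executing model-written code with `exec` is only reasonable inside a sandbox.

```python
from typing import Callable, Optional

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out
Case = tuple[tuple, object]  # (positional args, expected return value)

def run_tests(code: str, fn_name: str, tests: list[Case]) -> Optional[str]:
    """Execute candidate code; return a failure message, or None if every test passes."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # only execute model-written code inside a sandbox
        fn = namespace[fn_name]
        for args, expected in tests:
            got = fn(*args)
            if got != expected:
                return f"{fn_name}{args} returned {got!r}, expected {expected!r}"
    except Exception as exc:  # syntax errors, missing function, runtime errors
        return f"error: {exc!r}"
    return None

def reflect_and_fix(task: str, fn_name: str, tests: list[Case],
                    llm: LLM, max_rounds: int = 3) -> tuple[str, bool]:
    """Generate code (v1), then loop: run unit tests, ask the same LLM to critique its
    own code given the failure, and regenerate (v2, v3, ...) until the tests pass."""
    code = llm(f"Write a Python function `{fn_name}` that does this task:\n{task}")
    for _ in range(max_rounds):
        failure = run_tests(code, fn_name, tests)
        if failure is None:
            return code, True
        critique = llm(f"Here is code written for the task '{task}':\n{code}\n"
                       f"A unit test failed: {failure}\n"
                       "Check the code carefully for correctness, efficiency and structure.")
        code = llm(f"Rewrite the code to address this feedback:\n{critique}\n\n{code}")
    return code, run_tests(code, fn_name, tests) is None  # rounds exhausted

# Demo: a scripted stand-in model whose first attempt has a bug.
replies = iter([
    "def double(x):\n    return x + 2\n",                 # v1: wrong operator
    "Bug on line 2: it adds 2 instead of multiplying.",   # self-critique
    "def double(x):\n    return x * 2\n",                 # v2: fixed
])
final_code, passed = reflect_and_fix("double a number", "double",
                                     [((3,), 6), ((0,), 0)], lambda p: next(replies))
```

The test feedback is what makes this more than plain self-critique. The model is told exactly which case failed, rather than being asked to find problems unaided.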

To foreshadow multi-agent systems: I've described a single coder agent that you prompt to have this conversation with itself. A natural evolution of the idea is to use two agents instead of one, a coder and a reviewer. Both can be based on the same LLM, just prompted differently. To one we say, "You're an expert coder, write code." To the other we say, "You're an expert code reviewer, please review this code."

This workflow is actually quite easy to implement. I think it's a very general-purpose technique that fits many workflows, and it can significantly improve the LLM's performance.
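Here is a minimal sketch of the two-agent version. It assumes a hypothetical chat-style `llm(system_prompt, message)` function. As described above, both agents call the same model and differ only in their system prompts. The `APPROVED` stopping convention and the round limit are assumptions, and `fake_chat` is a scripted stand-in.

```python
from typing import Callable

# Hypothetical chat-model signature: (system prompt, user message) -> reply.
ChatLLM = Callable[[str, str], str]

CODER = "You are an expert coder. Write code for the task."
REVIEWER = ("You are an expert code reviewer. Review this code. "
            "Reply APPROVED if you find no problems.")

def coder_reviewer(task: str, llm: ChatLLM, max_rounds: int = 3) -> tuple[str, list[str]]:
    """Two agents backed by one model that differ only in their system prompts."""
    code = llm(CODER, task)
    reviews: list[str] = []
    for _ in range(max_rounds):
        review = llm(REVIEWER, code)
        reviews.append(review)
        if review.strip().startswith("APPROVED"):  # stopping convention is an assumption
            break
        code = llm(CODER, f"Task: {task}\nReviewer feedback:\n{review}\nRevise the code.")
    return code, reviews

# Demo: a scripted model that routes on the system prompt.
review_replies = iter(["Missing input validation.", "APPROVED"])

def fake_chat(system: str, message: str) -> str:
    if system == CODER:
        return "code-v2" if "Reviewer feedback" in message else "code-v1"
    return next(review_replies)

final, reviews = coder_reviewer("parse a date string", fake_chat)
```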

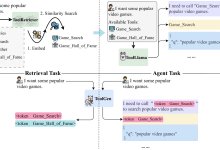

The second design pattern is tool use. Many of you have probably seen LLM-based systems use tools. On the left is a screenshot from Copilot, and on the right is something I pulled from GPT-4. If you ask today's LLMs a question like which copier is best, they can do a web search, and for some problems they will generate and run code. In fact, people use many different tools to perform analysis, gather information, take action, and improve personal productivity.

Much of the early research on tool use came from the computer vision community. Before large multimodal models arrived, language models could not process images. So the only option was to have the LLM generate a function call that manipulated images, for example to generate an image or run object detection. If you look closely at the literature, you'll see that a lot of tool-use research seems to have originated in vision, because before GPT-4V, LLaVA, and similar models, LLMs knew nothing about images. That is where tool use came in, and it expanded what LLMs can do.
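Tool use is often wired up by letting the model emit a structured tool call, which ordinary code then executes. The sketch below assumes a made-up JSON format (`{"tool": ..., "args": {...}}`) and two hypothetical tools. `web_search` is a stub rather than a real search API, and real LLM providers each define their own function-calling formats.

```python
import json
from typing import Callable

# Hypothetical tool registry: tool name -> Python function the model is allowed to call.
TOOLS: dict[str, Callable[..., str]] = {}

def tool(fn: Callable[..., str]) -> Callable[..., str]:
    TOOLS[fn.__name__] = fn
    return fn

@tool
def web_search(query: str) -> str:
    return f"(stub) top results for {query!r}"  # a real agent would call a search API here

@tool
def compound_interest(principal: float, rate: float, years: int) -> str:
    return f"{principal * (1 + rate) ** years:.2f}"

def dispatch(model_output: str) -> str:
    """If the model emitted a JSON tool call {"tool": name, "args": {...}}, run that tool
    and return its result; otherwise treat the output as the final answer."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return model_output
    name = call.get("tool") if isinstance(call, dict) else None
    if not isinstance(name, str) or name not in TOOLS:
        return model_output
    return TOOLS[name](**call.get("args", {}))

# Demo: outputs a model might produce (hand-written here, not real model output).
interest = dispatch('{"tool": "compound_interest", '
                    '"args": {"principal": 100, "rate": 0.1, "years": 2}}')
plain = dispatch("No tool needed.")
```

In a full agent, the tool's result would be appended to the conversation and the model called again. That is how a search result or a computed number ends up in the final answer.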

Next up is planning. Some of you haven't played with planning algorithms yet. A lot of people talk about the ChatGPT moment, that feeling of "wow, I've never seen anything like this." If you haven't used planning algorithms, I think many of you will have a similar AI-agent moment: "wow, I didn't think an AI agent could do this." I've done live demos where something failed and the AI agent replanned its path around the failure. In fact, there have been several occasions where I've been blown away by the autonomy of my own AI systems.

I adapted an example from the HuggingGPT paper. You ask the system to generate an image of a girl reading a book, in the same pose as the boy in an image such as example.jpeg, and then to describe the new image aloud. A current AI agent could handle this in steps:

- Determine the boy's pose, by finding a suitable model, perhaps on Hugging Face, to extract it.
- Find a model that takes the pose and synthesizes a picture of the girl according to the instructions.
- Use image-to-text, and finally text-to-speech.
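The pipeline in this example can be sketched as a plan of steps, each with candidate models. When a model fails, the next candidate takes over, which is a simple form of the rerouting described earlier. This is a simplification: here the plan is hand-written, whereas in the systems described the LLM itself writes the plan and chooses the models. All model functions are hypothetical stand-ins.

```python
from typing import Any, Callable

# A step is (name, candidate tools in order of preference); each tool maps input -> output.
Step = tuple[str, list[Callable[[Any], Any]]]

class StepFailed(Exception):
    pass

def execute_plan(plan: list[Step], data: Any) -> tuple[Any, list[str]]:
    """Run steps in order; if a tool raises, reroute to the next candidate for that step."""
    log: list[str] = []
    for name, candidates in plan:
        for tool in candidates:
            try:
                data = tool(data)
            except Exception as exc:
                log.append(f"{name}: {tool.__name__} failed ({exc})")
                continue
            log.append(f"{name}: {tool.__name__} ok")
            break
        else:  # no candidate succeeded: a fuller agent would ask the LLM to replan here
            raise StepFailed(f"no candidate could complete step {name!r}")
    return data, log

# Hypothetical stand-ins for models an agent might pick (e.g. from a model hub).
def pose_model_a(image: Any) -> Any:
    raise RuntimeError("model unavailable")

def pose_model_b(image: Any) -> Any:
    return {"source": image, "pose": "seated, holding a book"}

def pose_to_image(pose: Any) -> Any:
    return f"girl reading a book, pose={pose['pose']}"

def image_to_text(image: Any) -> Any:
    return f"A picture of a {image.split(',')[0]}"

def text_to_speech(text: Any) -> Any:
    return f"<audio: {text}>"

plan: list[Step] = [
    ("pose detection", [pose_model_a, pose_model_b]),
    ("pose-to-image", [pose_to_image]),
    ("image-to-text", [image_to_text]),
    ("text-to-speech", [text_to_speech]),
]
result, log = execute_plan(plan, "example.jpeg")
```

The log records that the first pose model failed and the second one took over, so the overall task still completes.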

Today there are a number of AI agents that aren't always reliable. They can be a bit finicky and don't always work, but when they do, the results are pretty amazing. With this kind of agentic loop, they can sometimes even recover from earlier failures.

I've started using research agents like this in some of my own work. When I need some research done but don't want to spend a lot of time searching myself, I hand the task to a research agent and come back a while later to see what it found. Sometimes it finds good results, sometimes not. Either way, it has become part of my personal workflow.

The last design pattern is multi-agent collaboration. This pattern may seem strange, but it works better than you might think. On the left is a screenshot from a paper called ChatDev, a project that is completely open, in fact open source. Many of you may have seen the flashy social media posts of the Devin demo, but I was able to run ChatDev on my laptop.

ChatDev is an example of a multi-agent system. You prompt a large language model (LLM) to take on different roles at a software company, such as CEO, designer, product manager, or tester. You just tell the LLM "you are now the CEO," "you are now a software engineer," and so on, and the agents start collaborating in an extended conversation. If you ask them to develop a game, such as Gomoku, they'll spend a few minutes writing code, testing, and iterating, and can produce surprisingly complex programs. It doesn't always work, and I've had my share of failures. But sometimes it performs amazingly well, and the technology keeps getting better.

Another design pattern is to have different agents debate. For example, you can have multiple agents, such as ChatGPT and Gemini, debate each other, which is also an effective way to improve performance. So having multiple simulated AI agents work together has proven to be a very powerful design pattern.
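The debate pattern can be sketched as rounds in which each agent sees the others' latest answers and may revise its own. The agent signature, the simultaneous-update rule, and the final majority vote are assumptions chosen for illustration. The scripted agents below stand in for real models, such as the ChatGPT and Gemini pairing mentioned above.

```python
from collections import Counter
from typing import Callable

# Hypothetical agent signature: (question, peers' latest answers) -> answer.
Agent = Callable[[str, list[str]], str]

def debate(question: str, agents: list[Agent], rounds: int = 2) -> tuple[str, list[str]]:
    """Each round, every agent sees the other agents' previous answers and may revise
    its own; the final answer is picked by majority vote (one simple aggregation rule)."""
    answers = [agent(question, []) for agent in agents]
    for _ in range(rounds):
        # The comprehension reads last round's answers, so all agents update simultaneously.
        answers = [agent(question, answers[:i] + answers[i + 1:])
                   for i, agent in enumerate(agents)]
    return Counter(answers).most_common(1)[0][0], answers

# Demo with scripted stand-ins for real models.
def confident(question: str, peers: list[str]) -> str:
    return "42"

def persuadable(question: str, peers: list[str]) -> str:
    # Starts with its own guess, then adopts the most common answer among its peers.
    return Counter(peers).most_common(1)[0][0] if peers else "41"

winner, final_answers = debate("What is 6 * 7?", [confident, persuadable, confident])
```

Majority voting is only one way to close a debate. A separate judge agent that reads the transcript is a common alternative.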

Overall, these are the design patterns I've observed. I think that if we apply them in our work, we can improve AI results faster. I believe agentic reasoning design patterns will be an important development.

This is my final slide. I expect the set of tasks AI can do to expand dramatically this year because of agentic workflows.

One thing people may find hard to accept is waiting. When we send a prompt to an LLM, we expect an immediate response. Ten years ago at Google, we had discussions about what we called "big-box search," where you would enter a very long prompt. I was unsuccessful in pushing it, because when you do a web search, you want a response within half a second. That's human nature: we like instant feedback.

But for many agentic workflows, I think we need to learn to delegate a task to an AI agent and patiently wait minutes, maybe even hours, for a response. Many novice managers delegate a task and then check in five minutes later, which is not productive. It's hard, but we need to avoid doing the same with our AI agents. I thought I heard some laughter.

Fast token generation is another important trend as we keep iterating on these agentic workflows. In an agentic workflow, the LLM reads and generates tokens for itself, so being able to generate tokens very quickly is great. I think generating more tokens quickly, even from a slightly lower-quality LLM, may give better results than generating tokens slowly from a higher-quality LLM. This idea may be somewhat controversial. But faster generation lets you go around the loop more times, much like the results I showed earlier for GPT-3.5 with an agentic architecture.
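The argument about speed is partly budget arithmetic. Within a fixed wall-clock budget, generation throughput determines how many times the agent can go around the loop. The sketch below uses made-up numbers, not measurements of any real model, and ignores prompt processing and tool latency. Whether the extra iterations outweigh lower model quality is the speaker's conjecture, which the code does not test.

```python
def iterations_within_budget(budget_s: float, tokens_per_s: float, tokens_per_iter: int) -> int:
    """Number of complete generate/critique/revise iterations that fit in a time budget,
    ignoring prompt-processing time and tool latency (a simplifying assumption)."""
    return int(budget_s * tokens_per_s // tokens_per_iter)

# Illustrative, made-up numbers: a 60 s budget and 2,000 generated tokens per iteration.
fast_small_model = iterations_within_budget(60, 300, 2000)  # 18,000 tokens -> 9 iterations
slow_large_model = iterations_within_budget(60, 50, 2000)   # 3,000 tokens -> 1 iteration
```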

Frankly, I'm looking forward to Claude 4, GPT-5, Gemini 2.0, and all the other wonderful models under construction. But if you're waiting to run your project on GPT-5 with zero-shot prompting, you may find something else. Using agentic reasoning and workflows on earlier models may get you close to GPT-5-level performance sooner than expected. I think this is an important trend.

Honestly, the path to AGI (artificial general intelligence) is more a journey than a destination. But I think agentic workflows may help us take a small step forward on that very long journey.

Thank you.