A 5-day roadmap for learning RAG

RAG is the abbreviation for Retrieval Augmented Generation. Let's break down this terminology to get a clearer understanding of what RAG is:

R -> Retrieve

A -> Augment

G -> Generate

Basically, the Large Language Models (LLMs) we use now are not updated in real time. If I ask an LLM (e.g. ChatGPT) about something outside its training data, it may hallucinate and give the wrong answer. To counter this, we give the LLM access to more data (often data that only a subset of people can access, not data that is publicly available). We then ask the LLM questions, and it draws on this data to provide relevant information. If we don't use RAG, the following may happen:

- Increased likelihood of hallucinations

- Outdated LLM knowledge

- Reduced accuracy and factuality

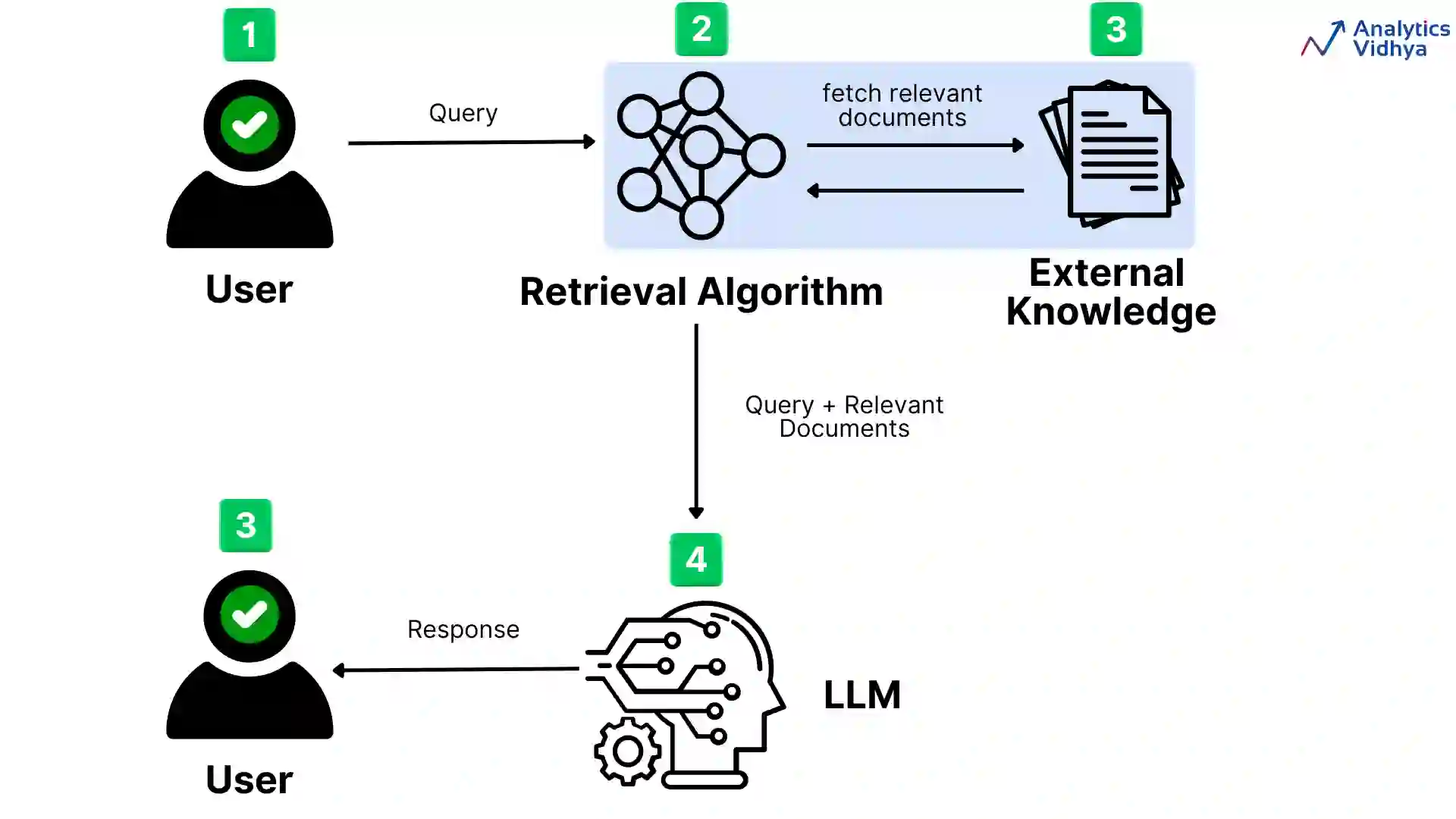

You can refer to the diagram for an overview:

RAG is a hybrid system that combines the advantages of a retrieval-based system with an LLM to generate more accurate, relevant and informative responses. This approach uses external knowledge sources in the generation process, enhancing the model's ability to provide up-to-date and contextualized information. In the above diagram:

- In the first step, the user makes a query to the LLM.

- The query is then sent to the retriever.

- After that, the retriever fetches the relevant documents from the external knowledge source.

- The retrieved documents are sent to the Language Model (LLM) along with the original query.

- The generator processes both the query and the associated documents, generates a response, and then returns it to the user.

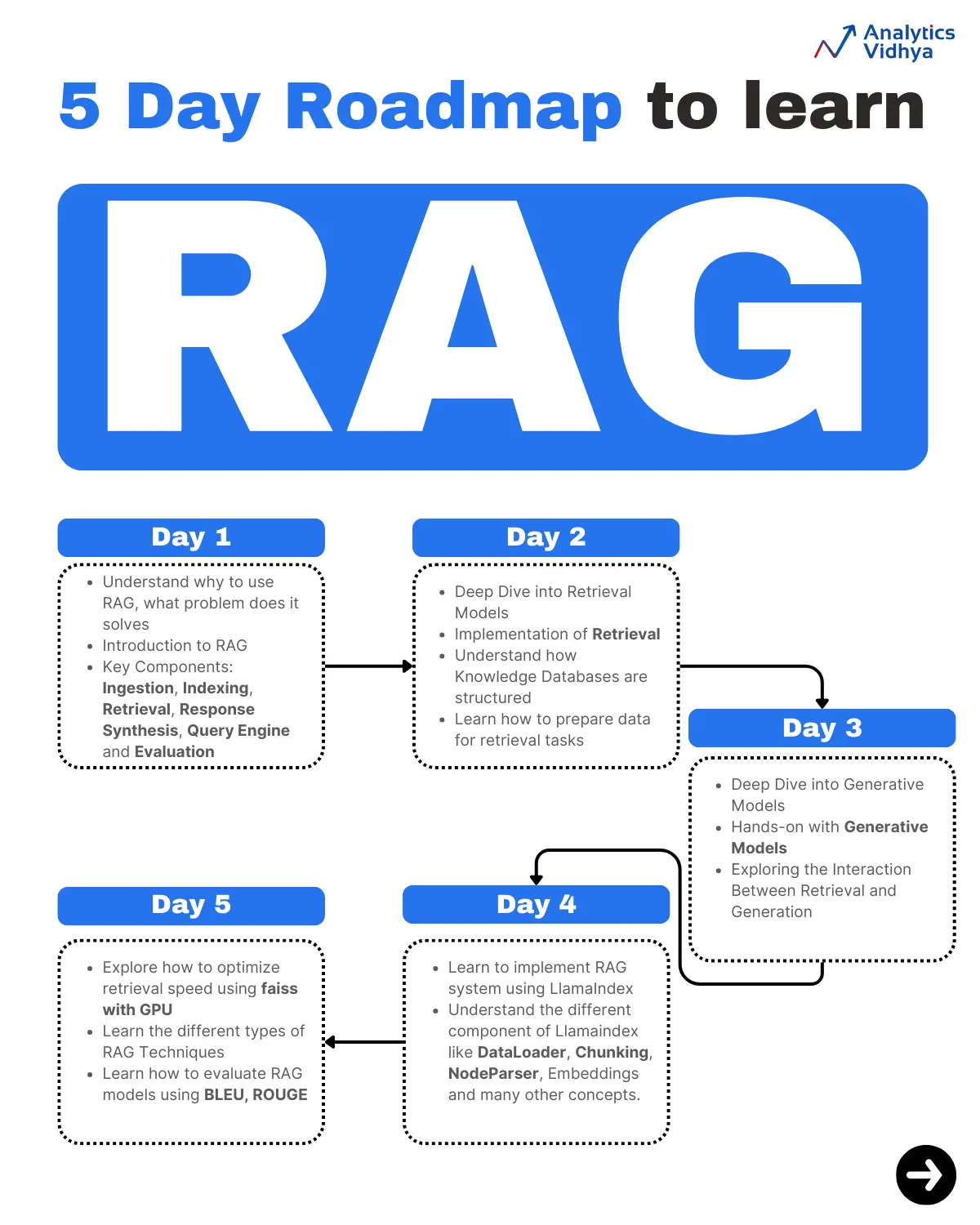
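The steps above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the knowledge base is made up, `retrieve` ranks by simple word overlap instead of a real retrieval model, and `generate` is a placeholder where a real system would call an LLM. All function names are hypothetical.

```python
from collections import Counter

# Toy knowledge base (made-up documents for illustration).
KNOWLEDGE_BASE = [
    "RAG stands for Retrieval Augmented Generation.",
    "BM25 is a sparse retrieval method based on term statistics.",
    "FAISS is a library for fast similarity search over dense vectors.",
]

def tokenize(text):
    """Lowercase, split on whitespace, and strip simple punctuation."""
    words = (w.strip(".,?!:;").lower() for w in text.split())
    return [w for w in words if w]

def retrieve(query, documents, top_k=2):
    """Rank documents by shared query tokens (a stand-in for a real retriever)."""
    query_tokens = Counter(tokenize(query))
    scored = []
    for doc in documents:
        overlap = sum((query_tokens & Counter(tokenize(doc))).values())
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]

def generate(query, retrieved_docs):
    """Placeholder generator: a real system would call an LLM here."""
    context = " ".join(retrieved_docs) if retrieved_docs else "(no context found)"
    return f"Answer to '{query}' based on: {context}"

def rag_answer(query):
    """Query -> retrieve documents -> pass query plus documents to the generator."""
    docs = retrieve(query, KNOWLEDGE_BASE)
    return generate(query, docs)

print(rag_answer("What does RAG stand for?"))
```

The point of the sketch is the data flow: the generator never sees the whole knowledge base, only the query plus the handful of documents the retriever picked.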

Now I know you are totally interested in learning RAG from basic to advanced, so let me show you the perfect roadmap to learn the RAG system in just 5 days. Yes, you heard me right, you can master the RAG system in just 5 days. Let's get straight to the learning roadmap:

Day 1: Laying the groundwork for RAG

The core objective of Day 1 is to understand RAG as a whole and to explore the key components of RAG. Below is a breakdown of the topics for Day 1:

RAG Overview.

- Recognize the function and importance of RAG and its place in modern NLP.

- The main idea is that retrieval-augmented generation (RAG) enhances the effectiveness of the generative model by introducing external information.

Key components.

- Learn retrieval and generation separately.

- Understand the architecture of retrieval (e.g., Dense Passage Retrieval (DPR), BM25) and generation (e.g., GPT, BART, T5).

Day 2: Building Your Own Retrieval System

The core objective of Day 2 is to successfully implement a retrieval system (even if it is a basic one). Below is a breakdown of the topics for Day 2:

An In-Depth Look at Retrieval Models.

- Learn the difference between dense and sparse retrieval:

- Dense retrieval: DPR, ColBERT.

- Sparse retrieval: BM25, TF-IDF.

- Explore the advantages and disadvantages of each method.
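To make the dense/sparse contrast concrete, here is a minimal sketch in plain Python. The BM25 function follows the standard Okapi formula with common default parameters (`k1=1.5`, `b=0.75`). The "dense" half is only an assumption for illustration: the 3-dimensional vectors are made up by hand, whereas a real dense retriever such as DPR would produce them with a trained encoder.

```python
import math
from collections import Counter

docs = [
    "the cat sat on the mat",
    "dogs chase cats in the park",
    "stock markets fell sharply today",
]
tokenized = [d.split() for d in docs]

def bm25_scores(query, tokenized_docs, k1=1.5, b=0.75):
    """Score each document against the query with the Okapi BM25 formula."""
    n_docs = len(tokenized_docs)
    avg_len = sum(len(d) for d in tokenized_docs) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in tokenized_docs for term in set(d))
    scores = []
    for d in tokenized_docs:
        tf = Counter(d)
        score = 0.0
        for term in query.split():
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def cosine(u, v):
    """Cosine similarity, the usual comparison for dense embeddings."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Sparse retrieval: only exact term matches contribute to the score.
sparse = bm25_scores("cat mat", tokenized)

# Dense retrieval: hand-made vectors standing in for encoder output.
doc_vectors = [[0.9, 0.1, 0.0], [0.8, 0.3, 0.1], [0.0, 0.1, 0.9]]
query_vector = [1.0, 0.2, 0.0]
dense = [cosine(query_vector, v) for v in doc_vectors]

print("BM25:  ", [round(s, 3) for s in sparse])
print("Cosine:", [round(s, 3) for s in dense])
```

In this toy setup the second document scores zero under BM25 because it contains "cats" rather than "cat", while its hand-made vector still lands close to the query. That is the usual trade-off: sparse methods are cheap and precise on exact terms, dense methods can match related wording but depend on the quality of the encoder.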

Retrieval Implementation.

- Use libraries such as Elasticsearch (for sparse retrieval) or FAISS (for dense retrieval) to accomplish basic retrieval tasks.

- Understand how to retrieve relevant documents from the knowledge base with Hugging Face's DPR tutorial.

Knowledge bases.

- Understand the structure of the knowledge base.

- Learn how to prepare data for retrieval tasks such as preprocessing corpora and indexing documents.
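As a rough idea of what preprocessing and indexing can look like, the sketch below normalizes a small corpus, splits it into overlapping chunks, and builds an inverted index. The chunk size, the overlap, and the helper names are arbitrary choices for illustration; production systems usually hand this work to a search engine or vector store.

```python
import re
from collections import defaultdict

def preprocess(text):
    """Lowercase the text and keep only alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def chunk(tokens, size=8, overlap=2):
    """Split a token list into overlapping windows of at most `size` tokens."""
    step = size - overlap
    last_start = max(len(tokens) - overlap, 1)
    return [tokens[i:i + size] for i in range(0, last_start, step)]

def build_inverted_index(chunks):
    """Map each term to the set of chunk ids that contain it."""
    index = defaultdict(set)
    for chunk_id, chunk_tokens in enumerate(chunks):
        for term in chunk_tokens:
            index[term].add(chunk_id)
    return index

corpus = (
    "Retrieval Augmented Generation combines a retriever with a generator. "
    "The retriever looks up passages in a knowledge base, and the generator "
    "writes an answer from them."
)
tokens = preprocess(corpus)
chunks = chunk(tokens)
index = build_inverted_index(chunks)

print(len(chunks), "chunks")
print("chunks containing 'retriever':", sorted(index["retriever"]))
```

Overlapping chunks are a common way to avoid cutting a relevant sentence in half at a chunk boundary; the inverted index is what lets a sparse retriever find candidate chunks without scanning the whole corpus.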

Day 3: Fine-tuning the generative model and observing the results

The goal of Day 3 is to fine-tune the generation model and observe the results to understand the role of retrieval in enhancing generation. Below is a breakdown of the topics for Day 3:

An in-depth look at generative models.

- Examine pretrained models such as T5, GPT-2, and BART.

- Learn the fine-tuning process for generation tasks (e.g., question answering or summarization).

Hands-on with generative models.

- Use a model from Hugging Face Transformers and fine-tune it on a small dataset.

- Test the generative model by having it generate answers to questions.

Exploring the Interaction of Retrieval and Generation.

- Investigate how the retrieved data is fed into the generative model as input.

- Recognize how retrieval can improve the accuracy and quality of generated responses.
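One simple way retrieved data can reach the generative model is by concatenating the passages with the question into a single input string. The template and the `build_prompt` helper below are hypothetical; the exact input format varies from model to model.

```python
def build_prompt(question, passages, max_passages=3):
    """Concatenate retrieved passages and the question into one model input."""
    selected = passages[:max_passages]
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(selected))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

# Made-up retrieved passages for illustration.
passages = [
    "BM25 is a sparse retrieval method.",
    "DPR is a dense retrieval method.",
    "BART is a sequence-to-sequence model.",
    "This fourth passage is dropped by max_passages.",
]
prompt = build_prompt("Which retrieval methods are sparse?", passages)
print(prompt)
```

Capping the number of passages matters because the model's input length is limited, and passages ranked lower by the retriever tend to add more noise than signal.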

Day 4: Implementing a working RAG system

Now we are closer to our goal. The main goal for today is to implement a working RAG system on a simple dataset and get familiar with tuning its parameters. Here is a breakdown of the topics for Day 4:

Combining retrieval and generation:

- Integrate the generation and retrieval components into a single system.

- Enable interaction between the retrieval output and the generative model.

RAG pipeline using LlamaIndex:

- Learn the features of the RAG pipeline through official documentation or tutorials.

- Set up and run an example using LlamaIndex's RAG pipeline.

Hands-on experimentation:

- Start experimenting with different parameters, such as the number of documents retrieved, the beam search strategy used for generation, and temperature scaling.

- Try running the model on simple knowledge-intensive tasks.
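To get a feel for what temperature scaling does before touching a real model, here is a small standalone sketch. The logits are made-up scores for three candidate tokens; in a real generator they would come from the model.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # made-up next-token scores
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}:", [round(p, 3) for p in probs])
```

A temperature below 1 concentrates probability on the top-scoring token, which tends to suit factual question answering; a temperature above 1 flattens the distribution and makes sampling more varied.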

Day 5: Building and fine-tuning a more robust RAG system

The goal of the final day is to create a more robust RAG model by fine-tuning it and understanding the different types of RAG models. Below is a breakdown of the topics for Day 5:

- Advanced fine-tuning: Discover how to optimize the generation and retrieval components for domain-specific tasks.

- Scaling: Scale your RAG system with larger datasets and more complex knowledge bases.

- Performance Optimization: Learn how to optimize memory usage and retrieval speed (e.g., by using FAISS on the GPU).

- Evaluation: Learn how to evaluate RAG models on knowledge-intensive tasks, using metrics such as BLEU and ROUGE to measure answer quality.
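As a taste of how overlap-based metrics work, the sketch below computes a simplified ROUGE-1 (unigram overlap) between a generated answer and a reference. It is an illustration only: it skips the stemming and tokenization rules that full ROUGE implementations apply, and the example sentences are made up.

```python
from collections import Counter

def rouge_1(candidate, reference):
    """Unigram-overlap precision, recall and F1 (a simplified ROUGE-1)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped count of shared unigrams
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    if overlap == 0:
        return 0.0, 0.0, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

reference = "rag combines retrieval with generation"
candidate = "rag combines retrieval and generation"
precision, recall, f1 = rouge_1(candidate, reference)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Overlap metrics reward matching wording rather than factual correctness, so for RAG they are usually best read alongside a check of whether the answer is actually supported by the retrieved documents.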

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
