Top 5 AI Agent Frameworks Worth Getting Into in 2025

The most common translation of "Agent" I've seen so far is "intelligent body", although the literal translation is simply "agent".

And how should "Agentic" be translated? I think something like "agent-style" would be more appropriate.

So as not to confuse the reader, I'm going to use English directly in this article.

As LLMs evolve, AI capabilities are no longer limited to simple task automation; they can now handle complex, continuous workflows. For example, we can use an LLM to create an intelligent assistant that automatically orders items on an e-commerce platform and arranges delivery on the user's behalf. Such LLM-based assistants are called AI Agents.

To be more specific, an AI Agent is an LLM-driven assistant that carries out specific tasks using predefined instructions and tools. In its most basic form, an AI Agent has the following key features:

- Memory management: The AI Agent can store and manage records of its interactions with users.

- External data source interaction: The Agent can communicate with external systems to obtain data or accomplish tasks.

- Function execution: The actual work is done by calling functions.
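The following framework-free sketch shows how these three features fit together in one loop. The LLM's decision is replaced by a simple keyword rule, and the external data source is a hard-coded dictionary. PRICES, get_price and MiniAgent are hypothetical names used only for illustration; they do not belong to any framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical "external data source": a hard-coded price table standing in
# for a real API or database.
PRICES = {"apple": 1.2, "banana": 0.5}


def get_price(item: str) -> str:
    """A tool the Agent can call (function execution)."""
    price = PRICES.get(item)
    return f"{item} costs ${price:.2f}" if price is not None else f"no price for {item}"


@dataclass
class MiniAgent:
    tools: Dict[str, Callable[[str], str]]
    memory: List[dict] = field(default_factory=list)  # record of every interaction

    def decide(self, user_msg: str) -> Optional[Tuple[str, str]]:
        # Stand-in for the LLM: a real Agent would ask the model which tool to
        # call; here we pick get_price if the message mentions a known item.
        for item in PRICES:
            if item in user_msg.lower():
                return "get_price", item
        return None

    def run(self, user_msg: str) -> str:
        self.memory.append({"role": "user", "content": user_msg})
        decision = self.decide(user_msg)
        if decision is None:
            reply = "Sorry, I can't help with that."
        else:
            tool_name, arg = decision
            reply = self.tools[tool_name](arg)  # execute the chosen function
            self.memory.append({"role": "tool", "content": reply})
        self.memory.append({"role": "assistant", "content": reply})
        return reply


agent = MiniAgent(tools={"get_price": get_price})
print(agent.run("How much is an apple?"))  # apple costs $1.20
print([m["role"] for m in agent.memory])   # ['user', 'tool', 'assistant']
```

In a real framework, decide() would be an LLM call that returns a tool name and arguments. The memory list would then be sent back to the model on the next turn.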

For example, an AI Agent can perform the following tasks:

- Restaurant reservations: An AI Agent in a restaurant system can help users book tables online, compare different restaurants, and even call restaurants directly through voice interaction.

- Virtual coworkers: An AI Agent can act as your project's "little secretary", collaborating with users to accomplish specific tasks.

- Automating everyday operations: An AI Agent can handle multi-step operations and even perform everyday computer tasks. For example, Replit Agent can simulate the operations developers perform in a development environment, such as installing dependencies and editing code automatically. Anthropic's Computer Use lets Claude operate a computer the way users typically do, including moving the mouse, clicking buttons, and entering text.

Although we can build AI Agents from scratch with a tech stack such as Python and React, development is much more efficient with Multi-Agent frameworks such as Phidata, OpenAI Swarm, LangGraph, Microsoft Autogen, CrewAI, Vertex AI, and Langflow. These frameworks provide prepackaged tools and features that help us build AI assistants quickly.

So what are the advantages of using these frameworks?

- Choosing the right LLM: Depending on the application scenario, you can build AI Agents on LLMs from providers such as OpenAI, Anthropic, xAI, and Mistral, or run local LLMs through tools such as Ollama and LM Studio.

- Adding a knowledge base: These frameworks let you add specific documents (such as JSON or PDF files, or websites) as knowledge bases that help the AI Agent acquire and understand information.

- Built-in memory: There is no need to build complex systems to store and manage chat transcripts or personalized conversations. The framework comes with a memory feature that helps the AI Agent remember and access previous interactions over time.

- Custom tools: Multi-Agent frameworks let us add custom tools to an AI Agent and integrate it with external systems to perform operations such as online payments, web searches, API calls, database lookups, video viewing, emailing, and more.

- Simplifying Engineering Challenges: These frameworks help us simplify complex engineering tasks such as knowledge and memory management, thereby reducing the technical difficulty when developing AI products.

- Accelerated development and deployment: The framework provides the tools and infrastructure needed to build AI systems, helping us to more quickly develop and deploy AI systems to cloud platforms such as AWS.
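Custom tools usually work because the framework describes each ordinary function to the LLM as a JSON schema. The sketch below builds such a description in the OpenAI function-calling "tools" format from a function's signature and docstring. It assumes only simple parameter types (str, int, float, bool). function_to_tool_schema and send_email are hypothetical helpers, and each framework has its own implementation of this step.

```python
import inspect
import json
from typing import get_type_hints

# Map simple Python annotations to JSON Schema types (assumption: no nested types).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def function_to_tool_schema(func) -> dict:
    """Build an OpenAI-style function-calling schema from a plain function."""
    hints = get_type_hints(func)
    sig = inspect.signature(func)
    properties, required = {}, []
    for name, param in sig.parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name, str), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are mandatory
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


def send_email(to: str, subject: str, urgent: bool = False) -> str:
    """Send an email (hypothetical example tool)."""
    return f"sent to {to}"


print(json.dumps(function_to_tool_schema(send_email), indent=2))
```

The LLM only ever sees this schema. When it decides to call send_email, the framework maps the returned arguments back onto the real Python function.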

With these frameworks, we can more easily develop efficient and intelligent AI Agents to improve the speed and quality of product development.

Next, we'll dive into the top five platforms for building AI Agents to help you get started and build your own AI assistant!

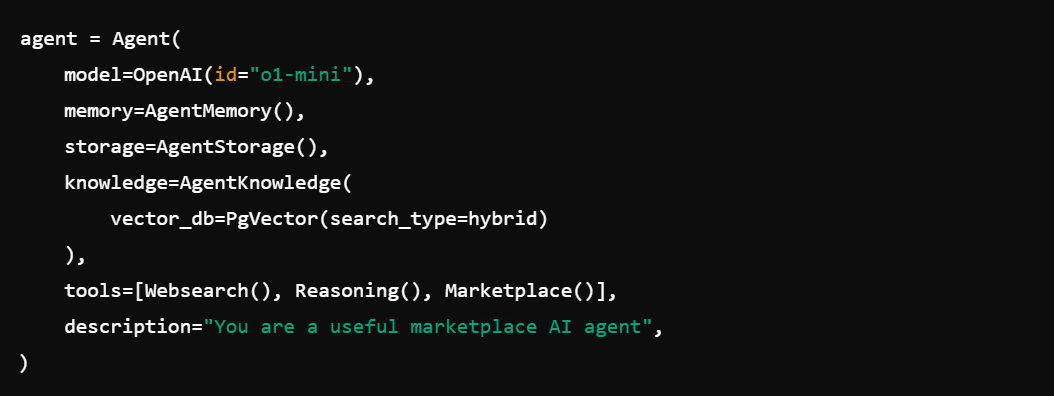

The basic structure of an Agent

The simplest AI Agent solves a problem by using a language model. An Agent's definition may include the choice of a large or small language model, memory, storage, external knowledge sources, vector databases, instructions, a description, a name, and so on.

For example, a modern AI Agent such as Windsurf can help anyone quickly generate, run, edit, build, and deploy full-stack web applications. It supports code generation and application building for a wide range of web technologies and databases, such as Astro, Vite, Next.js, Supabase, and more.

Multi-Agent Use Cases in the Enterprise

Agentic AI systems have a wide range of applications in corporate environments, especially for automated and repetitive tasks. Here are the key scenarios in which AI Agents are useful in the enterprise:

- Call and other analytics: Analyze participants' video calls to gain insight into their sentiment, intent, and satisfaction. Multi-Agent systems excel at analyzing and reporting on user intent, demographics, and interactions, and these analytical and reporting capabilities help organizations target customers or markets.

- Call classification: Automatically categorize calls based on participants' network bandwidth and signal strength for efficient processing.

- Market listening: Monitor and analyze customer sentiment in market applications.

- Survey and review analysis: Use customer feedback and survey data to gain insights and improve the customer experience.

- Travel and expense management: Automate expense reporting, tracking, and approval.

- Conversational banking: Help customers with banking through AI-powered chat or voice assistants.

- General AI support chatbots: Customer-support Agents can handle customer complaints, troubleshoot problems, and delegate complex tasks to other Agents.

- Finance: Financial Agents can be used to forecast economic, stock, and market trends and to provide practical investment advice.

- Marketing: Marketing teams can use AI Agents to create personalized content and ad copy for different target audiences to increase conversion rates.

- Sales: AI Agents can analyze customer interaction patterns in the system and help sales teams focus on converting leads.

- Technology: In the technology industry, AI coding Agents make developers and engineers more productive by accelerating code completion, code generation, automation, testing, and bug fixing.

Five Top Agent Frameworks of 2024

You can use several Python frameworks to create Agents and add them to applications and services. These frameworks include no-code (visual AI Agent builders), low-code, and medium-code tools. Below I introduce five of the top Python-based Agent builders of 2024, so you can choose the one that fits your business needs.

1 Phidata

Phidata is a Python-based framework for turning LLMs into Agents for AI products. It supports closed-source and open-source LLMs from major providers such as OpenAI, Anthropic, Cohere, Ollama, and Together AI. With its support for databases and vector stores, we can easily connect AI systems to Postgres, PgVector, Pinecone, LanceDB, and more. With Phidata, we can build basic Agents as well as advanced Agents that use function calling, structured output, and fine-tuning.

Phidata's key features

- Built-in Agent UI: Phidata provides an out-of-the-box user interface for running Agent projects locally or in the cloud and managing sessions in the background.

- Deployment: You can publish the Agent to GitHub or any cloud service, or connect an AWS account to deploy it to a production environment.

- Monitoring key metrics: Provides session snapshots, API call counts, and token usage, and supports tuning settings and improving the Agent.

- Template support: Speeds up the development and production of AI Agents with preconfigured codebase templates.

- AWS support: Phidata integrates seamlessly with AWS and can run full applications in an AWS account.

- Model agnosticism: Supports the latest models and API keys from OpenAI, Anthropic, Groq, Mistral, and other providers.

- Building Multi-Agent: Using Phidata, you can create a team of Agents that can pass tasks to each other and collaborate on complex tasks; Phidata handles the coordination of Agents seamlessly in the background.
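To make the team idea concrete, here is a framework-free sketch of a coordinator that delegates subtasks to member Agents and merges their answers. It is not Phidata's API: routing by keyword is a hypothetical stand-in for the LLM-driven delegation Phidata performs in the background. Member, TeamLeader, web_agent and finance_agent are illustrative names, and the member "work" is stubbed with fixed strings.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Member:
    name: str
    skills: List[str]             # keywords this member handles (hypothetical routing rule)
    handle: Callable[[str], str]  # stand-in for the member Agent's own LLM call


class TeamLeader:
    """Routes each subtask to the first matching member, then merges the results."""

    def __init__(self, members: List[Member]):
        self.members = members

    def delegate(self, subtask: str) -> str:
        for m in self.members:
            if any(skill in subtask.lower() for skill in m.skills):
                return f"[{m.name}] {m.handle(subtask)}"
        return f"[leader] no member can handle: {subtask}"

    def run(self, subtasks: List[str]) -> str:
        return "\n".join(self.delegate(t) for t in subtasks)


web = Member("web_agent", ["news", "search"], lambda t: "found 3 articles")
finance = Member("finance_agent", ["price", "stock"], lambda t: "NVDA data table")
team = TeamLeader([web, finance])
print(team.run(["Search news about NVDA", "Get stock price for NVDA"]))
```

In a real multi-Agent framework, the leader itself is an LLM that decides which member to call and how to combine the members' outputs.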

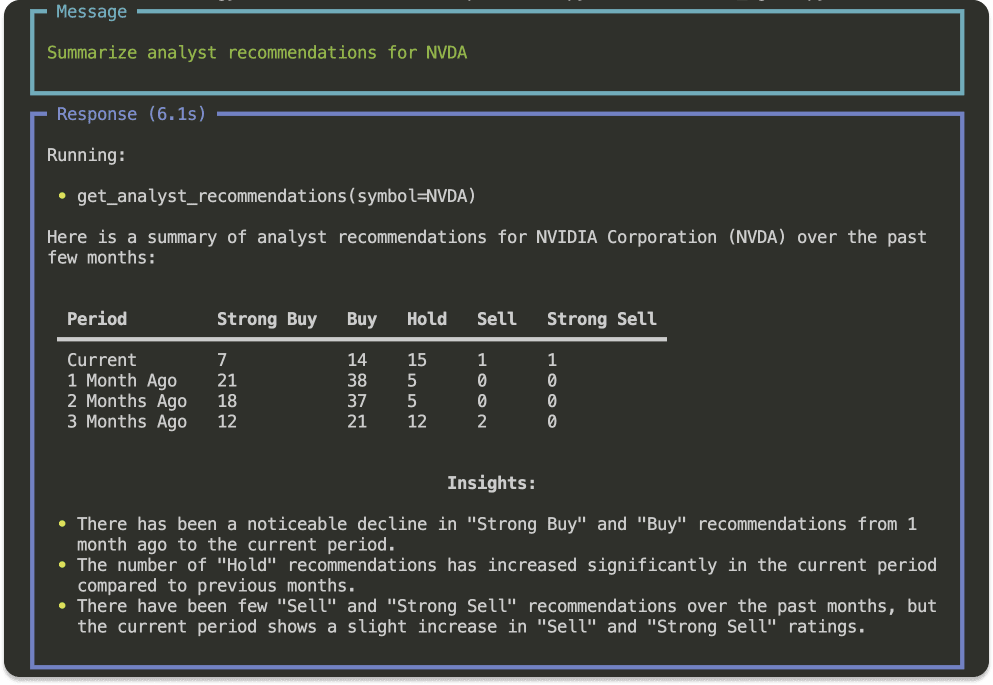

Now I'll show you how to use the Phidata framework and an OpenAI LLM to build a basic AI Agent in Python that queries financial data from Yahoo Finance. The Agent is designed to aggregate analysts' recommendations for different companies through Yahoo Finance.

Install the dependencies that the script below imports: phidata, openai, yfinance, and python-dotenv.

Create a new file named financial_agent.py:

import openai
from phi.agent import Agent
from phi.model.openai import OpenAIChat
from phi.tools.yfinance import YFinanceTools
from dotenv import load_dotenv
import os

# Load environment variables from the .env file
load_dotenv()

# Read the API key from the environment
openai.api_key = os.getenv("OPENAI_API_KEY")

# Initialize the Agent
finance_agent = Agent(
    name="Finance AI Agent",
    model=OpenAIChat(id="gpt-4o"),
    tools=[
        YFinanceTools(
            stock_price=True,
            analyst_recommendations=True,
            company_info=True,
            company_news=True,
        )
    ],
    instructions=["Use tables to display data"],
    show_tool_calls=True,
    markdown=True,
)

# Print a summary of analyst recommendations for NVDA
finance_agent.print_response("Summarize analyst recommendations for NVDA", stream=True)

How the code above works:

- Importing modules and loading API keys: First, import the required modules and packages and load OpenAI's API key from the .env file. The same approach to loading API keys applies to other model providers such as Anthropic, Mistral, and Groq.

- Creating an Agent: Use Phidata's Agent class to create a new Agent and specify its characteristics, including the model, tools, instructions, and more.

- Printing the response: Call the print_response method to output the Agent's answer to the question, and specify whether to stream it (stream=True).

2 Swarm

Swarm is an open-source, experimental Agent framework recently released by OpenAI. It is a lightweight Multi-Agent orchestration framework.

Note: Swarm is still experimental. It can be used for development and educational purposes, but it is not recommended for production environments. For the latest information, refer to the official repository:

https://github.com/openai/swarm

Swarm uses Agents and handoffs as its abstractions for Agent orchestration and coordination. It is a lightweight framework that is easy to test and manage. Swarm Agents can be configured with tools, instructions, and other parameters to perform specific tasks.

In addition to its lightweight and simple architecture, Swarm has the following key features:

- Handoffs: Swarm supports building Multi-Agent systems in which one Agent can hand off a conversation to another Agent at any time.

- Scalability: Thanks to its simple handoff architecture, Swarm makes it easy to build Agent systems that can support millions of users.

- Customizability: Swarm is designed to be highly customizable and can be used to create fully customized Agent experiences.

- Built-in retrieval system and memory handling: Swarm has built-in functionality for storing and processing conversation content.

- Privacy: Swarm runs almost entirely on the client side and does not retain state between calls, which helps keep data private.

- Educational resources: Swarm provides a range of basic to advanced Multi-Agent application examples for developers to test and learn.

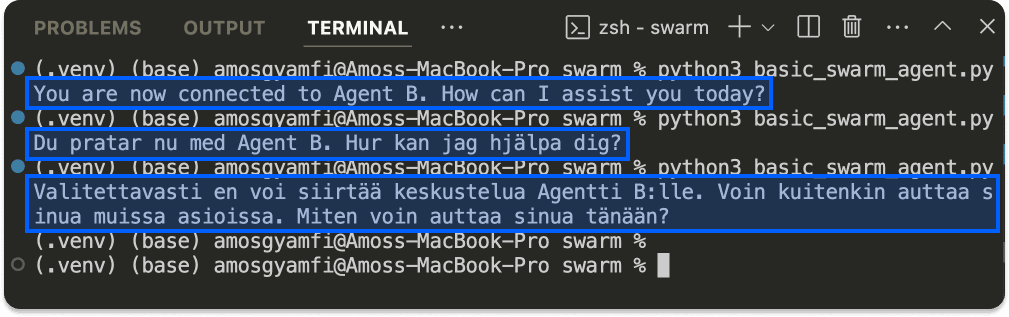

Next, I'll show you how to use Swarm:

from swarm import Swarm, Agent

# Initialize the Swarm client
client = Swarm()

mini_model = "gpt-4o-mini"

# Coordination function that hands the conversation off to Agent B
def transfer_to_agent_b():
    return agent_b

# Define Agent A
agent_a = Agent(
    name="Agent A",
    instructions="You are a helpful assistant.",
    functions=[transfer_to_agent_b],
)

# Define Agent B
agent_b = Agent(
    name="Agent B",
    model=mini_model,
    instructions="You speak only in Finnish.",
)

# Run the Agent system and get the response
response = client.run(
    agent=agent_a,
    messages=[{"role": "user", "content": "I want to talk to Agent B."}],
    debug=False,
)

# Print Agent B's response
print(response.messages[-1]["content"])

How the code above works:

- Initialization
  - Swarm() creates a client instance.
  - Agent defines each Agent's name, instructions, functions, and language model (e.g., gpt-4o-mini).

- Handoff logic
  - transfer_to_agent_b is a coordination function that hands the conversation from agent_a to agent_b.

- Running the Agent system
  - client.run() executes the Agent system. It takes the messages and a debug flag that controls whether execution details are printed.

If you change the language in agent_b's instructions to another language (e.g., English or Swedish), you will get a response in that language.
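Under the hood, the handoff relies on one rule: when a function called by the active Agent returns another Agent, that Agent takes over the conversation. The sketch below reproduces only this rule without calling any model. It is not Swarm's source code. SketchAgent, choose_function and run are hypothetical names, and the LLM's decision to call a function is replaced by a keyword check.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple


@dataclass
class SketchAgent:
    name: str
    instructions: str
    # Stand-in for the LLM deciding which function to call (None = just reply).
    choose_function: Callable[[str], Optional[str]] = lambda msg: None
    functions: Dict[str, Callable[[], object]] = field(default_factory=dict)


def run(agent: SketchAgent, message: str, max_turns: int = 5) -> Tuple[SketchAgent, str]:
    """Follow handoffs: if a called function returns an Agent, that Agent takes over."""
    active = agent
    for _ in range(max_turns):
        fn_name = active.choose_function(message)
        if fn_name is None:
            # No tool call: the active Agent answers (stubbed as quoting its instructions).
            return active, f"{active.name}: ({active.instructions})"
        result = active.functions[fn_name]()
        if isinstance(result, SketchAgent):
            active = result  # handoff: switch the active Agent
        else:
            return active, str(result)  # ordinary tool result
    return active, f"{active.name}: stopped after {max_turns} turns"


agent_b = SketchAgent("Agent B", "You speak only in Finnish.")


def transfer_to_agent_b() -> SketchAgent:
    return agent_b


agent_a = SketchAgent(
    "Agent A",
    "You are a helpful assistant.",
    choose_function=lambda msg: "transfer_to_agent_b" if "agent b" in msg.lower() else None,
    functions={"transfer_to_agent_b": transfer_to_agent_b},
)

final_agent, reply = run(agent_a, "I want to talk to Agent B.")
print(final_agent.name, "->", reply)
```

The max_turns cap is a safety net added for the sketch, so that Agents handing off to each other in a cycle cannot loop forever.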

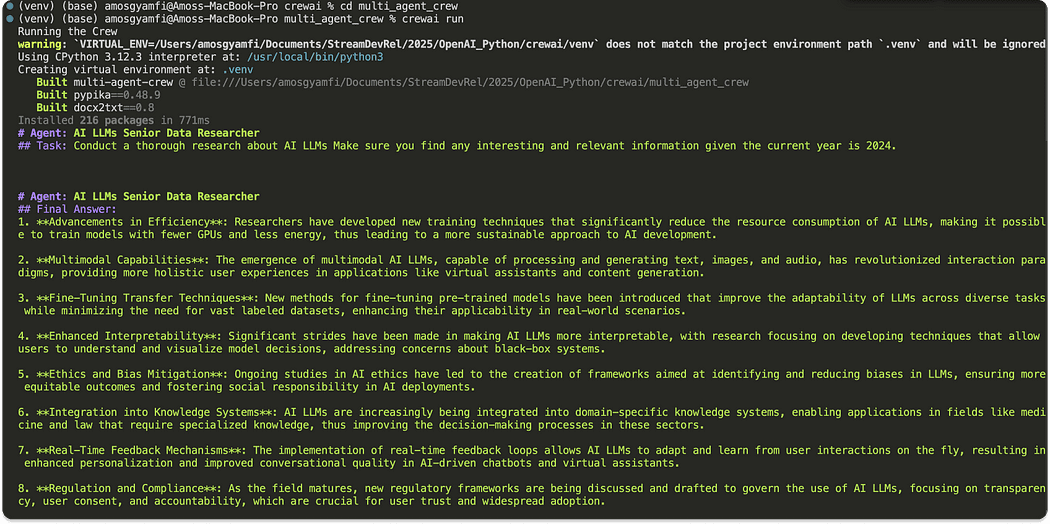

3 CrewAI

CrewAI is one of the most popular Agent-based AI frameworks for quickly building AI Agents and integrating them with the latest LLMs and codebases. Large companies such as Oracle, Deloitte, and Accenture use and trust CrewAI.

Compared with other Agent-based frameworks, CrewAI offers a rich and versatile feature set:

- Extensibility: Supports integration with over 700 applications, including Notion, Zoom, Stripe, Mailchimp, Airtable, and more.

- Tools:
  - Developers can use the CrewAI framework to build Multi-Agent automation systems from scratch.
  - Designers can create fully functional Agents in a no-code environment with its UI Studio and template tools.

- Deployment: You can use your preferred deployment method to quickly move the Agents you develop into production.

- Agent monitoring: Like Phidata, CrewAI provides intuitive dashboards for monitoring Agent progress and performance.

- Built-in training tools: Use CrewAI's built-in training and testing tools to improve Agent performance and efficiency and to ensure the quality of its responses.

First, install CrewAI together with its optional tools package (pip install crewai 'crewai[tools]'), and then verify that the installation succeeded, for example by running pip show crewai.

Once the installation is complete, create a new CrewAI project by running crewai create crew followed by a name for your project.

After running this command, you will be prompted to choose a model provider from a list (e.g., OpenAI, Anthropic, xAI, or Mistral). After selecting a provider, you can also choose a specific model from the list, e.g., gpt-4o-mini.

The following commands can be used to create a Multi-Agent system:

The full CrewAI application is available in the GitHub repository below, from which you can download and run it:

https://github.com/GetStream/stream-tutorial-projects/tree/main/AI/Multi-Agent-AI

After running it, you will see a response result similar to the following:

4 Autogen

Autogen is an open source framework for building Agent systems. With this framework, you can create Multi-Agent collaboration and LLM workflows.

Autogen comes with the following key features:

- Cross-language support: Build Agents in programming languages such as Python and .NET.

- Local Agents: Agents can be run and experimented with locally for greater privacy.

- Asynchronous messaging: Agents communicate with each other through asynchronous messages.

- Scalability: Lets developers build distributed Agent networks that collaborate across organizations.

- Customizability: Its pluggable components let you build a completely customized Agent system.
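Asynchronous messaging means that Agents do not call each other directly. Instead, they put messages on a channel and process incoming messages as they arrive. Below is a minimal asyncio sketch of this idea, in which two coroutines share an asyncio.Queue and use a None sentinel to signal the end of the conversation. It does not use AutoGen's runtime, and requester_agent and weather_agent are hypothetical coroutines with stubbed answers.

```python
import asyncio
from typing import List


async def requester_agent(outbox: asyncio.Queue, cities: List[str]) -> None:
    for city in cities:
        await outbox.put(f"weather? {city}")  # send a request message
    await outbox.put(None)  # sentinel: no more messages


async def weather_agent(inbox: asyncio.Queue, results: List[str]) -> None:
    while True:
        msg = await inbox.get()  # wait until a message arrives
        if msg is None:
            break
        city = msg.split("? ", 1)[1]
        await asyncio.sleep(0)  # yield control, as a real model or tool call would
        results.append(f"{city}: sunny")  # stubbed answer


async def main() -> List[str]:
    queue: asyncio.Queue = asyncio.Queue()
    results: List[str] = []
    # Both Agents run concurrently and only interact through the queue.
    await asyncio.gather(requester_agent(queue, ["New York", "Paris"]), weather_agent(queue, results))
    return results


print(asyncio.run(main()))
```

Because the queue decouples sender and receiver, either side could be moved to another process or machine without changing the other. That decoupling is the property distributed Agent networks build on.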

The following code block builds a simple AI Weather Agent system:

import asyncio
import os

# Import paths follow the stable AutoGen 0.4 package layout
from autogen_agentchat.agents import AssistantAgent
from autogen_agentchat.conditions import TextMentionTermination
from autogen_agentchat.teams import RoundRobinGroupChat
from autogen_agentchat.ui import Console
from autogen_ext.models.openai import OpenAIChatCompletionClient
from dotenv import load_dotenv

load_dotenv()

# Define a tool
async def get_weather(city: str) -> str:
    return f"The weather in {city} is 73 degrees and Sunny."

async def main() -> None:
    # Define the Agent
    weather_agent = AssistantAgent(
        name="weather_agent",
        model_client=OpenAIChatCompletionClient(
            model="gpt-4o-mini",
            api_key=os.getenv("OPENAI_API_KEY"),
        ),
        tools=[get_weather],
    )

    # Define the termination condition
    termination = TextMentionTermination("TERMINATE")

    # Define the Agent team
    agent_team = RoundRobinGroupChat([weather_agent], termination_condition=termination)

    # Run the team and stream its messages to the console
    stream = agent_team.run_stream(task="What is the weather in New York?")
    await Console(stream)

asyncio.run(main())

How the code above works:

- Tool definition: get_weather is a sample tool function that returns weather information for a city (the answer is hard-coded).

- Agent definition: The Agent is defined with AssistantAgent, and its model client is set to OpenAI's GPT-4o-mini. The API key is loaded from the .env file.

- Termination condition: TextMentionTermination defines a condition that ends the task as soon as "TERMINATE" is mentioned in a message.

- Agent team: RoundRobinGroupChat creates a team of Agents that take turns in round-robin order.
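The interplay between the round-robin team and the text-mention termination condition can be sketched in plain Python. The Agents take turns in a fixed order, and the loop stops as soon as a reply contains the stop word, or after max_turns as a safety cap. This sketch is not AutoGen's implementation. round_robin_chat, ChatAgent and the stubbed weather_agent are hypothetical, and the stub says TERMINATE on its second turn.

```python
from typing import Callable, List, Tuple

# (name, reply function) pair; the reply function stands in for an LLM call.
ChatAgent = Tuple[str, Callable[[List[str]], str]]


def round_robin_chat(agents: List[ChatAgent], task: str, stop_word: str = "TERMINATE",
                     max_turns: int = 10) -> List[str]:
    history = [f"user: {task}"]
    for turn in range(max_turns):
        name, reply_fn = agents[turn % len(agents)]  # take turns in a fixed order
        reply = reply_fn(history)
        history.append(f"{name}: {reply}")
        if stop_word in reply:  # text-mention termination
            break
    return history


def weather_agent(history: List[str]) -> str:
    # Stubbed behaviour: answer once, then signal that the task is done.
    if any(line.startswith("weather_agent:") for line in history):
        return "TERMINATE"
    return "The weather in New York is 73 degrees and Sunny."


for line in round_robin_chat([("weather_agent", weather_agent)], "What is the weather in New York?"):
    print(line)
```

With a single Agent, round-robin simply means the same Agent is asked again each turn. This is why a termination condition (or a turn limit) is essential.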

After running this code, the console will display output similar to the following:

5 LangGraph

LangGraph is a node-based AI framework designed for building Multi-Agent systems that handle complex tasks. As part of the LangChain ecosystem, it is a graph-structured Agent framework: users build linear, hierarchical, and sequential workflows out of nodes and edges. Nodes represent the Agent's actions, edges represent transitions between actions, and state is another important component of a LangGraph Agent.
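The node/edge/state model can be illustrated with a small, framework-free graph runner. It is not LangGraph's API. Nodes are functions that read the state and return partial updates. Edges are either a fixed next node or a function of the state (a conditional edge), and a conditional edge is what makes loops possible. The draft/review nodes, the approval rule, and run_graph are hypothetical.

```python
from typing import Callable, Dict, Union

State = dict
END = "__end__"


# Nodes: the Agent's actions. Each returns only the keys it updates.
def draft(state: State) -> State:
    return {"draft": f"draft v{state['revisions'] + 1}", "revisions": state["revisions"] + 1}


def review(state: State) -> State:
    return {"approved": state["revisions"] >= 2}  # hypothetical rule: approve the 2nd draft


NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}

# Edges: a fixed next node, or a function of the state (conditional edge, allows loops).
EDGES: Dict[str, Union[str, Callable[[State], str]]] = {
    "draft": "review",
    "review": lambda s: END if s["approved"] else "draft",
}


def run_graph(start: str, state: State, max_steps: int = 25) -> State:
    node, steps = start, 0
    while node != END:
        if steps == max_steps:
            raise RuntimeError("graph did not reach END within max_steps")
        state = {**state, **NODES[node](state)}  # merge the node's update into the state
        edge = EDGES[node]
        node = edge(state) if callable(edge) else edge
        steps += 1
    return state


print(run_graph("draft", {"revisions": 0}))
```

Here the review node rejects the first draft, so the conditional edge sends control back to draft once before the graph ends.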

LangGraph Benefits and Key Features

- Free and open source: LangGraph is a free library released under the MIT license.

- Streaming support: Provides token-by-token streaming to show the Agent's intermediate steps and reasoning.

- Deployment options: Supports multiple options for large-scale deployment, and Agent performance can be monitored through LangSmith. The Enterprise option allows LangGraph to be deployed entirely on a user's own infrastructure.

- Enterprise adoption: Replit uses LangGraph to power its AI Coding Agent, which demonstrates LangGraph's suitability for enterprise use.

- High performance: Adds no extra code overhead when handling complex Agent workflows.

- Cycles and controllability: Makes it easy to define Multi-Agent workflows that contain loops, with full control over the Agent's state.

- Persistence: LangGraph automatically saves the Agent's state after each step in the graph, and it also supports pausing and resuming graph execution at any point.
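Persistence with pause and resume can be sketched as "save a checkpoint after every step, and on restart continue from the latest one". The sketch below is a framework-free illustration, not LangGraph's checkpointer API. run_with_checkpoints, the step names, and the stop_after pause hook are hypothetical, and it assumes step names are unique.

```python
import copy
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[dict], dict]]


def run_with_checkpoints(steps: List[Step], state: dict, checkpoints: List[dict],
                         stop_after: str = "") -> dict:
    """Run steps in order, saving a checkpoint after each; optionally pause after a step."""
    done = {cp["step"] for cp in checkpoints}
    if checkpoints:
        state = copy.deepcopy(checkpoints[-1]["state"])  # resume from the latest checkpoint
    for name, fn in steps:
        if name in done:
            continue  # already executed before the pause
        state = {**state, **fn(state)}
        checkpoints.append({"step": name, "state": copy.deepcopy(state)})
        if name == stop_after:
            break  # "pause", e.g. to wait for human review
    return state


steps: List[Step] = [
    ("fetch", lambda s: {"data": [3, 1, 2]}),
    ("sort", lambda s: {"data": sorted(s["data"])}),
    ("report", lambda s: {"report": f"max={s['data'][-1]}"}),
]
saved: List[dict] = []
partial = run_with_checkpoints(steps, {}, saved, stop_after="sort")  # pauses after "sort"
final = run_with_checkpoints(steps, {}, saved)                       # resumes at "report"
print(partial, final)
```

Copying the state into each checkpoint keeps earlier snapshots intact, so you could also resume from an older checkpoint rather than only the latest one.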

Sample code is available in the LangGraph documentation:

https://langchain-ai.github.io/langgraph/

© Copyright notice

All rights to this article belong to AI Sharing Circle. Please do not reproduce it without permission.
