2024 RAG Roundup: 100+ RAG Application Strategies

Looking back on 2024, large models changed by the day and hundreds of agents competed for attention. As a key part of AI applications, RAG also saw many contenders rise at once. At the start of the year Modular RAG kept gaining momentum and GraphRAG took the spotlight; by mid-year open-source tools were flourishing and knowledge graphs opened up new opportunities; and by year's end graph understanding and multimodal RAG had set off in new directions. One approach after another took the stage, novel techniques kept appearing, and the list goes on and on.

I have selected the representative RAG systems and papers of 2024 (with AI-generated notes, sources, and summaries), and at the end of the article I have included RAG surveys and benchmarking material. I hope this 16,000-word article helps you get up to speed on RAG quickly.

The full text covers 72 entries, arranged month by month; I have boldly dubbed them the "72 Styles of RAG" and present them here for you.

Remarks:

All content in this article is hosted in the open-source repository Awesome-RAG; PRs that fill in gaps are welcome.

GitHub address: https://github.com/awesome-rag/awesome-rag

(01) GraphReader [Graph Expert]

Graph expert: Like a tutor who is good at drawing mind maps, it turns long text into a clear knowledge network, so the AI can find the key points an answer needs by exploring along the map. This effectively overcomes the problem of "getting lost" in long text.

- Time: 01.20

- Paper: GraphReader: Building Graph-based Agent to Enhance Long-Context Abilities of Large Language Models

GraphReader is a graph-based agent system that handles long texts by structuring them into a graph and having an agent explore that graph autonomously. On receiving a question, the agent first analyzes it step by step and draws up a rational plan. It then calls a set of predefined functions to read node contents and neighbors, exploring the graph from coarse to fine. Throughout the exploration, the agent keeps recording new insights and reflecting on the current situation to optimize the process, until it has gathered enough information to generate an answer.

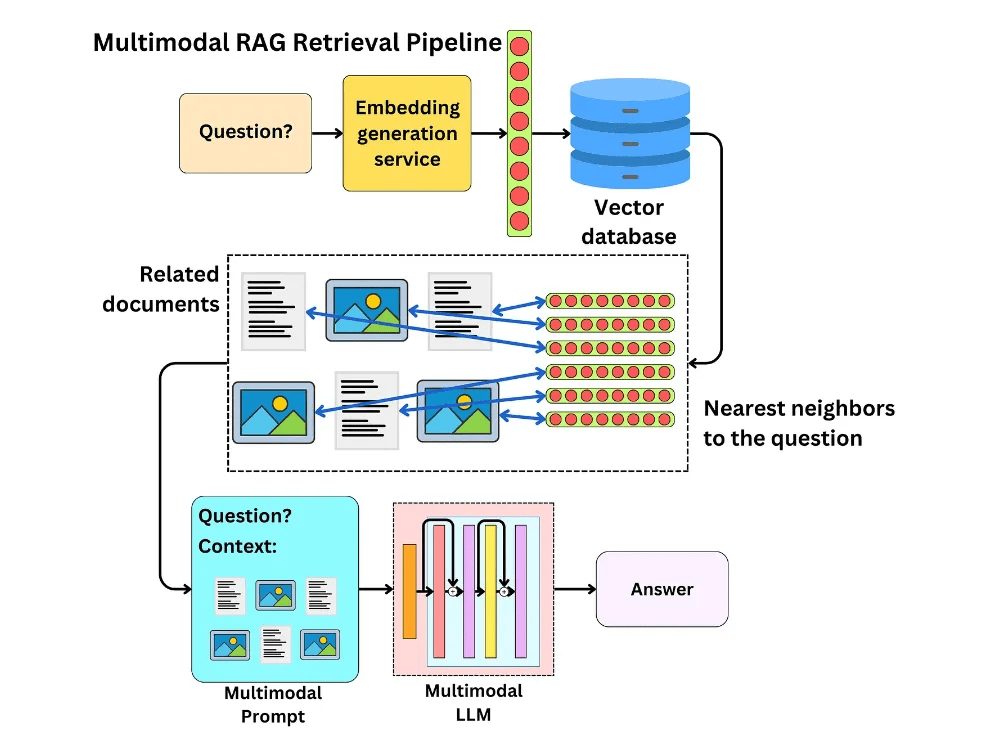

(02) MM-RAG [Multi-talented]

Multi-talented: Like an all-rounder who is proficient in vision, hearing, and language at the same time, it not only understands different forms of information but also switches and correlates between them freely. By understanding these kinds of information together, it can deliver smarter, more natural services in recommendation, assistants, media, and other fields.

This work presents developments in multimodal machine learning, including contrastive learning, any-to-any search enabled by multimodal embeddings, multimodal retrieval-augmented generation (MM-RAG), and how to use vector databases to build multimodal production systems. It also explores future trends in multimodal AI, highlighting promising applications in recommender systems, virtual assistants, media, and e-commerce.

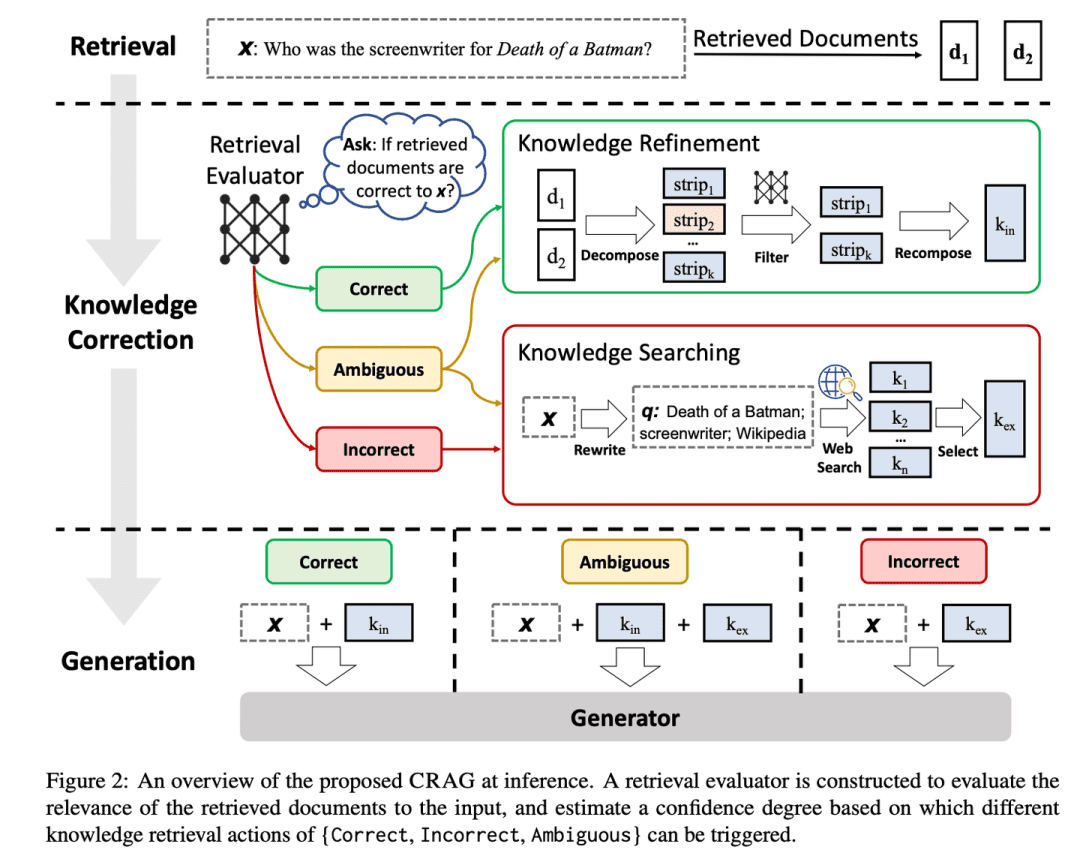

(03) CRAG [Self-Correcting]

Self-correcting: Like a seasoned editor, it first screens the initial information quickly and simply, then supplements it through web search, and finally breaks the material down and reassembles it so that the content it presents is both accurate and reliable. It is like fitting RAG with a quality-control system that makes its output more trustworthy.

- Time: 01.29

- Paper: Corrective Retrieval Augmented Generation

- Project: https://github.com/HuskyInSalt/CRAG

CRAG improves the quality of retrieved documents by designing a lightweight retrieval evaluator and bringing in large-scale web search, and it further refines the retrieved information with a decompose-then-recompose algorithm to improve the accuracy and reliability of the generated text. CRAG is a useful complement to, and improvement on, existing RAG techniques: by self-correcting its retrieval results, it makes generation more robust.
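The evaluator's role can be illustrated with a minimal Python sketch. CRAG's retrieval evaluator scores each retrieved document and triggers one of three actions: Correct (refine the retrieved documents), Incorrect (discard them and fall back to web search), or Ambiguous (combine both). The threshold values and the `refine` and `web_search` callables below are hypothetical placeholders, not the paper's trained evaluator or any real search API.

```python
from enum import Enum
from typing import Callable, List, Sequence


class Action(Enum):
    CORRECT = "correct"      # at least one document is clearly relevant
    INCORRECT = "incorrect"  # every document is clearly irrelevant
    AMBIGUOUS = "ambiguous"  # somewhere in between


def crag_action(scores: Sequence[float], upper: float = 0.7, lower: float = 0.3) -> Action:
    """Map per-document relevance scores to an action (thresholds are illustrative)."""
    if any(s > upper for s in scores):
        return Action.CORRECT
    # all() on an empty sequence is True, so "no documents" also falls back to web search
    if all(s < lower for s in scores):
        return Action.INCORRECT
    return Action.AMBIGUOUS


def corrective_context(
    query: str,
    docs: List[str],
    scores: Sequence[float],
    refine: Callable[[str, List[str]], List[str]],  # hypothetical decompose-then-recompose step
    web_search: Callable[[str], List[str]],         # hypothetical search client
) -> List[str]:
    """Build the generation context according to the evaluator's action."""
    action = crag_action(scores)
    if action is Action.CORRECT:
        return refine(query, docs)
    if action is Action.INCORRECT:
        return refine(query, web_search(query))
    return refine(query, docs) + refine(query, web_search(query))
```

For example, `crag_action([0.9, 0.1])` returns `Action.CORRECT`, while `crag_action([0.1, 0.2])` returns `Action.INCORRECT`.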

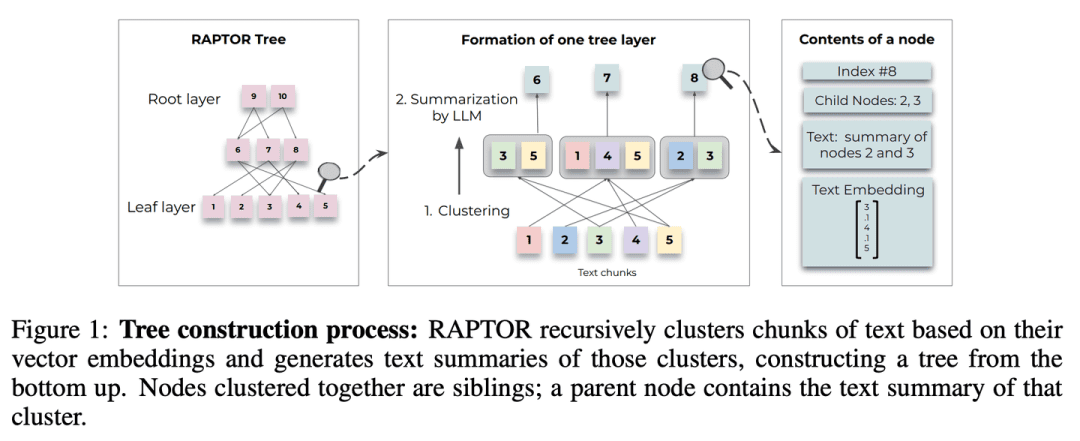

(04) RAPTOR [Hierarchical Summarization]

Hierarchical summarization: Like a well-organized librarian, it organizes a document's content into a tree from the bottom up, so retrieval can move flexibly between levels and see both the overall summary and the fine details.

- Time: 01.31

- Paper: RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval

- Project: https://github.com/parthsarthi03/raptor

RAPTOR (Recursive Abstractive Processing for Tree-Organized Retrieval) introduces a new method that recursively embeds, clusters, and summarizes chunks of text, building a tree with different levels of summarization from the bottom up. At inference time, RAPTOR retrieves from this tree, integrating information across long documents at different levels of abstraction.
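A minimal Python sketch of the bottom-up tree construction follows. To stay self-contained it makes two loud simplifications: adjacent nodes are grouped in fixed-size batches instead of RAPTOR's embedding-based clustering, and `summarize` is a caller-supplied function standing in for the LLM summarizer. Retrieval then ranks every node from every level together (the "collapsed tree" view), with `score` as a hypothetical relevance function.

```python
from typing import Callable, List


def build_tree(chunks: List[str], summarize: Callable[[List[str]], str], group_size: int = 2) -> List[List[str]]:
    """Return tree levels: levels[0] are the leaf chunks, levels[-1] holds the single root summary."""
    if group_size < 2:
        raise ValueError("group_size must be >= 2 so that each level shrinks")
    if not chunks:
        return []
    levels = [list(chunks)]
    while len(levels[-1]) > 1:
        current = levels[-1]
        # Simplification: fixed-size groups of neighbours stand in for embedding-based clustering.
        groups = [current[i:i + group_size] for i in range(0, len(current), group_size)]
        levels.append([summarize(group) for group in groups])
    return levels


def retrieve(levels: List[List[str]], score: Callable[[str], float], k: int = 3) -> List[str]:
    """Collapsed-tree retrieval: rank nodes from all levels together and keep the top k."""
    nodes = [node for level in levels for node in level]
    return sorted(nodes, key=score, reverse=True)[:k]
```

With eight chunks and `group_size=2`, the levels contain 8, 4, 2, and 1 nodes, so a query can match either a leaf detail or a higher-level summary.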

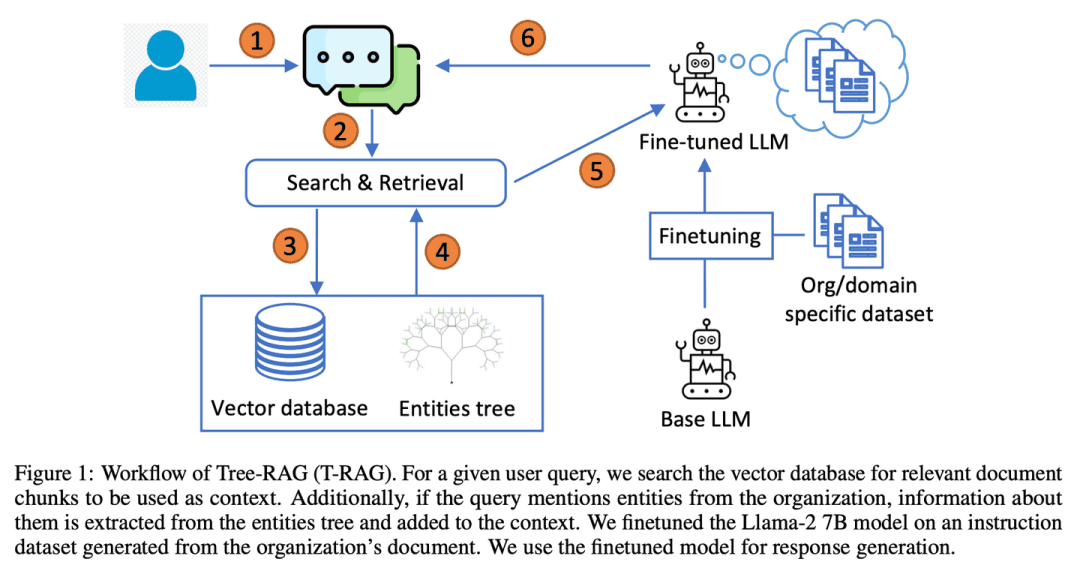

(05) T-RAG [Private Consultant]

Private consultant: Like an in-house consultant who knows the organization's structure, it is adept at organizing information in a tree structure, delivering localized service efficiently and cost-effectively while protecting privacy.

- Paper: T-RAG: Lessons from the LLM Trenches

T-RAG (Tree-RAG) combines RAG with a fine-tuned open-source LLM and uses a tree structure to represent the entity hierarchy within an organization, which augments the context. By relying on locally hosted open-source models, it addresses data-privacy concerns along with inference latency, token usage costs, and regional and geographic availability.
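The idea of turning the organization's entity tree into extra context can be sketched in a few lines of Python. Everything here is hypothetical: the parent-to-children dict format, the substring-based entity detection (a real system would use NER), and the wording of the generated sentences are illustrative choices, not T-RAG's actual implementation.

```python
from typing import Dict, List


def parent_map(tree: Dict[str, List[str]]) -> Dict[str, str]:
    """Invert a parent -> children mapping into child -> parent."""
    return {child: parent for parent, children in tree.items() for child in children}


def describe(entity: str, tree: Dict[str, List[str]], parents: Dict[str, str]) -> str:
    """Render an entity's position in the hierarchy as a sentence for the prompt."""
    if entity not in tree and entity not in parents:
        raise KeyError(entity)
    path = [entity]
    while path[-1] in parents:  # walk up to the root
        path.append(parents[path[-1]])
    text = f"{entity} sits in the hierarchy: {' > '.join(reversed(path))}."
    children = tree.get(entity, [])
    if children:
        text += f" It contains: {', '.join(children)}."
    return text


def tree_context(query: str, tree: Dict[str, List[str]]) -> List[str]:
    """Collect hierarchy descriptions for every known entity mentioned in the query."""
    parents = parent_map(tree)
    known = sorted(set(tree) | set(parents))
    # Hypothetical entity detection: plain substring matching against known names.
    return [describe(name, tree, parents) for name in known if name in query]
```

The returned sentences would be appended to the retrieved documents before the prompt is sent to the locally hosted model.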

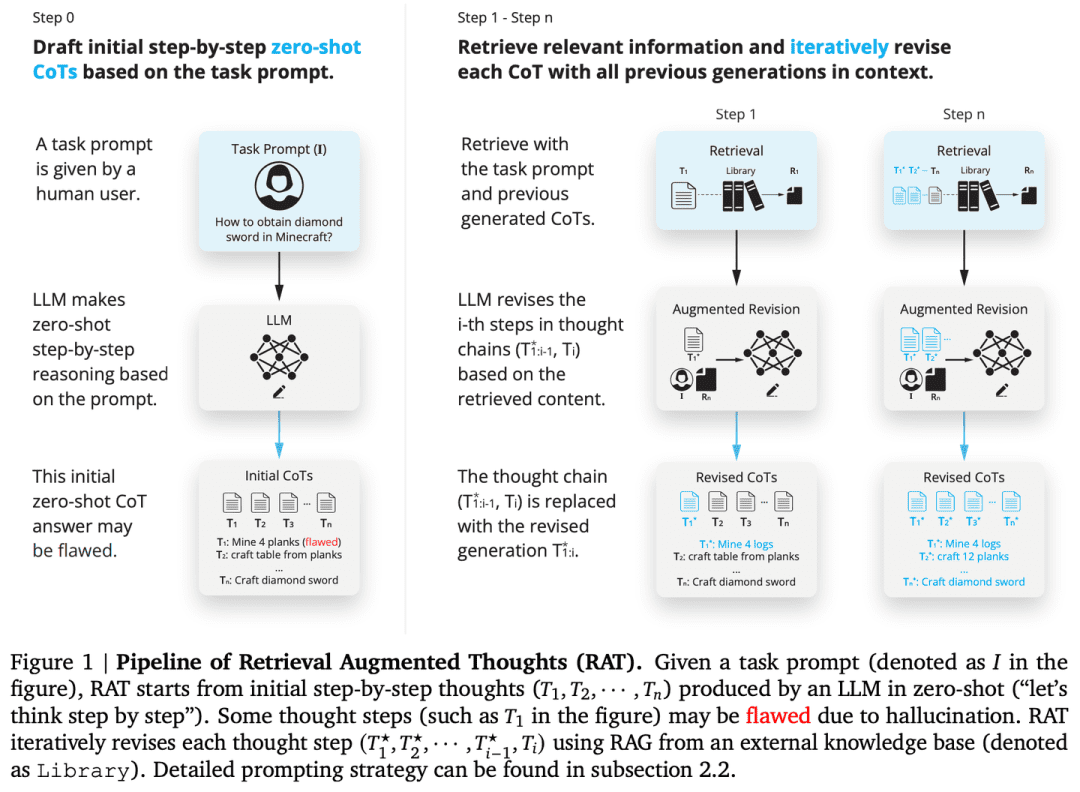

(06) RAT [Thinker]

Thinker: Like a reflective tutor, it does not reach a conclusion in one go. It starts from initial ideas and then uses relevant retrieved information to keep reviewing and refining each step of the reasoning, making the chain of thought tighter and more reliable.

- Paper: RAT: Retrieval Augmented Thoughts Elicit Context-Aware Reasoning in Long-Horizon Generation

- Project: https://github.com/CraftJarvis/RAT

RAT (Retrieval-Augmented Thoughts) first generates an initial zero-shot chain of thought (CoT), then revises each thought step one at a time using information retrieved with the task query and the current and past thought steps. This significantly improves performance on a wide variety of long-horizon generation tasks.
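The draft-then-revise loop can be outlined as a short Python sketch. The three callables (`draft_cot`, `retrieve`, `revise`) are hypothetical stand-ins for LLM and retriever calls, and assembling the query from the task, the already-revised steps, and the current draft step is a simplified reading of the description above rather than RAT's exact prompt.

```python
from typing import Callable, List


def retrieval_augmented_thoughts(
    task: str,
    draft_cot: Callable[[str], List[str]],                    # zero-shot CoT drafter
    retrieve: Callable[[str], List[str]],                     # any retriever
    revise: Callable[[str, List[str], str, List[str]], str],  # LLM reviser
) -> List[str]:
    """Revise each drafted thought step in order, using retrieval conditioned on the steps so far."""
    drafted = draft_cot(task)
    revised: List[str] = []
    for step in drafted:
        # The query combines the task, the already-revised steps, and the current draft step.
        query = "\n".join([task, *revised, step])
        evidence = retrieve(query)
        revised.append(revise(task, revised, step, evidence))
    return revised
```

Because each query includes the revised earlier steps, corrections made early in the chain inform the retrieval for later steps.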

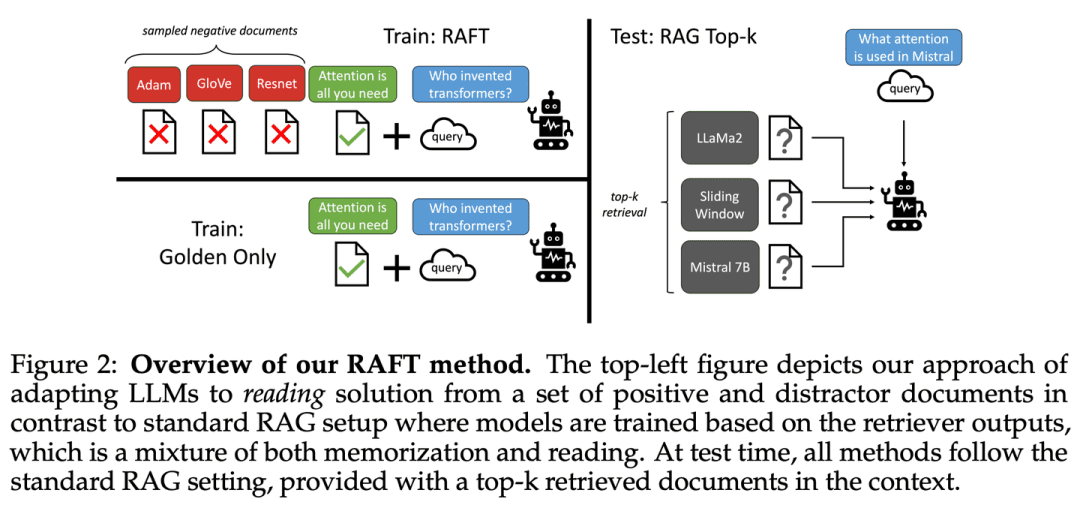

(07) RAFT [Open-Book Exam Ace]

Open-book exam ace: Like a strong exam candidate, it not only finds the right references but also quotes the key passages accurately and explains its reasoning clearly, so the answer is both well-founded and sensible.

- Paper: RAFT: Adapting Language Model to Domain Specific RAG

RAFT aims to improve a model's ability to answer questions in domain-specific "open-book" settings. It trains the model to ignore irrelevant (distractor) documents and to answer by quoting verbatim the right sequences from the relevant documents. Combined with chain-of-thought-style responses, this significantly improves the model's reasoning ability.
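The training-data recipe can be illustrated with a small Python sketch: each example mixes distractor documents with, in only a fraction of cases, the "oracle" document that contains the answer, and the target is a chain-of-thought answer that quotes the relevant passage. The parameter names, default values, and prompt layout below are illustrative assumptions rather than RAFT's exact settings.

```python
import random
from typing import Dict, List, Optional


def make_raft_example(
    question: str,
    oracle_doc: str,
    distractor_pool: List[str],
    cot_answer: str,
    num_distractors: int = 3,
    p_oracle: float = 0.8,
    rng: Optional[random.Random] = None,
) -> Dict[str, str]:
    """Build one fine-tuning example: distractors plus (with probability p_oracle) the oracle doc."""
    rng = rng or random.Random()
    docs = rng.sample(distractor_pool, num_distractors)
    if rng.random() < p_oracle:
        docs.append(oracle_doc)
    rng.shuffle(docs)  # the oracle's position should carry no signal
    context = "\n\n".join(f"[Doc {i + 1}] {doc}" for i, doc in enumerate(docs))
    # The target is a reasoning chain that quotes the relevant text, then states the answer.
    return {"prompt": f"{context}\n\nQuestion: {question}", "target": cot_answer}
```

Leaving the oracle out of some examples pushes the model to rely on what it has learned about the domain instead of always expecting the answer in the context.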

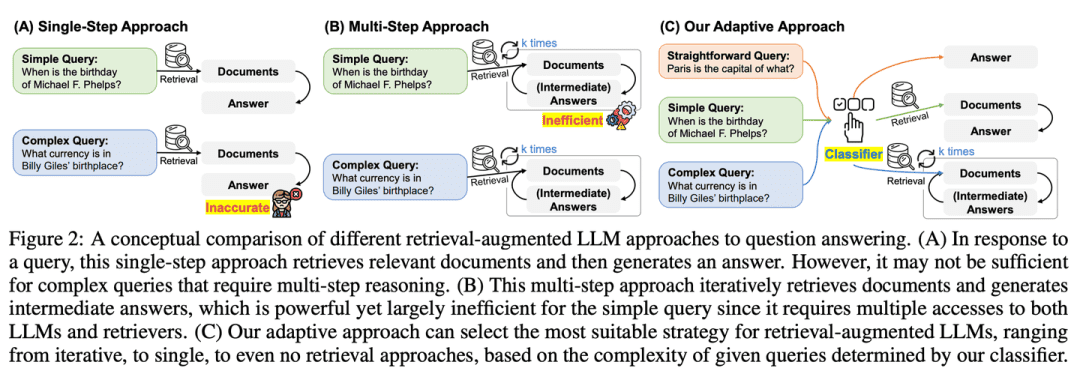

(08) Adaptive-RAG [Teaching According to Aptitude]

Teaching according to aptitude: Faced with questions of different difficulty, it chooses the most suitable way to answer. Simple questions are answered directly, while complex ones get more retrieved information or step-by-step reasoning, just like an experienced teacher who adjusts the teaching method to each student's specific problems.

- Paper: Adaptive-RAG: Learning to Adapt Retrieval-Augmented Large Language Models through Question Complexity

- Project: https://github.com/starsuzi/Adaptive-RAG

Adaptive-RAG dynamically selects the most suitable retrieval-augmentation strategy for an LLM, from the simplest to the most complex, based on the complexity of the query. The selection is made by a small language-model classifier that predicts query complexity and is trained on automatically collected labels. This yields a balanced strategy that switches seamlessly among iterative retrieval-augmented LLMs, single-step retrieval-augmented LLMs, and no-retrieval approaches across a range of query complexities.
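The routing logic can be sketched in Python. The classifier assigns one of three complexity labels (A: answer without retrieval, B: single-step retrieval, C: multi-step retrieval); here the classifier, the LLM, and the retriever are caller-supplied stubs, and the multi-step loop (appending the intermediate answer to the next query) is a simplified, hypothetical stand-in for an iterative RAG method.

```python
from typing import Callable, List


def adaptive_answer(
    query: str,
    classify: Callable[[str], str],        # small LM classifier -> "A", "B" or "C"
    llm: Callable[[str, List[str]], str],  # answer given query and context
    retrieve: Callable[[str], List[str]],
    max_steps: int = 3,
) -> str:
    label = classify(query)
    if label == "A":  # simple: answer from parametric knowledge
        return llm(query, [])
    if label == "B":  # moderate: one round of retrieval
        return llm(query, retrieve(query))
    if label == "C":  # complex: alternate retrieval and reasoning
        context: List[str] = []
        next_query = query
        for _ in range(max_steps):
            context.extend(retrieve(next_query))
            next_query = f"{query} {llm(query, context)}"
        return llm(query, context)
    raise ValueError(f"unknown complexity label: {label!r}")
```

The cost saving comes from the first two branches: easy questions never pay for the multi-step loop.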

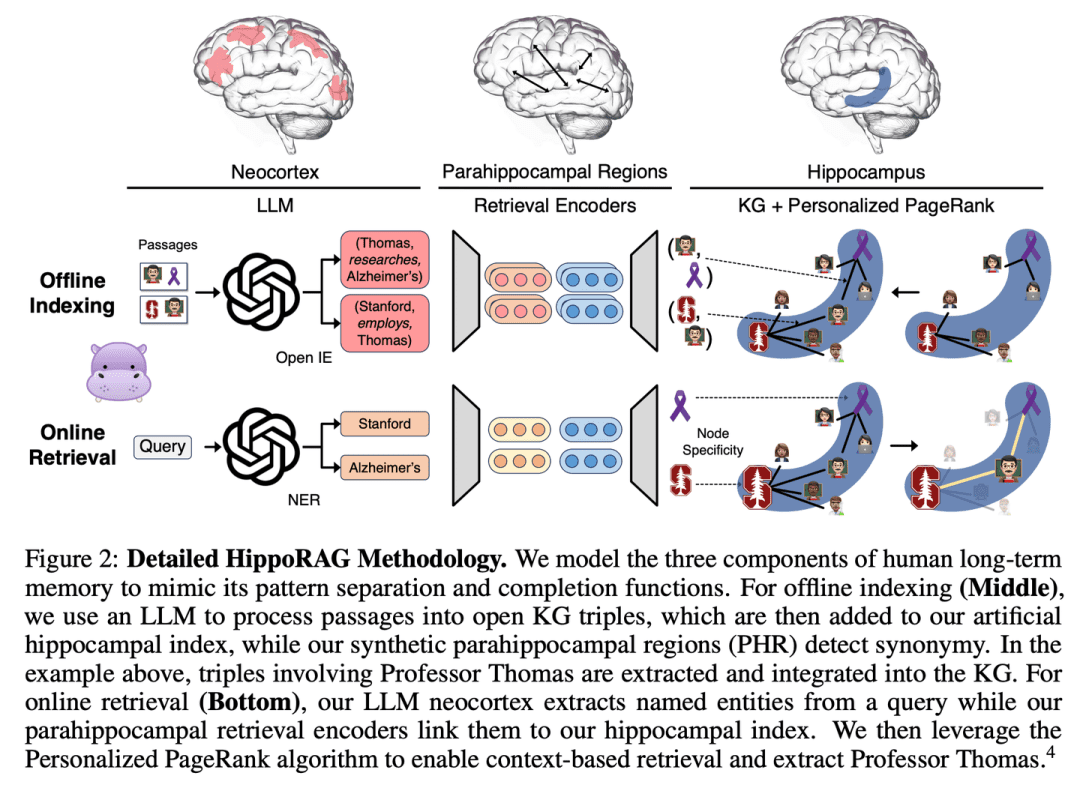

(09) HippoRAG [Hippocampus]

Hippocampus: Like the hippocampus in the human brain, it skillfully weaves old and new knowledge into one web. Rather than simply piling up information, it lets each new piece of knowledge find its most fitting place.

- Paper: HippoRAG: Neurobiologically Inspired Long-Term Memory for Large Language Models

- Project: https://github.com/OSU-NLP-Group/HippoRAG

HippoRAG is a novel retrieval framework inspired by the hippocampal indexing theory of human long-term memory, aiming at deeper and more efficient integration of knowledge from new experiences. HippoRAG orchestrates LLMs, knowledge graphs, and the Personalized PageRank algorithm to mimic the distinct roles of the neocortex and hippocampus in human memory.
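Personalized PageRank (PPR) is the component that performs multi-hop "association": entities detected in the query become the seed (reset) nodes, and PPR spreads probability mass through the knowledge graph. The sketch below is a plain power-iteration PPR in Python; the example graph, damping factor, and passage-scoring rule (summing the scores of the entities a passage mentions) are simplifying assumptions, not HippoRAG's exact configuration.

```python
from typing import Dict, Iterable, List, Set


def personalized_pagerank(
    graph: Dict[str, List[str]], seeds: Set[str], damping: float = 0.85, iters: int = 50
) -> Dict[str, float]:
    """Power-iteration PPR where random jumps always return to the seed nodes."""
    nodes = set(graph) | {v for nbrs in graph.values() for v in nbrs}
    if not seeds or not seeds <= nodes:
        raise ValueError("seeds must be a non-empty subset of the graph's nodes")
    reset = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    rank = dict(reset)
    for _ in range(iters):
        nxt = {n: (1 - damping) * reset[n] for n in nodes}
        for u in nodes:
            nbrs = graph.get(u, [])
            if nbrs:
                share = damping * rank[u] / len(nbrs)
                for v in nbrs:
                    nxt[v] += share
            else:
                # Dangling node: send its mass back to the seeds so the total stays 1.
                for s in seeds:
                    nxt[s] += damping * rank[u] * reset[s]
        rank = nxt
    return rank


def score_passages(rank: Dict[str, float], passage_nodes: Dict[str, Iterable[str]]) -> List[str]:
    """Rank passages by the summed PPR score of the entities they mention (simplified)."""
    totals = {p: sum(rank.get(n, 0.0) for n in ents) for p, ents in passage_nodes.items()}
    return sorted(totals, key=totals.get, reverse=True)
```

Nodes two or three hops from a query entity still receive mass, which is how a passage that never mentions the query terms can surface.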

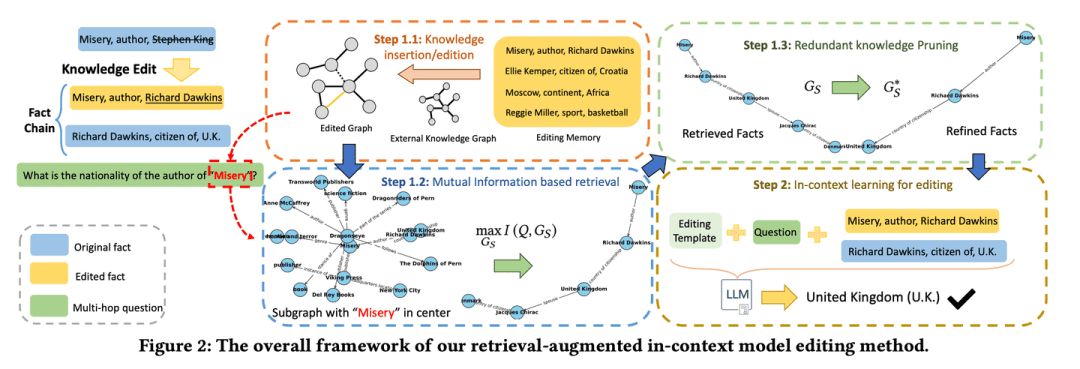

(10) RAE [Smart Editor]

Smart editor: Like a meticulous news editor, it not only digs deep into the relevant facts but also uses chained reasoning to uncover key information that is easily overlooked, and it knows how to cut redundancy. This keeps the final information both accurate and concise and avoids "saying a lot without being reliable".

- Paper: Retrieval-enhanced Knowledge Editing in Language Models for Multi-Hop Question Answering

- Project: https://github.com/sycny/RAE

RAE (a retrieval-augmented model-editing framework for multi-hop question answering) first retrieves edited facts and then refines the language model's answers through in-context learning. Its retrieval approach, based on mutual-information maximization, leverages the reasoning ability of LLMs to identify chains of facts that traditional similarity-based search may miss. The framework also includes a pruning strategy that removes redundant information from the retrieved facts, which improves editing accuracy and mitigates hallucination.

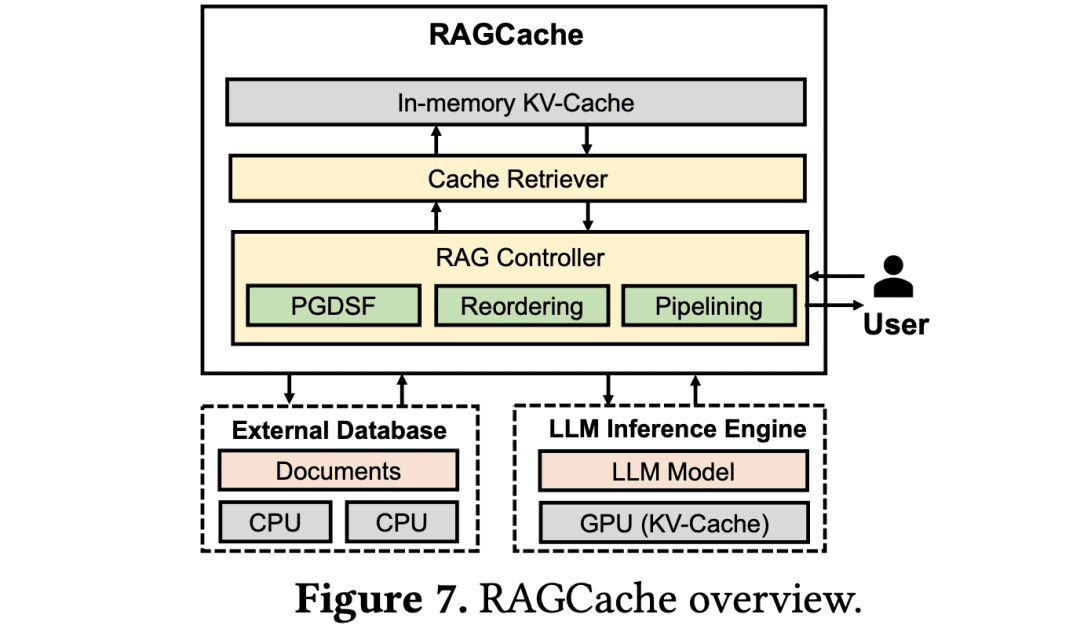

(11) RAGCache [Warehouse Keeper]

Warehouse keeper: Like a large logistics center, it puts frequently used knowledge on the shelves that are easiest to reach, maximizing picking efficiency by keeping frequently used packages near the door and rarely used ones in the back bins.

- Paper: RAGCache: Efficient Knowledge Caching for Retrieval-Augmented Generation

RAGCache is a novel multi-level dynamic caching system tailored for RAG. It organizes the intermediate states of retrieved knowledge in a knowledge tree and caches them across the GPU and host memory hierarchy. RAGCache proposes a replacement policy that accounts for both LLM inference characteristics and RAG retrieval patterns, and it dynamically overlaps the retrieval and inference steps to minimize end-to-end latency.
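The knowledge-tree idea can be illustrated with a small Python sketch. Because the KV (key-value) state of a document depends on the documents placed before it, cached states are keyed here by the ordered tuple of document IDs, and a new request reuses the longest cached prefix. This is a deliberately simplified, single-tier stand-in: it uses plain LRU eviction rather than RAGCache's own replacement policy, and it does not model GPU/host placement or keep parent entries alive while their children are cached.

```python
from collections import OrderedDict
from typing import Any, Hashable, Optional, Sequence, Tuple


class PrefixKVCache:
    """Cache of per-prefix states keyed by ordered document-ID tuples, with LRU eviction."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._store: "OrderedDict[Tuple[Hashable, ...], Any]" = OrderedDict()

    def longest_prefix(self, doc_ids: Sequence[Hashable]) -> Tuple[int, Optional[Any]]:
        """Return (number of documents covered, cached state) for the longest cached prefix."""
        for n in range(len(doc_ids), 0, -1):
            key = tuple(doc_ids[:n])
            if key in self._store:
                self._store.move_to_end(key)  # mark as recently used
                return n, self._store[key]
        return 0, None

    def put(self, doc_ids: Sequence[Hashable], state: Any) -> None:
        key = tuple(doc_ids)
        self._store[key] = state
        self._store.move_to_end(key)
        while len(self._store) > self.capacity:
            self._store.popitem(last=False)  # evict the least recently used entry
```

A request for documents `[a, b, c]` that finds `(a, b)` cached only needs to compute the state for `c`.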

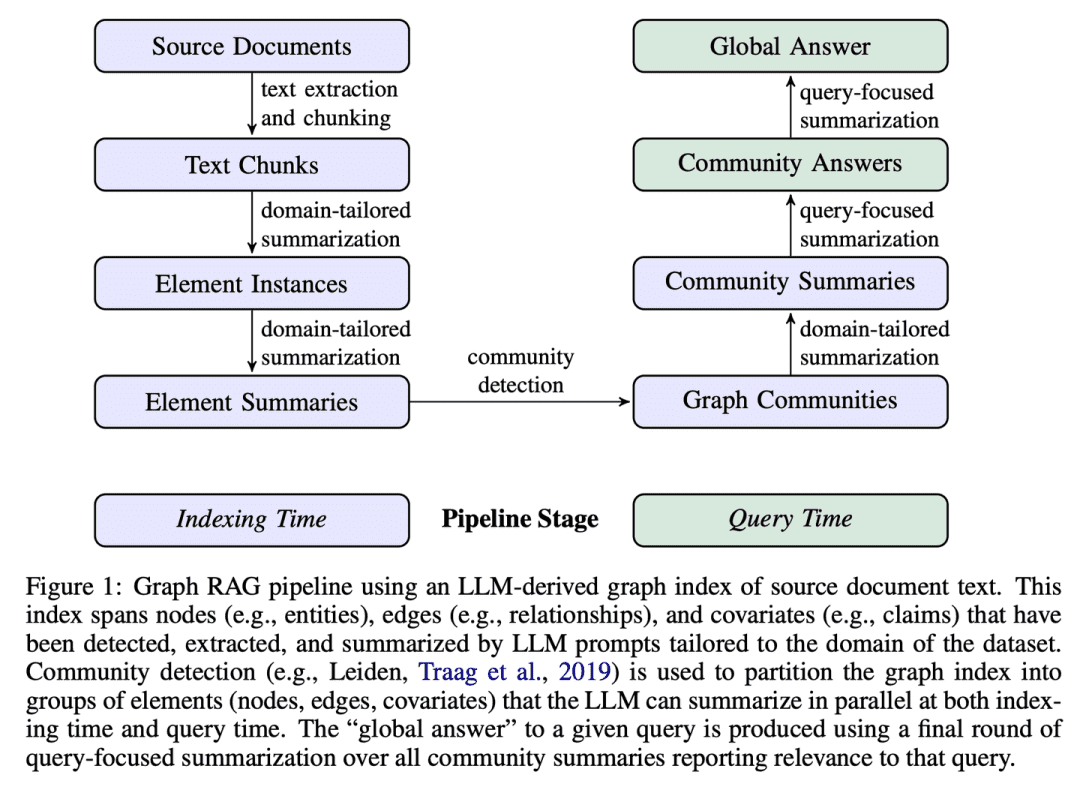

(12) GraphRAG [Community Summary]

Community summaries: First map out the network of residents in a neighborhood, then write a profile of each neighborhood circle. When someone asks for directions, each circle provides clues, which are finally combined into the most complete answer.

- Paper: From Local to Global: A Graph RAG Approach to Query-Focused Summarization

- Project: https://github.com/microsoft/graphrag

GraphRAG builds a graph-based text index in two stages: it first derives an entity knowledge graph from the source documents, then pregenerates community summaries for every group of closely related entities. Given a question, each community summary is used to generate a partial response, and all partial responses are then summarized again into the final response to the user.
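The query-time half of this design is a map-reduce over community summaries, shown in the following Python sketch. `map_fn` and `reduce_fn` are hypothetical stand-ins for LLM calls. In the GraphRAG paper, each partial answer also gets a helpfulness score, zero-score answers are dropped, and the rest are added in descending score order until the context window is full; `max_partials` approximates that token budget here.

```python
from typing import Callable, List, Tuple


def global_answer(
    question: str,
    community_summaries: List[str],
    map_fn: Callable[[str, str], Tuple[str, int]],  # -> (partial answer, helpfulness 0-100)
    reduce_fn: Callable[[str, List[str]], str],     # merges partial answers into one
    max_partials: int = 5,                          # stand-in for a token budget
) -> str:
    partials: List[Tuple[int, str]] = []
    for summary in community_summaries:  # map: one partial answer per community
        answer, score = map_fn(question, summary)
        if score > 0:  # drop answers judged unhelpful
            partials.append((score, answer))
    partials.sort(key=lambda p: p[0], reverse=True)
    best = [answer for _, answer in partials[:max_partials]]
    return reduce_fn(question, best)  # reduce: final global answer
```

Because the map step runs independently per community, it parallelizes naturally; only the reduce step sees all partial answers.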

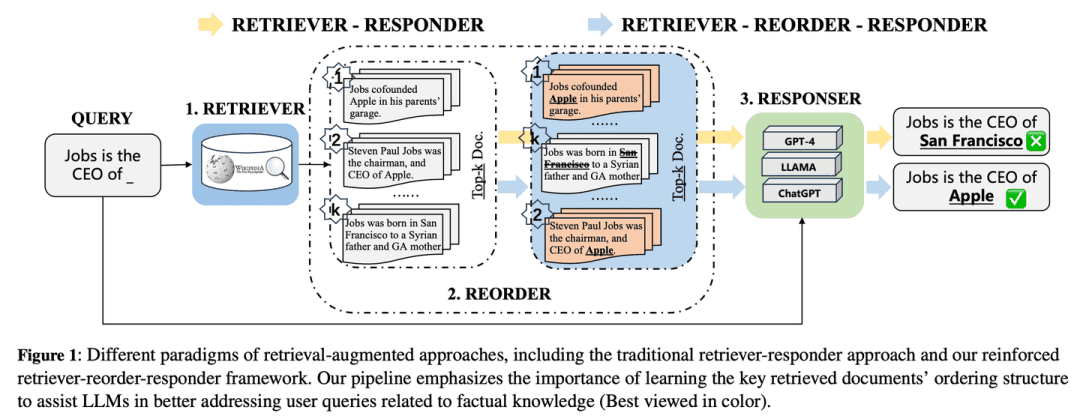

(13) R4 [Master Organizer]

Master arranger: Like a master typesetter, it improves output quality by optimizing the order and presentation of material, making content more organized and focused without changing the core model.

- Paper: R4: Reinforced Retriever-Reorder-Responder for Retrieval-Augmented Large Language Models

R4 (Reinforced Retriever-Reorder-Responder) learns document orderings for retrieval-augmented LLMs, further improving their generation while the LLM's many parameters stay frozen. Driven by the quality of the generated responses, the reordering learning process has two steps: document order adjustment and document representation enhancement. Document order adjustment uses graph attention learning to arrange the retrieved documents into beginning, middle, and end positions so as to maximize the reinforcement reward for response quality. Document representation enhancement then refines, through document-level gradient adversarial learning, the representations of retrieved documents that led to poor-quality responses.
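R4 learns its ordering with graph attention and reinforcement learning, which is beyond a short example; what can be shown compactly is the beginning/middle/end layout it optimizes over. The Python sketch below substitutes a fixed heuristic for R4's learned policy: it puts the highest-scoring documents at both edges of the context and the weakest in the middle, a common mitigation for LLMs attending less to mid-context material. The relevance scores are assumed to come from the retriever.

```python
from typing import List, Sequence


def edges_first_order(docs: Sequence[str], scores: Sequence[float]) -> List[str]:
    """Alternate ranked documents between the front and the back of the context."""
    if len(docs) != len(scores):
        raise ValueError("docs and scores must have the same length")
    ranked = [doc for _, doc in sorted(zip(scores, docs), key=lambda p: p[0], reverse=True)]
    front: List[str] = []
    back: List[str] = []
    for i, doc in enumerate(ranked):
        (front if i % 2 == 0 else back).append(doc)
    # Reverse the back half so the second-best document ends up last.
    return front + back[::-1]
```

With five documents ranked a > b > c > d > e, the result is a, c, e, d, b: the two strongest sit at the edges and the weakest in the middle.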

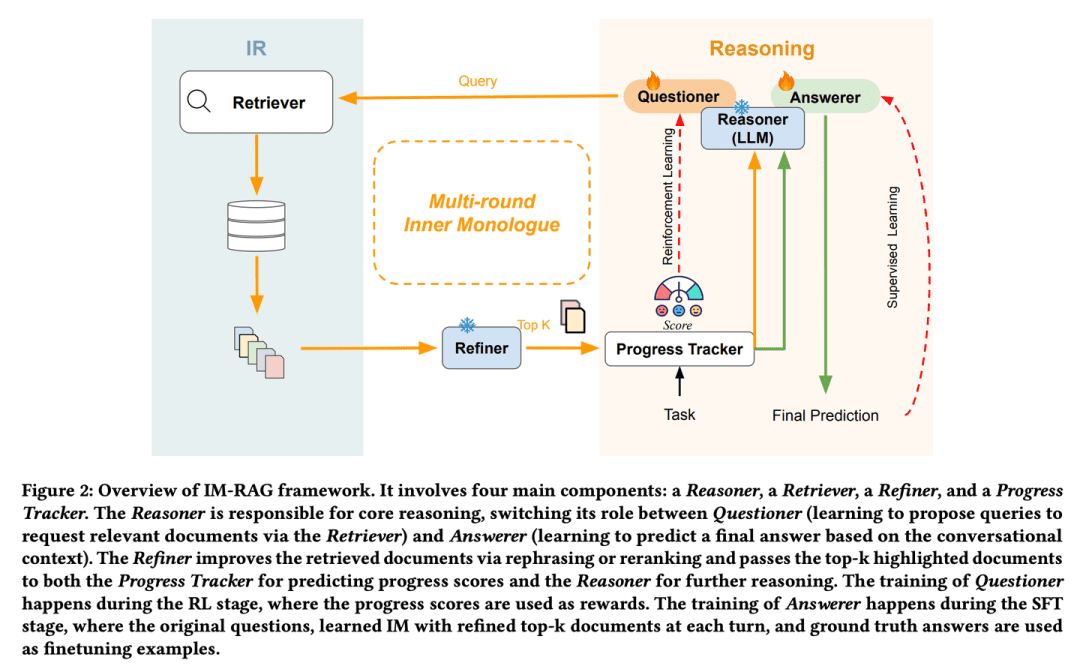

(14) IM-RAG [Talking to Itself]

Self-talk: When it meets a problem, it works through it in its head ("What information do I need to look up?" "Is this information enough?") and refines its answer through continuous inner dialogue. This "monologue" ability resembles a human expert who thinks a problem through step by step to solve complex tasks.

- Paper: IM-RAG: Multi-Round Retrieval-Augmented Generation Through Learning Inner Monologues

IM-RAG connects IR systems with LLMs by learning Inner Monologues, supporting multi-round retrieval-augmented generation. During the inner monologue, the LLM acts as the core reasoning model: it can either issue a query through the retriever to gather more information or give a final answer based on the conversational context. The authors also introduce a refiner that improves the retriever's output, effectively bridging the gap between the reasoner and IR modules of varying capability and facilitating multi-round communication. The whole inner-monologue process is optimized with reinforcement learning (RL), where a progress tracker provides intermediate-step rewards, and the answer prediction is further optimized separately with supervised fine-tuning (SFT).

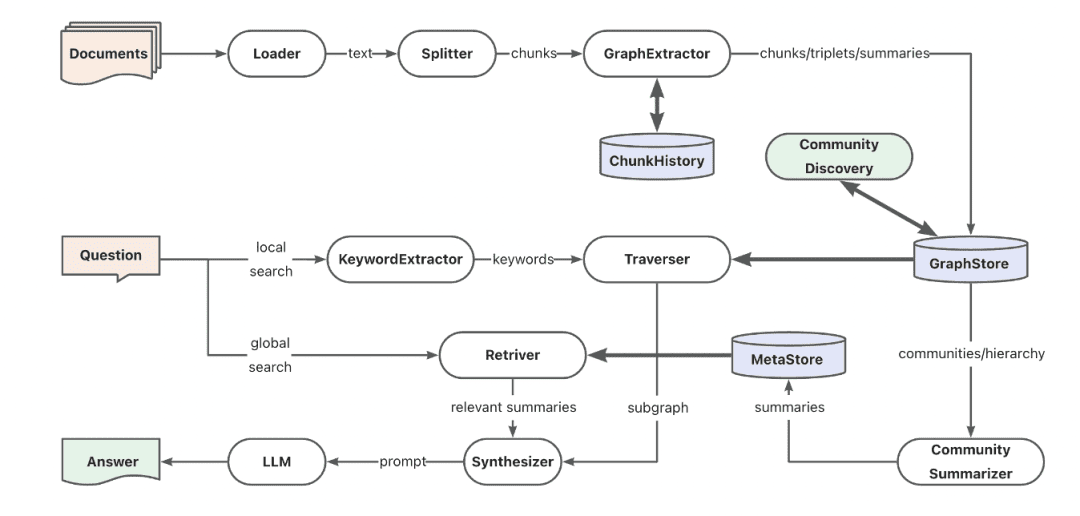

(15) AntGroup-GraphRAG [A Hundred Schools of Thought]

A hundred schools of thought: Bringing together the strengths of many approaches from the industry, it offers several ways to locate information quickly, supporting both precise search and natural-language queries, which makes complex knowledge retrieval both cost-effective and efficient.

- Project: https://github.com/eosphoros-ai/DB-GPT

The Ant Group TuGraph team built an open-source GraphRAG framework on DB-GPT. It is compatible with multiple knowledge-base index backends such as vector, graph, and full-text indexes, and it supports low-cost knowledge extraction, document-structure graphs, graph community summaries, and hybrid retrieval to handle query-focused summarization (QFS) Q&A. It also supports diverse retrieval modes, such as keyword, vector, and natural-language retrieval.

(16) Kotaemon [LEGO]

Lego: A ready-made set of Q&A building blocks that can be used as is or freely taken apart and rebuilt. End users can use it however they like and developers can modify it however they like, without it falling apart.

- Project: https://github.com/Cinnamon/kotaemon

An open-source, clean, and customizable RAG UI for building and customizing your own document Q&A system, designed with the needs of both end users and developers in mind.

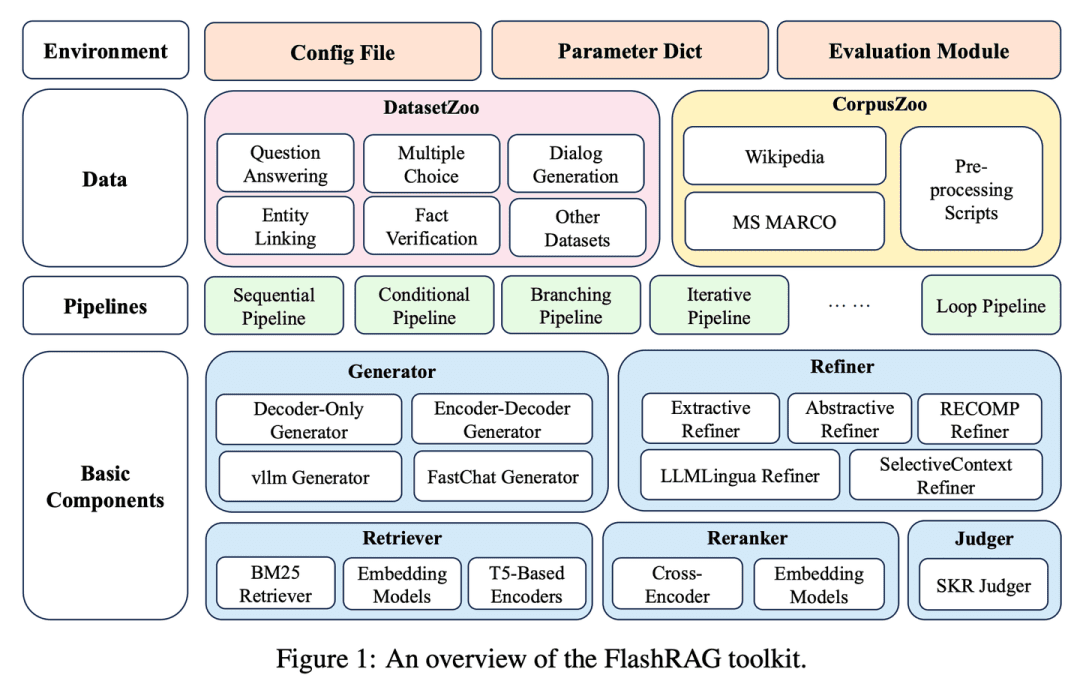

(17) FlashRAG [treasure chest]

Treasure chest: It packs a variety of RAG tools into one toolkit, letting researchers assemble their own retrieval pipelines as easily as picking out building blocks.

- Paper: FlashRAG: A Modular Toolkit for Efficient Retrieval-Augmented Generation Research

- Project: https://github.com/RUC-NLPIR/FlashRAG

FlashRAG is an efficient, modular open-source toolkit designed to help researchers reproduce existing RAG methods and develop their own RAG algorithms within a unified framework. The toolkit implements 12 advanced RAG methods and collects and organizes 32 benchmark datasets.

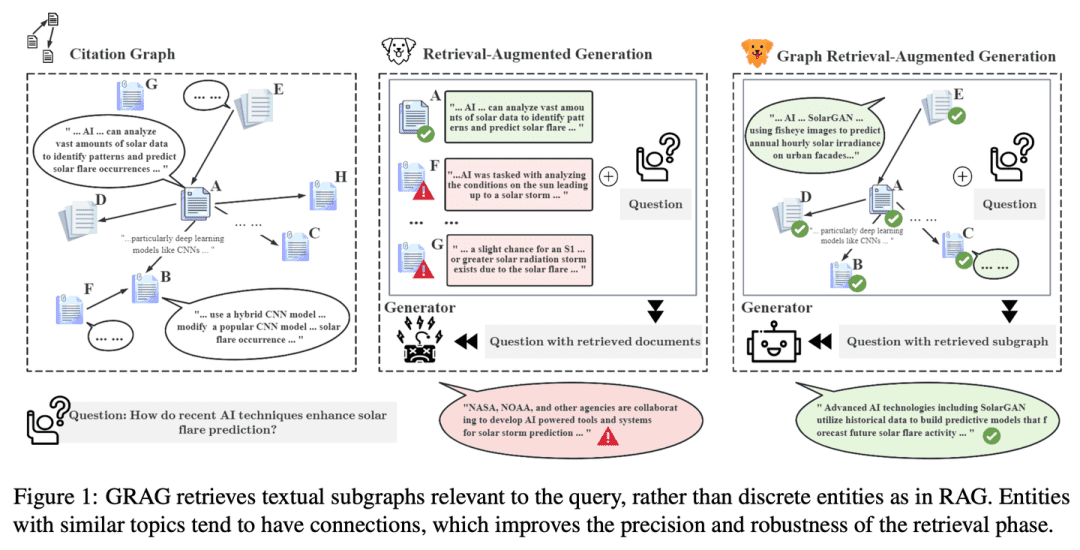

(18) GRAG [Detective]

Detective: Not content with surface clues, it digs into the network of connections between texts, tracing the truth behind each piece of information as if investigating a case, to make answers more accurate.

- Paper: GRAG: Graph Retrieval-Augmented Generation

- Project: https://github.com/HuieL/GRAG

Traditional RAG models ignore the connections between texts and the topological information of the database when dealing with complex graph-structured data, which creates a performance bottleneck. By emphasizing the importance of subgraph structures, GRAG significantly improves performance and reduces hallucinations in the retrieval and generation process.

(19) Camel-GraphRAG [Ambidextrous]

Ambidextrous: One hand uses Mistral to scan the text and extract information, while the other uses Neo4j to weave a web of relationships. At search time the two work together, finding semantically similar content while also following the trail through the graph, which makes retrieval more comprehensive and accurate.

- Project: https://github.com/camel-ai/camel

Camel-GraphRAG relies on the Mistral model to extract knowledge from given content and build knowledge structures, then stores this information in a Neo4j graph database. A hybrid approach combining vector retrieval with knowledge-graph retrieval is then used to query and explore the stored knowledge.

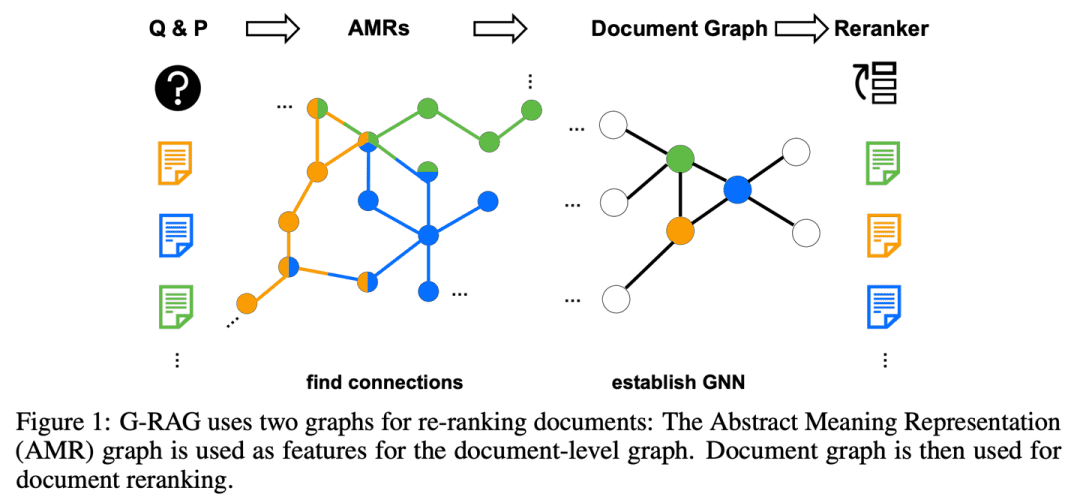

(20) G-RAG [Social Butterfly]

Social butterfly: Instead of searching for information in isolation, it builds a network of relationships for every piece of knowledge. Like a socialite who knows not only what each friend is good at but also who is friends with whom, it can follow the connections directly when looking for answers.

- Paper: Don't Forget to Connect! Improving RAG with Graph-based Reranking

RAG still struggles with the relationship between documents and the question context: when a document's relevance to the question is not obvious, or it contains only partial information, the model may fail to use it effectively. How to reasonably infer connections between documents is another important issue. G-RAG places a graph neural network (GNN)-based reranker between the RAG retriever and the reader. The method combines connection information between documents with semantic information (via abstract meaning representation graphs) to provide a context-aware ranker for RAG.
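A toy version of graph-based reranking is sketched below in Python. Two documents are linked when they share a concept (a crude stand-in for overlapping abstract meaning representation nodes), and one round of mean-neighbor aggregation replaces the trained GNN; the concept sets, the `alpha` weight, and the aggregation rule are all hypothetical. The point is only to show how a document with a weak standalone score can be promoted by strong neighbors.

```python
from typing import Dict, List, Set


def build_doc_graph(doc_concepts: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Connect two documents if they share at least one concept."""
    graph: Dict[str, Set[str]] = {d: set() for d in doc_concepts}
    ids = list(doc_concepts)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if doc_concepts[a] & doc_concepts[b]:
                graph[a].add(b)
                graph[b].add(a)
    return graph


def graph_rerank(scores: Dict[str, float], graph: Dict[str, Set[str]], alpha: float = 0.5) -> List[str]:
    """One round of mean-neighbour aggregation (stand-in for a trained GNN), then sort."""
    smoothed: Dict[str, float] = {}
    for doc, own in scores.items():
        nbrs = graph.get(doc, set())
        neighbour_mean = sum(scores[n] for n in nbrs) / len(nbrs) if nbrs else 0.0
        smoothed[doc] = own + alpha * neighbour_mean
    return sorted(smoothed, key=smoothed.get, reverse=True)
```

With scores d1 = 0.9, d2 = 0.4, d3 = 0.5, where d1 and d2 share a concept and d3 is isolated, the reranked order becomes d1, d2, d3: d2 overtakes d3 because of its link to d1.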

(21) LLM-Graph-Builder [Mover]

Mover: It gives chaotic text an understandable home. Rather than simply carrying things over, it works like a meticulous organizer: it labels every knowledge point, draws relationship lines, and finally builds a well-organized knowledge structure in a Neo4j database.

- Project: https://github.com/neo4j-labs/llm-graph-builder

Neo4j's open-source, LLM-based knowledge-graph builder converts unstructured data into a knowledge graph stored in Neo4j, using large models to extract nodes, relationships, and their properties from the unstructured data.

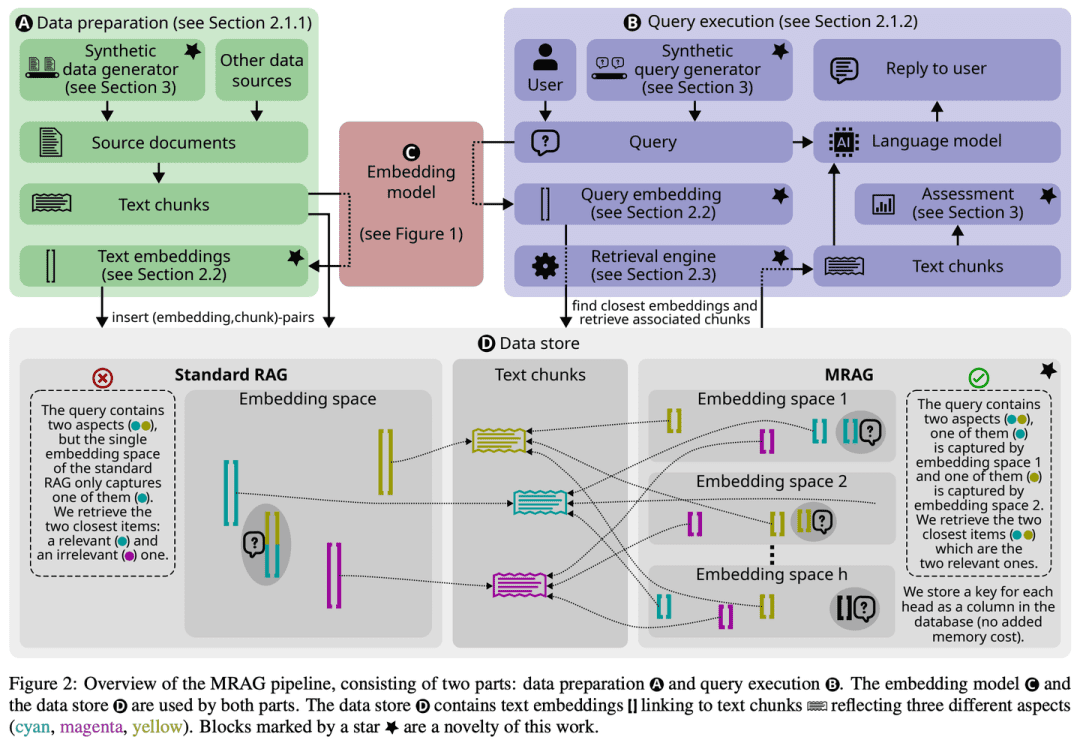

(22) MRAG [Octopus]

Octopus: Instead of attacking a problem with a single head, it grows many tentacles like an octopus, each grasping one aspect. Put simply, it is the AI version of multitasking.

- Paper: Multi-Head RAG: Solving Multi-Aspect Problems with LLMs

- Project: https://github.com/spcl/MRAG

Existing RAG solutions do not address queries that may require fetching multiple documents with substantially different content. Such queries arise frequently but are challenging because the embeddings of these documents may lie far apart in embedding space, making it hard to retrieve them all. The paper introduces Multi-Head RAG (MRAG), a novel scheme that fills this gap with a simple yet powerful idea: using the activations of the Transformer's multi-head attention layer, rather than the decoder layer, as keys for fetching multi-aspect documents. The motivation is that different attention heads can learn to capture different aspects of the data. Using the corresponding activations yields embeddings that represent the various aspects of data items and queries, improving retrieval accuracy for complex queries.
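The multi-head retrieval idea can be sketched in Python. Each embedding is split into equal per-head segments, each segment retrieves its own top-k neighbors, and the per-head results are merged by voting. The splitting assumes embeddings are concatenated head outputs of equal size; the reciprocal-rank vote weight and the plain cosine similarity are simplifying assumptions rather than MRAG's exact scoring.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


def split_heads(vec: Sequence[float], num_heads: int) -> List[Sequence[float]]:
    """Split a concatenated multi-head activation into equal per-head segments."""
    if len(vec) % num_heads:
        raise ValueError("embedding size must be divisible by num_heads")
    d = len(vec) // num_heads
    return [vec[i * d:(i + 1) * d] for i in range(num_heads)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def multi_head_retrieve(
    query_vec: Sequence[float],
    doc_vecs: Dict[str, Sequence[float]],
    num_heads: int,
    k: int = 2,
    top_n: int = 3,
) -> List[str]:
    q_heads = split_heads(query_vec, num_heads)
    d_heads = {doc: split_heads(v, num_heads) for doc, v in doc_vecs.items()}
    votes: Dict[str, float] = defaultdict(float)
    for h in range(num_heads):
        # Each head ranks documents in its own embedding subspace.
        ranked = sorted(d_heads, key=lambda doc: cosine(q_heads[h], d_heads[doc][h]), reverse=True)
        for rank, doc in enumerate(ranked[:k]):
            votes[doc] += 1.0 / (rank + 1)  # reciprocal-rank vote
    return sorted(votes, key=votes.get, reverse=True)[:top_n]
```

With a two-head query `[1, 0, 0, 1]`, a document matching only the first head and another matching only the second head are both retrieved, even though each is only half-similar to the query as a whole.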

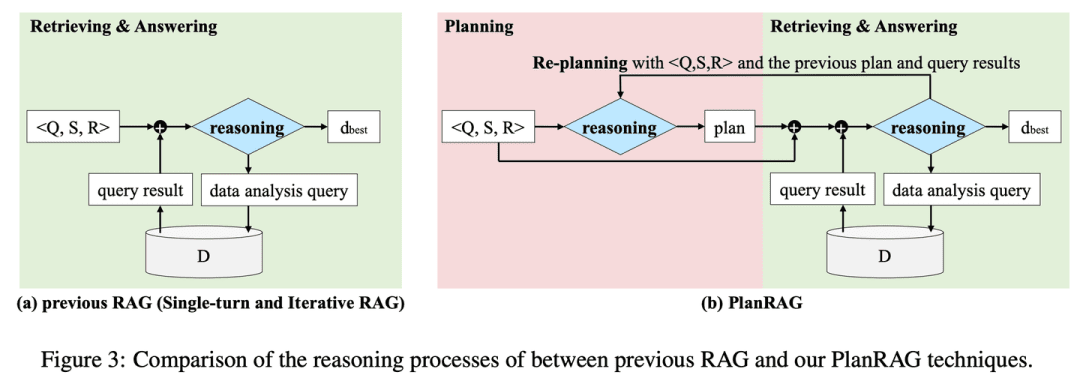

(23) PlanRAG [strategist]

Strategist: It first draws up a complete battle plan, then analyzes the situation against the rules and data, and finally makes the best tactical decision.

- Paper: PlanRAG: A Plan-then-Retrieval Augmented Generation for Generative Large Language Models as Decision Makers

- Project: https://github.com/myeon9h/PlanRAG

PlanRAG studies how to use large language models to solve complex data-analysis decision problems. It defines the Decision QA task: determining the best decision given a decision question Q, business rules R, and a database D. PlanRAG first generates a plan for the decision, and the retriever then generates queries for the data analysis.

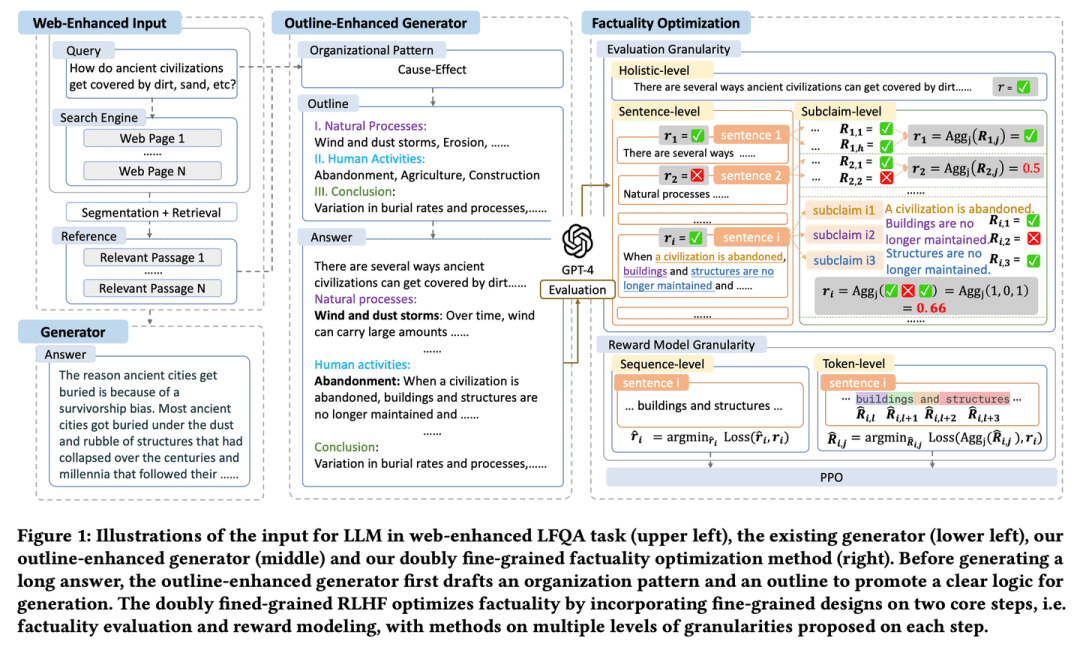

(24) FoRAG [Writer]

Writer: It first drafts an outline and framework for the piece, then expands and polishes it paragraph by paragraph. An "editor" also helps refine every detail through careful fact-checking and revision suggestions to ensure the quality of the work.

- Paper: FoRAG: Factuality-optimized Retrieval Augmented Generation for Web-enhanced Long-form Question Answering

FoRAG proposes a novel outline-enhanced generator: in the first stage, the generator uses outline templates to draft an answer outline based on the user query and context; in the second stage, it expands each point of the outline to construct the final answer. It also proposes a factuality optimization method based on a carefully designed doubly fine-grained RLHF framework, which provides denser reward signals by introducing fine-grained designs into its two core steps: factuality evaluation and reward modeling.

(25) Multi-Meta-RAG [Metadata Filter]

Metadata filter: Like an experienced data manager, it uses multiple filtering mechanisms to pinpoint the most relevant content in a vast amount of information. It looks beyond the surface, analyzing documents' "identity tags" (metadata) to make sure every piece of information it finds is truly on topic.

- Paper: Multi-Meta-RAG: Improving RAG for Multi-Hop Queries using Database Filtering with LLM-Extracted Metadata

- Project: https://github.com/mxpoliakov/multi-meta-rag

Multi-Meta-RAG uses database filtering with LLM-extracted metadata to improve how RAG selects, from a variety of sources, the documents relevant to a question.
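The filtering step can be sketched in Python. In Multi-Meta-RAG an LLM extracts metadata (such as the news source) from the query and that metadata becomes a database filter; here the LLM call is replaced by a hypothetical keyword matcher over a known source list, and documents are plain dicts with a `meta` field, so treat the field names as illustrative.

```python
from typing import Any, Dict, List


def extract_metadata(query: str, known_sources: List[str]) -> Dict[str, List[str]]:
    """Hypothetical stand-in for LLM extraction: find known source names mentioned in the query."""
    q = query.lower()
    return {"source": [s for s in known_sources if s.lower() in q]}


def filter_docs(docs: List[Dict[str, Any]], meta_filter: Dict[str, List[str]]) -> List[Dict[str, Any]]:
    """Keep documents whose metadata value is in the allowed list for every non-empty filter field."""
    active = {field: set(values) for field, values in meta_filter.items() if values}
    return [
        doc for doc in docs
        if all(doc.get("meta", {}).get(field) in allowed for field, allowed in active.items())
    ]
```

Similarity search would then run only over the filtered documents, so chunks from unrelated sources cannot crowd out the ones a multi-hop question actually needs. An empty filter keeps every document.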

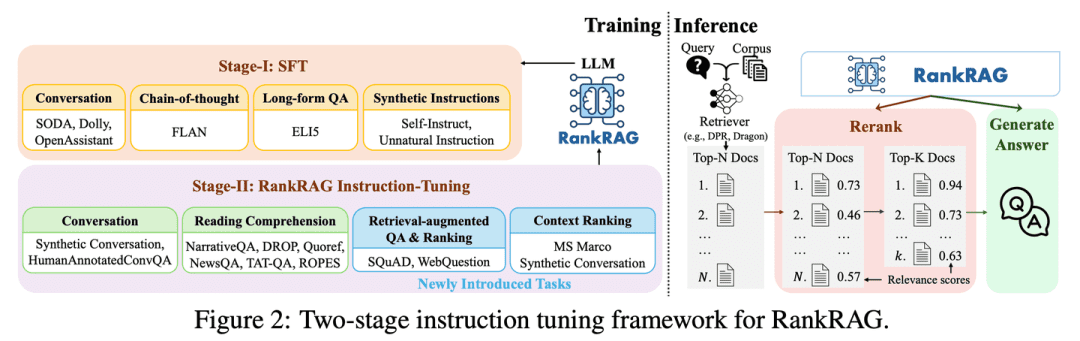

(26) RankRAG [All Rounder]

All-rounder: With just a little training it can act as both "judge" and "competitor". Like a gifted athlete who, with a bit of coaching, outperforms specialists in several events, it masters multiple skills at once.

- Paper: RankRAG: Unifying Context Ranking with Retrieval-Augmented Generation in LLMs

RankRAG instruction-tunes a single LLM to perform both context ranking and answer generation. With only a small amount of ranking data added to the training mix, the instruction-tuned LLM works surprisingly well, even outperforming existing expert ranking models, including the same LLM fine-tuned exclusively on a large amount of ranking data. This design not only removes the complexity of running multiple models in a traditional RAG system but also, by sharing model parameters, improves context-relevance judgments and the efficiency with which information is used.

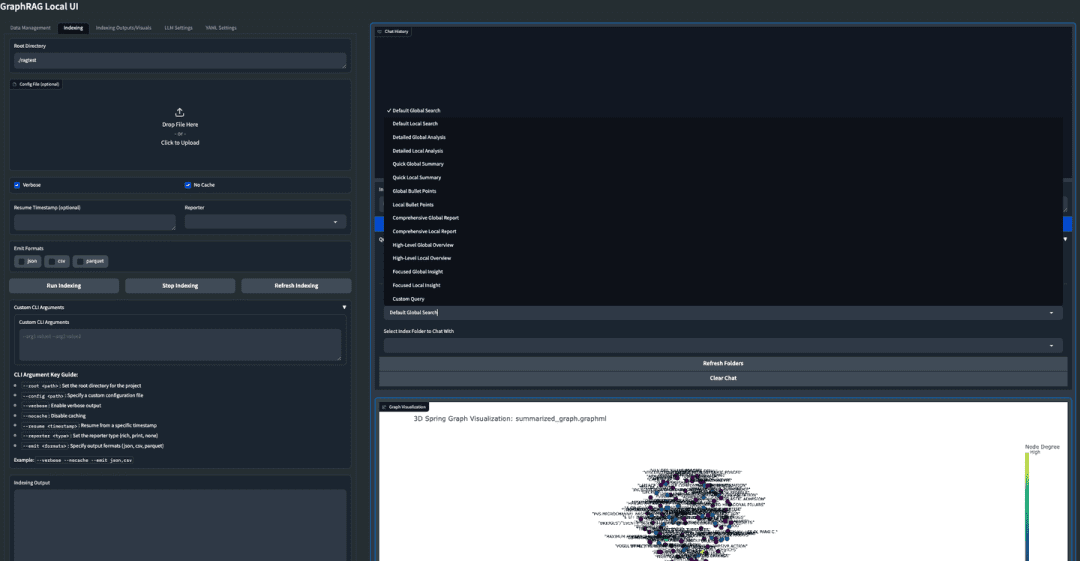

(27) GraphRAG-Local-UI [Modifier]

Tuner: It converts a sports car into a practical car for local roads and adds a friendly dashboard so anyone can drive it easily.

- Project: https://github.com/severian42/GraphRAG-Local-UI

GraphRAG-Local-UI is a version of Microsoft's GraphRAG adapted for local models, with a rich set of interactive user interfaces.

(28) ThinkRAG [Pocket Secretary]

Pocket secretary: It condenses a huge body of knowledge into a pocket-sized version, like a little secretary who travels with you, ready to help you find answers without large hardware.

- Project: https://github.com/wzdavid/ThinkRAG

ThinkRAG is a large-model retrieval-augmented generation system that can be easily deployed on a laptop to provide intelligent Q&A over a local knowledge base.

(29) Nano-GraphRAG [Traveling Light]

Traveling light: Like a lightly equipped athlete, it strips away the elaborate gear but keeps the core capabilities.

- Project: https://github.com/gusye1234/nano-graphrag

Nano-GraphRAG is a smaller, faster, and leaner GraphRAG that retains the core functionality.

(30) RAGFlow-GraphRAG [Navigator]

Navigator: It cuts shortcuts through the maze of Q&A: it first draws a map marking every knowledge point, merges duplicate signposts, and slims the map down so that people asking for directions do not take long detours.

- Project: https://github.com/infiniflow/ragflow

RAGFlow draws on the GraphRAG implementation, adding knowledge-graph construction as an optional step in the document preprocessing stage to serve QFS Q&A scenarios, and it introduces improvements such as entity deduplication and token optimization.

(31) Medical-Graph-RAG [Digital Doctor]

Digital doctor: Like an experienced medical consultant, it organizes complex medical knowledge clearly with graphs. Its diagnostic suggestions are not made out of thin air but are backed by evidence, so both doctors and patients can see the rationale behind each diagnosis.

- Paper: Medical Graph RAG: Towards Safe Medical Large Language Model via Graph Retrieval-Augmented Generation

- Project: https://github.com/SuperMedIntel/Medical-Graph-RAG

MedGraphRAG is a framework designed to address the challenges of applying LLMs in medicine. It uses a graph-based approach to improve diagnostic accuracy, transparency, and integration into clinical workflows. By generating responses backed by reliable sources, the system improves diagnostic accuracy and addresses the difficulty of maintaining context across large volumes of medical data.

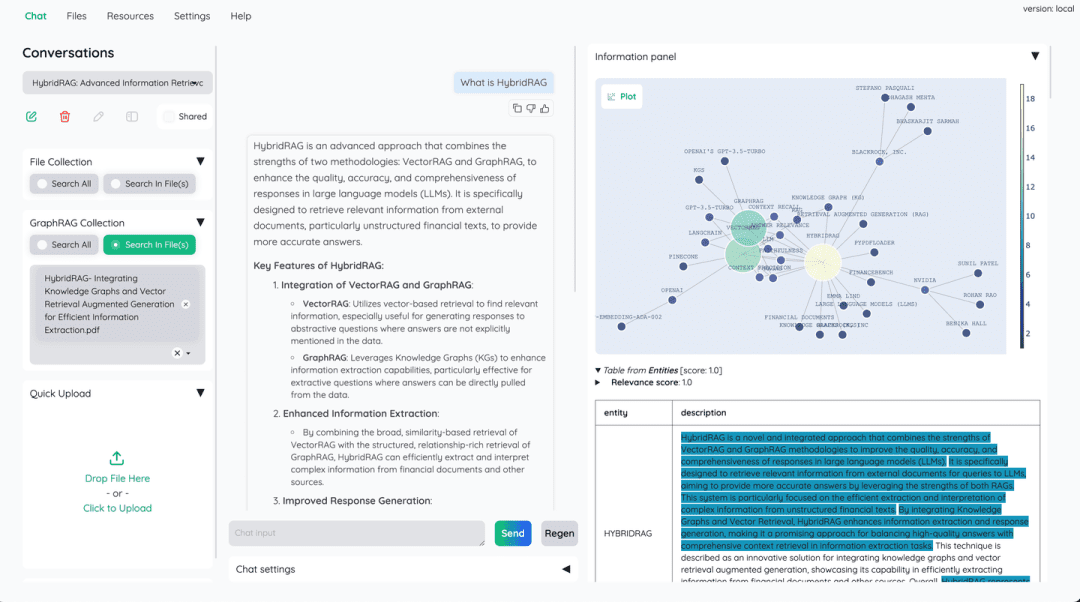

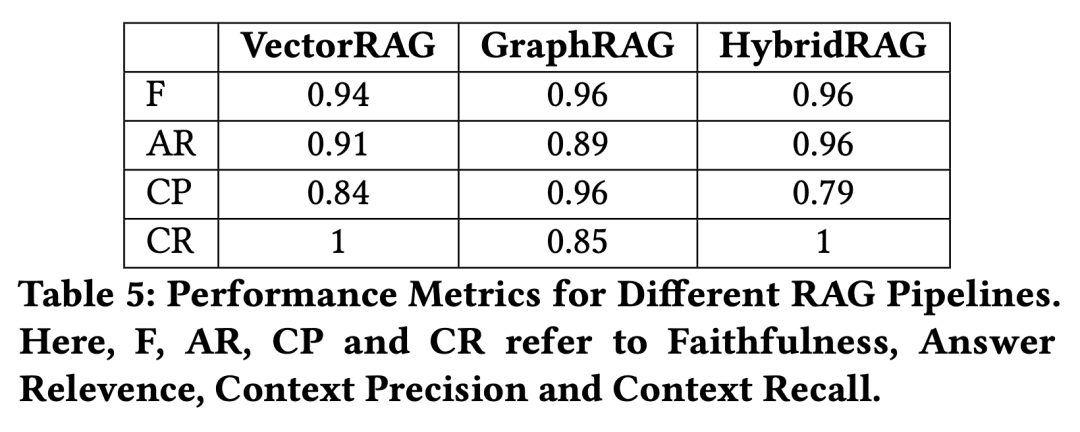

(32) HybridRAG [Compound Formula]

Compound formula: Just as in traditional Chinese medicine, where several herbs combined work better than any single one, the vector database handles fast retrieval while the knowledge graph supplies relational logic, and each complements the other's strengths.

- Paper: HybridRAG: Integrating Knowledge Graphs and Vector Retrieval Augmented Generation for Efficient Information Extraction

HybridRAG is a new approach that combines knowledge-graph-based RAG (GraphRAG) with vector-based RAG (VectorRAG) to enhance question-answering systems that extract information from financial documents, and it is shown to generate accurate and contextually relevant answers. By retrieving context from both vector databases and knowledge graphs, HybridRAG outperforms traditional VectorRAG and GraphRAG in both retrieval accuracy and answer generation.

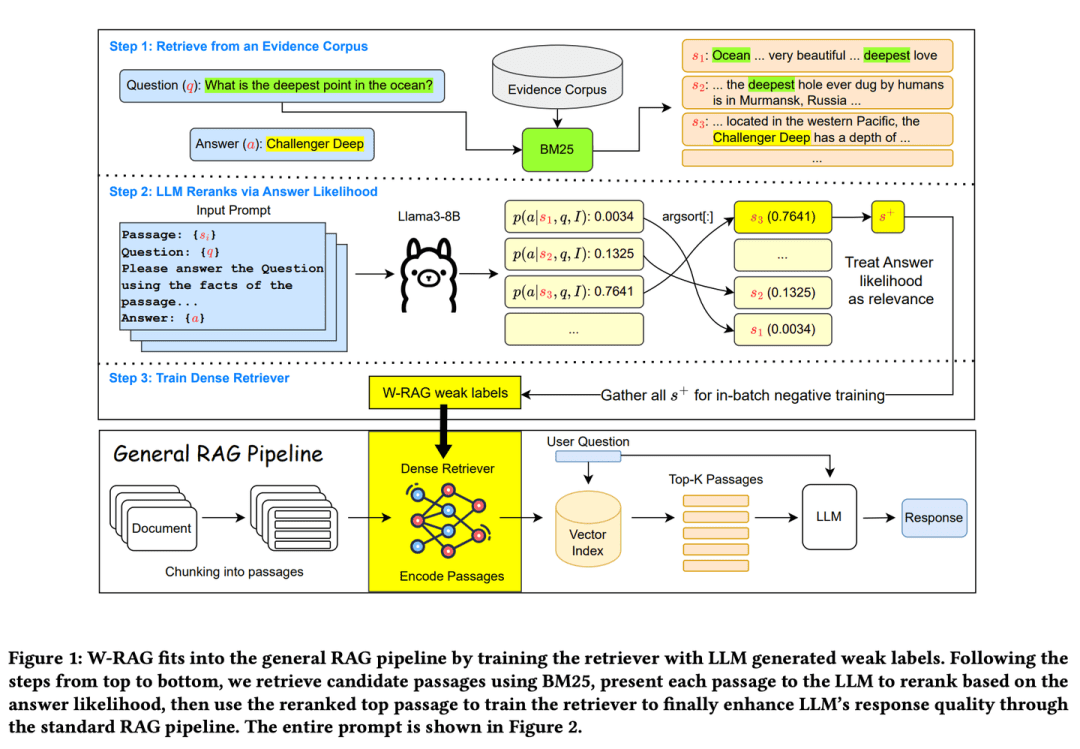

(33) W-RAG [Evolutionary Search]

Evolutionary search: Like a self-improving search engine, it learns what makes a good answer by having a large model rate passages, gradually improving its ability to find key information.

- Paper: W-RAG: Weakly Supervised Dense Retrieval in RAG for Open-domain Question Answering

- Project: https://github.com/jmnian/weak_label_for_rag

W-RAG uses the ranking ability of large language models to create weakly labeled data for training dense retrievers in open-domain Q&A. It reranks the top-K passages retrieved by BM25 according to the probability that the LLM generates the correct answer given the question and each passage. The highest-ranked passages are then used as positive training examples for dense retrieval.
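The weak-labeling step can be sketched in Python: score each BM25 passage by how likely the LLM is to produce the known answer given the question and that passage, rerank, and take the top passages as positives. `answer_logprobs` is a hypothetical hook that would return the LLM's per-token log-probabilities of the answer, and normalizing by the mean log-probability is an assumption made here, not necessarily W-RAG's exact formula.

```python
import math
from typing import Callable, List, Sequence, Tuple


def answer_likelihood(token_logprobs: Sequence[float]) -> float:
    """Length-normalized answer probability: exp(mean token log-probability)."""
    if not token_logprobs:
        raise ValueError("the answer must have at least one token")
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def weak_labels(
    question: str,
    answer: str,
    bm25_top_k: List[str],
    answer_logprobs: Callable[[str, str, str], Sequence[float]],  # (question, passage, answer) -> logprobs
    num_positive: int = 1,
) -> Tuple[List[str], List[str]]:
    """Rerank BM25 passages by answer likelihood; return (positives, remaining passages)."""
    scored = [(answer_likelihood(answer_logprobs(question, p, answer)), p) for p in bm25_top_k]
    scored.sort(key=lambda t: t[0], reverse=True)
    reranked = [p for _, p in scored]
    return reranked[:num_positive], reranked[num_positive:]
```

The positives (and, if desired, the lower-ranked passages as negatives) then feed a standard contrastive training loop for the dense retriever.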

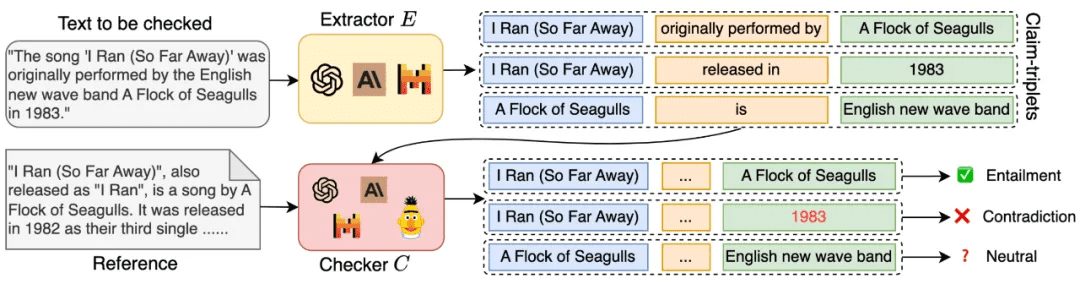

(34) RAGChecker [Quality Inspector]

Quality inspector: It does not simply mark an answer right or wrong; it examines the whole answering process in depth, from finding information to generating the final answer. Like a strict examiner, it not only produces a detailed grading report but also points out exactly where to improve.

- Paper: RAGChecker: A Fine-grained Framework for Diagnosing Retrieval-Augmented Generation

- Project: https://github.com/amazon-science/RAGChecker

RAGChecker is a diagnostic tool that produces fine-grained, comprehensive, and reliable diagnostic reports for a RAG system, along with actionable directions for further performance improvement. It evaluates not only the system's overall performance but also, in depth, the performance of its two core modules: retrieval and generation.
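RAGChecker works at the level of individual claims. The Python sketch below shows the overall precision/recall idea: precision is the share of response claims supported by the ground-truth answer, and recall is the share of ground-truth claims covered by the response. In RAGChecker, claim extraction and entailment checking are done by LLMs; here `entails` is a hypothetical caller-supplied function, and the retriever- and generator-specific metrics are omitted.

```python
from typing import Callable, Dict, List, Sequence


def claim_metrics(
    response_claims: List[str],
    gt_claims: List[str],
    entails: Callable[[Sequence[str], str], bool],  # does the premise set support the claim?
) -> Dict[str, float]:
    """Claim-level precision, recall and F1 for one response."""
    precision = (
        sum(entails(gt_claims, c) for c in response_claims) / len(response_claims)
        if response_claims
        else 0.0
    )
    recall = sum(entails(response_claims, c) for c in gt_claims) / len(gt_claims) if gt_claims else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

For a response with claims A, B, X against ground-truth claims A, B, C, D, precision is 2/3 (X is unsupported) and recall is 1/2 (C and D are missing), which tells you the response both hallucinated and under-covered.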

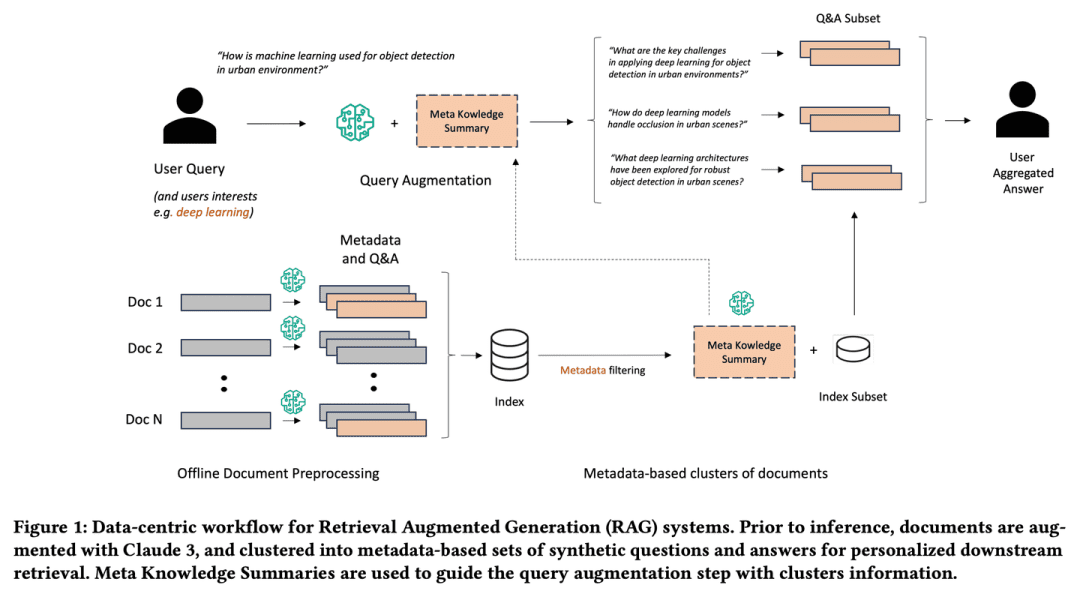

(35) Meta-Knowledge-RAG [Scholar]

Scholar: Like a senior academic researcher, it does not merely collect information; it thinks actively about the question, annotates and summarizes every document, and even anticipates likely questions in advance. It links related knowledge points into a knowledge network, giving queries more depth and breadth, as if a scholar were helping you with a research synthesis.

- Paper: Meta Knowledge for Retrieval Augmented Large Language Models

Meta-Knowledge-RAG (MK Summary) introduces a novel data-centric RAG workflow that turns the traditional "retrieve-read" pipeline into a more advanced "prepare-rewrite-retrieve-read" framework, achieving a domain-expert-level understanding of the knowledge base. The approach generates metadata and synthetic questions and answers for each document and introduces a new concept, the meta-knowledge summary, for clustering documents by metadata. These innovations enable personalized user-query augmentation and in-depth information retrieval across the knowledge base.

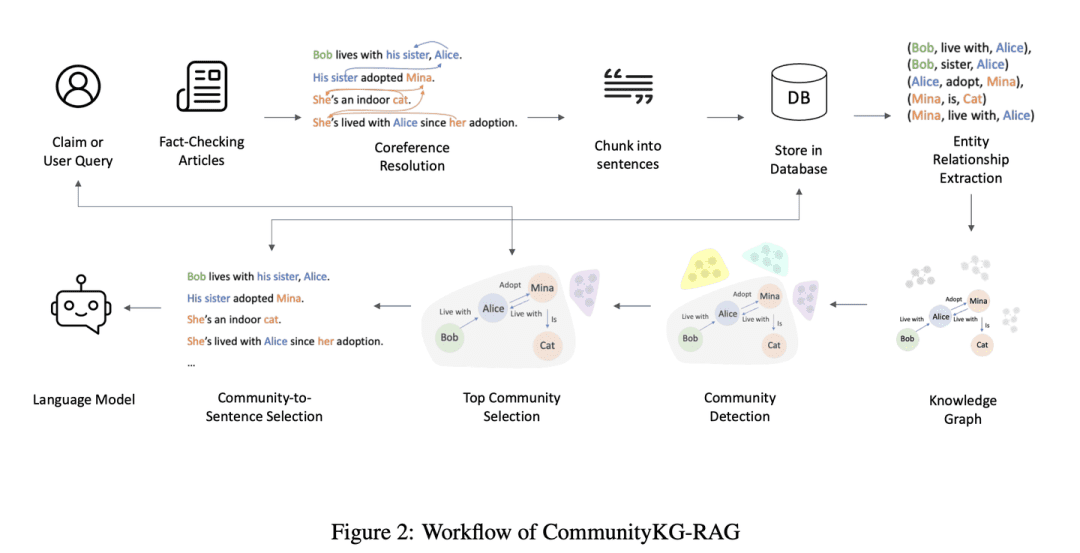

(36) CommunityKG-RAG [Community Exploration]

Community exploration: Like a guide who knows the community's web of relationships, it uses the associations and group characteristics among pieces of knowledge to locate relevant information precisely and verify its reliability, without any extra training.

- Paper: CommunityKG-RAG: Leveraging Community Structures in Knowledge Graphs for Advanced Retrieval-Augmented Generation in Fact-Checking

CommunityKG-RAG is a novel zero-shot framework that integrates the community structure of a knowledge graph with a RAG system to enhance fact-checking. It adapts to new domains and queries without additional training, and it exploits the multi-hop nature of community structures in the knowledge graph to significantly improve the accuracy and relevance of information retrieval.

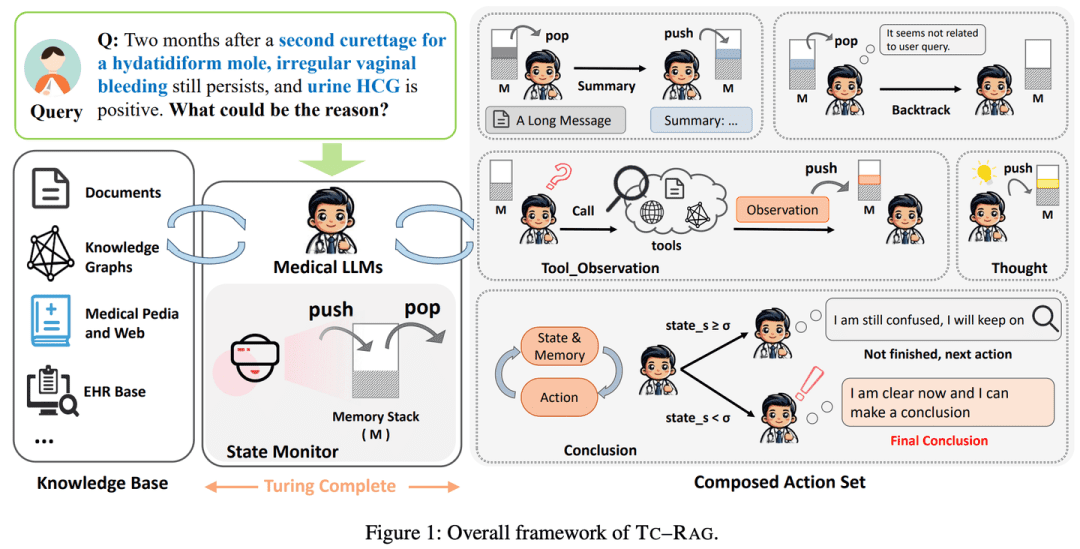

(37) TC-RAG [Memory Warlock]

Memory Warlock: It gives the LLM a brain that cleans up after itself. Just as we jot important steps on scratch paper while solving a problem and cross them out when we are done, it does not memorize by rote: it remembers what is worth remembering and promptly clears what should be forgotten, like a top student who keeps a tidy desk.

- Thesis: TC-RAG: Turing-Complete RAG's Case study on Medical LLM Systems

- Project: https://github.com/Artessay/TC-RAG

TC-RAG introduces a Turing-complete system to manage state variables, enabling more efficient and accurate knowledge retrieval. Using a memory stack system with adaptive retrieval, reasoning, and planning capabilities, TC-RAG not only ensures that the retrieval process halts in a controlled way but also mitigates the accumulation of erroneous knowledge through Push and Pop operations.

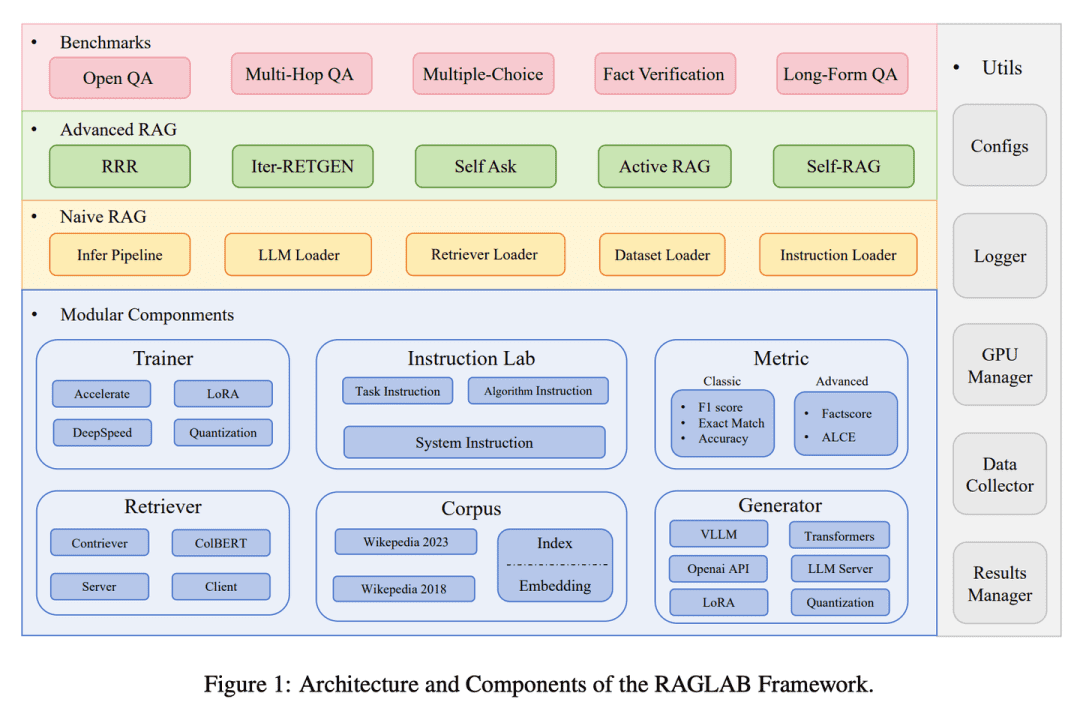
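To make the Push/Pop idea concrete, here is a minimal Python sketch. It is not TC-RAG's implementation: the `MemoryStack` class, the per-step `uncertainty` score, and the 0.2 stopping threshold are hypothetical stand-ins for the paper's state variables and halting condition. Evidence is pushed onto the stack, popped again if it makes the state worse, and the loop halts once uncertainty is low enough.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStack:
    """Hypothetical memory stack holding the evidence the model currently trusts."""
    threshold: float = 0.2                      # assumed halting threshold
    items: list = field(default_factory=list)

    def push(self, evidence: str) -> None:
        self.items.append(evidence)

    def pop(self) -> str:
        return self.items.pop()

    def should_stop(self, uncertainty: float) -> bool:
        # Controlled halting: stop retrieving once uncertainty is low enough.
        return uncertainty < self.threshold


def run_with_stack(steps, stack: MemoryStack):
    """steps: (evidence, uncertainty_after_adding_it) pairs, e.g. from an LLM judge."""
    current = 1.0                               # start fully uncertain
    for evidence, new_uncertainty in steps:
        stack.push(evidence)                    # Push the new evidence
        if new_uncertainty > current:
            stack.pop()                         # evidence made things worse: Pop it
            continue
        current = new_uncertainty
        if stack.should_stop(current):
            break
    return stack.items, current
```

With steps `[("fact A", 0.6), ("noisy B", 0.9), ("fact C", 0.1), ("fact D", 0.05)]`, the noisy item is popped, the loop stops after "fact C", and the stack holds only `["fact A", "fact C"]`.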

(38) RAGLAB [Arena]

Arena: It lets various algorithms compete and be compared fairly under the same rules, much like a standardized testing procedure in a science lab, so that each new method is evaluated objectively and transparently.

- Thesis: RAGLAB: A Modular and Research-Oriented Unified Framework for Retrieval-Augmented Generation

- Project: https://github.com/fate-ubw/RAGLab

Comprehensive, fair comparisons between new RAG algorithms are increasingly lacking, and the high-level abstractions of open-source tools reduce transparency and limit the ability to develop new algorithms and evaluation metrics. RAGLAB is a modular, research-oriented open-source library that reproduces 6 existing algorithms and provides a comprehensive research ecosystem. Using RAGLAB, the authors compare the 6 algorithms fairly on 10 benchmarks, helping researchers efficiently evaluate and innovate on algorithms.

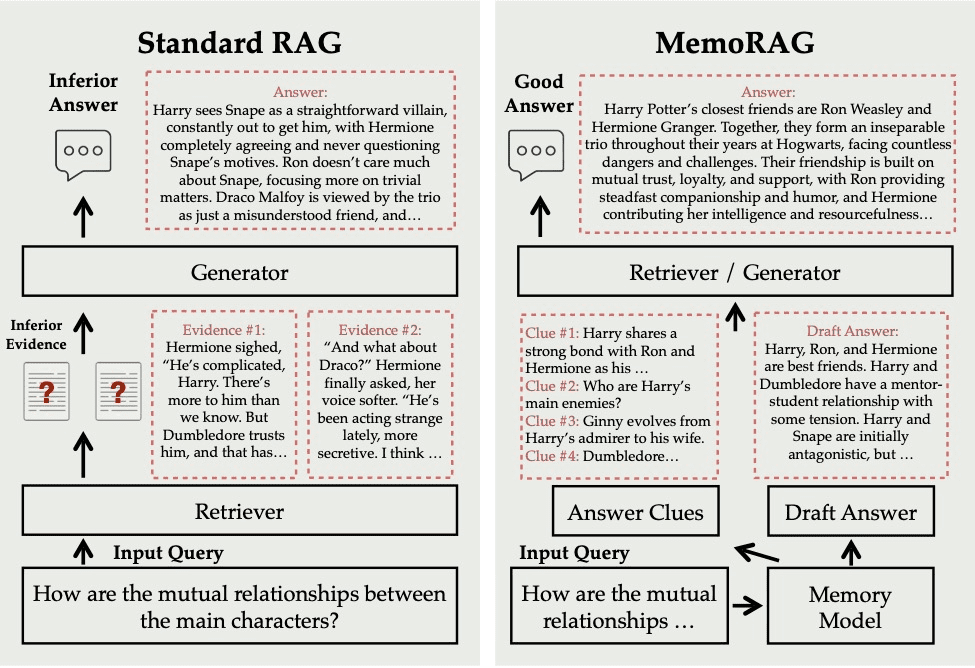

(39) MemoRAG [Photographic Memory]

Photographic Memory: It doesn't just look up information on demand; it has memorized and understood the entire knowledge base. When you ask a question, it quickly recalls relevant memories from this "super brain" and gives an accurate, insightful answer, like a knowledgeable expert.

- Project: https://github.com/qhjqhj00/MemoRAG

MemoRAG is an innovative Retrieval Augmented Generation (RAG) framework built on top of an efficient ultra-long memory model. Unlike standard RAG systems, which mainly handle queries with explicit information needs, MemoRAG uses its memory model to form a global understanding of the entire database. By recalling query-specific clues from memory, MemoRAG improves evidence retrieval, producing more accurate and context-rich responses.

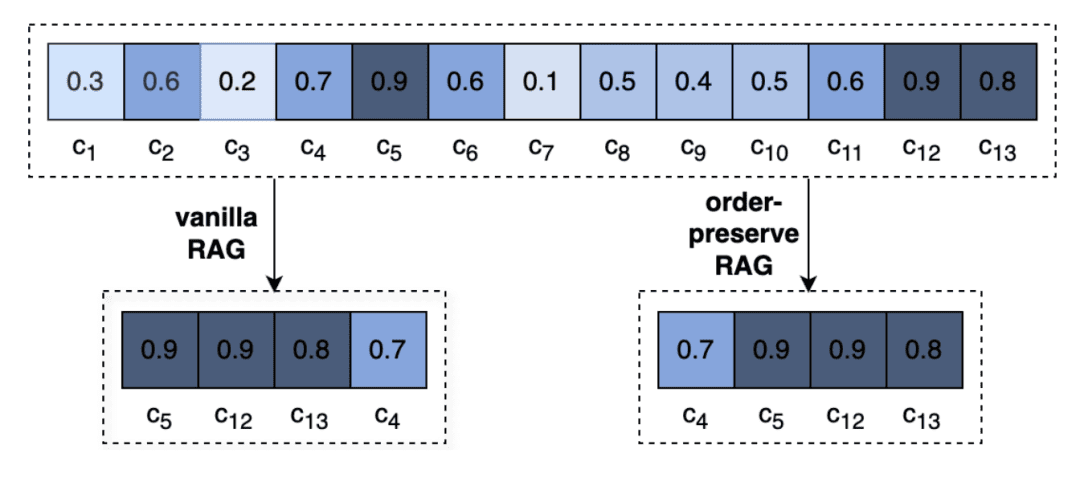

(40) OP-RAG [Attention Management]

Attention Management: When reading a particularly thick book, you cannot memorize every detail; the real masters are those who know how to mark the key chapters. Rather than reading aimlessly, it behaves like an experienced reader who underlines the key points while reading and turns straight to the marked pages when needed.

- Thesis: In Defense of RAG in the Era of Long-Context Language Models

Extremely long contexts dilute an LLM's attention to relevant information and can degrade answer quality. Revisiting RAG for long-context answer generation, the authors propose an order-preserving retrieval-augmented generation mechanism, OP-RAG, which significantly improves RAG performance in long-context question answering.

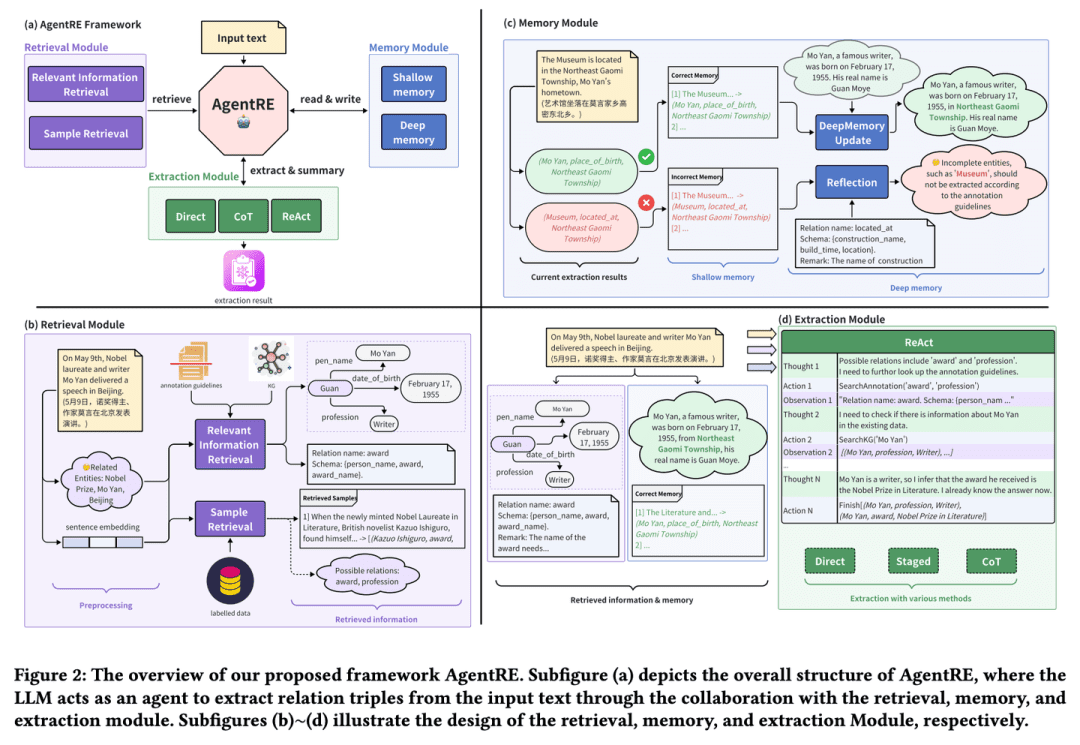
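The order-preserving step is small enough to sketch directly. Assuming each chunk already has a relevance score, the function below (a hypothetical helper, not code from the paper) takes the top-k chunks by score and then re-sorts them by their position in the original document instead of by score.

```python
def op_rag_select(chunks, scores, k):
    """Pick the top-k chunks by relevance, then restore original document order.

    chunks: list of strings in document order; scores: one relevance score per chunk.
    """
    if len(chunks) != len(scores):
        raise ValueError("chunks and scores must have the same length")
    # Indices of the k highest-scoring chunks (ties broken by earlier position).
    top = sorted(range(len(chunks)), key=lambda i: (-scores[i], i))[:k]
    # Order-preserving step: sort the selected indices by position, not by score.
    return [chunks[i] for i in sorted(top)]
```

For scores `[0.1, 0.5, 0.3, 0.9, 0.8]` and `k=3`, plain score order would be c3, c4, c1; the order-preserving selection yields `["c1", "c3", "c4"]`.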

(41) AgentRE [Intelligent Extraction]

Intelligent Extraction: Like a sociologist skilled at observing relationships, it not only memorizes key information but also proactively checks and reflects on it, so it can accurately understand a complex web of relationships. Even when relationships are tangled, it analyzes them from multiple perspectives to make sense of them without over-reading.

- Thesis: AgentRE: An Agent-Based Framework for Navigating Complex Information Landscapes in Relation Extraction

- Project: https://github.com/Lightblues/AgentRE

By integrating the memory, retrieval, and reflection capabilities of large language models, AgentRE addresses two challenges of relation extraction (RE) in complex scenarios: the diversity of relation types and ambiguous relations between entities within a single sentence. AgentRE consists of three major modules that help the agent acquire and process information efficiently, significantly improving RE performance.

(42) iText2KG [Architect]

Architect: Like a methodical engineer, it turns fragmented documents into a systematic knowledge network by refining, extracting, and integrating information step by step. It needs no detailed blueprints prepared in advance and can be flexibly extended and improved as needed.

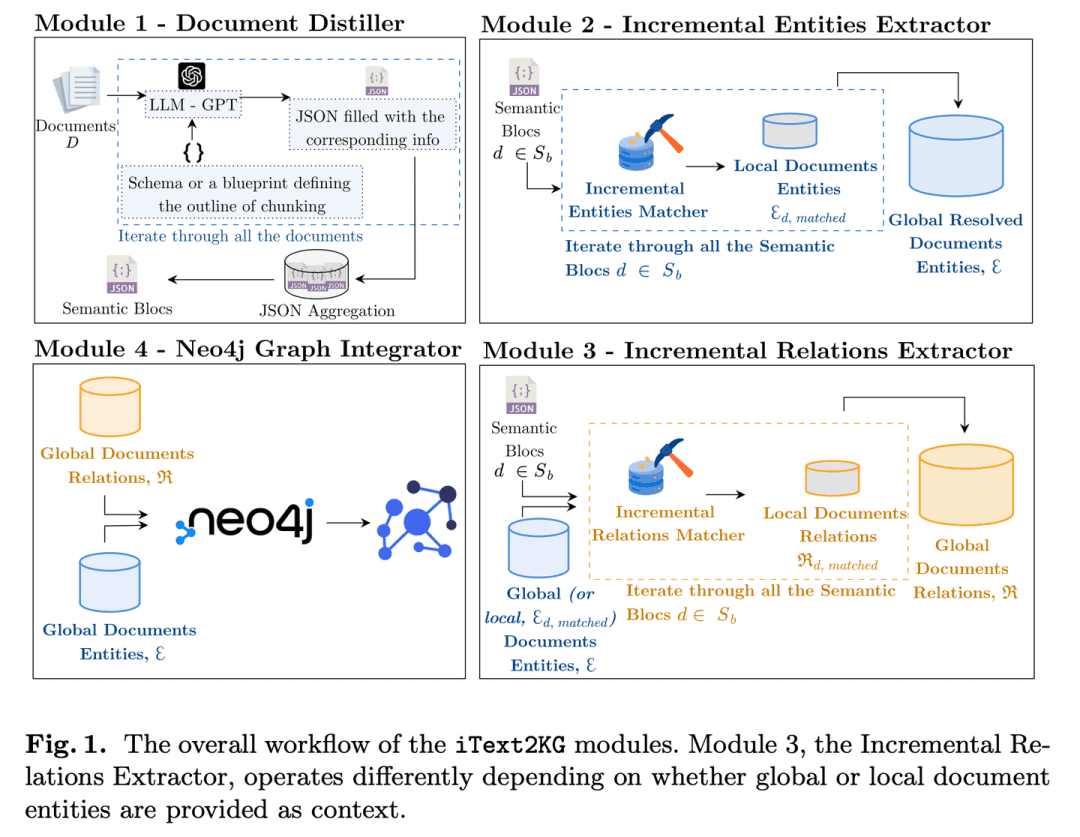

- Thesis: iText2KG: Incremental Knowledge Graphs Construction Using Large Language Models

- Project: https://github.com/AuvaLab/itext2kg

iText2KG (Incremental Knowledge Graph Construction) utilizes Large Language Models (LLMs) to build knowledge graphs from raw documents and achieves incremental knowledge graph construction through four modules (Document Refiner, Incremental Entity Extractor, Incremental Relationship Extractor, and Graph Integrator) without the need for prior definition of ontologies or extensive supervised training.
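The incremental part can be pictured as entity resolution against the graph built so far. The sketch below assumes entities are matched by cosine similarity of their embeddings against a fixed threshold; `merge_entities`, the 0.9 threshold, and the toy 2-D vectors are illustrative, not iText2KG's actual API.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def merge_entities(graph_entities, new_entities, threshold=0.9):
    """Incrementally merge newly extracted entities into the global entity set.

    Both arguments map entity name -> embedding vector. A new entity that is
    close enough to an existing one is resolved to that existing name;
    otherwise it becomes a new node. Returns (merged entities, alias map).
    """
    merged = dict(graph_entities)
    aliases = {}
    for name, vec in new_entities.items():
        best, best_sim = None, -1.0
        for existing, evec in merged.items():
            sim = cosine(vec, evec)
            if sim > best_sim:
                best, best_sim = existing, sim
        if best is not None and best_sim >= threshold:
            aliases[name] = best      # duplicate: reuse the existing node
        else:
            merged[name] = vec        # genuinely new entity
            aliases[name] = name
    return merged, aliases
```

Because each new document only adds or aliases entities, the graph grows without re-extracting earlier documents.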

(43) GraphInsight [Graph Interpretation]

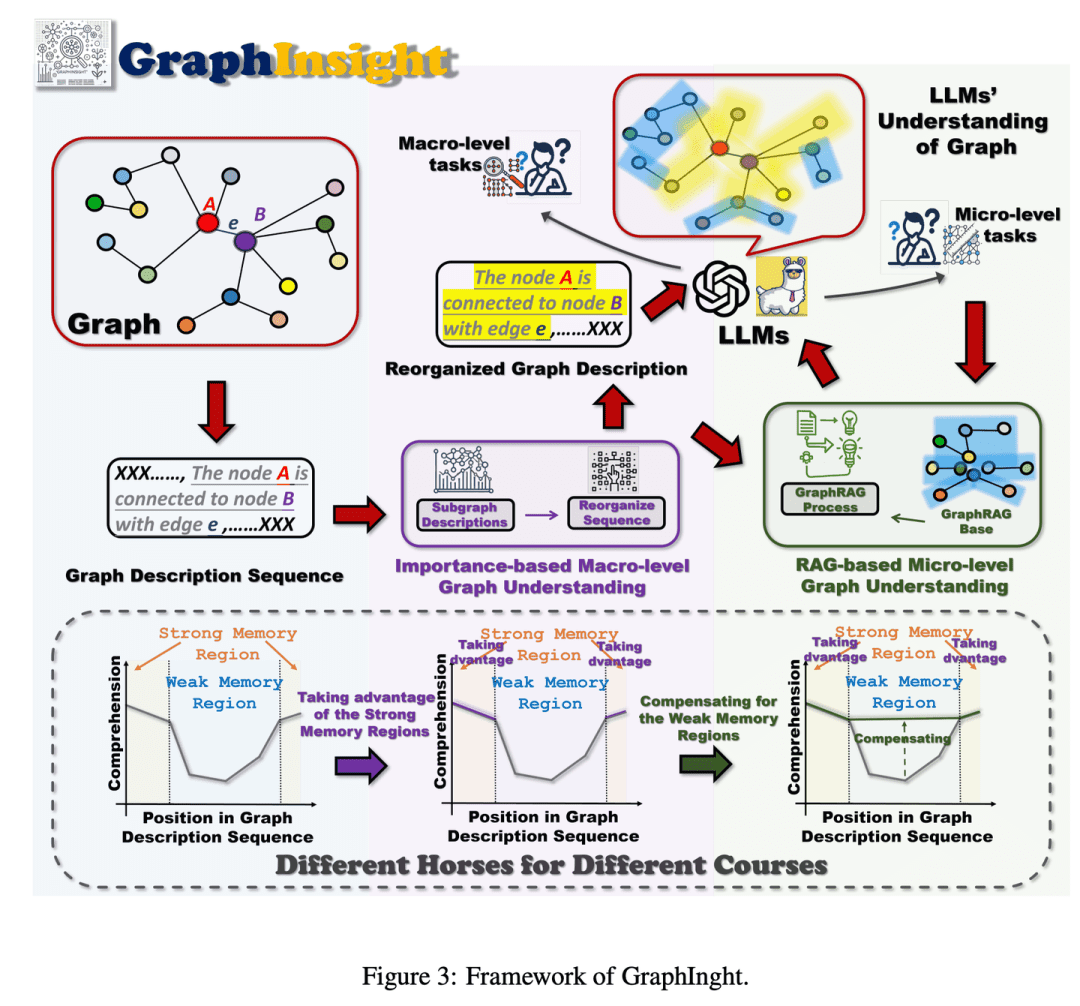

Graph Interpretation: Like an expert at reading infographics, it knows to place important information in the most prominent positions and to consult references for details when needed, and it can reason step by step through complex graphs, so the AI sees the big picture without missing the details.

- Thesis: GraphInsight: Unlocking Insights in Large Language Models for Graph Structure Understanding

GraphInsight is a new framework for improving LLMs' understanding of both macro- and micro-level graph information. It rests on two key strategies: 1) placing critical graph information at positions where the LLM's memory performance is strong, and 2) for regions where memory performance is weak, introducing a lightweight external knowledge base, borrowing the idea of Retrieval Augmented Generation (RAG). In addition, GraphInsight explores integrating these two strategies into an LLM agent workflow for composite graph tasks that require multi-step reasoning.

(44) LA-RAG [Dialect Pass]

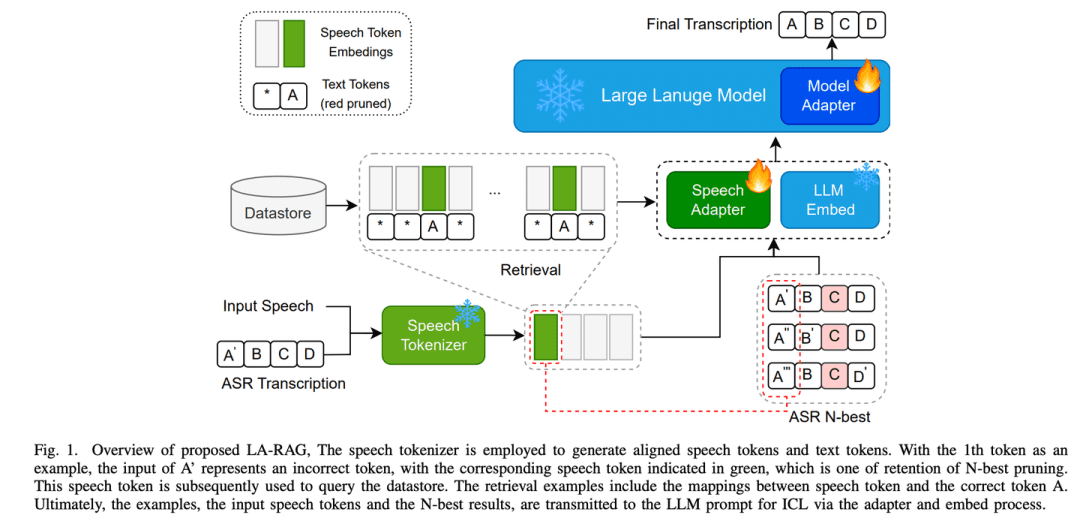

Dialect Pass: Like a linguist fluent in local dialects, it relies on careful speech analysis and contextual understanding to recognize not only standard Mandarin but also regional accents, allowing the AI to communicate with people from different regions without barriers.

- Thesis: LA-RAG: Enhancing LLM-based ASR Accuracy with Retrieval-Augmented Generation

LA-RAG is a novel Retrieval Augmented Generation (RAG) paradigm for LLM-based automatic speech recognition (ASR). It uses fine-grained, token-level speech datastores and a speech-to-speech retrieval mechanism to improve ASR accuracy through the LLM's in-context learning (ICL) capability.

(45) SFR-RAG [Refined Search]

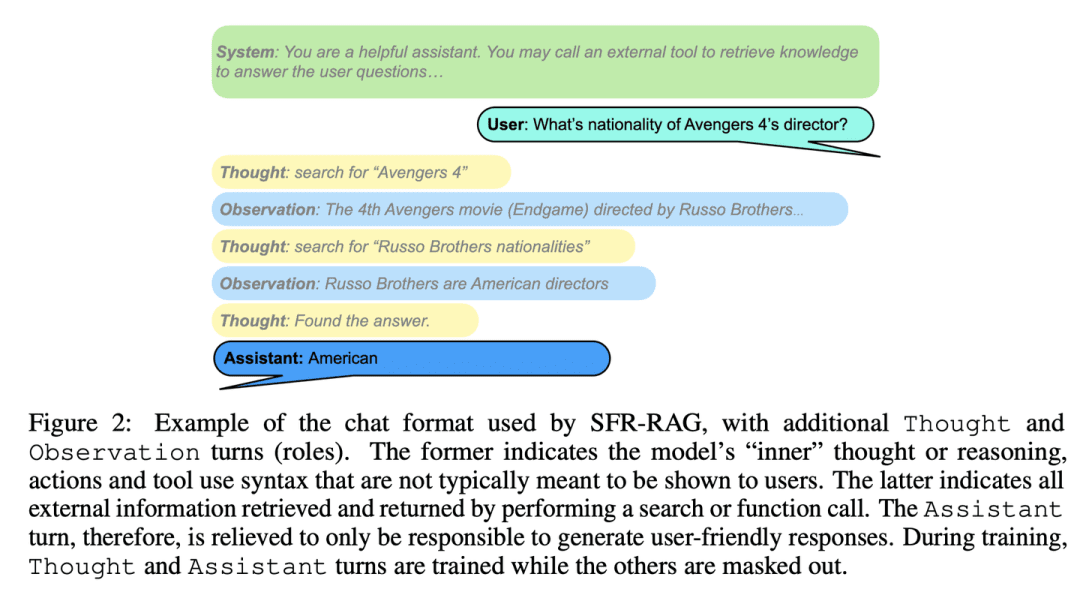

Refined Search: Like a refined reference adviser, small in size but precise in function, it understands what is needed and knows when to seek outside help, keeping answers both accurate and efficient.

- Thesis: SFR-RAG: Towards Contextually Faithful LLMs

SFR-RAG is a small, instruction-tuned language model focused on context-grounded generation and on minimizing hallucinations. While keeping its parameter count small and its performance high, the SFR-RAG model supports function calling, which lets it interact dynamically with external tools to retrieve high-quality contextual information.

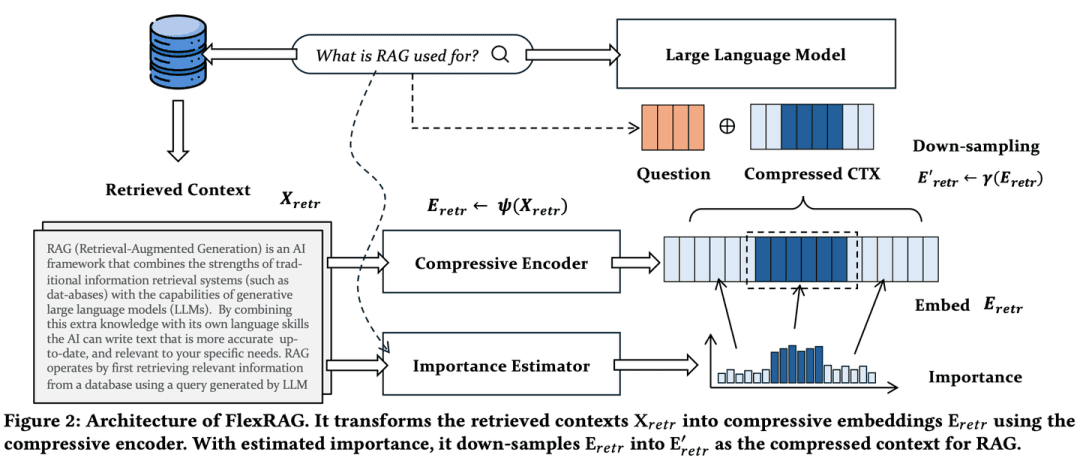

(46) FlexRAG [Compression Specialist]

Compression Specialist: It condenses long text into a concise summary, with a compression ratio that can be adjusted as needed, losing no key information while saving storage and processing costs, like distilling a thick book into concise reading notes.

- Thesis: Lighter And Better: Towards Flexible Context Adaptation For Retrieval Augmented Generation

In FlexRAG, retrieved contexts are compressed into compact embeddings before being encoded by the LLM, and these compressed embeddings are optimized to improve downstream RAG performance. A key feature of FlexRAG is its flexibility: it efficiently supports different compression ratios and selectively preserves important context. Thanks to these design choices, FlexRAG achieves superior generation quality while significantly reducing operating costs. Comprehensive experiments on various QA datasets validate it as a cost-effective and flexible solution for RAG systems.

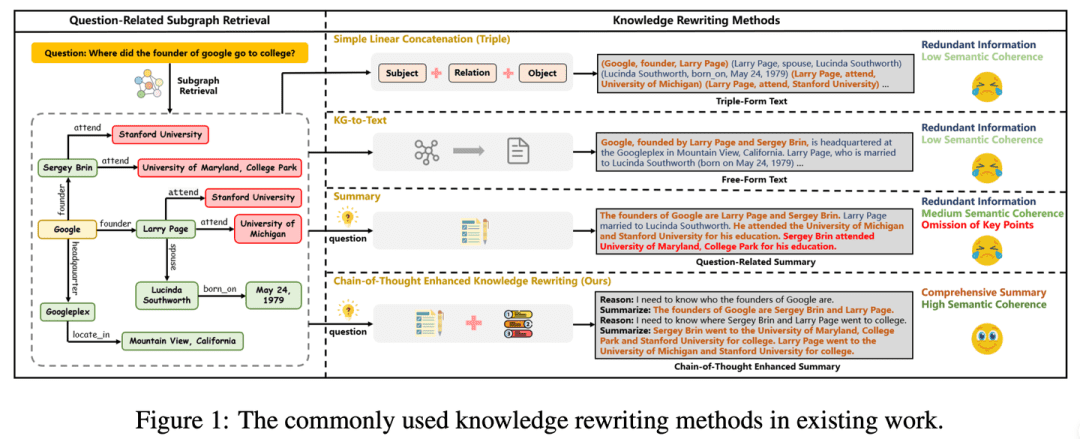

(47) CoTKR [Graph Translation]

Graph Translation: Like a patient teacher, it first understands the context of the knowledge and then explains it step by step, relaying it in depth rather than simply repeating it. By collecting feedback from students, it also refines its way of explaining, so knowledge is delivered more clearly and effectively.

- Thesis: CoTKR: Chain-of-Thought Enhanced Knowledge Rewriting for Complex Knowledge Graph Question Answering

- Project: https://github.com/wuyike2000/CoTKR

The CoTKR (Chain-of-Thought Enhanced Knowledge Rewriting) method generates reasoning paths and the corresponding knowledge alternately, overcoming the limitations of single-step knowledge rewriting. In addition, to bridge the preference gap between the knowledge rewriter and the QA model, the authors propose a training strategy that aligns preferences using question-answering feedback, further optimizing the knowledge rewriter with feedback from the QA model.

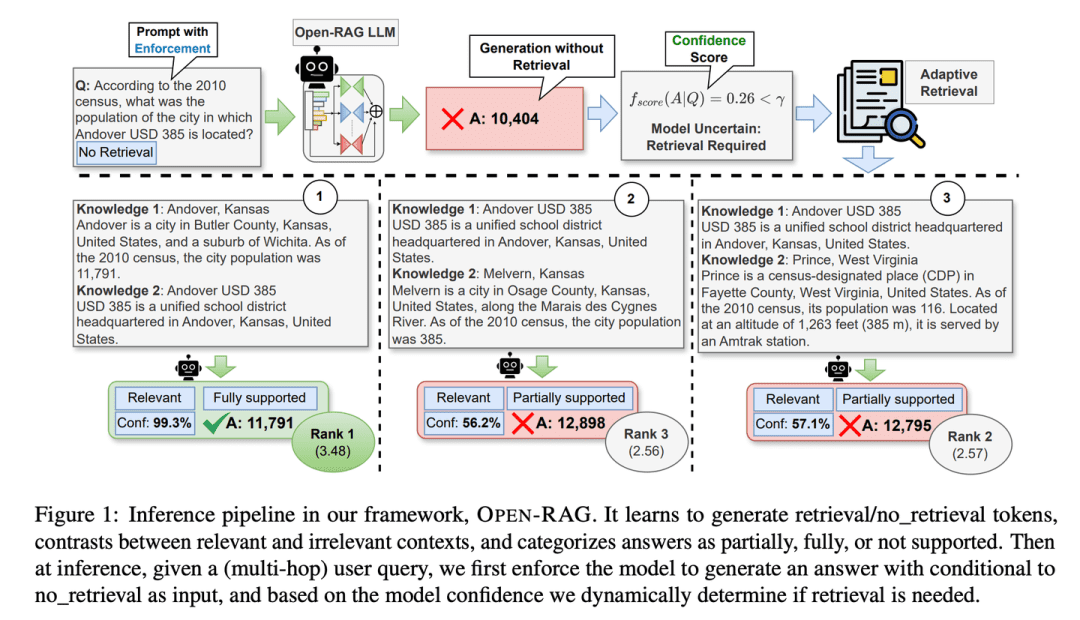

(48) Open-RAG [Think Tank]

Think Tank: It breaks a huge language model into groups of experts that can think independently and also work together. It is especially good at telling true information from false and knows, at critical moments, whether to look something up, like a seasoned think tank.

- Thesis: Open-RAG: Enhanced Retrieval-Augmented Reasoning with Open-Source Large Language Models

- Project: https://github.com/ShayekhBinIslam/openrag

Open-RAG improves the reasoning ability of RAG built on open-source large language models by converting an arbitrary dense LLM into a parameter-efficient sparse mixture-of-experts (MoE) model capable of handling complex reasoning tasks, including both single-hop and multi-hop queries. Open-RAG uniquely trains the model to cope with challenging distractors that appear relevant but are misleading.

(49) TableRAG [Excel Expert]

Excel Expert: It goes beyond simply viewing tabular data, understanding and retrieving data along both the header and the cell dimensions, like someone who uses pivot tables fluently to locate and extract exactly the key information they need.

- Thesis: TableRAG: Million-Token Table Understanding with Language Models

TableRAG is a retrieval-augmented generation framework designed specifically for table understanding. It combines query expansion with schema and cell retrieval to pinpoint key data before passing information to the language model, which enables more efficient data encoding and more precise retrieval, dramatically shortening prompt lengths and reducing information loss.

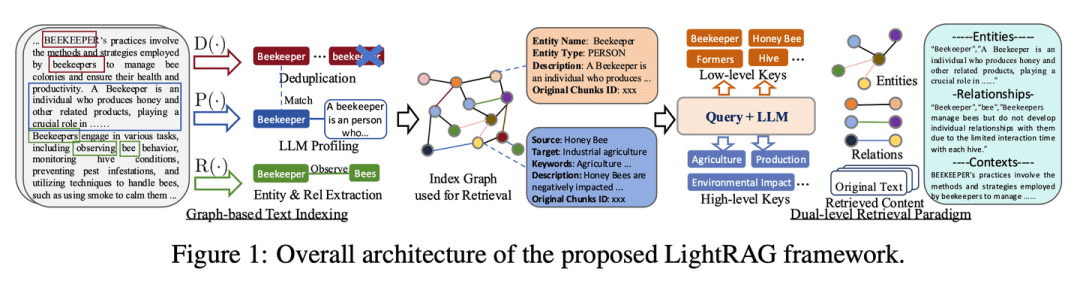
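A toy version of schema and cell retrieval shows why the prompt shrinks: only the matching column names and (column, value) pairs are passed on, not the whole table. This is a sketch under stated assumptions: `query_terms` stands in for the LLM-expanded queries, and plain substring matching replaces whatever retriever the real system uses; `table_retrieve` is a hypothetical name.

```python
def table_retrieve(header, rows, query_terms, top_k=3):
    """Toy schema retrieval + cell retrieval for one table.

    query_terms stands in for the expanded queries an LLM would produce.
    Scoring is case-insensitive substring matching, a deliberate
    simplification of a learned retriever.
    """
    terms = [t.lower() for t in query_terms]

    def score(text):
        text = str(text).lower()
        return sum(1 for t in terms if t in text)

    # Schema retrieval: keep the column names that match any expanded term.
    columns = [c for c in sorted(header, key=score, reverse=True) if score(c) > 0]

    # Cell retrieval: distinct (column, value) pairs whose value matches a term.
    cells = []
    for row in rows:
        for col, value in zip(header, row):
            if score(value) > 0 and (col, value) not in cells:
                cells.append((col, value))

    # Only these small lists (not the whole table) would go into the LLM prompt.
    return columns[:top_k], cells[:top_k]
```

For a question like "What is the population of France?", expanded to the terms `["population", "france"]`, only the `Population` column and the `("Country", "France")` cell are retrieved.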

(50) LightRAG [Spider-Man]

Spider-Man: Weaving nimbly through the web of knowledge, it catches the threads between knowledge points and follows them across the web. Like a librarian with second sight, it knows not only where each book is but also which books should be read together.

- Thesis: LightRAG: Simple and Fast Retrieval-Augmented Generation

- Project: https://github.com/HKUDS/LightRAG

LightRAG integrates graph structures into text indexing and retrieval. It employs a dual-level retrieval system that supports comprehensive information retrieval through both low-level and high-level knowledge discovery. Combining graph structures with vector representations also enables efficient retrieval of related entities and their relationships, significantly improving response time while preserving contextual relevance. An incremental update algorithm further ensures that new data is integrated promptly, keeping the system effective and responsive in rapidly changing data environments.

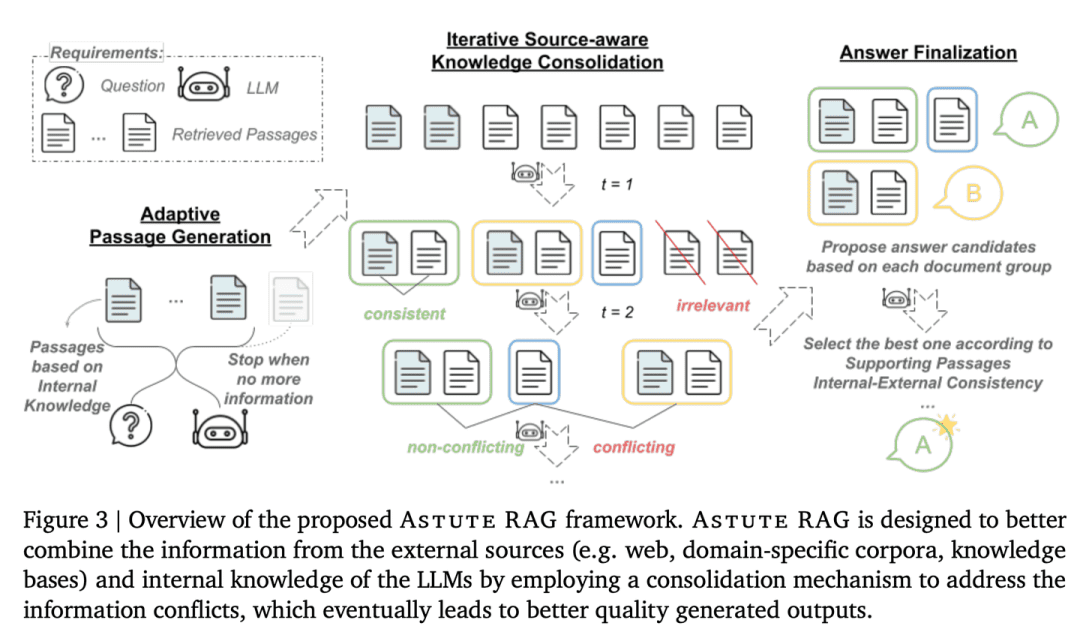

(51) AstuteRAG [Wise Judge]

Wise Judge: It stays wary of external information rather than blindly trusting search results, makes good use of its own accumulated knowledge, screens information for authenticity, and weighs evidence from multiple sources before reaching a conclusion, just like a seasoned judge.

- Thesis: Astute RAG: Overcoming Imperfect Retrieval Augmentation and Knowledge Conflicts for Large Language Models

Astute RAG adaptively elicits information from the LLM's internal knowledge, consolidates it with external search results, and finalizes the answer according to the reliability of the information, improving the system's robustness and trustworthiness.

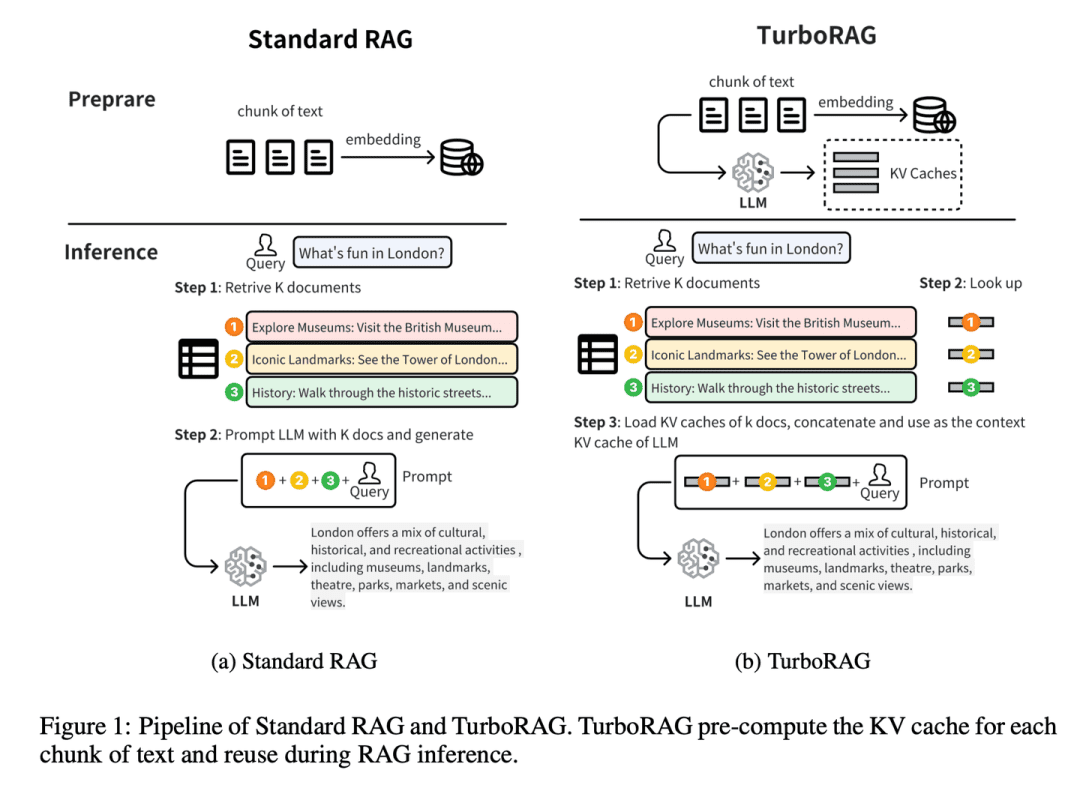

(52) TurboRAG [Shorthand Master]

Shorthand Master: It does its homework ahead of time and jots the answers down in a small notebook. Like a top student who doesn't cram at the last minute, it organizes common questions into a review notebook in advance, then simply flips to the right page when needed instead of working everything out on the spot each time.

- Thesis: TurboRAG: Accelerating Retrieval-Augmented Generation with Precomputed KV Caches for Chunked Text

- Project: https://github.com/MooreThreads/TurboRAG

TurboRAG optimizes the inference paradigm of RAG systems by precomputing and storing the KV caches of documents offline. Unlike traditional approaches, TurboRAG no longer computes these KV caches at every inference; instead it retrieves the precomputed caches for efficient prefill, eliminating repeated online computation. This significantly reduces computational overhead and speeds up response time while maintaining accuracy.

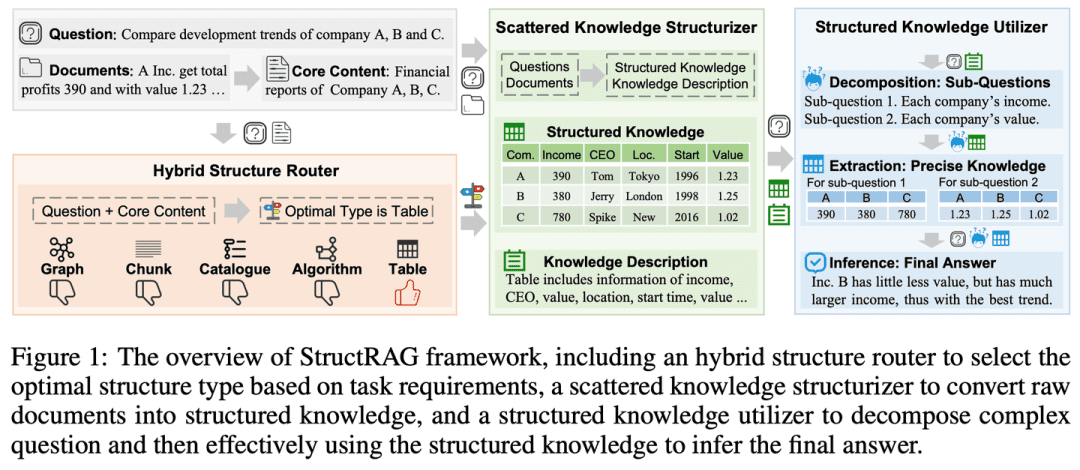
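The offline/online split can be sketched as a cache keyed by chunk id. Here `prefill` is a placeholder for running the model over a chunk (a real cache would hold per-layer key/value tensors), and `KVCacheStore` is a hypothetical name. The point is that prefill runs once per chunk offline, and query time only concatenates stored results.

```python
from typing import Callable, Dict, List, Tuple

KV = Tuple[str, ...]  # placeholder for per-layer key/value tensors


class KVCacheStore:
    """Hypothetical offline store of per-chunk KV caches, keyed by chunk id."""

    def __init__(self, prefill: Callable[[str], KV]):
        self.prefill = prefill          # expensive model prefill (placeholder)
        self.cache: Dict[str, KV] = {}
        self.prefill_calls = 0

    def precompute(self, chunks: Dict[str, str]) -> None:
        # Offline phase: run prefill once per chunk and keep the result.
        for chunk_id, text in chunks.items():
            self.cache[chunk_id] = self.prefill(text)
            self.prefill_calls += 1

    def assemble(self, retrieved_ids: List[str]) -> KV:
        # Online phase: concatenate stored caches instead of recomputing them.
        combined: KV = ()
        for chunk_id in retrieved_ids:
            combined += self.cache[chunk_id]
        return combined
```

A real system must also handle positional encodings and attention masking when stitching independently computed caches together; this sketch ignores both.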

(53) StructRAG [Organizer]

Organizer: It sorts cluttered information into categories, like organizing a closet. Like a top student who imitates how people think, it draws a mind map first instead of memorizing by rote.

- Thesis: StructRAG: Boosting Knowledge Intensive Reasoning of LLMs via Inference-time Hybrid Information Structurization

- Project: https://github.com/Li-Z-Q/StructRAG

Inspired by the cognitive theory that humans convert raw information into structured knowledge when dealing with knowledge-intensive reasoning, the framework introduces a hybrid information structuring mechanism that constructs and utilizes structured knowledge in the most appropriate format according to the specific requirements of the task at hand. By mimicking human-like thought processes, it improves LLM performance on knowledge-intensive reasoning tasks.

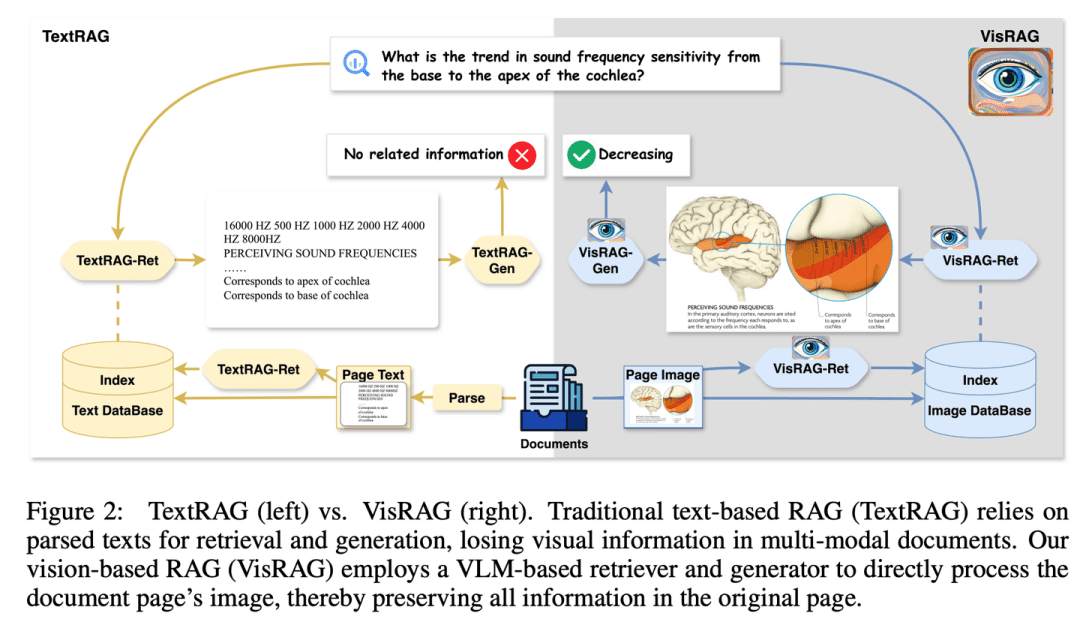

(54) VisRAG [Discerning Eyes]

Discerning Eyes: It recognizes that text is just a special form of image. Like a reader whose eyes have been opened, it no longer fixates on parsing word by word but "sees" the whole page directly. Instead of OCR, it uses a camera, capturing the essence of "a picture is worth a thousand words".

- Thesis: VisRAG: Vision-based Retrieval-augmented Generation on Multi-modality Documents

- Project: https://github.com/openbmb/visrag

VisRAG enhances generation with a RAG pipeline based on a vision-language model (VLM) that embeds and retrieves documents directly as images. Compared with traditional text-based RAG, VisRAG avoids the information loss introduced by parsing and preserves the original document's information more completely. Experiments show that VisRAG outperforms traditional RAG in both the retrieval and generation stages, with an end-to-end performance gain of 25-39%. VisRAG not only makes effective use of training data but also shows strong generalization, making it a strong choice for RAG over multimodal documents.

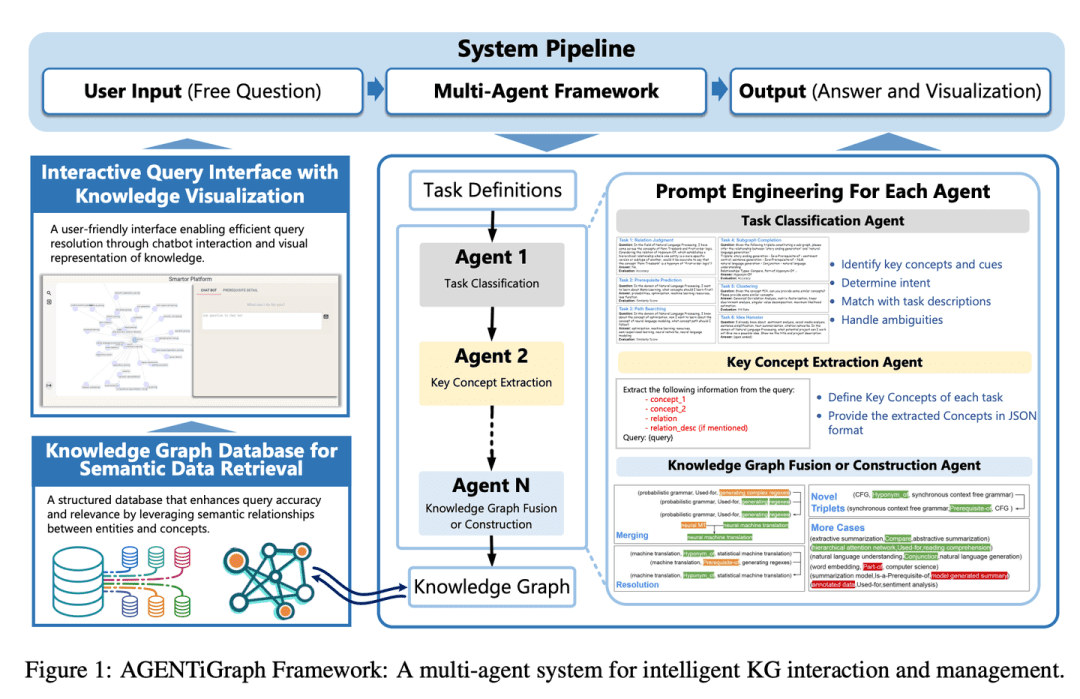

(55) AGENTiGraph [Knowledge Manager]

Knowledge Manager: Like a librarian you can chat with, it helps you organize and present knowledge through everyday conversation, backed by a team of assistants ready to answer questions and update information, making knowledge management easy and natural.

- Thesis: AGENTiGraph: An Interactive Knowledge Graph Platform for LLM-based Chatbots Utilizing Private Data

AGENTiGraph is a platform for knowledge management through natural language interaction. It integrates knowledge extraction, integration, and real-time visualization. AGENTiGraph uses a multi-agent architecture to dynamically interpret user intent, manage tasks, and integrate new knowledge, so it can adapt to changing user needs and data contexts.

(56) RuleRAG [Rule Following]

Rule Following: It teaches AI to act by rules, like handing a new hire the employee handbook before they start. Rather than letting it learn aimlessly, it is like a strict teacher who first explains the rules and worked examples and then lets students practice on their own. With enough practice, the rules become muscle memory, and the next time a similar problem comes up it naturally knows what to do.

- Thesis: RuleRAG: Rule-guided retrieval-augmented generation with language models for question answering

- Project: https://github.com/chenzhongwu20/RuleRAG_ICL_FT

RuleRAG proposes a rule-guided retrieval-augmented generation approach with language models. It explicitly introduces symbolic rules as in-context learning demonstrations (RuleRAG-ICL) to guide the retriever toward logically relevant documents along the direction of the rules, and uniformly guides the generator to produce grounded answers under the same set of rules. In addition, query-rule combinations can serve as supervised fine-tuning data to update the retriever and generator (RuleRAG-FT), improving rule-based instruction following, which in turn retrieves more supportive results and produces more acceptable answers.

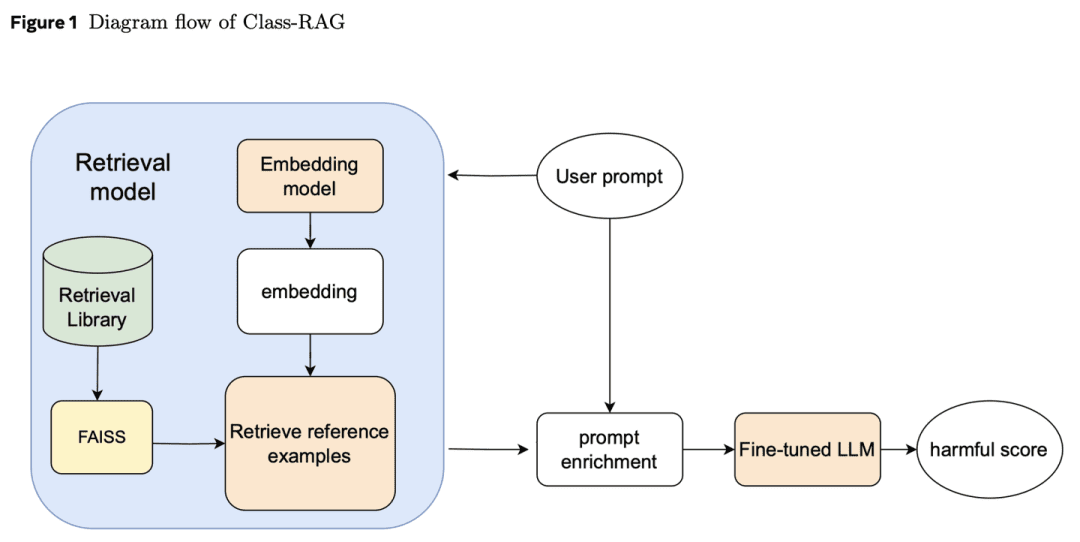

(57) Class-RAG [Judge]

Judge: Rather than deciding cases by rigid statutes, it studies and rules using an ever-growing body of case law. Like an experienced judge who holds a loose-leaf casebook and reads the latest cases at any moment, its rulings combine humanity with a sense of proportion.

- Thesis: Class-RAG: Content Moderation with Retrieval Augmented Generation

Content moderation classifiers are critical to the safety of generative AI. However, the nuances between safe and unsafe content are often hard to distinguish. As these technologies become widely adopted, continuously fine-tuning models to address new risks becomes increasingly difficult and expensive. To address this, the authors propose Class-RAG, which enables immediate risk mitigation by dynamically updating its retrieval library. Compared with traditional fine-tuned models, Class-RAG is more flexible and transparent and performs better in both classification and resistance to adversarial attacks. The authors also show that expanding the retrieval library can effectively improve moderation performance at low cost.

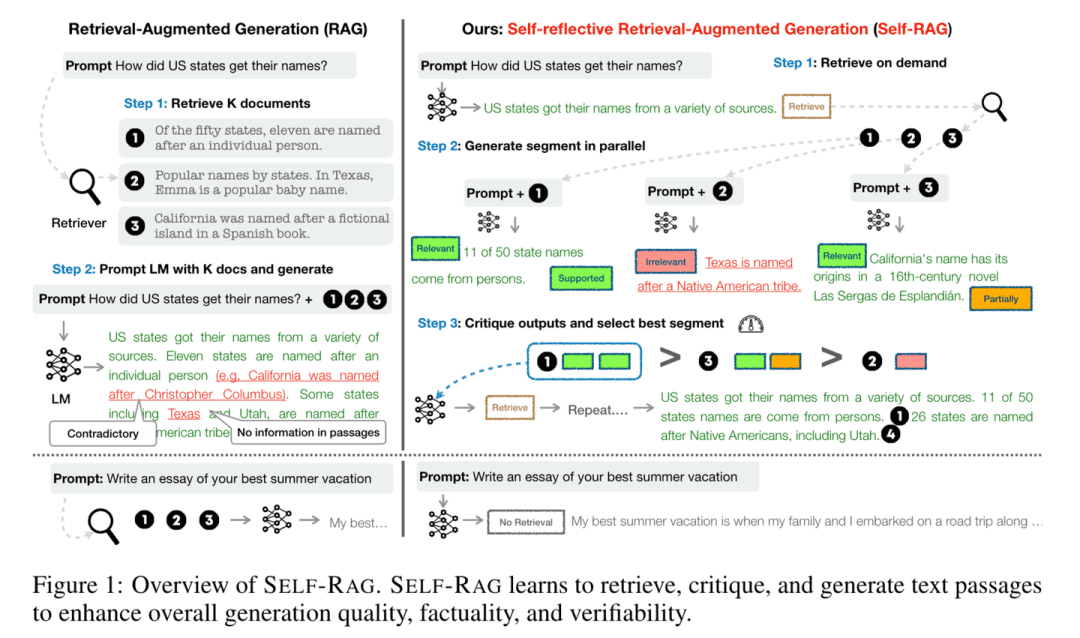
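The key property, that behavior changes as soon as the retrieval library changes, can be shown with a small retrieval-based classifier. This is a simplified sketch: Jaccard word overlap stands in for an embedding retriever, and a majority vote over the k nearest examples stands in for the LLM that Class-RAG conditions on the retrieved examples. `RetrievalClassifier` is a hypothetical name.

```python
from collections import Counter


def jaccard(a, b):
    sa, sb = set(a.lower().split()), set(b.lower().split())
    return len(sa & sb) / len(sa | sb) if sa | sb else 0.0


class RetrievalClassifier:
    """Label text by its nearest reference examples; no fine-tuning needed."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # the retrieval library: (text, label) pairs

    def add(self, text, label):
        # Updating the library changes behaviour immediately.
        self.examples.append((text, label))

    def classify(self, text):
        ranked = sorted(self.examples, key=lambda ex: jaccard(text, ex[0]), reverse=True)
        votes = Counter(label for _, label in ranked[: self.k])
        # Majority vote stands in for the LLM that reads the retrieved examples.
        return votes.most_common(1)[0][0] if votes else "unknown"
```

With `k=1`, adding the single example `("phishing kit for sale", "unsafe")` is enough to flip the label of `"phishing kit tutorial"` from `"safe"` to `"unsafe"`, with no retraining.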

(58) Self-RAG [Reflector]

Reflector: When answering questions, it not only consults information but also reflects on and checks its answers for accuracy and completeness. By "thinking while speaking", like a prudent scholar, it makes sure every point is backed by solid evidence.

- Thesis: Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection

- Project: https://github.com/AkariAsai/self-rag

Self-RAG improves the quality and factual accuracy of language models through retrieval and self-reflection. The framework trains a single arbitrary language model to retrieve passages adaptively on demand, and to generate and reflect on the retrieved passages and its own output using special tokens called reflection tokens. Generating reflection tokens makes the model controllable at inference time, allowing it to adapt its behavior to different task requirements.

(59) SimRAG [Self-Taught]

Self-Taught: Facing a specialized field, it poses questions to itself and then answers them, building up its domain knowledge through constant practice, just as students master a subject by repeatedly doing exercises.

- Thesis: SimRAG: Self-Improving Retrieval-Augmented Generation for Adapting Large Language Models to Specialized Domains

SimRAG is a self-training method that equips LLMs with the joint capabilities of question answering and question generation for specific domains. Good questions can only be asked when the knowledge is truly understood, so the two capabilities complement each other and help the model understand specialized knowledge better. The LLM is first fine-tuned on instruction-following, question-answering, and retrieval-related data. The same LLM is then prompted to generate diverse domain-relevant questions from an unlabeled corpus, with additional filtering strategies retaining only high-quality synthetic examples. Training on these synthetic examples improves the LLM's performance on domain-specific RAG tasks.

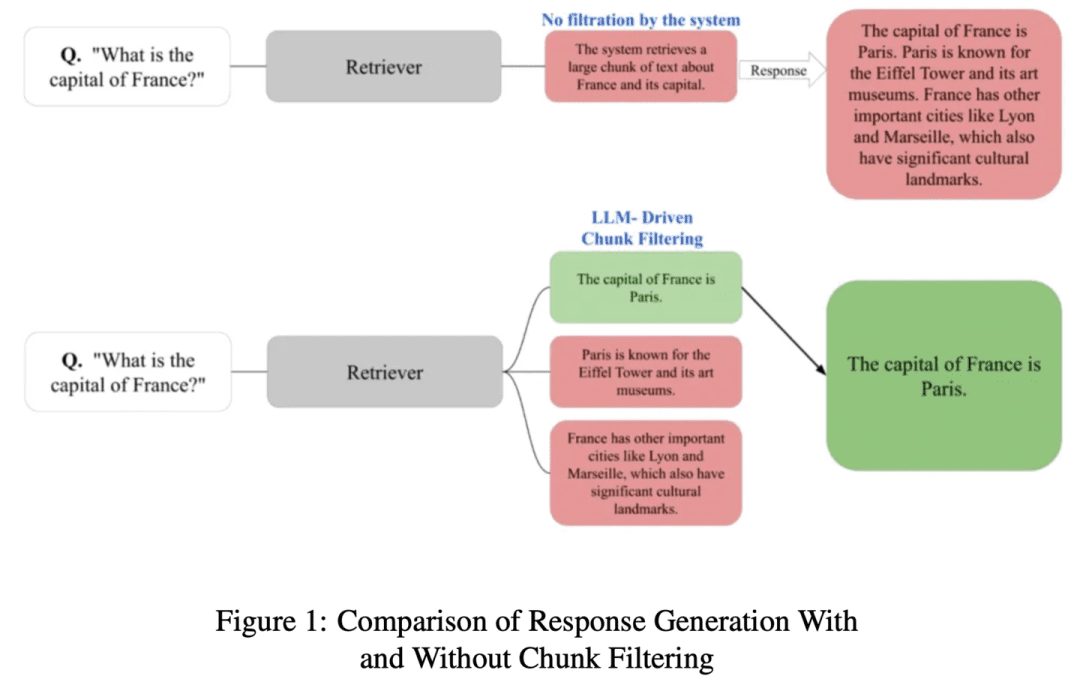

(60) ChunkRAG [Excerpt Master]

Excerpt Master: It breaks long articles into smaller passages, then picks out the most relevant pieces with a professional eye, neither missing the point nor getting distracted by irrelevant content.

- Thesis: ChunkRAG: Novel LLM-Chunk Filtering Method for RAG Systems

ChunkRAG proposes an LLM-driven chunk filtering approach that strengthens RAG systems by evaluating and filtering retrieved information at the chunk level, where "chunks" are smaller, coherent parts of a document. It uses semantic chunking to divide documents into coherent parts and relevance scoring by a large language model to assess how well each chunk matches the user query. By filtering out less relevant chunks before the generation phase, it significantly reduces hallucinations and improves factual accuracy.
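The two stages, semantic chunking followed by chunk-level relevance filtering, can be sketched as below. Word-overlap similarity is an assumption standing in for both the embedding similarity used to form chunks and the LLM relevance score; the thresholds are arbitrary, and `score_fn` is the hook where an LLM scorer would go.

```python
import re


def _words(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(a, b):
    """Jaccard word overlap: a cheap stand-in for embedding/LLM similarity."""
    wa, wb = _words(a), _words(b)
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


def semantic_chunks(text, merge_threshold=0.2):
    """Merge consecutive sentences while they stay on the same topic."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    chunks = []
    for sent in sentences:
        if chunks and overlap(chunks[-1][-1], sent) >= merge_threshold:
            chunks[-1].append(sent)      # similar to previous sentence: same chunk
        else:
            chunks.append([sent])        # topic shift: start a new chunk
    return [" ".join(c) for c in chunks]


def filter_chunks(query, chunks, score_fn=overlap, keep_threshold=0.1):
    """Drop chunks whose relevance score falls below the threshold.

    In ChunkRAG the score comes from an LLM; pass such a scorer as score_fn.
    """
    return [c for c in chunks if score_fn(query, c) >= keep_threshold]
```

On "Paris is the capital of France. Paris hosts the Louvre. Bananas are rich in potassium.", the two Paris sentences form one chunk, and a question about the capital of France keeps only that chunk.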

(61) FastGraphRAG [Radar]

Radar: Like Google's PageRank, it ranks knowledge points on a trending list. As with opinion leaders on a social network, the more followers a node has, the more visible it becomes. Instead of searching aimlessly, it acts like a scout with radar, heading wherever the signal is strongest.

- Project: https://github.com/circlemind-ai/fast-graphrag

FastGraphRAG is an efficient, interpretable, and highly accurate graph retrieval-augmented generation framework. It applies the PageRank algorithm during knowledge graph traversal to quickly locate the most relevant knowledge nodes. By computing an importance score for each node, PageRank lets GraphRAG filter and rank information in the knowledge graph more intelligently, like equipping GraphRAG with an "importance radar" that quickly finds key information in a sea of data.

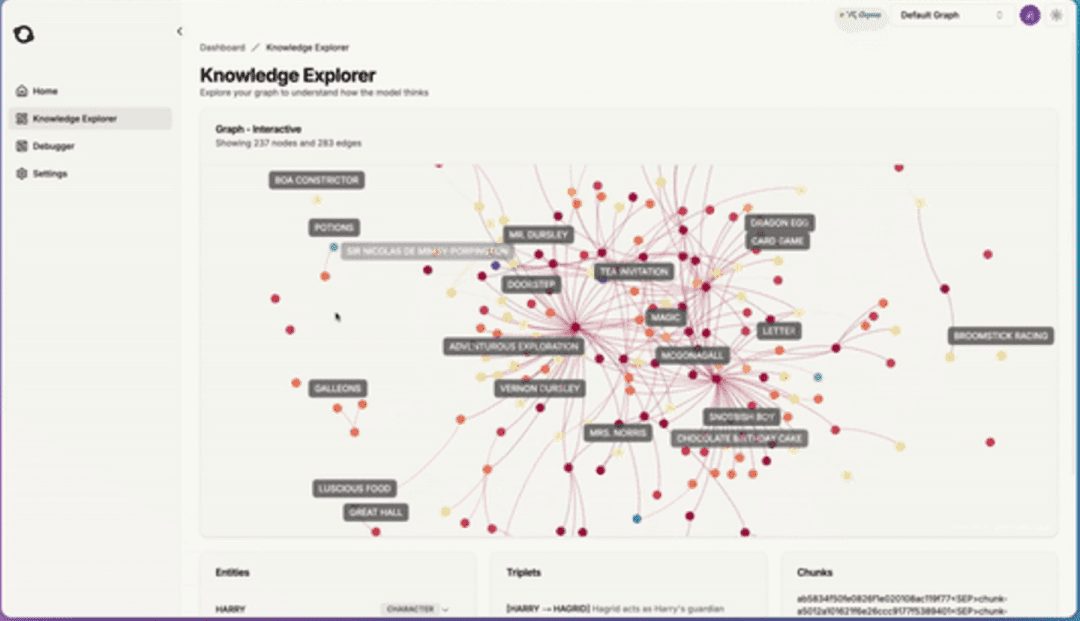

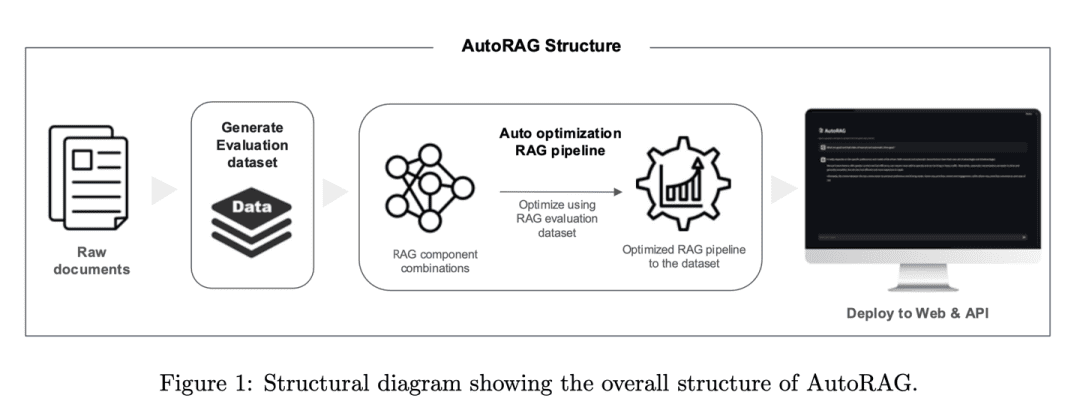
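The ranking step can be illustrated with personalized PageRank computed by power iteration. This sketch assumes a personalized variant in which random jumps return to the nodes matched by the query (the `seeds`); the adjacency-dict format and parameter values are illustrative, not fast-graphrag's interface.

```python
def personalized_pagerank(graph, seeds, damping=0.85, iterations=50):
    """Power-iteration personalized PageRank on a directed adjacency dict.

    graph: node -> list of neighbour nodes; seeds: non-empty set of query nodes.
    Random jumps return to the seeds, so scores reflect importance relative
    to the query. Dangling nodes send their mass back to the seeds.
    """
    nodes = set(graph) | {n for nbrs in graph.values() for n in nbrs}
    teleport = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    rank = dict(teleport)
    for _ in range(iterations):
        new = {n: (1 - damping) * teleport[n] for n in nodes}
        for node in nodes:
            out = graph.get(node, [])
            if out:
                share = damping * rank[node] / len(out)
                for nbr in out:
                    new[nbr] += share
            else:
                for n in nodes:
                    new[n] += damping * rank[node] * teleport[n]
        rank = new
    return sorted(rank.items(), key=lambda kv: kv[1], reverse=True)
```

On the cycle A → B → C → A (plus D → C) with seed A, scores fall off along the cycle (A ≈ 0.389, then B, then C), and D, which nothing links to, scores 0.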

(62) AutoRAG [Tuner]

Tuner: Like an experienced sound engineer, it finds the best sound through systematic testing rather than guesswork. It automatically tries various combinations of RAG modules, just as a tuner tests different audio equipment to find the most harmonious setup.

- Thesis: AutoRAG: Automated Framework for optimization of Retrieval Augmented Generation Pipeline

- Project: https://github.com/Marker-Inc-Korea/AutoRAG_ARAGOG_Paper

The AutoRAG framework automatically identifies suitable RAG modules for a given dataset and explores and approximates the optimal combination of modules for that dataset. It systematically evaluates different RAG settings to optimize the choice of techniques, much as AutoML does in traditional machine learning, running extensive experiments to select RAG techniques and improve the efficiency and scalability of the RAG system.

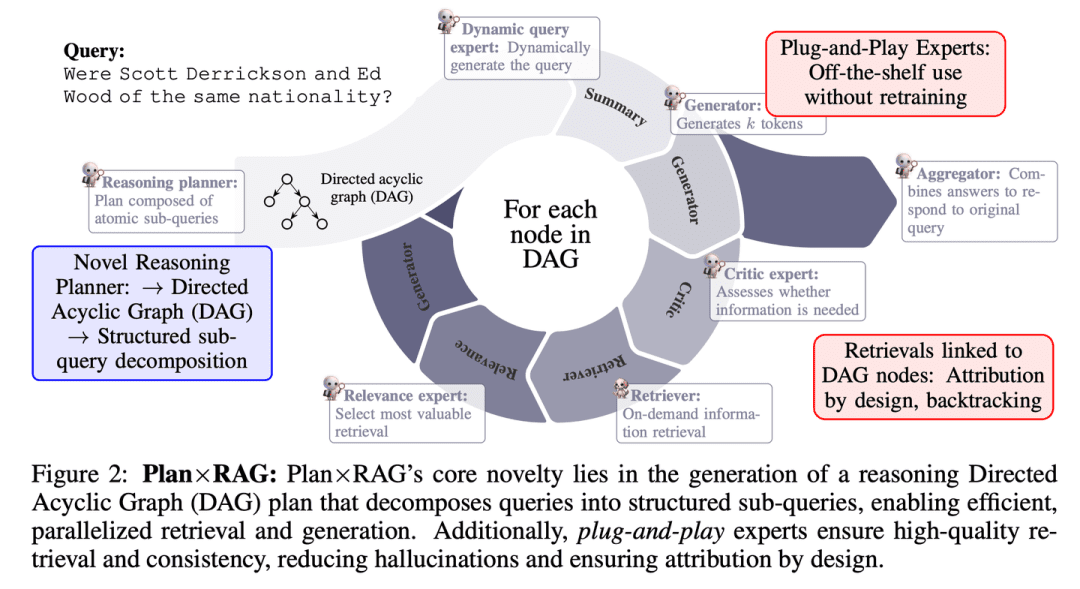
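One simple way to "approximate" the best combination without enumerating every pipeline is a greedy, stage-by-stage search. This is an assumption about the search strategy, not a description of AutoRAG's internals; the stage names and the `evaluate` callback are hypothetical.

```python
def greedy_stage_search(search_space, evaluate):
    """Approximate the best RAG pipeline one stage at a time.

    search_space: ordered mapping stage -> candidate modules (names are illustrative).
    evaluate: callable that scores a full pipeline config on a validation set.
    Each stage is optimised with earlier stages fixed at their chosen module and
    later stages left at their first candidate, so the number of evaluations is
    1 + the sum of the option counts rather than their product.
    """
    config = {stage: options[0] for stage, options in search_space.items()}
    best_score = evaluate(config)
    for stage, options in search_space.items():
        for option in options:
            trial = {**config, stage: option}
            score = evaluate(trial)
            if score > best_score:
                config, best_score = trial, score
    return config, best_score
```

With two options per stage across three stages, this costs 1 + 2 + 2 + 2 = 7 evaluations instead of the 8 an exhaustive grid would need; the gap grows quickly as stages and options are added, at the price of possibly missing interactions between stages.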

(63) Plan×RAG [Project Manager]

Project Manager: It plans before acting, breaking large tasks into smaller ones and assigning multiple "experts" to work on them in parallel. Each expert handles its own area, and the project manager consolidates the results at the end. This approach is not only faster and more accurate but also makes the source of every conclusion clear.

- Thesis: Plan × RAG: Planning-guided Retrieval Augmented Generation

Plan×RAG is a novel framework that extends the "retrieve-then-reason" paradigm of existing RAG frameworks to a "plan-then-retrieve" paradigm. Plan×RAG formulates the reasoning plan as a directed acyclic graph (DAG) that decomposes the query into interrelated atomic sub-queries. Answer generation follows the DAG structure, which significantly improves efficiency by parallelizing retrieval and generation. While state-of-the-art RAG solutions require extensive data generation and fine-tuning of language models (LMs), Plan×RAG incorporates frozen LMs as plug-and-play experts to generate high-quality answers.

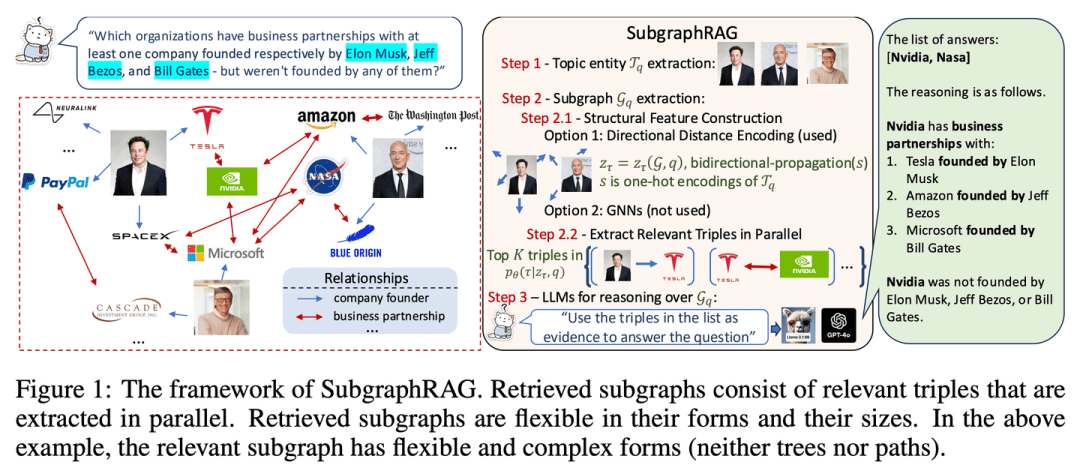
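Executing the plan as a DAG is where the parallelism comes from: every sub-query whose parents are already answered can run at the same time. Below is a minimal sketch using Python's standard `graphlib` (3.9+) and a thread pool; `answer_fn` is a hypothetical stand-in for retrieval plus a frozen LM expert, and the dict-of-dependencies plan format is an assumption.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def execute_plan(dag, answer_fn):
    """Run atomic sub-queries in DAG order, parallelising independent ones.

    dag: sub-query -> set of sub-queries it depends on.
    answer_fn(subquery, parent_answers) stands in for retrieval + a frozen LM.
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    answers = {}
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            ready = list(sorter.get_ready())
            # Every node in `ready` has all parents answered, so they run together.
            futures = {
                q: pool.submit(answer_fn, q, {p: answers[p] for p in dag.get(q, ())})
                for q in ready
            }
            for q, fut in futures.items():
                answers[q] = fut.result()
                sorter.done(q)
    return answers
```

For a plan `{"q1": set(), "q2": set(), "q3": {"q1", "q2"}}`, q1 and q2 are answered concurrently, and q3 runs once both results are available.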

(64) SubgraphRAG [Locator]

Locator: Instead of searching for a needle in a haystack, it precisely draws a small map of the relevant knowledge so the AI can find answers quickly.

- Thesis: Simple is Effective: The Roles of Graphs and Large Language Models in Knowledge-Graph-Based Retrieval-Augmented Generation

- Project: https://github.com/Graph-COM/SubgraphRAG

SubgraphRAG extends the KG-based RAG framework by retrieving subgraphs and using an LLM for reasoning and answer prediction. It combines a lightweight multilayer perceptron with a parallel triple-scoring mechanism for efficient and flexible subgraph retrieval, and encodes directional structural distances to improve retrieval effectiveness. The size of the retrieved subgraph can be adjusted flexibly to match the query's needs and the capability of the downstream LLM. This design balances model complexity and reasoning power, enabling a scalable and generalizable retrieval process.

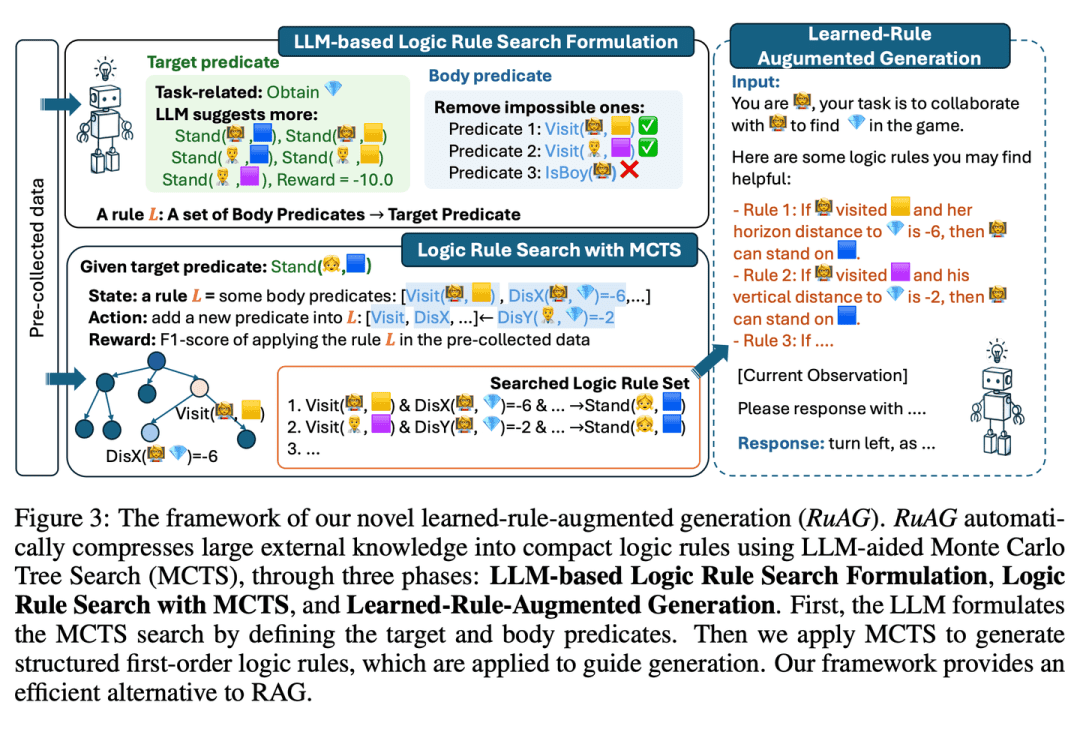

(65) RuAG [Alchemist]

Alchemist: Like an alchemist, it distills massive amounts of data into clear logical rules and expresses them in plain language, making AI smarter in practical applications.

- Thesis: RuAG: Learned-rule-augmented Generation for Large Language Models

RuAG aims to enhance the reasoning capabilities of large language models (LLMs) by automatically distilling large volumes of offline data into interpretable first-order logic rules and injecting them into LLMs. The framework uses Monte Carlo Tree Search (MCTS) to discover logical rules and translates these rules into natural language, enabling knowledge injection and seamless integration into downstream LLM tasks. The paper evaluates the framework on public and private industrial tasks, demonstrating its potential to enhance LLM capabilities across diverse tasks.

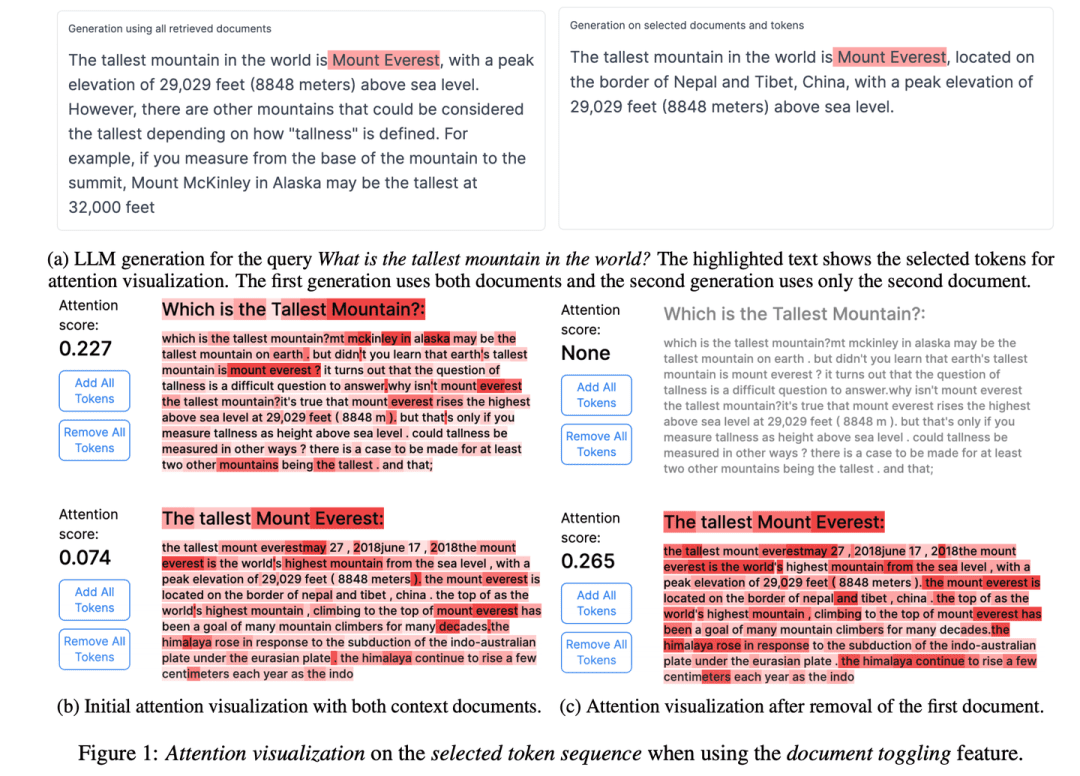

(66) RAGViz [See-Through Eye]

See-Through Eye: It makes the RAG system transparent, showing which sentence the model is attending to. Like a doctor reading an X-ray, you can spot the problem at a glance.

- Thesis: RAGViz: Diagnose and Visualize Retrieval-Augmented Generation

- Project: https://github.com/cxcscmu/RAGViz

RAGViz visualizes the retrieved documents and the model's attention over them, helping users understand the interaction between generated tokens and retrieved documents; it can be used to diagnose and visualize RAG systems.

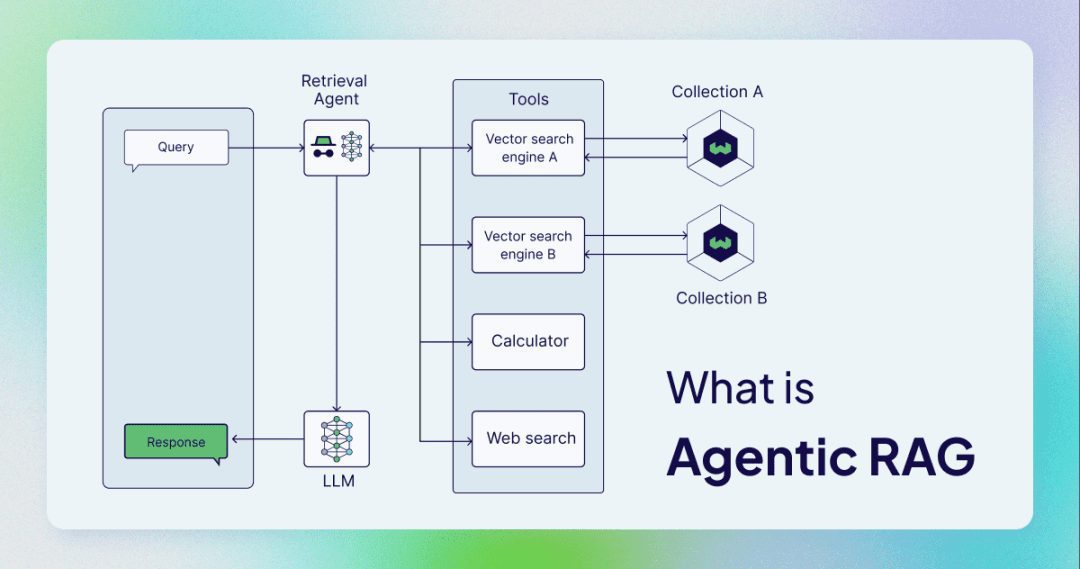

(67) AgenticRAG [Intelligent Assistant]

Intelligent Assistant: No longer a simple search-and-copy tool, it acts like a trusted personal secretary. Like a capable administrator, it knows not only how to look up information but also when to make a call, when to hold a meeting, and when to ask for instructions.

AgenticRAG refers to RAG implemented with AI agents. Specifically, it incorporates AI agents into the RAG pipeline to orchestrate its components and perform additional actions beyond simple information retrieval and generation, overcoming the limitations of non-agentic pipelines.

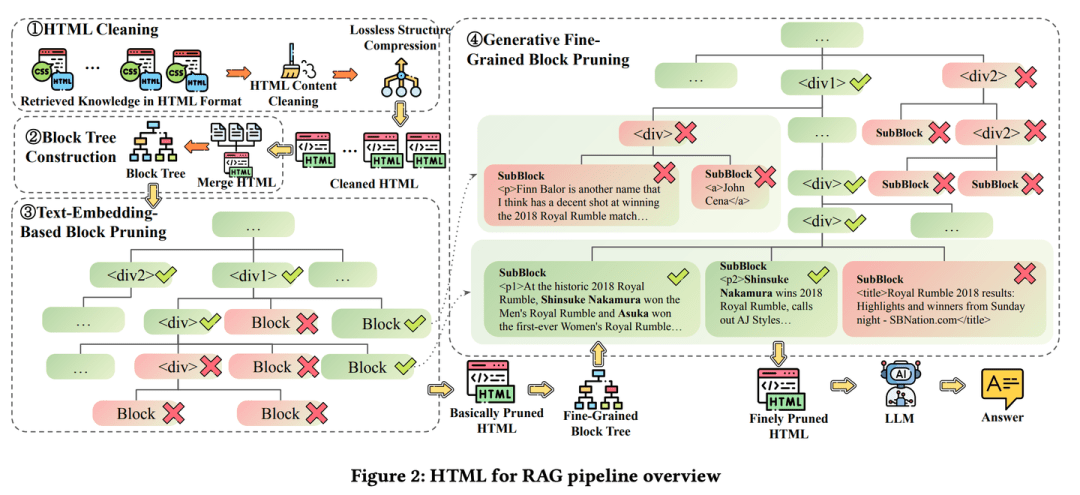

(68) HtmlRAG [Typographer]

Typographer: It doesn't keep knowledge as an unformatted ledger but lays it out like a magazine, with bold where bold belongs and red where red belongs. Like a picky editor who believes content alone isn't enough and layout matters too, it makes the key points visible at a glance.

- Thesis: HtmlRAG: HTML is Better Than Plain Text for Modeling Retrieved Knowledge in RAG Systems

- Project: https://github.com/plageon/HtmlRAG

HtmlRAG uses HTML rather than plain text as the format of retrieved knowledge in RAG, on the grounds that HTML models knowledge from external documents better than plain text and that most LLMs understand HTML well. HtmlRAG proposes HTML cleaning, compression, and pruning strategies to shorten the HTML while minimizing information loss.

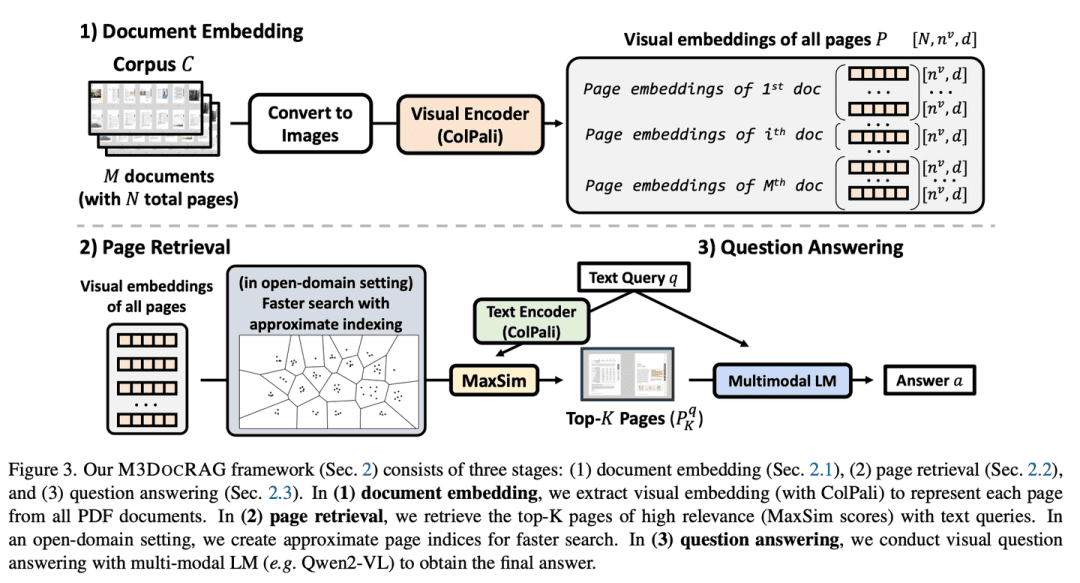
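The cleaning step can be approximated with the standard-library HTML parser, assuming cleaning means dropping `<script>`/`<style>` content, comments, and tag attributes while keeping the tag structure. This is an illustrative sketch with hypothetical names (`HtmlCleaner`, `clean_html`); HtmlRAG's compression and pruning stages are not shown.

```python
import re
from html.parser import HTMLParser


class HtmlCleaner(HTMLParser):
    """Strip scripts, styles, comments and attributes but keep the tag tree."""

    SKIP = {"script", "style"}                           # content dropped entirely
    VOID = {"br", "hr", "img", "input", "meta", "link"}  # elements without a closing tag

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.out = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif not self.skip_depth:
            self.out.append(f"<{tag}>")  # attrs (class, style, id, ...) discarded

    def handle_endtag(self, tag):
        if tag in self.SKIP:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif not self.skip_depth and tag not in self.VOID:
            self.out.append(f"</{tag}>")

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.out.append(re.sub(r"\s+", " ", data))  # collapse whitespace runs

    # handle_comment is not overridden, so HTML comments are silently dropped.


def clean_html(html: str) -> str:
    cleaner = HtmlCleaner()
    cleaner.feed(html)
    cleaner.close()
    return "".join(cleaner.out)
```

The cleaned output keeps headings, emphasis, and nesting (the structure the LLM benefits from) while discarding bytes that carry no knowledge.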

(69) M3DocRAG [Multisensory Expert]

Multisensory Expert: It doesn't just read text; it can also understand pictures and recognize sounds. Like an all-round contestant on a variety show, it reads both images and words, hops across pages when it needs to, and zooms in on details when it needs to, so no challenge is too hard.

- Thesis: M3DocRAG: Multi-modal Retrieval is What You Need for Multi-page Multi-document Understanding

M3DocRAG is a novel multimodal RAG framework that flexibly adapts to various document contexts (closed-domain and open-domain), question hops (single-hop and multi-hop), and evidence modalities (text, charts, figures, etc.). M3DocRAG uses a multimodal retriever and a multimodal language model (MLM) to find relevant documents and answer questions, so it can efficiently process one or many documents while preserving visual information.

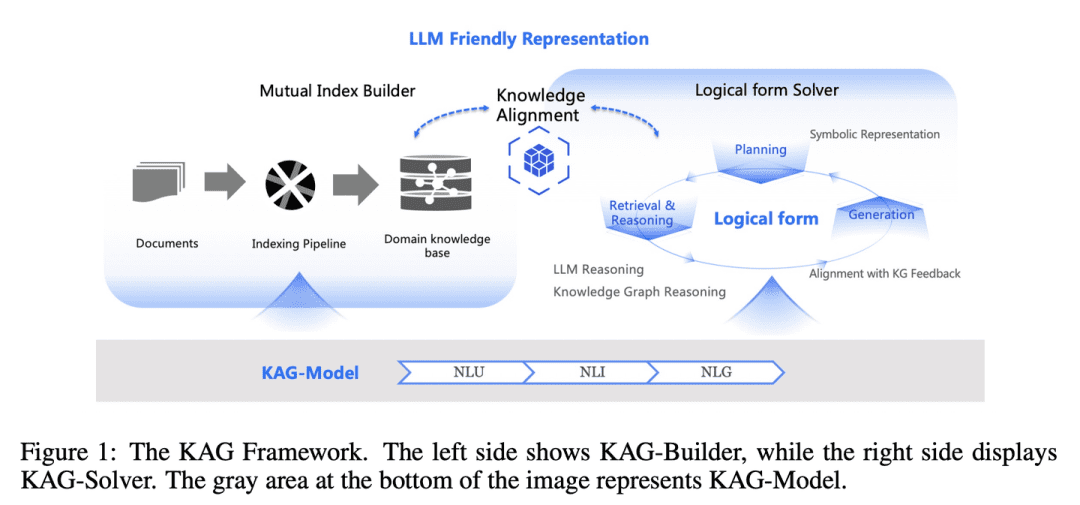

(70) KAG [Logic Master]

Logic Master: Rather than finding similar answers by gut feeling, it works out the cause-and-effect relationships between pieces of knowledge. Like a rigorous math teacher, it needs to know not only what the answer is but also how that answer was derived, step by step.

- Thesis: KAG: Boosting LLMs in Professional Domains via Knowledge Augmented Generation

- Project: https://github.com/OpenSPG/KAG

In RAG, the gap between vector similarity and the relevance required for knowledge reasoning, together with insensitivity to knowledge logic (e.g., numerical values, temporal relations, expert rules), limits the effectiveness of professional-domain services. KAG is designed to leverage the strengths of knowledge graphs (KGs) and vector retrieval to address these challenges, bidirectionally enhancing large language models (LLMs) and knowledge graphs through five key aspects to improve generation and reasoning performance: (1) LLM-friendly knowledge representation, (2) mutual indexing between the knowledge graph and raw chunks, (3) a logical-form-guided hybrid reasoning engine, (4) knowledge alignment with semantic reasoning, and (5) model capability enhancement for KAG.

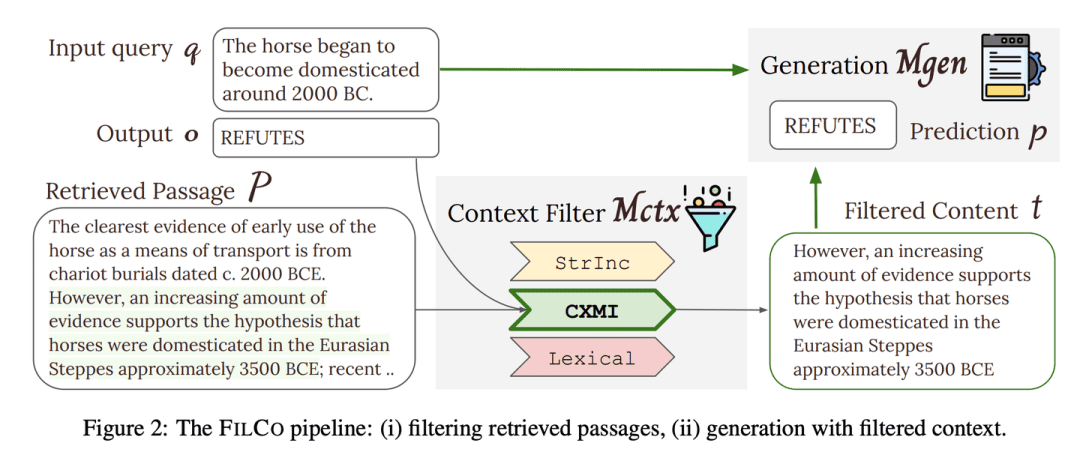
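Aspect (2), mutual indexing between the knowledge graph and raw chunks, is the easiest part to picture in code. The following is a minimal sketch that assumes a toy in-memory store. Graph entities point to the chunks that mention them, and chunks point back to their entities, so every graph hop can be grounded in source text. The class `MutualIndex`, its methods, and the sample facts are hypothetical illustrations, not the OpenSPG/KAG API.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


class MutualIndex:
    """Hypothetical two-way index between KG triples and the text chunks they came from."""

    def __init__(self) -> None:
        self.chunks: Dict[str, str] = {}
        self.edges: Dict[str, List[Tuple[str, str]]] = defaultdict(list)  # head -> [(relation, tail)]
        self.entity_to_chunks: Dict[str, Set[str]] = defaultdict(set)
        self.chunk_to_entities: Dict[str, Set[str]] = defaultdict(set)

    def add_chunk(self, chunk_id: str, text: str, triples: List[Tuple[str, str, str]]) -> None:
        """Store a chunk and link each of its triples to it in both directions."""
        self.chunks[chunk_id] = text
        for head, relation, tail in triples:
            self.edges[head].append((relation, tail))
            for entity in (head, tail):
                self.entity_to_chunks[entity].add(chunk_id)
                self.chunk_to_entities[chunk_id].add(entity)

    def follow(self, entity: str, relation: str) -> List[str]:
        """One symbolic reasoning step: follow a relation edge in the graph."""
        return [tail for rel, tail in self.edges[entity] if rel == relation]

    def evidence(self, entities: List[str]) -> List[str]:
        """Ground graph results in raw text: chunks that mention every given entity."""
        if not entities:
            return []
        ids = set.intersection(*(self.entity_to_chunks[e] for e in entities))
        return [self.chunks[i] for i in sorted(ids)]


index = MutualIndex()
index.add_chunk("c1", "Acme Corp is headquartered in Springfield.",
                [("Acme Corp", "headquartered_in", "Springfield")])
index.add_chunk("c2", "Springfield is a city in Illinois.",
                [("Springfield", "located_in", "Illinois")])

# Two-hop question: in which state is Acme Corp headquartered?
city = index.follow("Acme Corp", "headquartered_in")[0]
state = index.follow(city, "located_in")[0]
for head, tail in [("Acme Corp", city), (city, state)]:
    print(f"{head} -> {tail}:", index.evidence([head, tail]))
```

Keeping both directions of the index is the design point here. Graph hops give precise, logic-friendly steps, while the chunk links let the generator cite the original text as evidence for each step.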

(71) FILCO [Screener]

Screener: Like a meticulous editor, it is adept at recognizing and keeping the most valuable information in large amounts of text, ensuring that every piece of content passed to the AI is accurate and relevant.

- Thesis: Learning to Filter Context for Retrieval-Augmented Generation

- Project: https://github.com/zorazrw/filco

FILCO improves the quality of the context provided to the generator by identifying useful contexts based on lexical and information-theoretic approaches and by training context filtering models to filter the retrieved contexts.
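FILCO's lexical measures can be sketched in a few lines. The sketch below assumes word-level tokenization and an arbitrary 0.5 threshold. It scores each retrieved sentence by string inclusion (does the sentence contain the reference answer?) and by unigram F1 overlap with that answer, and keeps only the sentences that pass either test. Our understanding is that the paper uses measures like these to select oracle context for training the filter model, which then filters without seeing the answer at inference. The exact reference text per task may differ, and the information-theoretic measure (which needs a language model) is omitted. All function names are hypothetical.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"\w+", text.lower())


def string_inclusion(sentence: str, answer: str) -> bool:
    """True if the reference answer appears verbatim (case-insensitive) in the sentence."""
    return answer.lower() in sentence.lower()


def unigram_f1(sentence: str, answer: str) -> float:
    """Unigram F1 between the sentence tokens and the answer tokens."""
    sent, ans = Counter(tokenize(sentence)), Counter(tokenize(answer))
    overlap = sum((sent & ans).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(sent.values())
    recall = overlap / sum(ans.values())
    return 2 * precision * recall / (precision + recall)


def filter_context(sentences: List[str], answer: str, threshold: float = 0.5) -> List[str]:
    """Keep sentences that contain the answer or overlap with it strongly enough."""
    return [
        s for s in sentences
        if string_inclusion(s, answer) or unigram_f1(s, answer) >= threshold
    ]


retrieved = [
    "The Eiffel Tower was completed in 1889.",
    "Paris hosts many museums.",
    "Gustave Eiffel's company designed and built the tower.",
]
kept = filter_context(retrieved, answer="1889")
print(kept)
```

Filtering at the sentence level rather than the passage level is what lets the generator see less noise. Only the sentence that actually supports the answer survives.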

(72) LazyGraphRAG [Actuary]

Actuary: It saves every step it can and spends the expensive large model only where it counts. Like a savvy household shopper, it doesn't buy the moment it sees a sale at the supermarket; it compares prices first and then decides where its money gets the best value.

- Project: https://github.com/microsoft/graphrag

LazyGraphRAG is a novel approach to graph-enhanced retrieval-augmented generation (RAG). It significantly reduces indexing and query costs while matching or outperforming competing methods on answer quality, which makes it highly scalable and efficient across a wide range of use cases. Its key idea is to defer LLM use. During indexing, LazyGraphRAG processes the text with lightweight NLP techniques only and postpones LLM calls until an actual query arrives. This "lazy" strategy avoids high upfront indexing costs and uses resources efficiently.
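The LLM-free indexing step can be illustrated with a small sketch. It assumes a crude concept extractor, where distinct non-stopword words of four or more letters stand in for real noun-phrase extraction. It also uses connected components as a simple stand-in for the hierarchical community extraction described for LazyGraphRAG. No LLM is called at any point. All names and the sample chunks are hypothetical.

```python
import re
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Set

STOPWORDS = {"the", "and", "with", "from", "that", "this", "into", "were", "was"}


def extract_concepts(chunk: str) -> Set[str]:
    """Crude stand-in for noun-phrase extraction: distinct non-stopword words of 4+ letters."""
    words = re.findall(r"[a-z]+", chunk.lower())
    return {w for w in words if len(w) >= 4 and w not in STOPWORDS}


def build_concept_graph(chunks: List[str]) -> Dict[str, Set[str]]:
    """Link two concepts whenever they co-occur in the same chunk (no LLM involved)."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for chunk in chunks:
        concepts = extract_concepts(chunk)
        for concept in concepts:
            graph.setdefault(concept, set())  # keep isolated concepts as nodes too
        for a, b in combinations(sorted(concepts), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def communities(graph: Dict[str, Set[str]]) -> List[Set[str]]:
    """Connected components as a simple stand-in for hierarchical community detection."""
    seen: Set[str] = set()
    result: List[Set[str]] = []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, component = [start], set()
        while stack:  # iterative depth-first search
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(graph[node] - component)
        seen |= component
        result.append(component)
    return result


chunks = [
    "Solar panels convert sunlight into electricity.",
    "Battery storage smooths solar electricity output.",
    "Wheat harvests depend on rainfall.",
]
graph = build_concept_graph(chunks)
groups = communities(graph)
for group in groups:
    print(sorted(group))
```

Everything above is cheap string and graph processing, which is where the indexing-cost savings come from. The LLM is only needed once a query arrives.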

| Aspect | Traditional GraphRAG | LazyGraphRAG |
|---|---|---|
| Indexing stage | Extracts and describes entities and relationships with an LLM; generates summaries for each entity and relationship; summarizes community content with an LLM; generates embedding vectors; writes Parquet files | Extracts concepts and co-occurrence relationships with NLP techniques; builds a concept graph; extracts community structure; no LLM in the indexing phase |
| Query stage | Answers queries directly from community summaries; does not refine the query or focus on the relevant information | Uses an LLM to refine the query and generate subqueries; selects text chunks and communities by relevance; extracts and generates answers with an LLM; focuses on relevant content for more precise answers |
| LLM calls | Heavy use in both the indexing and query phases | No LLM in the indexing phase; LLM called only at query time; more efficient use of the LLM |
| Cost efficiency | High indexing cost and long indexing time; query performance limited by index quality | Indexing cost only 0.1% of traditional GraphRAG; efficient queries with good answer quality |
| Data storage | Indexed data is written to Parquet files, suitable for large-scale storage and processing | Indexed data is stored in lightweight formats (e.g., JSON, CSV), better suited to rapid development and small datasets |
| Usage scenarios | Scenarios not sensitive to compute and time; a complete knowledge graph is built in advance and stored as Parquet files for later import into a database for complex analysis | Scenarios that need fast indexing and response; ideal for one-off queries, exploratory analysis, and streaming data processing |
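The query-stage row is where LazyGraphRAG spends its LLM budget. Microsoft describes the cost/quality trade-off as being governed mainly by a relevance test budget. The sketch below models that idea loosely, under stated assumptions. Candidates are first pre-ranked with a cheap lexical score, and only the top `budget` candidates are sent to an expensive relevance judge. The `overlap_judge` function is a keyword stub standing in for an LLM call, and every name here is hypothetical.

```python
from typing import Callable, List, Tuple


def cheap_rank(query: str, chunks: List[str]) -> List[str]:
    """Cheap pre-ranking by shared words with the query; no LLM calls."""
    q = set(query.lower().split())
    return sorted(chunks, key=lambda c: len(q & set(c.lower().split())), reverse=True)


def lazy_select(
    query: str,
    chunks: List[str],
    judge: Callable[[str, str], bool],
    budget: int,
) -> Tuple[List[str], int]:
    """Send at most `budget` top-ranked candidates to the judge; return kept chunks and calls used."""
    kept: List[str] = []
    calls = 0
    for chunk in cheap_rank(query, chunks):
        if calls == budget:
            break
        calls += 1
        if judge(query, chunk):
            kept.append(chunk)
    return kept, calls


def overlap_judge(query: str, chunk: str) -> bool:
    """Stub for an LLM relevance test: 'relevant' if at least two words are shared."""
    return len(set(query.lower().split()) & set(chunk.lower().split())) >= 2


chunks = [
    "battery storage smooths solar output",
    "solar panels convert sunlight",
    "wheat harvests depend on rainfall",
]
kept, calls = lazy_select("how does battery storage help solar", chunks, overlap_judge, budget=2)
print(kept, calls)
```

Raising `budget` buys more relevance checks (higher cost, potentially better recall), while lowering it keeps queries cheap. The third chunk is never sent to the judge at all.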

RAG Survey

- A Survey on Retrieval-Augmented Text Generation

- Retrieving Multimodal Information for Augmented Generation: A Survey

- Retrieval-Augmented Generation for Large Language Models: A Survey

- Retrieval-Augmented Generation for AI-Generated Content: A Survey

- A Survey on Retrieval-Augmented Text Generation for Large Language Models

- RAG and RAU: A Survey on Retrieval-Augmented Language Model in Natural Language Processing

- A Survey on RAG Meeting LLMs: Towards Retrieval-Augmented Large Language Models

- Evaluation of Retrieval-Augmented Generation: A Survey

- Retrieval-Augmented Generation for Natural Language Processing: A Survey

- Graph Retrieval-Augmented Generation: A Survey

- A Comprehensive Survey of Retrieval-Augmented Generation (RAG): Evolution, Current Landscape and Future Directions

- Retrieval Augmented Generation (RAG) and Beyond: A Comprehensive Survey on How to Make Your LLMs Use External Data More Wisely

RAG Benchmark

- Benchmarking Large Language Models in Retrieval-Augmented Generation

- RECALL: A Benchmark for LLMs Robustness against External Counterfactual Knowledge

- ARES: An Automated Evaluation Framework for Retrieval-Augmented Generation Systems

- RAGAS: Automated Evaluation of Retrieval Augmented Generation

- CRUD-RAG: A Comprehensive Chinese Benchmark for Retrieval-Augmented Generation of Large Language Models

- FeB4RAG: Evaluating Federated Search in the Context of Retrieval Augmented Generation

- CodeRAG-Bench: Can Retrieval Augment Code Generation?

- Long2RAG: Evaluating Long-Context & Long-Form Retrieval-Augmented Generation with Key Point Recall

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
