General Introduction

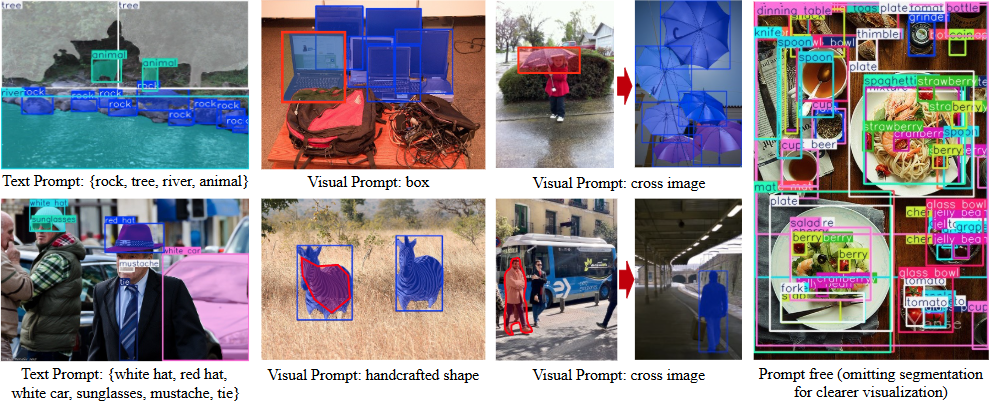

YOLOE is an open-source project developed by the Multimedia Intelligence Group (THU-MIG) at the School of Software, Tsinghua University; its full name is "You Only Look Once Eye". Built on the PyTorch framework, it extends the YOLO series to detect and segment arbitrary objects in real time. The project is hosted on GitHub, and its core feature is support for three modes: text prompts, visual prompts, and prompt-free detection. Users can specify targets with text or images, or let the model automatically recognize more than 1,200 object categories. According to the official figures, on the LVIS dataset YOLOE is 1.4x faster at inference than YOLO-Worldv2 and needs 3x less training cost, while maintaining high accuracy. The model can also be re-parameterized into a standard YOLOv8 or YOLO11 model with no extra inference overhead, which makes it suitable for deployment on many kinds of devices.

Function List

- Supports real-time object detection to quickly recognize targets in images or videos.

- Provides instance segmentation to outline objects precisely.

- Supports text-prompted detection: the user enters text to specify the detection targets.

- Provides visual-prompt detection to recognize similar objects from a reference image.

- A built-in prompt-free mode automatically detects more than 1,200 common object categories.

- The model can be re-parameterized into a standard YOLOv8/YOLO11 model with no extra inference overhead.

- Provides pre-trained models in several sizes (S/M/L) to suit different performance requirements.

- Open-source code and documentation that developers can modify and extend.

Usage Guide

Using YOLOE involves two parts: installation and operation. The detailed steps below will help you get started.

Installation process

- Preparing the environment

YOLOE requires Python 3.10 and PyTorch. Using Conda to create a virtual environment is recommended:

conda create -n yoloe python=3.10 -y

conda activate yoloe
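Before going further, a quick check can confirm the environment is ready. This is a minimal sketch using only the standard library: it reports the Python version, whether PyTorch is installed, and suggests a `--device` value. `check_environment` is a hypothetical helper, not part of YOLOE, and falling back to `cpu` when no CUDA GPU is visible is an assumption based on the note that YOLOE can also run on a CPU; the repository scripts may expect a different value.

```python
import importlib.util
import sys


def check_environment(required=(3, 10)):
    """Report Python version, PyTorch availability, and a suggested device string.

    Assumption: "cuda:0" (as in the commands in this guide) is suggested when
    PyTorch sees a CUDA GPU; otherwise "cpu" is suggested as a fallback.
    """
    report = {
        "python": sys.version.split()[0],
        # The guide targets Python 3.10, so compare major/minor only.
        "python_ok": sys.version_info[:2] == tuple(required),
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "device": "cpu",
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the check still runs without PyTorch

        if torch.cuda.is_available():
            report["device"] = "cuda:0"
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```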

- Cloning the code

Download the YOLOE project from GitHub:

git clone https://github.com/THU-MIG/yoloe.git

cd yoloe

- Installing dependencies

Install the required libraries, including CLIP, MobileCLIP, and the LVIS API, then download the MobileCLIP weights:

pip install -r requirements.txt

pip install git+https://github.com/THU-MIG/yoloe.git#subdirectory=third_party/CLIP

pip install git+https://github.com/THU-MIG/yoloe.git#subdirectory=third_party/ml-mobileclip

pip install git+https://github.com/THU-MIG/yoloe.git#subdirectory=third_party/lvis-api

wget https://docs-assets.developer.apple.com/ml-research/datasets/mobileclip/mobileclip_blt.pt
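After installing the dependencies, a short check can confirm that the packages import and the MobileCLIP weights were downloaded. This sketch assumes the module names `clip`, `mobileclip`, and `lvis` for the three packages above, and that `mobileclip_blt.pt` was saved in the repository root (where `wget` writes it when run from there). `missing_dependencies` is a hypothetical helper, not part of YOLOE.

```python
import importlib.util
from pathlib import Path

# Assumed import names: openai CLIP -> "clip", ml-mobileclip -> "mobileclip",
# lvis-api -> "lvis".
REQUIRED_MODULES = ("clip", "mobileclip", "lvis")
# File name from the wget command above; assumed to sit in the repository root.
MOBILECLIP_WEIGHTS = "mobileclip_blt.pt"


def missing_dependencies(repo_root="."):
    """Return the names of modules or files that still appear to be missing."""
    missing = [name for name in REQUIRED_MODULES
               if importlib.util.find_spec(name) is None]
    if not (Path(repo_root) / MOBILECLIP_WEIGHTS).is_file():
        missing.append(MOBILECLIP_WEIGHTS)
    return missing


if __name__ == "__main__":
    problems = missing_dependencies()
    print("All dependencies found." if not problems
          else f"Missing: {', '.join(problems)}")
```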

- Downloading a pre-trained model

YOLOE offers a variety of models, such as yoloe-v8l-seg.pt. Download one with the following command:

pip install huggingface-hub==0.26.3

huggingface-cli download jameslahm/yoloe yoloe-v8l-seg.pt --local-dir pretrain

Or load it automatically with Python:

from ultralytics import YOLOE

model = YOLOE.from_pretrained("jameslahm/yoloe-v8l-seg.pt")

- Verify Installation

Run a test command to check that the environment works:

python predict_text_prompt.py --source ultralytics/assets/bus.jpg --checkpoint pretrain/yoloe-v8l-seg.pt --names person dog cat --device cuda:0

Main Functions

1. Text prompt detection

- Description: Enter text to detect the corresponding objects.

- Procedure:

- Prepare an image, such as bus.jpg.

- Run the command, specifying the targets (e.g. "person, dog, cat"):

python predict_text_prompt.py --source ultralytics/assets/bus.jpg --checkpoint pretrain/yoloe-v8l-seg.pt --names person dog cat --device cuda:0

- View the results: the detected objects are labeled on the image.

- Tuning: If objects are missed, lower the confidence threshold:

--conf 0.001

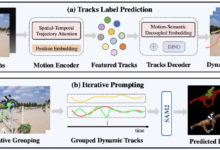
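When running text-prompt detection on many images or from another script, it can be convenient to build the command in Python. The sketch below reuses only the flags shown in the commands above (`--source`, `--checkpoint`, `--names`, `--device`, and the optional `--conf` from the tuning tip). `text_prompt_command` is a hypothetical helper, and the sketch assumes it is run from the repository root.

```python
import subprocess
import sys


def text_prompt_command(source, names, checkpoint="pretrain/yoloe-v8l-seg.pt",
                        device="cuda:0", conf=None):
    """Build the argument list for predict_text_prompt.py with the flags shown above."""
    cmd = [sys.executable, "predict_text_prompt.py",
           "--source", source,
           "--checkpoint", checkpoint,
           "--names", *names,  # one argument per class name, as in the CLI example
           "--device", device]
    if conf is not None:
        # Optional lower confidence threshold, as in the tuning tip (e.g. 0.001).
        cmd += ["--conf", str(conf)]
    return cmd


if __name__ == "__main__":
    cmd = text_prompt_command("ultralytics/assets/bus.jpg",
                              ["person", "dog", "cat"], conf=0.001)
    subprocess.run(cmd, check=True)  # raises CalledProcessError if the script fails
```

Using `sys.executable` runs the script with the same interpreter as the active Conda environment.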

2. Visual prompt detection

- Description: Detects similar objects using a reference image.

- Procedure:

- Prepare a reference image and a target image.

- Train the visual prompt module:

python tools/convert_segm2det.py

python train_vp.py

python tools/get_vp_segm.py

- Run the test:

python predict_visual_prompt.py --source test.jpg --ref reference.jpg --checkpoint pretrain/yoloe-v8l-seg.pt

- Check the output and confirm the results.

- Note: Reference images should be clear and show the object's distinctive features.
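Conceptually, visual-prompt detection compares what the reference image shows with candidate objects in the target image. The toy sketch below illustrates that idea with cosine similarity between embedding vectors: reference regions are averaged into one prompt vector, and candidate regions above a similarity threshold are kept. It is not YOLOE's visual prompt encoder, which works on features inside the network; the 2-D vectors, the averaging step, and the 0.8 threshold are made-up assumptions for illustration only.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def prompt_embedding(reference_embeddings):
    """Average one or more reference-region embeddings into a single prompt vector."""
    n = len(reference_embeddings)
    dim = len(reference_embeddings[0])
    return [sum(vec[i] for vec in reference_embeddings) / n for i in range(dim)]


def match_regions(region_embeddings, prompt, threshold=0.8):
    """Return indices of candidate regions similar enough to the prompt vector."""
    return [i for i, emb in enumerate(region_embeddings)
            if cosine(emb, prompt) >= threshold]


if __name__ == "__main__":
    prompt = prompt_embedding([[1.0, 0.0], [0.8, 0.2]])  # toy reference regions
    regions = [[0.9, 0.1], [0.0, 1.0], [1.0, 0.05]]       # toy candidate regions
    print(match_regions(regions, prompt))  # → [0, 2]
```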

3. Prompt-free detection

- Description: Automatically recognizes objects in images without any prompt.

- Procedure:

- Make sure the model is loaded with its built-in pre-trained vocabulary (1,200+ categories).

- Run the command:

python predict_prompt_free.py --source test.jpg --checkpoint pretrain/yoloe-v8l-seg.pt --device cuda:0

- View the results: all detected objects are labeled.

- Tuning: If detection is incomplete, increase the maximum number of detections:

--max_det 1000
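The two tuning options act on the model's candidate detections: a lower confidence threshold lets low-scoring candidates through, and a higher cap keeps more of them. The generic post-processing sketch below shows how the two interact; it is not YOLOE's actual code, and the `(label, score)` tuples and default values are illustrative assumptions.

```python
def filter_detections(detections, conf=0.25, max_det=300):
    """Keep detections scoring at least `conf`, highest first, capped at `max_det`.

    Each detection is a hypothetical (label, score) pair; defaults are illustrative.
    """
    kept = [d for d in detections if d[1] >= conf]
    kept.sort(key=lambda d: d[1], reverse=True)  # highest confidence first
    return kept[:max_det]


if __name__ == "__main__":
    dets = [("person", 0.9), ("dog", 0.3), ("cat", 0.004), ("bus", 0.6)]
    print(filter_detections(dets))                         # default threshold
    print(filter_detections(dets, conf=0.001))             # like --conf 0.001
    print(filter_detections(dets, conf=0.001, max_det=2))  # cap becomes the limit
```

With a threshold as low as 0.001, many more candidates survive, so the cap on the number of detections can become the limiting factor.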

4. Model conversion and deployment

- Description: Convert YOLOE to the YOLOv8/YOLO11 format for deployment on different devices.

- Procedure:

- Install the export tools:

pip install onnx coremltools onnxslim

- Run the export command:

python export.py --checkpoint pretrain/yoloe-v8l-seg.pt

- Supported output formats include TensorRT (GPU) and CoreML (iPhone).

- Performance: yoloe-v8l-seg.pt runs at 102.5 FPS on a T4 GPU and 27.2 FPS on an iPhone 12.
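Throughput figures are easier to compare against real-time requirements when converted to time per frame. Assuming the FPS values above measure frames processed one after another, the average latency is simply 1000 / FPS milliseconds; `fps_to_latency_ms` is an illustrative helper.

```python
def fps_to_latency_ms(fps):
    """Convert throughput in frames per second to average milliseconds per frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# FPS figures quoted above for yoloe-v8l-seg.pt.
for device, fps in {"T4 GPU": 102.5, "iPhone 12": 27.2}.items():
    print(f"{device}: {fps} FPS ~ {fps_to_latency_ms(fps):.1f} ms per frame")
```

This gives roughly 9.8 ms per frame on the T4 GPU and 36.8 ms per frame on the iPhone 12.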

5. Training custom models

- Description: Train YOLOE on your own dataset.

- Procedure:

- Prepare a dataset such as Objects365v1 or GQA.

- Generate segmentation annotations:

python tools/generate_sam_masks.py --img-path ../datasets/Objects365v1/images/train --json-path ../datasets/Objects365v1/annotations/objects365_train.json

- Generate a training cache:

python tools/generate_grounding_cache.py --img-path ../datasets/Objects365v1/images/train --json-path ../datasets/Objects365v1/annotations/objects365_train_segm.json

- Run training:

python train_seg.py

- Evaluate the model:

python val.py
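The training steps must run in order, since later steps appear to consume earlier outputs (for example, the cache step reads `objects365_train_segm.json`, which the mask-generation step presumably produces). The sketch below chains them with `subprocess.run(check=True)`, so the pipeline stops at the first failing step. It assumes it is started from the repository root and reuses the paths from the commands above; `run_pipeline` is a hypothetical helper, and `dry_run=True` only prints the commands.

```python
import subprocess
import sys

# Paths copied from the commands above; adjust them to where your dataset lives.
IMG_PATH = "../datasets/Objects365v1/images/train"
ANN_DIR = "../datasets/Objects365v1/annotations"

STEPS = [
    ["tools/generate_sam_masks.py", "--img-path", IMG_PATH,
     "--json-path", f"{ANN_DIR}/objects365_train.json"],
    ["tools/generate_grounding_cache.py", "--img-path", IMG_PATH,
     "--json-path", f"{ANN_DIR}/objects365_train_segm.json"],
    ["train_seg.py"],
    ["val.py"],
]


def run_pipeline(steps=STEPS, dry_run=False):
    """Run each step with the current interpreter, stopping at the first failure."""
    commands = [[sys.executable, *step] for step in steps]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return commands


if __name__ == "__main__":
    run_pipeline(dry_run=True)  # set dry_run=False to actually execute the steps
```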

Other tools

- Web demo: Launch the interface with Gradio:

pip install gradio==4.42.0

python app.py

Then visit http://127.0.0.1:7860 in a browser.

Application scenarios

- Security monitoring

Detects people or objects in video in real time and outlines them for security management.

- Intelligent transportation

Recognizes vehicles and pedestrians on the road to support traffic analysis or autonomous driving.

- Industrial quality control

Detects part defects using visual prompts, improving productivity.

- Scientific research

Processes experimental images and labels objects automatically, speeding up data processing.

Q&A

- What is the difference between YOLOE and YOLOv8?

YOLOE supports open-scene detection (text prompts, visual prompts, and prompt-free), while YOLOv8 is limited to a fixed set of categories. YOLOE can also be converted to YOLOv8 with no additional overhead.

- Do I need a GPU?

No. YOLOE can run on a CPU, but a GPU (e.g. with CUDA) is faster.

- What if detection is not accurate?

Lower the confidence threshold (--conf 0.001) or increase the maximum number of detections (--max_det 1000).

- What devices are supported?

PCs (TensorRT), iPhones (CoreML), and many other devices.