Voyage AI's voyage-multimodal-3 is a new state-of-the-art model that embeds text and images into the same vector space. In this post, I'll explain how to extract these multimodal embeddings from magazines, store them in a vector database (Weaviate), and run similarity searches over text and images using the same embedding vectors.

Embedding images and text in the same space lets us search multimodal content such as web pages, PDF files, magazines, books, brochures, and papers more accurately than with text-only embeddings. Why is this technique so interesting? Because text and images share one space, you can search for and retrieve text related to a specific image, and vice versa. For example, if you search for cats, you'll find images that show cats, but you'll also get the text that refers to those images, even if that text never explicitly uses the word "cat".

Let me show the difference between a traditional text-embedding similarity search and a search in a multimodal embedding space:

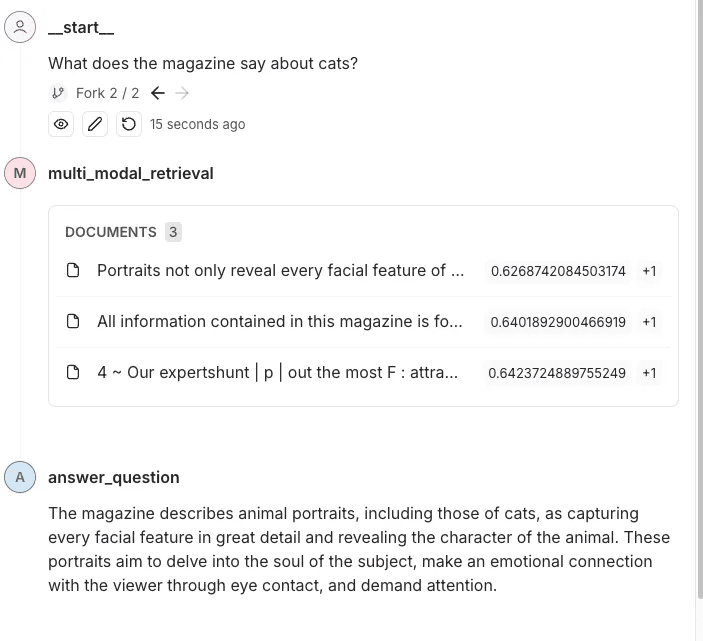

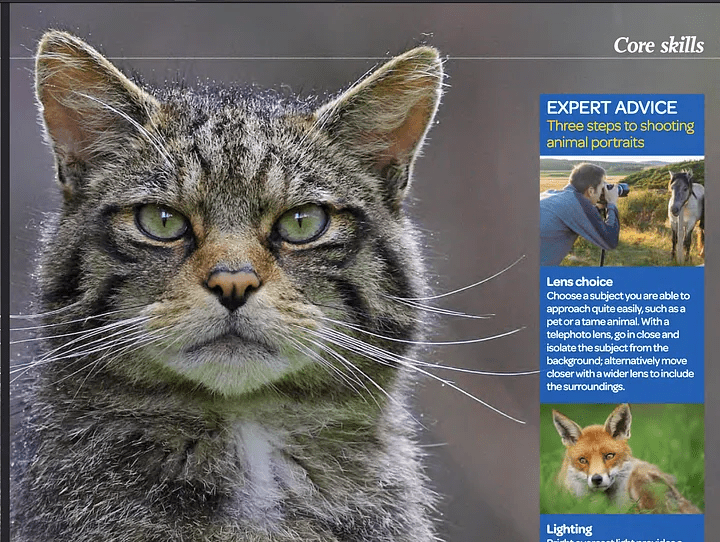

EXAMPLE QUESTION: What does the magazine say about cats?

A screenshot from a photo magazine -- OUTDOOR

Regular similarity search answer

The search results provided do not contain specific information about cats. They mention animal portraits and photography techniques, but do not explicitly mention cats or details related to them.

As the screenshot shows, the word "cat" never appears on the page; there is only a picture and an explanation of how to photograph the animal. Because the text never mentions cats, a regular text similarity search found nothing relevant.

Multimodal search answer

This magazine features a portrait of a cat that highlights the fine capture of its facial features and character. The text emphasizes how well-crafted animal portraits reach into the soul of their subjects and create an emotional connection with the viewer through compelling eye contact.

With multimodal search, we find the picture of the cat and retrieve the text linked to it. Passing both to the model gives it the context it needs to understand and answer the question.

How to build a multimodal embedding and retrieval pipeline

I will now describe how such a pipeline works in a few steps:

- We use Unstructured (a powerful Python library for data extraction) to extract text and images from PDF files.

- We use the voyage-multimodal-3 model to create vectors for text and images in the same vector space.

- We insert the vectors into a vector store (Weaviate).

- Finally, we run a similarity search and compare the text and image results.

Step 1: Set up the vector store

Here we have to do some manual work. Normally, Weaviate is a very easy-to-use vector store that vectorizes data automatically on insertion through its vectorizer modules. However, at the time of writing there is no module for voyage-multimodal-3, so we have to compute the embeddings ourselves. For this, we create a collection without a vectorizer module.

```python
import weaviate
from weaviate.classes.config import Configure

# Connect to a Weaviate instance running on this machine
client = weaviate.connect_to_local()

collection_name = "multimodal_demo"

# Remove any previous version of the collection so the demo starts fresh
client.collections.delete(collection_name)

try:
    client.collections.create(
        name=collection_name,
        vectorizer_config=Configure.Vectorizer.none(),  # no vectorizer for this collection
    )
    collection = client.collections.get(collection_name)
except Exception:
    collection = client.collections.get(collection_name)
```

Here, I'm running a local Weaviate instance in a Docker container.

Step 2: Extract text and images from the PDF

This is the key step in the process. We take a PDF containing text and images, extract the content (images and text), and group it into chunks. Each chunk becomes a list of elements containing strings (the actual text) and Python PIL images.

We will use the Unstructured library to do some of the heavy lifting, but we still need to write some logic and configure the library parameters.

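```python
from unstructured.partition.auto import partition
from unstructured.chunking.title import chunk_by_title

elements = partition(
    filename="./files/magazine_sample.pdf",
    strategy="hi_res",  # layout-aware strategy, required for image extraction
    extract_image_block_types=["Image", "Table"],
    extract_image_block_to_payload=True,  # store the images as base64 in the element metadata
)

# Group the elements into chunks, starting a new chunk at each title
chunks = chunk_by_title(elements)
```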

Here we must use the hi_res strategy and set extract_image_block_to_payload=True so that the images are exported to the payload (as base64 strings in the element metadata); we need them later for the actual embedding. Once all elements are extracted, we group them into chunks based on the titles in the document.

For more information, check out the Unstructured documentation on chunking.
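To make the grouping concrete, here is a deliberately simplified, pure-Python sketch of title-based chunking. It assumes each element is a plain dict with hypothetical "type" and "text" keys (loosely mirroring what element.to_dict() returns) and starts a new chunk at every "Title" element. The real chunk_by_title also enforces size limits and merges small sections, so treat this only as an illustration of the idea.

```python
from typing import Dict, List


def chunk_by_title_sketch(elements: List[Dict[str, str]]) -> List[List[Dict[str, str]]]:
    """Group elements into chunks, starting a new chunk at each Title element.

    Simplified illustration only: no size limits, no merging of small sections.
    """
    chunks: List[List[Dict[str, str]]] = []
    current: List[Dict[str, str]] = []
    for element in elements:
        # A title closes the previous section and opens a new one
        if element["type"] == "Title" and current:
            chunks.append(current)
            current = []
        current.append(element)
    if current:
        chunks.append(current)
    return chunks


# Hypothetical elements, shaped loosely like Unstructured's element.to_dict() output
elements = [
    {"type": "Title", "text": "LOCATION"},
    {"type": "Image", "text": ""},
    {"type": "NarrativeText", "text": "This iconic peak..."},
    {"type": "Title", "text": "NEXT SECTION"},
    {"type": "NarrativeText", "text": "More text."},
]

for chunk in chunk_by_title_sketch(elements):
    print([element["type"] for element in chunk])
```

Each printed list is one chunk; an image stays in the same chunk as the text under the same title, which is what later lets a single vector cover both.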

In the following script, we use these chunks to produce two lists:

- A list of objects that we send to voyage-multimodal-3 to create the vectors

- A list containing the metadata extracted by Unstructured. We need this metadata because we add it to the vector store alongside the vectors; it gives us additional attributes for filtering and tells us something about the retrieved data.
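As an example of why the metadata matters, here is a minimal in-memory sketch of filtering chunks by metadata. The records and their values are made up for illustration and use a subset of the keys the script below extracts; in Weaviate itself you would pass a filter to the query instead (the v4 Python client exposes this through Filter.by_property in weaviate.classes.query).

```python
from typing import Any, Dict, List


def filter_by_metadata(records: List[Dict[str, Any]], **conditions: Any) -> List[Dict[str, Any]]:
    """Keep only the records whose metadata matches every key=value condition."""
    return [
        record
        for record in records
        if all(record.get(key) == value for key, value in conditions.items())
    ]


# Hypothetical metadata dicts, for illustration only
records = [
    {"text": "Kirkjufell at sunrise", "filename": "magazine_sample.pdf", "page_number": 3, "filetype": "application/pdf"},
    {"text": "Portrait tips", "filename": "magazine_sample.pdf", "page_number": 7, "filetype": "application/pdf"},
]

for record in filter_by_metadata(records, page_number=3):
    print(record["text"])
```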

```python
import base64
import io

import PIL.Image
from unstructured.staging.base import elements_from_base64_gzipped_json

embedding_objects = []
embedding_metadatas = []

for chunk in chunks:
    chunk_dict = chunk.to_dict()
    embedding_object = []

    metadata_dict = {
        "text": chunk_dict["text"],
        "filename": chunk_dict["metadata"]["filename"],
        "page_number": chunk_dict["metadata"]["page_number"],
        "last_modified": chunk_dict["metadata"]["last_modified"],
        "languages": chunk_dict["metadata"]["languages"],
        "filetype": chunk_dict["metadata"]["filetype"],
    }

    # The chunk text is the first item of the embedding object
    embedding_object.append(chunk_dict["text"])

    # Add the chunk's images to the embedding object
    if "orig_elements" in chunk_dict["metadata"]:
        base64_elements_str = chunk_dict["metadata"]["orig_elements"]
        eles = elements_from_base64_gzipped_json(base64_elements_str)
        image_data = []
        for ele in eles:
            if ele.to_dict()["type"] == "Image":
                base64_image = ele.to_dict()["metadata"]["image_base64"]
                image_data.append(base64_image)
                pil_image = PIL.Image.open(io.BytesIO(base64.b64decode(base64_image)))
                # If the image is larger than 1000x1000, resize it while keeping the aspect ratio
                if pil_image.size[0] > 1000 or pil_image.size[1] > 1000:
                    ratio = min(1000 / pil_image.size[0], 1000 / pil_image.size[1])
                    new_size = (int(pil_image.size[0] * ratio), int(pil_image.size[1] * ratio))
                    pil_image = pil_image.resize(new_size, PIL.Image.Resampling.LANCZOS)
                embedding_object.append(pil_image)
        metadata_dict["image_data"] = image_data

    embedding_objects.append(embedding_object)
    embedding_metadatas.append(metadata_dict)
```
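The resize step in the script keeps each image at most 1000 pixels on its longest side while preserving the aspect ratio. The arithmetic is easier to check in isolation, so here is the same calculation pulled out into a small hypothetical helper, fit_within, that works on (width, height) tuples without needing PIL.

```python
from typing import Tuple


def fit_within(size: Tuple[int, int], max_side: int = 1000) -> Tuple[int, int]:
    """Scale (width, height) down so neither side exceeds max_side, keeping the aspect ratio.

    Sizes that already fit are returned unchanged, mirroring the script above.
    """
    width, height = size
    if width <= max_side and height <= max_side:
        return size
    ratio = min(max_side / width, max_side / height)
    return (int(width * ratio), int(height * ratio))


print(fit_within((2000, 762)))   # landscape: (1000, 381)
print(fit_within((1052, 2000)))  # portrait: (526, 1000)
print(fit_within((800, 600)))    # already small enough: (800, 600)
```

Taking the minimum of the two ratios guarantees that the longer side lands exactly on the limit and the shorter side shrinks by the same factor.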

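The result of this script is a list of lists (embedding_objects) whose contents look like this:

```
[['LOCATION\n\nKIRKJUFELL, ICELAND',
  <PIL.Image.Image image mode=RGB size=1000x381>,
  <PIL.Image.Image image mode=RGB size=526x1000>],
 ['This iconic peak was top of our list of Icelandic shooting locations, and before we went there we had already seen many photos taken from the nearby waterfalls. So it was the first place we headed to at sunrise, and we weren\'t disappointed. The waterfalls provide perfect foreground interest for this shot (top), and from this angle Kirkjufell is a perfectly pointed mountain. We spent an hour or two simply exploring the falls and found several different angles.']]
```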

Step 3: Vectorize the extracted data

In this step, we take the chunks created in the previous step and send them to Voyage AI using the voyageai Python package, which returns one embedding per chunk. We then store these embeddings in Weaviate.

```python
from dotenv import load_dotenv
import voyageai

load_dotenv()

# This automatically uses the VOYAGE_API_KEY environment variable.
# Alternatively, you can use vo = voyageai.Client(api_key="<your key>")
vo = voyageai.Client()

# Each input is a list of text strings and PIL image objects
inputs = embedding_objects

# Vectorize the inputs
result = vo.multimodal_embed(
    inputs,
    model="voyage-multimodal-3",
    truncation=False,
)
```
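For a magazine with many pages you may not want to send every chunk in a single request, since the API limits how many inputs (and how much text and image content) one call may contain; check the Voyage AI documentation for the current limits. A minimal sketch, assuming a hypothetical embed_fn that takes a list of inputs and returns one vector per input (for example a thin wrapper that returns vo.multimodal_embed(batch, model="voyage-multimodal-3").embeddings), could batch the calls like this. The batch size of 32 is an arbitrary placeholder, not an API limit.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def embed_in_batches(
    inputs: Sequence[T],
    embed_fn: Callable[[List[T]], List[List[float]]],
    batch_size: int = 32,  # arbitrary placeholder, not an API limit
) -> List[List[float]]:
    """Call embed_fn on consecutive slices of inputs and concatenate the vectors in order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    embeddings: List[List[float]] = []
    for start in range(0, len(inputs), batch_size):
        batch = list(inputs[start:start + batch_size])
        vectors = embed_fn(batch)
        # One vector per input keeps the embeddings aligned with the metadata list
        if len(vectors) != len(batch):
            raise RuntimeError("embed_fn returned a different number of vectors than inputs")
        embeddings.extend(vectors)
    return embeddings


# A fake embedding function so the sketch runs without an API key
def fake_embed(batch: List[List[str]]) -> List[List[float]]:
    return [[float(len(item[0]))] for item in batch]


print(embed_in_batches([["a"], ["bb"], ["ccc"]], fake_embed, batch_size=2))
```

Because the batches are processed in order and concatenated, the i-th vector still corresponds to the i-th entry of embedding_metadatas.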

If we access result.embeddings, we get a list containing all computed embedding vectors, one per input:

```
[[-0.052734375, -0.0164794921875, 0.050048828125, 0.01348876953125, -0.048095703125, ...]]
```

We can now use the batch.add_object method to store these embeddings in Weaviate in a single batch. Note that we also add the metadata through the properties parameter.

```python
with collection.batch.dynamic() as batch:
    for i, data_row in enumerate(embedding_objects):
        batch.add_object(
            properties=embedding_metadatas[i],  # metadata extracted by Unstructured
            vector=result.embeddings[i],  # vector computed by voyage-multimodal-3
        )
```
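Because the vectors and the metadata live in two parallel lists, it's worth checking that they line up before inserting anything. This is a minimal sketch with a hypothetical helper, build_records, that pairs each metadata dict with its vector and verifies that the counts match and that all vectors share one dimension; the resulting (properties, vector) pairs could then be passed to batch.add_object as in the loop above.

```python
from typing import Any, Dict, List, Sequence, Tuple


def build_records(
    metadatas: Sequence[Dict[str, Any]],
    embeddings: Sequence[Sequence[float]],
) -> List[Tuple[Dict[str, Any], List[float]]]:
    """Pair metadata dicts with vectors, failing loudly if the two lists do not line up."""
    if len(metadatas) != len(embeddings):
        raise ValueError(f"{len(metadatas)} metadata dicts but {len(embeddings)} vectors")
    dimensions = {len(vector) for vector in embeddings}
    if len(dimensions) > 1:
        raise ValueError(f"inconsistent vector dimensions: {sorted(dimensions)}")
    return [(dict(meta), list(vector)) for meta, vector in zip(metadatas, embeddings)]


# Made-up metadata and vectors, for illustration only
records = build_records(
    [{"text": "chunk one"}, {"text": "chunk two"}],
    [[0.1, 0.2, 0.3], [0.0, -0.5, 0.25]],
)
for properties, vector in records:
    print(properties["text"], len(vector))
```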

Step 4: Querying the data

We can now run a similarity search and query the data. This is straightforward because the process is similar to a regular similarity search over text embeddings. Since Weaviate has no module for voyage-multimodal-3, we must compute the query vector ourselves before passing it to Weaviate for the similarity search.
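Conceptually, a near_vector query ranks the stored vectors by their distance to the query vector and returns the closest ones. The in-memory sketch below assumes cosine distance (1 minus cosine similarity), which is Weaviate's default metric, and uses tiny made-up 3-dimensional vectors in place of real embeddings. It illustrates the ranking only; Weaviate itself uses an approximate HNSW index rather than this exhaustive scan.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 - cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def near_vector(
    query: Sequence[float],
    items: Dict[str, Sequence[float]],
    limit: int = 2,
) -> List[Tuple[str, float]]:
    """Exhaustively rank items by cosine distance to the query and keep the closest `limit`."""
    scored = [(name, cosine_distance(query, vector)) for name, vector in items.items()]
    scored.sort(key=lambda pair: pair[1])
    return scored[:limit]


# Made-up vectors; in the real pipeline these come from voyage-multimodal-3
items = {
    "waterfall chunk (text + photos)": [0.9, 0.1, 0.0],
    "portrait chunk": [0.0, 1.0, 0.2],
    "gear review chunk": [0.1, 0.2, 1.0],
}
query = [1.0, 0.0, 0.1]  # stands in for the embedded question

for name, distance in near_vector(query, items):
    print(f"{name}: {distance:.3f}")
```

Because the query and the chunks are embedded by the same model into one space, the chunk whose combined text and images are closest to the question wins, whether the match came from the words or the pictures.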

from weaviate.classes.query import MetadataQuery

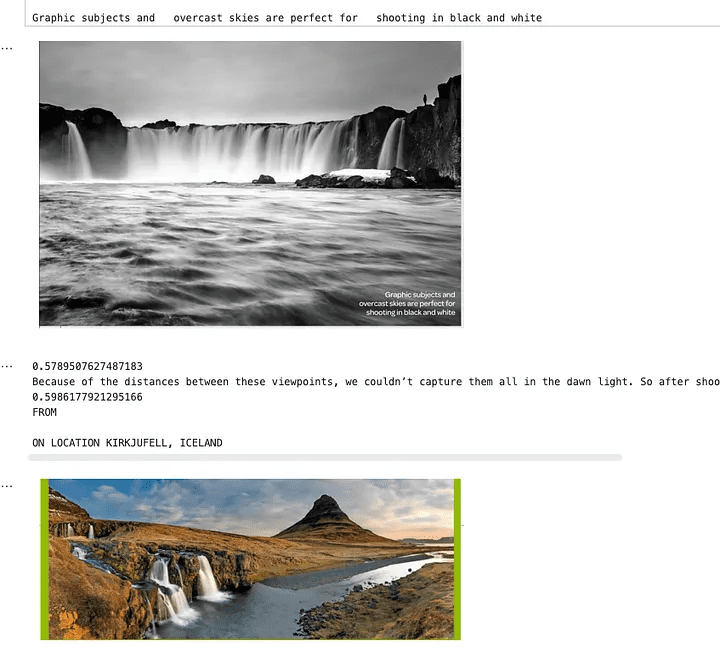

question = "杂志上关于瀑布说了什么?"

vector = vo.multimodal_embed([[question]], model="voyage-multimodal-3")

vector.embeddings[0]

response = collection.query.near_vector(

near_vector=vector.embeddings[0], # 您的查询向量在此处

limit=2,

return_metadata=MetadataQuery(distance=True)

)

# 显示结果

for o in response.objects:

print(o.properties['text'])

for image_data in o.properties['image_data']:

# 使用 PIL 显示图像

img = PIL.Image.open(io.BytesIO(base64.b64decode(image_data)))

width, height = img.size

if width > 500 or height > 500:

ratio = min(500/width, 500/height)

new_size = (int(width * ratio), int(height * ratio))

img = img.resize(new_size)

display(img)

print(o.metadata.distance)

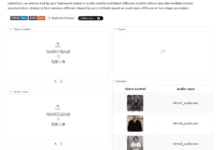

The image below shows that a search for waterfalls will return text and images related to this search query. As you can see, the photos reflect waterfalls, but the text itself does not mention them. The text is about an image with a waterfall in it, which is why it was also retrieved. This is not possible for regular text embedding searches.

An image showing similarity search results

Step 5: Add it to a complete retrieval pipeline

Now that we've extracted the text and images from the magazine, created embeddings for them, added them to Weaviate, and set up our similarity search, I'll add everything to an end-to-end retrieval pipeline. In this example, I use LangGraph: the user asks a question about the magazine, and the pipeline answers it. Since the hard work is already done, this part is as easy as setting up a typical text-based retrieval pipeline.

I've moved some of the logic from the previous sections into separate modules. Here's how I integrated it into the LangGraph pipeline:

```python
import logging
from typing import Annotated, List, Sequence, TypedDict

from langchain_core.documents import Document
from langchain_core.messages import AIMessage, BaseMessage
from langgraph.graph import END, START, StateGraph
from langgraph.graph.message import add_messages

# BaseNodes and Weaviate are the author's own helper modules (not shown here);
# BaseNodes is expected to provide llm_factory and chain_factory.

logger = logging.getLogger(__name__)


class MultiModalRetrievalState(TypedDict):
    messages: Annotated[Sequence[BaseMessage], add_messages]
    results: List[Document]
    base_64_images: List[str]


class RAGNodes(BaseNodes):
    def __init__(self, logger, mode="online", document_handler=None):
        super().__init__(logger, mode)
        self.weaviate = Weaviate()
        self.mode = mode

    async def multi_modal_retrieval(self, state: MultiModalRetrievalState, config):
        collection_name = config.get("configurable", {}).get("collection_name")
        self.weaviate.set_collection(collection_name)
        print("Running multimodal retrieval")
        print(f"Searching for {state['messages'][-1].content}")
        results = self.weaviate.similarity_search(
            query=state["messages"][-1].content, k=3, type="multimodal"
        )
        return {"results": results}

    async def answer_question(self, state: MultiModalRetrievalState, config):
        print("Answering the question")
        llm = self.llm_factory.create_llm(mode=self.mode, model_type="default")
        include_images = config.get("configurable", {}).get("include_images", False)
        chain = self.chain_factory.create_multi_modal_chain(
            llm,
            state["messages"][-1].content,
            state["results"],
            include_images=include_images,
        )
        response = await chain.ainvoke({})
        message = AIMessage(content=response)
        return {"messages": message}


# Define the configuration.
# TypedDict fields cannot carry defaults; they are applied where the values are read,
# e.g. config.get("configurable", {}).get("include_images", False).
class GraphConfig(TypedDict, total=False):
    mode: str
    collection_name: str
    include_images: bool


graph_nodes = RAGNodes(logger)

graph = StateGraph(MultiModalRetrievalState, config_schema=GraphConfig)
graph.add_node("multi_modal_retrieval", graph_nodes.multi_modal_retrieval)
graph.add_node("answer_question", graph_nodes.answer_question)
graph.add_edge(START, "multi_modal_retrieval")
graph.add_edge("multi_modal_retrieval", "answer_question")
graph.add_edge("answer_question", END)

multi_modal_graph = graph.compile()

__all__ = ["multi_modal_graph"]
```

The code above generates the following graph:

Visual representation of the created graph
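The compiled graph runs its two nodes in order, and each node returns only the keys it wants to update. As a rough mental model, and not LangGraph's actual implementation, the toy runner below merges each node's partial update into a shared state: a key with a reducer (here a plain list append standing in for add_messages, which additionally deduplicates by message ID) is combined with the existing value, while every other key is overwritten. The node functions and message strings are hypothetical stand-ins.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Node = Callable[[State], State]


def append_messages(existing: List[str], new: Any) -> List[str]:
    """Simplified stand-in for add_messages: append instead of overwrite."""
    return existing + (new if isinstance(new, list) else [new])


def run_linear_graph(nodes: List[Node], state: State) -> State:
    """Run nodes in sequence, merging each partial update into the state."""
    reducers = {"messages": append_messages}
    for node in nodes:
        update = node(state)
        for key, value in update.items():
            if key in reducers:
                state = {**state, key: reducers[key](state.get(key, []), value)}
            else:
                state = {**state, key: value}
    return state


def retrieve(state: State) -> State:
    # Hypothetical retrieval: would query the vector store with the last message
    return {"results": [f"chunk about: {state['messages'][-1]}"]}


def answer(state: State) -> State:
    # Hypothetical answer step: would call the LLM with the retrieved results
    return {"messages": f"Answer based on {len(state['results'])} result(s)"}


final = run_linear_graph([retrieve, answer], {"messages": ["What about waterfalls?"]})
print(final["messages"])
print(final["results"])
```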

In this trace, you can see the text and images being sent to OpenAI to answer the question.
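The author's chain factory isn't shown, so how the images are attached is an assumption here. A common way to send retrieved text and base64 images to an OpenAI chat model is a single user message whose content mixes text parts and image_url parts carrying data URLs. The sketch below builds such a payload with a hypothetical helper, build_multimodal_content; it assumes JPEG images (adjust the MIME type to your data) and only constructs the message, without calling the API.

```python
import base64
from typing import Any, Dict, List


def build_multimodal_content(
    question: str,
    texts: List[str],
    base64_images: List[str],
    mime_type: str = "image/jpeg",  # assumption: set this to the real image format
) -> List[Dict[str, Any]]:
    """Build OpenAI-style message content: one text part, then one image part per image."""
    context = "\n\n".join(texts)
    content: List[Dict[str, Any]] = [
        {"type": "text", "text": f"Context:\n{context}\n\nQuestion: {question}"}
    ]
    for image_b64 in base64_images:
        content.append(
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{image_b64}"}}
        )
    return content


# Placeholder bytes instead of a real image, so the sketch runs anywhere
fake_image = base64.b64encode(b"not really a jpeg").decode("ascii")
message = {
    "role": "user",
    "content": build_multimodal_content(
        "What does the magazine say about waterfalls?",
        ["This iconic peak was top of our list..."],
        [fake_image],
    ),
}
print([part["type"] for part in message["content"]])
```

Keeping the images as base64 strings in Weaviate (the image_data property) means they can be placed into such a message directly, without re-reading the PDF.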

Conclusion

Multimodal embeddings make it possible to integrate and retrieve information from different data types (e.g., text and images) within the same embedding space. By combining tools such as the voyage-multimodal-3 model, Weaviate, and LangGraph, we showed how to build a robust retrieval pipeline that understands and links content more intuitively than traditional text-only approaches.

This approach can significantly improve search and retrieval for data sources such as magazines, brochures, and PDFs. It also shows how multimodal embeddings provide richer, context-aware results by connecting images to descriptive text even when explicit keywords are absent. With this tutorial, you can explore these techniques and apply them to your own projects.

Example Notebook: https://github.com/vectrix-ai/vectrix-graphs/blob/main/examples/multi-model-embeddings.ipynb