General Introduction

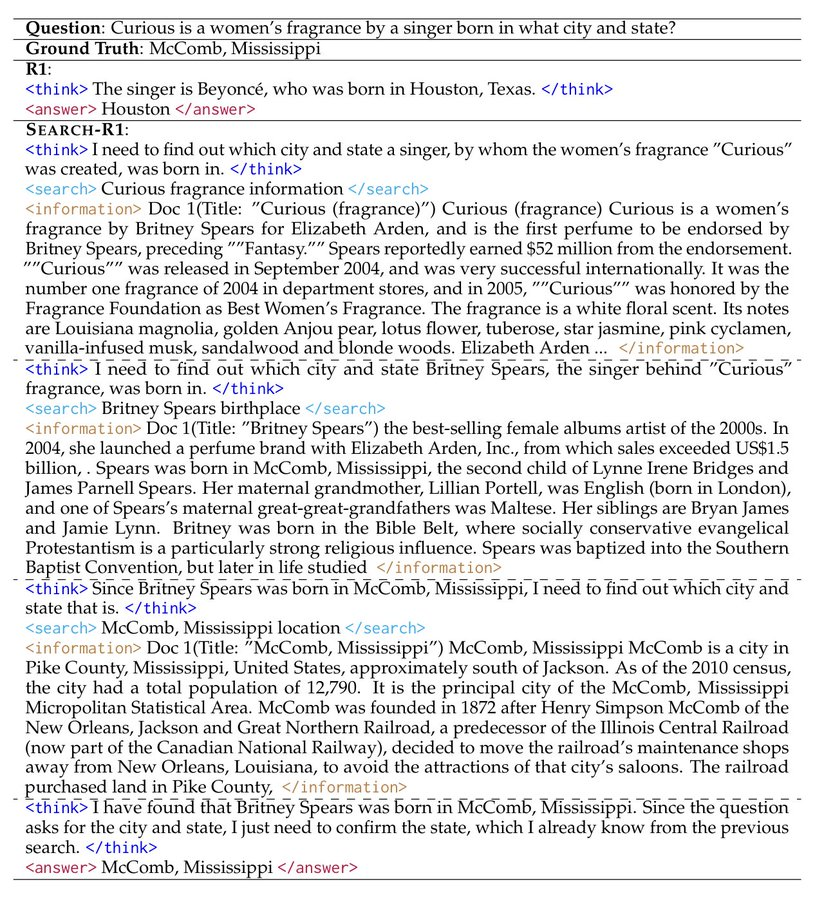

Search-R1 is an open source project developed by PeterGriffinJin on GitHub and built on the veRL framework. It uses reinforcement learning (RL) to train large language models (LLMs) so that they learn to reason and call search engines on their own when solving problems. The project supports base models such as Qwen2.5-3B and Llama3.2-3B, and extends the DeepSeek-R1 and TinyZero methods. Users can train models for single-turn or multi-turn tasks, and the code, datasets, and experiment logs are all provided. The official paper was released in March 2025, and the project's models and data are available for download on Hugging Face for researchers and developers.

Feature List

- Training large models through reinforcement learning to improve reasoning and search.

- Supports calling the APIs of Google, Bing, Brave, and other search engines.

- Provides LoRA tuning and supervised fine-tuning capabilities to optimize model performance.

- Built-in off-the-shelf reranker to improve search result accuracy.

- Includes detailed experiment logs and the paper to support reproduction of results.

- Provides local search server functionality for easy customization of searches.

- Support for user upload of customized datasets and corpora.

Usage Guide

Search-R1 is aimed at users with a basic knowledge of programming and machine learning. Below is a detailed installation and usage guide to get you started quickly.

Installation process

To use Search-R1, you need to set up the environment first. The steps are as follows:

- Creating a Search-R1 Environment

Run in the terminal:

conda create -n searchr1 python=3.9

conda activate searchr1

This creates a virtual environment for Python 3.9.

- Installing PyTorch

Install PyTorch 2.4.0 (supports CUDA 12.1) by entering the following command:

pip install torch==2.4.0 --index-url https://download.pytorch.org/whl/cu121

- Installing vLLM

vLLM is a key library for running large models. Install version 0.6.3:

pip3 install vllm==0.6.3

Versions 0.5.4, 0.4.2, or 0.3.1 can also be used.

- Installing veRL

Run it in the project root directory:

pip install -e .

This will install the veRL framework.

- Installing optional dependencies

To improve performance, install Flash Attention and Wandb:

pip3 install flash-attn --no-build-isolation

pip install wandb

- Installing the retriever environment (optional)

If a local retrieval server is required, create another environment:

conda create -n retriever python=3.10

conda activate retriever

conda install pytorch==2.4.0 pytorch-cuda=12.1 -c pytorch -c nvidia

pip install transformers datasets

conda install -c pytorch -c nvidia faiss-gpu=1.8.0

pip install uvicorn fastapi
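After installation, it can help to confirm that the pinned versions are the ones actually installed. The following is a minimal sketch, not part of Search-R1: the helper names `installed_version` and `check_versions` are hypothetical, and the pins are the ones listed in the steps above for the searchr1 environment.

```python
from importlib import metadata

# Pins taken from the installation steps above (searchr1 environment).
EXPECTED = {"torch": "2.4.0", "vllm": "0.6.3"}


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_versions(expected, lookup=installed_version):
    """Map each package name to (found_version, matches_pin)."""
    report = {}
    for package, pinned in expected.items():
        found = lookup(package)
        # Wheels from the CUDA index may report e.g. "2.4.0+cu121",
        # so only the part before "+" is compared with the pin.
        base = found.split("+")[0] if found else None
        report[package] = (found, base == pinned)
    return report


if __name__ == "__main__":
    for package, (found, ok) in check_versions(EXPECTED).items():
        print(f"{package}: installed={found} pinned={EXPECTED[package]} ok={ok}")
```

Run it inside the activated searchr1 environment. A package reported as `None` is not installed in that environment.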

Quick Start

The following steps train a model on the NQ dataset:

- Download indexes and corpora

Set the save path and run it:

save_path=/your/save/path

python scripts/download.py --save_path $save_path

cat $save_path/part_* > $save_path/e5_Flat.index

gzip -d $save_path/wiki-18.jsonl.gz

- Processing NQ data

Run the script to generate training data:

python scripts/data_process/nq_search.py

- Starting the Retrieval Server

Run in the retriever environment:

conda activate retriever

bash retrieval_launch.sh

- Run RL training

Run in the Search-R1 environment:

conda activate searchr1

bash train_ppo.sh

This will use the Llama-3.2-3B base model for PPO training.
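On systems without `cat`, the index-merging command from the download step can be approximated in Python. This is a minimal sketch under stated assumptions: the function name `concat_parts` is hypothetical, and the parts are joined in sorted filename order, which is intended to mirror how the shell expands `part_*`.

```python
from pathlib import Path
import shutil


def concat_parts(save_path, pattern="part_*", output_name="e5_Flat.index"):
    """Join all files matching `pattern` in `save_path` into one output file."""
    directory = Path(save_path)
    # Sort by name so the parts are joined in a deterministic order.
    parts = sorted(directory.glob(pattern))
    if not parts:
        raise FileNotFoundError(f"no files matching {pattern!r} in {directory}")
    output = directory / output_name
    with open(output, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                # Stream in chunks; index parts can be too large to hold in memory.
                shutil.copyfileobj(src, out)
    return output
```

Calling `concat_parts("/your/save/path")` writes `e5_Flat.index` next to the downloaded parts, like the `cat` command above.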

Using custom datasets

- Preparing QA Data

The data needs to be in JSONL format, with each line being a JSON object that contains the following fields:

{

"data_source": "web",

"prompt": [{"role": "user", "content": "question"}],

"ability": "fact-reasoning",

"reward_model": {"style": "rule", "ground_truth": "answer"},

"extra_info": {"split": "train", "index": 1}

}

See scripts/data_process/nq_search.py for reference.
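The record layout above can be produced programmatically. The sketch below is illustrative only: `make_record` and `write_jsonl` are hypothetical helper names, and the default values are the example values shown above. It does not cover any prompt templating or further preprocessing that the project's own scripts may apply.

```python
import json


def make_record(question, answer, index, split="train",
                data_source="web", ability="fact-reasoning"):
    """Build one QA record with the fields listed above."""
    return {
        "data_source": data_source,
        "prompt": [{"role": "user", "content": question}],
        "ability": ability,
        "reward_model": {"style": "rule", "ground_truth": answer},
        "extra_info": {"split": split, "index": index},
    }


def write_jsonl(records, path):
    """Write one compact JSON object per line (the JSONL convention)."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            # ensure_ascii=False keeps non-ASCII questions readable in the file.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
```

For example, `write_jsonl([make_record("Who wrote Hamlet?", "William Shakespeare", 1)], "train.jsonl")` produces a one-line file; the output filename here is arbitrary.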

- Preparing the corpus

The corpus needs to be in JSONL format, with each line containing id and contents, e.g.:

{"id": "0", "contents": "text content"}

See example/corpus.jsonl for reference.

- Indexing the corpus (optional)

If using local search, run:

bash search_r1/search/build_index.sh
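Before indexing a custom corpus, a quick format check can catch malformed lines early. The following is a minimal sketch: `validate_corpus_lines` is a hypothetical helper, and the duplicate-id check is an assumption, since the text above only states that each line needs `id` and `contents`.

```python
import json


def validate_corpus_lines(lines):
    """Return a list of (line_number, problem) for lines that break the format."""
    problems = []
    seen_ids = set()
    for number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        try:
            doc = json.loads(line)
        except json.JSONDecodeError:
            problems.append((number, "invalid JSON"))
            continue
        if not isinstance(doc, dict):
            problems.append((number, "not a JSON object"))
            continue
        missing = [key for key in ("id", "contents") if key not in doc]
        if missing:
            problems.append((number, "missing field(s): " + ", ".join(missing)))
            continue
        # ASSUMPTION: ids should be unique; the source does not state this.
        doc_id = str(doc["id"])
        if doc_id in seen_ids:
            problems.append((number, "duplicate id"))
        seen_ids.add(doc_id)
    return problems
```

A file can be passed directly, for example `validate_corpus_lines(open("corpus.jsonl", encoding="utf-8"))`; an empty result means no problems were found by these checks.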

Calling a customized search engine

- Modify search_r1/search/retriever_server.py to configure the API.

- Start the server:

python search_r1/search/retriever_server.py

- The model will call the search service at http://127.0.0.1:8000/retrieve.
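To test the server by hand, a small client can post a query to the endpoint above. This is a sketch under stated assumptions: only the URL comes from the text; the payload field names `queries` and `topk`, the JSON response, and the helper names are assumptions that should be checked against search_r1/search/retriever_server.py.

```python
import json
from urllib import request

RETRIEVE_URL = "http://127.0.0.1:8000/retrieve"  # endpoint named in the text above


def build_retrieve_request(queries, topk=3, url=RETRIEVE_URL):
    """Build a JSON POST request for the retrieval server."""
    # ASSUMPTION: the field names "queries" and "topk" are illustrative;
    # confirm them against the server code before relying on this.
    payload = {"queries": list(queries), "topk": topk}
    body = json.dumps(payload).encode("utf-8")
    return request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def retrieve(queries, topk=3, url=RETRIEVE_URL, timeout=30):
    """Send the request and decode the response (assumed to be JSON)."""
    req = build_retrieve_request(queries, topk=topk, url=url)
    with request.urlopen(req, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server running, `retrieve(["What is reinforcement learning?"])` would send one query. Only the standard library is used, so the check can be run from either conda environment.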

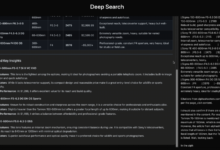

Inference

- Start the retrieval server:

bash retrieval_launch.sh

- Run inference:

python infer.py

- To ask a different question, edit the question on line 7 of infer.py.

Notes

- Training requires a GPU with at least 24GB of memory (e.g. NVIDIA A100).

- Make sure the API key is valid and the network connection is stable.

- The official paper and experiment logs (Full experiment log 1 and Full experiment log 2) provide more details.

With these steps, you can use Search-R1 to train a model that can reason and search to handle a variety of tasks.

Application Scenarios

- Research experiments

Researchers can use Search-R1 to reproduce the paper's results and explore the application of reinforcement learning in model training.

- Intelligent assistant development

Developers can train models and integrate them into chat tools to provide search and reasoning capabilities.

- Knowledge queries

Users can use it to answer complex questions quickly and get up-to-date information through search.

QA

- What models does Search-R1 support?

Currently, the Qwen2.5-3B and Llama3.2-3B base models are supported; other models require your own adaptation.

- How long does the training take?

It depends on the dataset and hardware; training on the NQ dataset takes a few hours on a 24GB GPU.

- How do I verify the effectiveness of my training?

See the chart in Preliminary results, or check the Wandb log.