Artificial intelligence research company Runway recently released Runway Gen-4, its next-generation series of media generation models. Gen-4 is designed to address the consistency problems common in current AI video generation and to make generated content more controllable, marking an important step toward a more stable, narrative-capable AI authoring tool.

Breaking through the consistency bottleneck

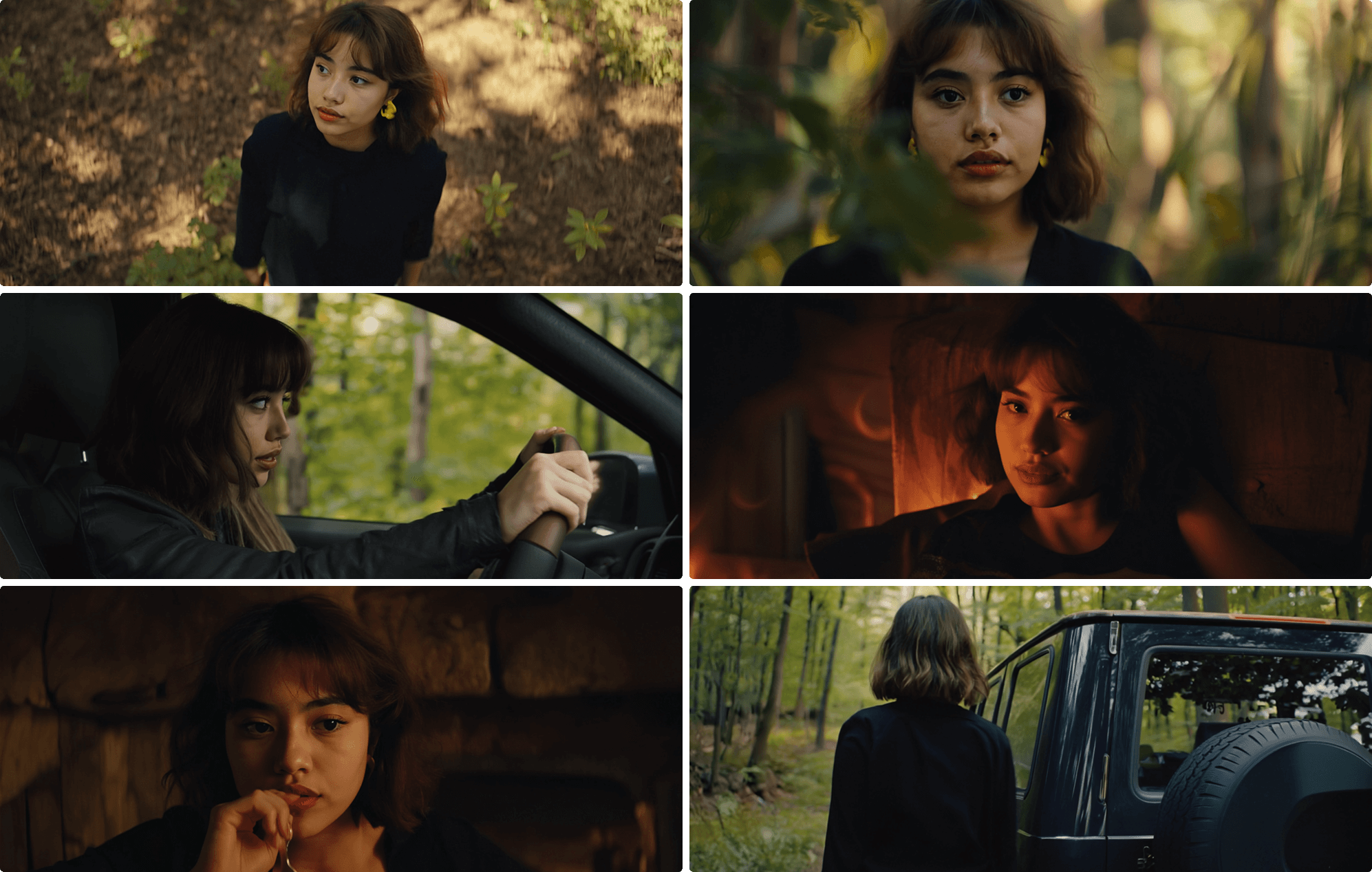

According to Runway, the core breakthrough of Runway Gen-4 is its ability to maintain "world consistency". Users can now generate characters, locations, and objects that stay consistent from scene to scene. Once the initial look and feel is set, the model maintains a coherent world across subsequent generations while preserving the distinctive style, mood, and cinematography of each frame. More notably, the model can regenerate these elements from multiple viewpoints and positions, which is critical for building complex narrative scenes.

Runway Gen-4 can combine visual references (such as a single image of a character) with text instructions to create new images and videos that remain highly consistent in style, subject, location, and more. Creators can therefore place a virtual character or object in different lighting conditions, environments, and treatments without complex model fine-tuning or additional training, which gives them considerably more freedom to tell their own stories.

Improving controllability and generation quality

Beyond consistency, Runway highlights Gen-4's capabilities in several areas:

- Coverage. Given a reference image of the subject and a description of the desired shot composition, Runway Gen-4 generates shots from different angles to meet the needs of the scene.

- Production-Ready Video. The model excels at generating highly dynamic videos with natural motion while keeping subjects, objects, and style consistent. Its ability to understand prompts and simulate the physical world has also reached a new level.

- Physics. Runway claims that Gen-4 has made significant progress in simulating real-world physics, which it describes as an important step toward a universal generative model that understands how the world works. The accuracy and scope of that simulation still need to be verified, but it is an important direction for AI video generation.

- Generative Visual Effects (GVFX). Runway Gen-4 introduces the concept of GVFX: a fast, controllable, and flexible way to generate video that can sit seamlessly alongside live-action, animated, and traditional VFX content, opening up new possibilities in visual effects production.

Narrative Potential and Industry Applications

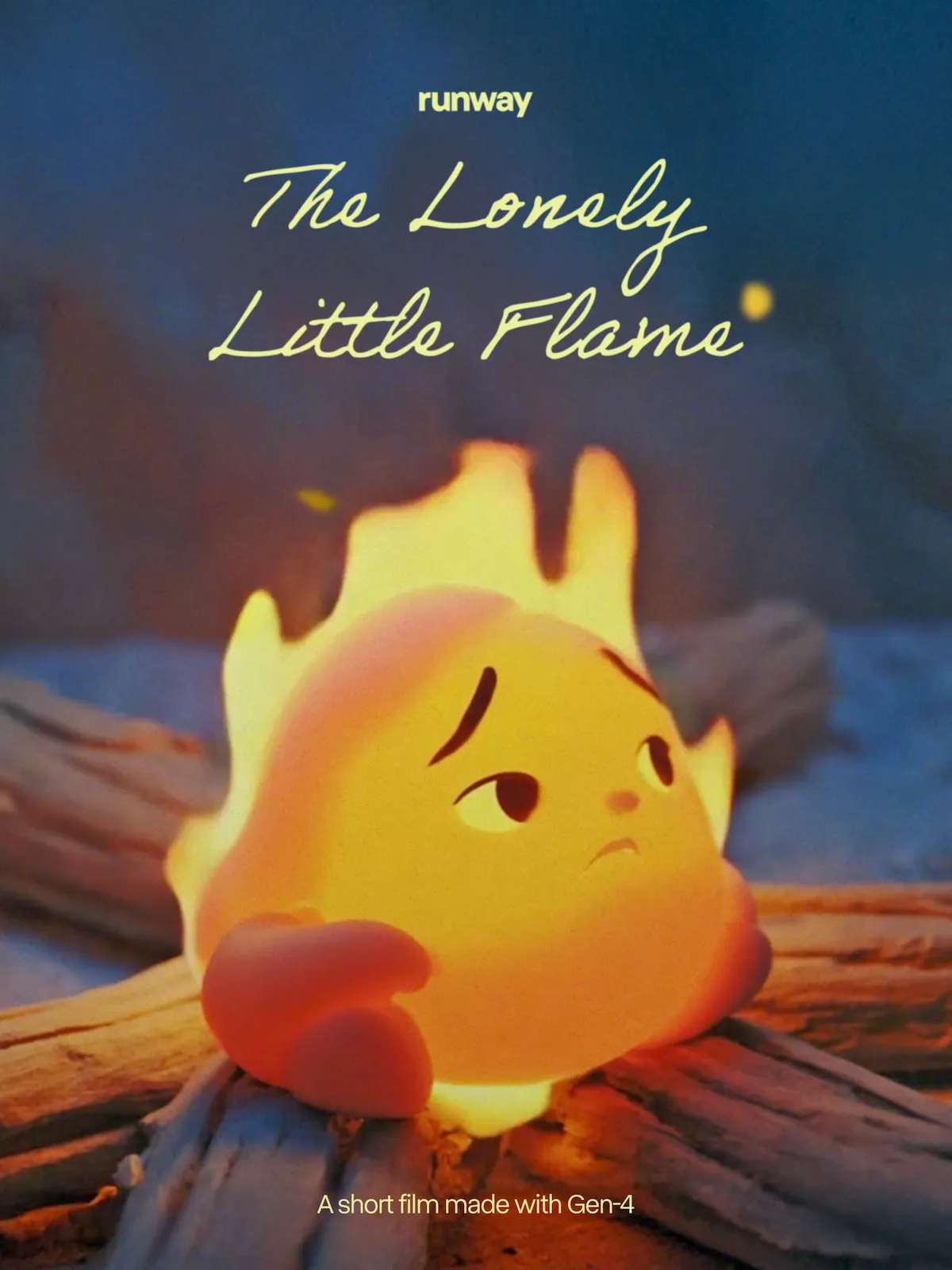

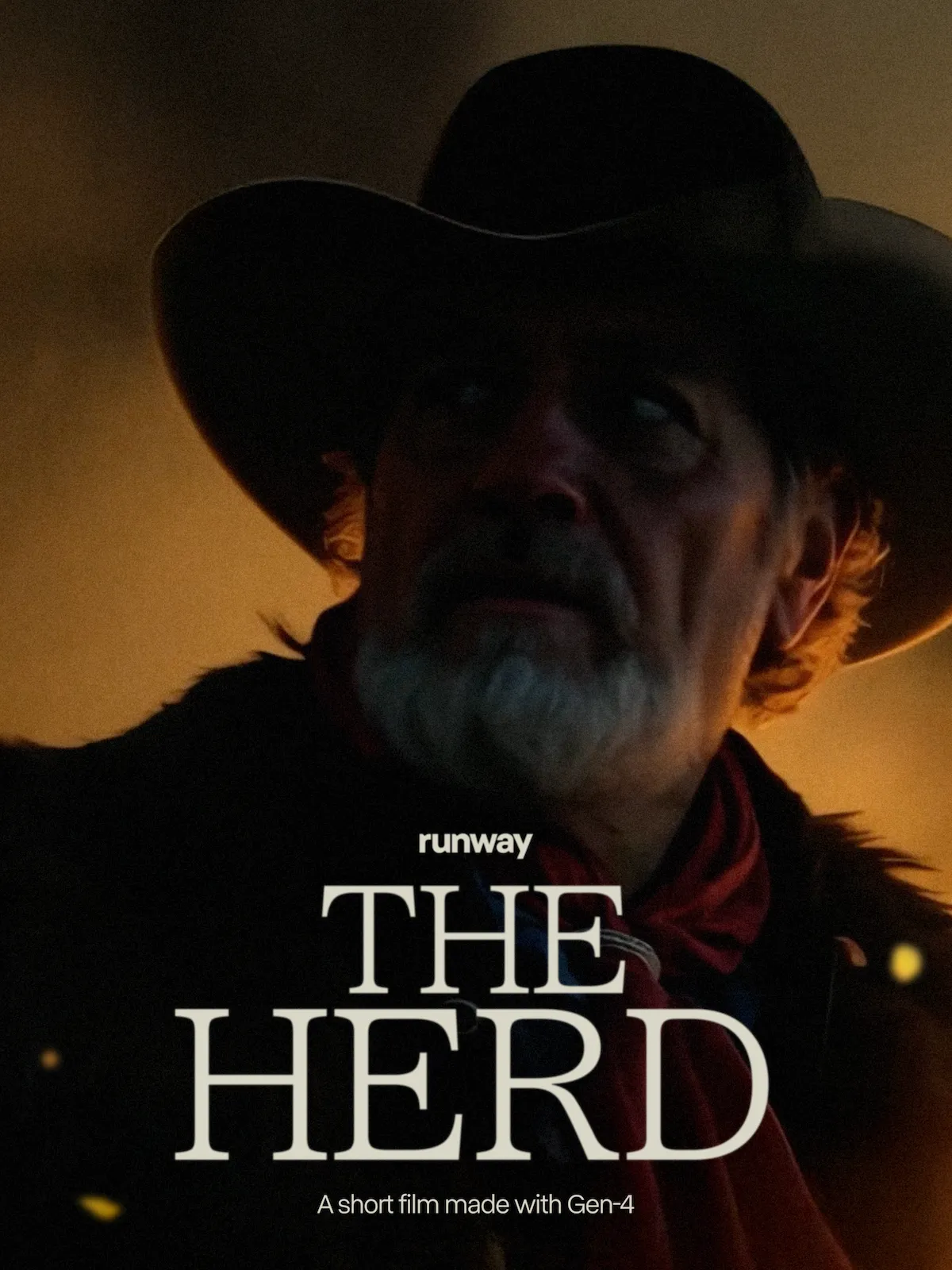

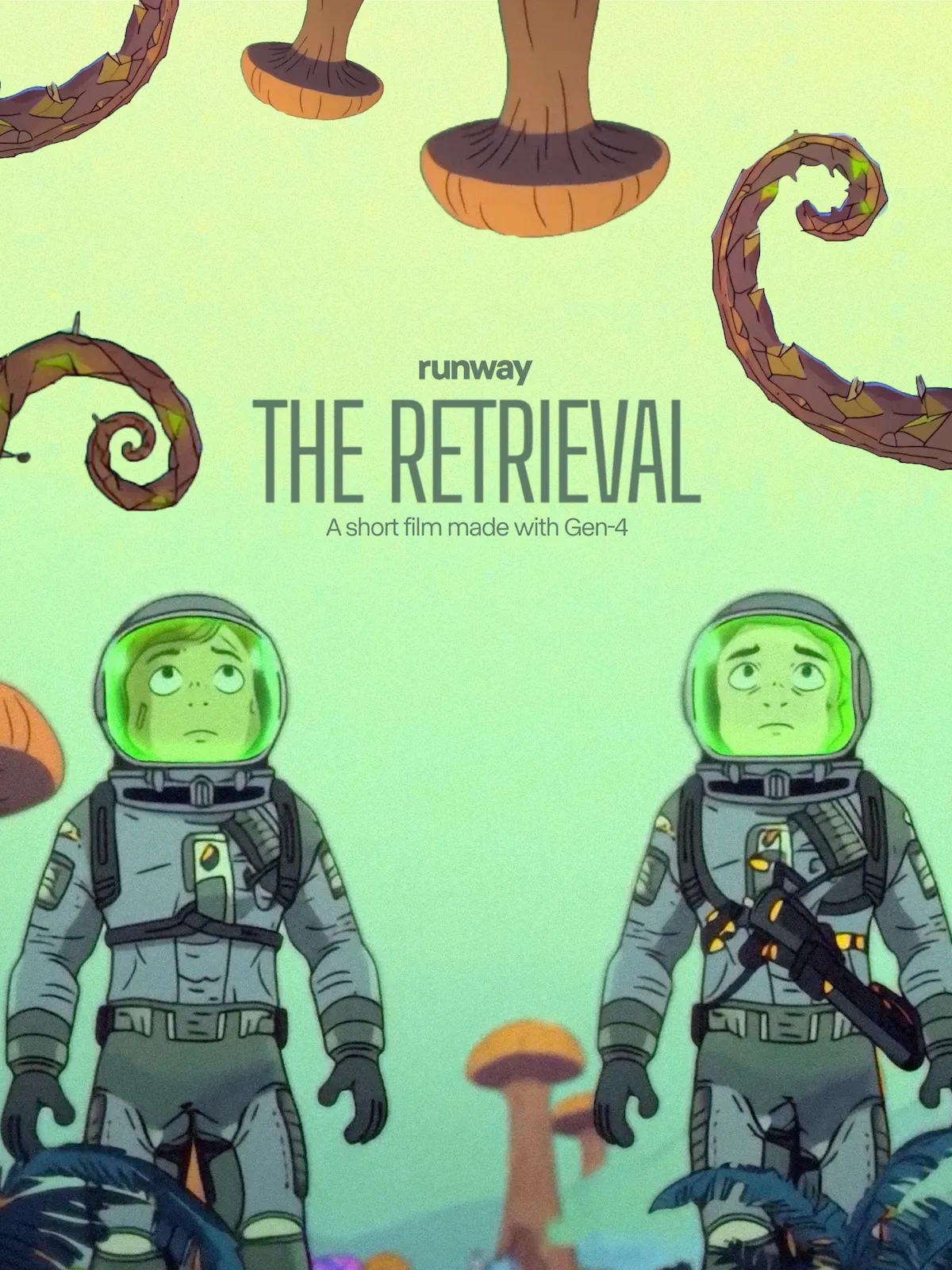

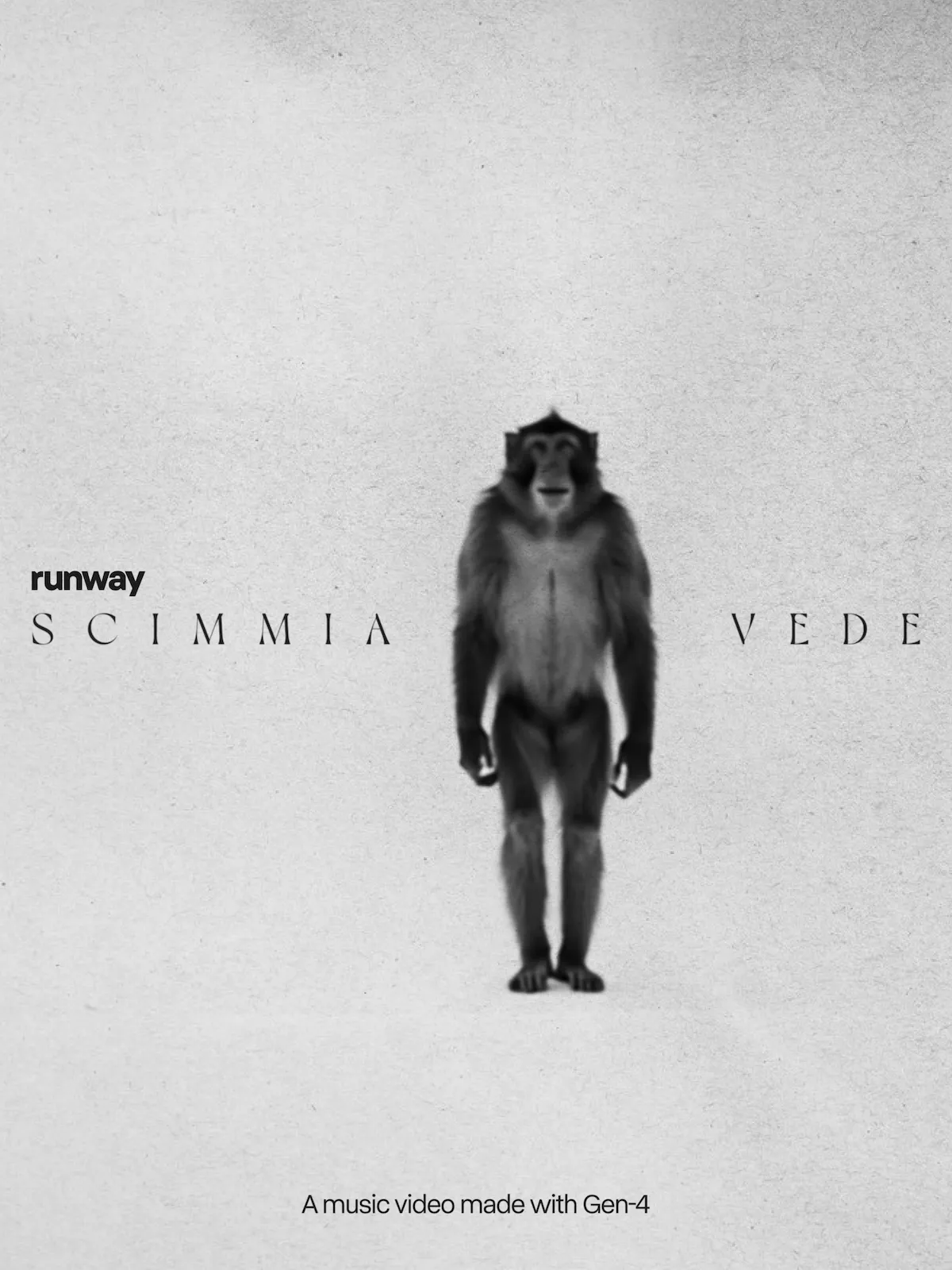

To validate the model's narrative capabilities, Runway created a series of short films and music videos using Gen-4. These works demonstrate how well the model keeps characters, environments, and style consistent.

The release of Runway Gen-4 comes at a time when AI video generation technology is evolving rapidly. Other models on the market (e.g., OpenAI's Sora and Pika) have demonstrated impressive capabilities in various respects, but Runway Gen-4 explicitly positions "consistency" and "controllability" as its core selling points and emphasizes that both can be achieved without fine-tuning. That directly targets a pain point many creators currently face when using AI to produce longer content or complex scenes. If the claimed capabilities can be widely verified and reliably reproduced in real-world use, Gen-4 could have a far-reaching impact on film production, advertising, game development, and related fields, further lowering the barrier to creating high-quality visual content and potentially changing existing production workflows.

In addition, Runway's recently announced collaborations with industry partners such as Lionsgate, Tribeca Festival, and Media.Monks signal its intent to bring Gen-4 into professional production.

- Runway partners with Lionsgate Films

- Runway Explores the Future of Filmmaking with Tribeca Film Festival 2024

- Expanding Creative Boundaries with Media.Monks

Users can currently try Runway Gen-4 through Runway's platform. As the technology continues to evolve and more application scenarios emerge, the market will be watching to see whether Runway Gen-4 can truly set the standard for the next generation of AI media creation.