Anthropic recently introduced a new tool called "think", designed to improve Claude's performance on complex problem solving. This article examines the design of the "think" tool, its measured performance, and best practices for real-world use, and considers its potential impact on future AI systems.

The "think" tool: getting Claude to stop and think

While continuing to improve Claude's ability to solve complex problems, Anthropic found a simple yet effective approach: introducing a "think" tool. The "think" tool gives Claude a dedicated space for structured thinking while it works on complex tasks.

Note that the "think" tool resembles Claude's existing "extended thinking" capability but works differently. Extended thinking concerns what Claude does before it starts generating a response: it thinks deeply and iterates on its plan before acting. The "think" tool applies after Claude has started generating a response: it adds a step where Claude stops and considers whether it has all the information it needs to move forward. This is especially useful during long chains of tool calls or long multi-step conversations with the user.

This makes the "think" tool better suited to situations where Claude cannot get all the necessary information from the user query alone and needs to process external information (for example, the results of tool calls). The reasoning Claude performs with the "think" tool is less comprehensive than extended thinking and focuses more on new information that the model discovers.

Anthropic recommends extended thinking for simpler tool-use scenarios, such as non-sequential tool calls or straightforward instruction following. Extended thinking is also suitable for use cases that do not require Claude to call tools, such as coding, math, and physics. The "think" tool is better suited to cases where Claude needs to call complex tools, analyze tool outputs carefully in long chains of tool calls, navigate policy-heavy environments with detailed guidelines, or make sequential decisions where each step builds on the previous one and mistakes are costly.

The following is an example implementation, taken from τ-Bench, that uses the standard tool specification format:

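    {
      "name": "think",
      "description": "Use the tool to think about something. It will not obtain new information or change the database, but just append the thought to the log. Use it when complex reasoning or some cache memory is needed.",
      "input_schema": {
        "type": "object",
        "properties": {
          "thought": {
            "type": "string",
            "description": "A thought to think about."
          }
        },
        "required": ["thought"]
      }
    }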
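Because the tool only records a thought, the application-side handler can be very small. The following is a minimal sketch, not Anthropic's implementation: the handler name `handle_think`, the in-memory `thought_log` list, and the `"OK"` acknowledgement are hypothetical choices, and the `tool_result` dictionary follows the shape commonly used for returning tool results in the Messages API.

```python
# Tool definition mirroring the specification above.
THINK_TOOL = {
    "name": "think",
    "description": (
        "Use the tool to think about something. It will not obtain new "
        "information or change the database, but just append the thought "
        "to the log. Use it when complex reasoning or some cache memory "
        "is needed."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "thought": {
                "type": "string",
                "description": "A thought to think about.",
            }
        },
        "required": ["thought"],
    },
}


def handle_think(tool_use_id: str, tool_input: dict, thought_log: list) -> dict:
    """Hypothetical handler: append the thought to a log and acknowledge it."""
    thought_log.append(tool_input["thought"])
    # The tool has no side effects beyond the log, so the result carries
    # no new information for the model (the "OK" text is an assumption).
    return {"type": "tool_result", "tool_use_id": tool_use_id, "content": "OK"}
```

In an agent loop, this handler would be called whenever the model emits a tool call named `think`, and the returned block would be sent back in the next user turn like any other tool result.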

τ-Bench performance test: significant improvement

Anthropic evaluated the "think" tool on τ-Bench (tau-bench), a comprehensive benchmark designed to test a model's ability to use tools in realistic customer service scenarios. In τ-Bench, the "think" tool is part of the standard evaluation environment.

Developed by the Sierra Research team and recently released, τ-Bench evaluates Claude's ability to:

- Navigate realistic conversations with simulated users.

- Follow complex customer service agent policy guidelines consistently.

- Access and manipulate the environment database using a variety of tools.

The main evaluation metric used by τ-Bench is pass^k, which measures the probability that all k independent trials of a given task succeed, averaged across all tasks. Unlike the pass@k metric common in other LLM evaluations (which measures whether at least one of k trials succeeds), pass^k evaluates consistency and reliability, which is critical for customer service applications where the policy must be followed every time.
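The difference between the two metrics can be made concrete with a short sketch. It assumes each task is run for a fixed number of trials and uses the standard combinatorial estimators (the chance that k trials drawn without replacement all succeed, versus at least one succeeding). Whether τ-Bench computes the metric exactly this way is an assumption, and the function names are hypothetical.

```python
from math import comb


def pass_hat_k(successes: int, trials: int, k: int) -> float:
    """Estimate pass^k: the chance that k sampled trials ALL succeed."""
    if not 0 < k <= trials:
        raise ValueError("k must be between 1 and the number of trials")
    # comb(successes, k) is 0 when there are fewer than k successes.
    return comb(successes, k) / comb(trials, k)


def pass_at_k(successes: int, trials: int, k: int) -> float:
    """Estimate pass@k: the chance that AT LEAST ONE of k sampled trials succeeds."""
    if not 0 < k <= trials:
        raise ValueError("k must be between 1 and the number of trials")
    return 1.0 - comb(trials - successes, k) / comb(trials, k)


def mean_over_tasks(per_task_scores: list[float]) -> float:
    """Average a per-task metric across all tasks."""
    return sum(per_task_scores) / len(per_task_scores)
```

For an illustrative task that succeeds in 4 of 5 trials, `pass_hat_k(4, 5, 2)` gives 0.6 while `pass_at_k(4, 5, 2)` gives 1.0: a single failure barely affects pass@k but pulls pass^k down sharply, which is why pass^k rewards consistency.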

Performance analysis

Anthropic's evaluation compared the following different configurations:

- Baseline (no "think" tool, no extended thinking)

- Extended thinking only

- "think" tool only

- "think" tool with an optimized prompt (airline domain)

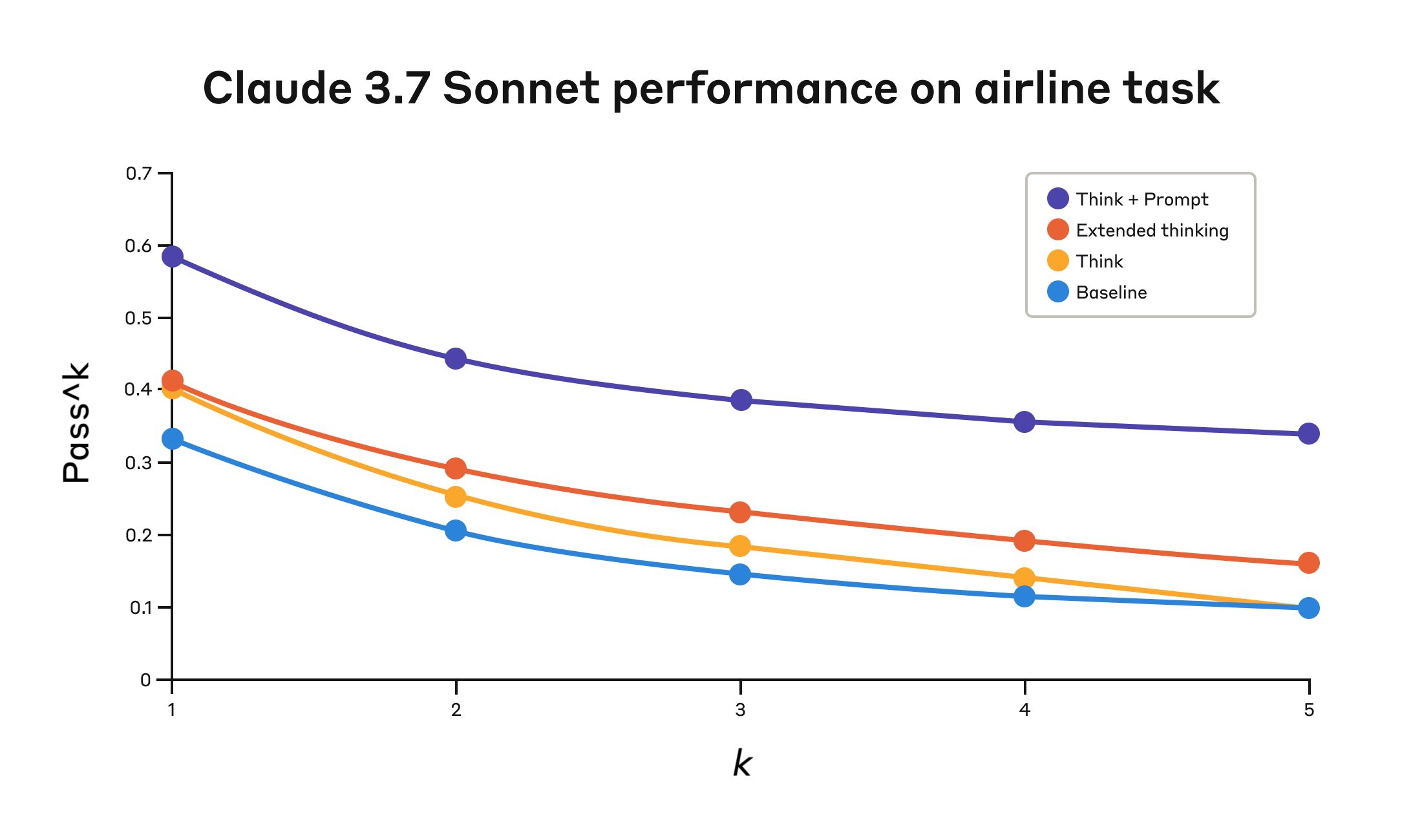

The results show that when Claude 3.7 Sonnet uses the "think" tool effectively, it achieves substantial improvements in both the "airline" and "retail" customer service domains of the benchmark:

- Airline: The "think" tool with an optimized prompt reached 0.570 on the pass^1 metric, compared to 0.370 for the baseline, a relative improvement of 54%.

- Retail: The "think" tool alone reaches 0.812, compared to the baseline of 0.783.
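The relative improvement for each domain follows directly from the two pass^1 scores quoted above. A minimal sketch of the arithmetic (the function name is hypothetical):

```python
def relative_improvement(score: float, baseline: float) -> float:
    """Improvement over the baseline, as a fraction of the baseline."""
    return (score - baseline) / baseline


airline = relative_improvement(0.570, 0.370)
retail = relative_improvement(0.812, 0.783)
print(f"airline: {airline:.1%}, retail: {retail:.1%}")
# prints "airline: 54.1%, retail: 3.7%"
```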

The following table shows the pass^k results for Claude 3.7 Sonnet under four different configurations in the "airline" domain of τ-Bench:

| Configuration | k=1 | k=2 | k=3 | k=4 | k=5 |
|---|---|---|---|---|---|
| "Think" + optimized prompt | 0.584 | 0.444 | 0.384 | 0.356 | 0.340 |
| "Think" tool only | 0.404 | 0.254 | 0.186 | 0.140 | 0.100 |
| Extended thinking | 0.412 | 0.290 | 0.232 | 0.192 | 0.160 |
| Baseline | 0.332 | 0.206 | 0.148 | 0.116 | 0.100 |

In the airline domain, the best performance was achieved by pairing the "think" tool with an optimized prompt. The optimized prompt gives examples of the kind of reasoning to use when analyzing customer requests. The following is an example of such an optimized prompt:

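    ## Using the think tool

    Before taking any action or responding to the user after receiving tool results, use the think tool as a scratchpad to:
    - List the specific rules that apply to the current request
    - Check if all required information is collected
    - Verify that the planned action complies with all policies
    - Iterate over tool results for correctness

    Here are some examples of what to iterate over inside the think tool:
    <think_tool_example_1>
    User wants to cancel flight ABC123
    - Need to verify: user ID, reservation ID, reason
    - Check cancellation rules:
      * Is it within 24h of booking?
      * If not, check ticket class and insurance
    - Verify no segments flown or are in the past
    - Plan: collect missing info, verify rules, get confirmation
    </think_tool_example_1>

    <think_tool_example_2>
    User wants to book 3 tickets to NYC with 2 checked bags each
    - Need user ID to check:
      * Membership tier for baggage allowance
      * Which payment methods exist in profile
    - Baggage calculation:
      * Economy class × 3 passengers
      * If regular member: 1 free bag each → 3 extra bags = $150
      * If silver member: 2 free bags each → 0 extra bags = $0
      * If gold member: 3 free bags each → 0 extra bags = $0
    - Payment rules to verify:
      * Max 1 travel certificate, 1 credit card, 3 gift cards
      * All payment methods must be in profile
      * Travel certificate remainder goes to waste
    - Plan:
    1. Get user ID
    2. Verify membership level for bag fees
    3. Check which payment methods in profile and if their combination is allowed
    4. Calculate total: ticket price + any bag fees
    5. Get explicit confirmation for booking
    </think_tool_example_2>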
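The baggage calculation in the second example is the kind of reasoning the prompt asks Claude to spell out. As a check on that arithmetic, here is a small sketch. The $50 fee per extra bag is an assumption inferred from "3 extra bags = $150", and the names `FREE_BAGS` and `extra_bag_cost` are hypothetical.

```python
EXTRA_BAG_FEE = 50  # assumed: implied by "3 extra bags = $150" in the example
FREE_BAGS = {"regular": 1, "silver": 2, "gold": 3}  # free checked bags per passenger


def extra_bag_cost(tier: str, passengers: int, bags_each: int) -> int:
    """Total fee for checked bags beyond each passenger's free allowance."""
    extra_per_passenger = max(0, bags_each - FREE_BAGS[tier])
    return extra_per_passenger * passengers * EXTRA_BAG_FEE


for tier in FREE_BAGS:
    print(tier, extra_bag_cost(tier, passengers=3, bags_each=2))
# prints "regular 150", "silver 0", "gold 0"
```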

The comparison between approaches is particularly interesting. Using the "think" tool with an optimized prompt achieved significantly better results than extended thinking mode (which performed similarly to the unprompted "think" tool). Using the "think" tool alone, without prompting, improved performance over the baseline but still fell short of the optimized approach.

The combination of the "think" tool and an optimized prompt delivered the strongest performance by a wide margin, likely because of the high complexity of the airline policy part of the benchmark, where the model benefited most from being given examples of how to think.

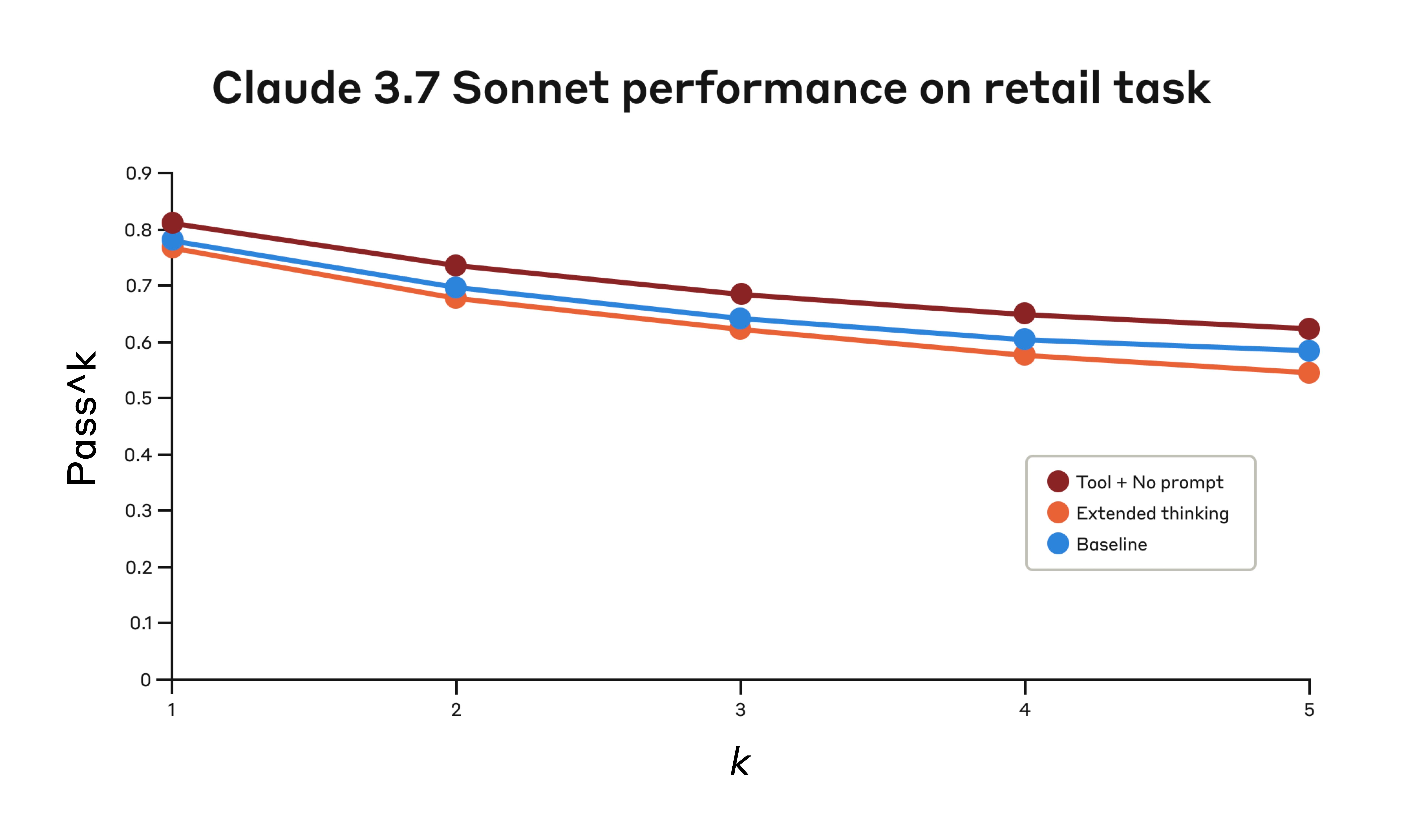

In the retail domain, Anthropic also tested several configurations to understand the specific impact of each approach.

The following table shows the pass^k results for Claude 3.7 Sonnet under three different configurations in the "retail" domain of τ-Bench:

| Configuration | k=1 | k=2 | k=3 | k=4 | k=5 |
|---|---|---|---|---|---|
| "Think" tool only | 0.812 | 0.735 | 0.685 | 0.650 | 0.626 |
| Extended thinking | 0.770 | 0.681 | 0.623 | 0.581 | 0.548 |
| Baseline | 0.783 | 0.695 | 0.643 | 0.607 | 0.583 |

Even without additional prompting, the "think" tool achieved the highest pass^1 score of 0.812. The retail policy is noticeably easier to navigate than the airline policy, and Claude was able to improve simply by having a space to think, without further guidance.

Key insights from τ-Bench analysis

Anthropic's detailed analysis revealed several patterns that can help when implementing the "think" tool:

- Prompting matters significantly in difficult domains. Simply making the "think" tool available may improve performance somewhat, but pairing it with an optimized prompt produced dramatically better results in difficult domains. Easier domains, however, may benefit from simply having access to the "think" tool.

- Improved consistency across trials. The improvements from using the "think" tool were maintained for pass^k up to k=5, which suggests that the tool helps Claude handle edge cases and unusual scenarios more effectively.
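The consistency claim can be checked against the tables above by computing the gap over the baseline at each k. This sketch uses only the values reported in the two tables; the dictionary and function names are hypothetical.

```python
# pass^k for k = 1..5, copied from the tables above.
AIRLINE = {
    "think_prompt": [0.584, 0.444, 0.384, 0.356, 0.340],
    "baseline": [0.332, 0.206, 0.148, 0.116, 0.100],
}
RETAIL = {
    "think": [0.812, 0.735, 0.685, 0.650, 0.626],
    "baseline": [0.783, 0.695, 0.643, 0.607, 0.583],
}


def gaps(treated: list[float], baseline: list[float]) -> list[float]:
    """Absolute improvement over the baseline at each k."""
    return [round(t - b, 3) for t, b in zip(treated, baseline)]


airline_gaps = gaps(AIRLINE["think_prompt"], AIRLINE["baseline"])
retail_gaps = gaps(RETAIL["think"], RETAIL["baseline"])
print("airline:", airline_gaps)
print("retail:", retail_gaps)
```

In both domains the gap at k=5 is about as large as at k=1 (roughly 0.24 to 0.25 for airline, 0.03 to 0.04 for retail), so the advantage does not fade as the consistency requirement tightens.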

SWE-Bench performance test: the icing on the cake

When evaluating Claude 3.7 Sonnet, Anthropic added a similar "think" tool to its SWE-Bench setup, which contributed to the state-of-the-art score of 0.623. The adapted "think" tool definition is shown below:

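    {
      "name": "think",
      "description": "Use the tool to think about something. It will not obtain new information or make any changes to the repository, but just log the thought. Use it when complex reasoning or brainstorming is needed. For example, if you explore the repo and discover the source of a bug, call this tool to brainstorm several unique ways of fixing the bug, and assess which change(s) are likely to be simplest and most effective. Alternatively, if you receive some test results, call this tool to brainstorm ways to fix the failing tests.",
      "input_schema": {
        "type": "object",
        "properties": {
          "thought": {
            "type": "string",
            "description": "Your thoughts."
          }
        },
        "required": ["thought"]
      }
    }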

Anthropic's experiments (n=30 samples with the "think" tool, n=144 samples without it) showed that the isolated effect of including this tool improved performance by 1.6% on average (Welch's t-test: t(38.89) = 6.71, p < .001, d = 1.47).
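For readers unfamiliar with the test, Welch's t-test compares two group means without assuming equal variances, which is why the reported degrees of freedom (38.89) are not a whole number. The standard definitions are given below, with group 1 taken to be the runs with the "think" tool ($n_1 = 30$) and group 2 the runs without it ($n_2 = 144$). The per-group means and variances are not reported in this article, so the formulas are shown for reference only.

```latex
t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}},
\qquad
\nu \approx \frac{\left(\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}\right)^{2}}
{\dfrac{\left(s_1^2 / n_1\right)^{2}}{n_1 - 1} + \dfrac{\left(s_2^2 / n_2\right)^{2}}{n_2 - 1}}
```

Here $\bar{x}_i$ and $s_i^2$ are the sample mean and sample variance of group $i$, and $\nu$ is the Welch–Satterthwaite approximation to the degrees of freedom.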

Scenarios for the "think" tool

Based on the results of these evaluations, Anthropic identified specific scenarios where Claude would benefit most from the "think" tool:

- Tool output analysis: when Claude needs to carefully process the output of previous tool calls before acting, and may need to backtrack in its approach.

- Policy-heavy environments: when Claude needs to follow detailed guidelines and verify compliance.

- Sequential decision making: when each action builds on the previous one and mistakes are costly (often found in multi-step domains).

Best practices: getting the most out of the "think" tool

To fully utilize Claude's "think" tool, Anthropic suggests the following implementation best practices based on its τ-Bench experiments.

1. Strategic prompting with domain-specific examples

The most effective approach is to provide clear instructions on when and how to use the "think" tool, as was done for the τ-Bench airline domain. Providing examples tailored to your specific use case significantly improves how effectively the model uses the "think" tool. Such examples can show:

- The level of detail expected in the reasoning process.

- How to break down complex instructions into actionable steps.

- Decision trees for dealing with common scenarios.

- How to check that all the necessary information has been collected.

2. Place complex guidance in the system prompt

Anthropic found that when the instructions for the "think" tool are long or complex, it is more effective to include them in the system prompt than in the tool description itself. This approach provides broader context and helps the model better integrate the thinking process into its overall behavior.
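One way to apply this is to keep the tool description short and append the detailed guidance to the system prompt when building the request. The sketch below only assembles a request dictionary and does not call any API. The keys `model`, `max_tokens`, `system`, `tools`, and `messages` follow the Messages API request format, while `build_request`, `THINK_GUIDANCE`, and the placeholder model name are hypothetical.

```python
# Detailed usage guidance lives in the system prompt, not the tool description.
THINK_GUIDANCE = """## Using the think tool
Before taking any action or responding to the user after receiving tool results,
use the think tool as a scratchpad to:
- List the specific rules that apply to the current request
- Check if all required information is collected
- Verify that the planned action complies with all policies
- Iterate over tool results for correctness
"""

THINK_TOOL = {
    "name": "think",
    "description": (
        "Use the tool to think about something. It will not obtain new "
        "information or change the database, but just append the thought "
        "to the log."
    ),
    "input_schema": {
        "type": "object",
        "properties": {"thought": {"type": "string", "description": "A thought to think about."}},
        "required": ["thought"],
    },
}


def build_request(base_system: str, user_message: str, model: str,
                  max_tokens: int = 1024) -> dict:
    """Assemble request parameters with think-tool guidance in the system prompt."""
    return {
        "model": model,  # caller supplies the model name; none is assumed here
        "max_tokens": max_tokens,
        "system": base_system.rstrip() + "\n\n" + THINK_GUIDANCE,
        "tools": [THINK_TOOL],
        "messages": [{"role": "user", "content": user_message}],
    }
```

The domain policy would normally make up `base_system`, and worked examples like the airline ones shown earlier can be appended to `THINK_GUIDANCE` in the same way.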

When not to use the "think" tool

While the "think" tool can provide substantial improvements, it does not apply to every tool-use scenario, and it comes at the cost of a longer prompt and more output tokens. Specifically, Anthropic found that the "think" tool brought no improvement in the following use cases:

- Non-sequential tool calls: If Claude only needs to make a single tool call or multiple parallel calls to accomplish a task, then adding the "think" tool is unlikely to bring any improvement.

- Simple instruction following: When Claude does not have to follow many constraints and its default behavior is good enough, the extra "think" step is unlikely to pay off.

Quick start: a few simple steps for great results

The "think" tool is a simple addition to the Claude implementation that produces meaningful improvements in just a few steps:

- Test with agentic tool-use scenarios. Start with challenging use cases, such as those where Claude currently struggles with policy compliance or with complex reasoning in long tool call chains.

- Add the tool definition. Implement a "think" tool customized for your domain. It requires minimal code but enables more structured reasoning. Also consider including instructions on when and how to use the tool in the system prompt, with examples relevant to your domain.

- Monitor and refine. Observe how Claude uses the tool in practice and adjust your prompts to encourage more effective thinking patterns.
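For the monitoring step, a simple starting point is to pull the recorded thoughts out of a saved conversation and review them. The sketch below assumes the transcript is a list of message dictionaries whose assistant turns contain `tool_use` content blocks with `name` and `input` fields, as in the Messages API format. The function name and the sample transcript are hypothetical.

```python
def extract_thoughts(messages: list[dict]) -> list[str]:
    """Collect every thought the model logged through the "think" tool."""
    thoughts = []
    for message in messages:
        if message.get("role") != "assistant":
            continue
        content = message.get("content")
        if isinstance(content, str):
            continue  # plain-text turn, so it contains no tool calls
        for block in content or []:
            if block.get("type") == "tool_use" and block.get("name") == "think":
                thoughts.append(block["input"]["thought"])
    return thoughts


# Hypothetical transcript for illustration only.
transcript = [
    {"role": "user", "content": "Please cancel flight ABC123."},
    {"role": "assistant", "content": [
        {"type": "tool_use", "id": "t1", "name": "think",
         "input": {"thought": "Need user ID and reservation ID first."}},
    ]},
    {"role": "assistant", "content": "Could you share your user ID?"},
]
print(extract_thoughts(transcript))
# prints ['Need user ID and reservation ID first.']
```

Reviewing these thoughts alongside task outcomes shows whether Claude is checking the rules you care about, and where the prompt examples need adjusting.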

Best of all, adding this tool has minimal downside in terms of performance outcomes. It does not change external behavior unless Claude decides to use it, and it does not interfere with your existing tools or workflows.

Summary and outlook

Anthropic's research shows that the "think" tool significantly improves the performance of Claude 3.7 Sonnet on complex tasks that require policy adherence and reasoning over long chains of tool calls. While the "think" tool is not a one-size-fits-all solution, it provides substantial benefits for the right use cases with minimal implementation complexity.

We look forward to seeing how developers use the "think" tool to build more capable, reliable, and transparent AI systems. Looking ahead, Anthropic may explore combining the "think" tool with other AI techniques, such as reinforcement learning and knowledge graphs, to further strengthen the reasoning and decision-making of AI models. Designing more effective prompting strategies and applying the "think" tool to a wider range of domains are also directions worth studying.
