General Introduction

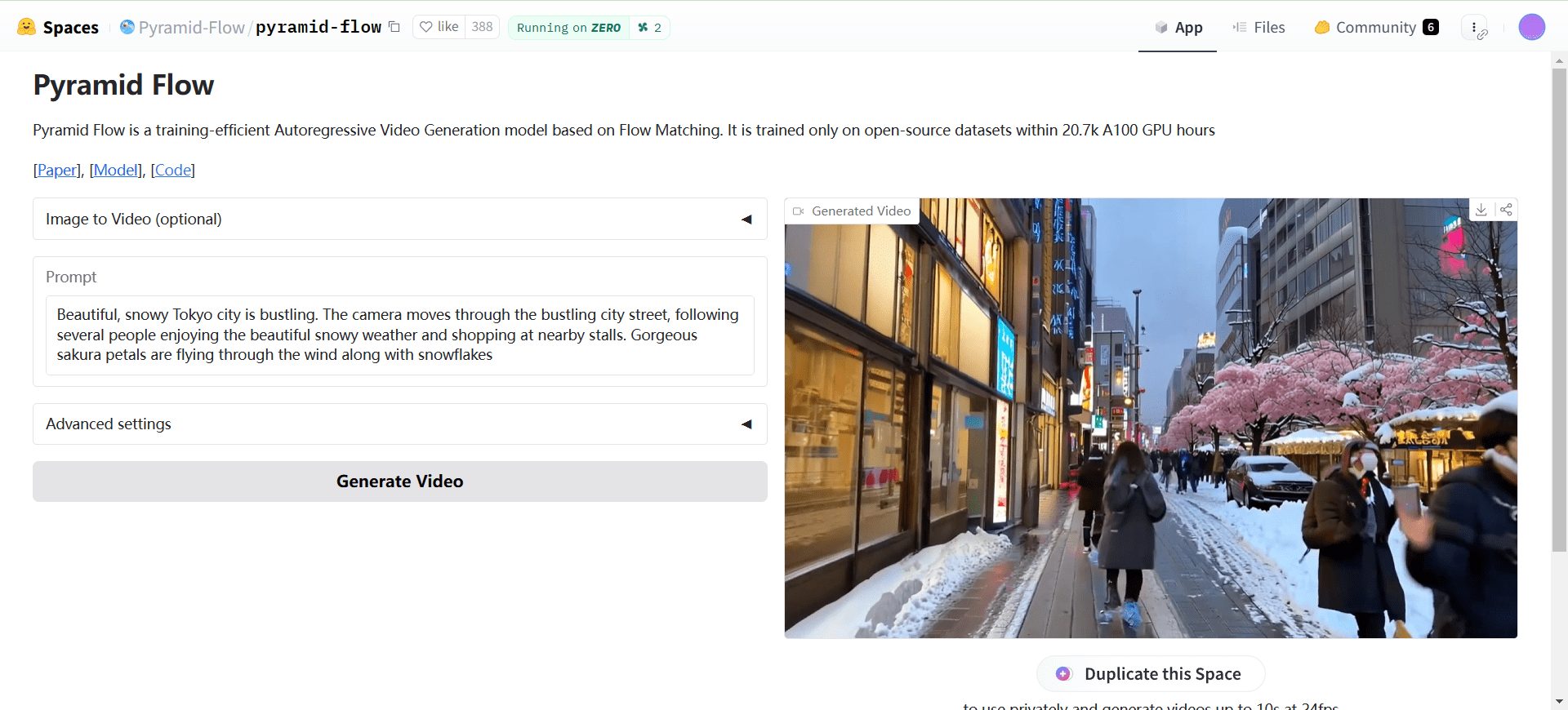

Pyramid Flow is an efficient autoregressive video generation method based on Flow Matching. By interpolating between different resolutions and noise levels, it generates and decompresses video content at the same time, which improves computational efficiency. Pyramid Flow can generate high-quality 10-second videos at 768p resolution and 24 FPS, and it also supports image-to-video generation. The entire framework is optimized end-to-end with a single DiT model, trained using 20.7k A100 GPU hours.

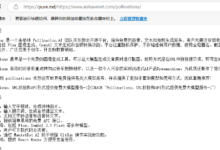

Online demo: https://huggingface.co/spaces/Pyramid-Flow/pyramid-flow

Feature List

- Efficient Video Generation: Generates high-quality 10-second videos at 768p resolution and 24 FPS.

- Image to Video Generation: Generates videos from an input image.

- Multi-resolution support: Model checkpoints are available in 768p and 384p resolutions.

- CPU Offload: Two types of CPU offloading are supported to reduce GPU memory requirements.

- Multi-GPU Support: Provides multi-GPU inference scripts that support sequence parallelism to save memory per GPU.

Usage Guide

Environment Setup

- Create an environment using conda:

    cd Pyramid-Flow
    conda create --name pyramid-flow python=3.8.10
    conda activate pyramid-flow

- Install the dependencies:

    pip install -r requirements.txt

Model Download and Loading

- Download model checkpoints from Huggingface:

    # Download the 768p and 384p model checkpoints

- Load model:

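    import torch
    from pyramid_dit import PyramidDiTForVideoGeneration

    model_dtype, torch_dtype = 'bf16', torch.bfloat16
    model = PyramidDiTForVideoGeneration(
        'PATH',  # directory containing the downloaded checkpoints
        model_dtype,
        model_variant='diffusion_transformer_768p',  # or 'diffusion_transformer_384p'
    )
    model.vae.enable_tiling()               # decode the video in tiles to lower peak memory
    model.enable_sequential_cpu_offload()   # keep weights on the CPU until they are needed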
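The variant chosen when loading the model also determines which output size and guidance scale make sense at generation time. As a minimal sketch, that pairing could be kept in one small lookup table. The 768p values and the guidance scale of 7 for 384p come from the text-to-video example in this guide; the 640x384 output size for the 384p checkpoint and the names `VariantSettings` and `settings_for_variant` are assumptions made for illustration and are not part of the Pyramid Flow API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantSettings:
    """Generation settings paired with one checkpoint variant (illustrative only)."""
    width: int
    height: int
    guidance_scale: float


# Hypothetical lookup table; not part of the Pyramid Flow code base.
VARIANT_SETTINGS = {
    # Values used by the 768p text-to-video example in this guide.
    "diffusion_transformer_768p": VariantSettings(1280, 768, 9.0),
    # 640x384 is an assumption; guidance scale 7 follows the 384p note
    # in the text-to-video example.
    "diffusion_transformer_384p": VariantSettings(640, 384, 7.0),
}


def settings_for_variant(model_variant: str) -> VariantSettings:
    """Return the settings for a variant, failing loudly on an unknown name."""
    try:
        return VARIANT_SETTINGS[model_variant]
    except KeyError:
        raise ValueError(f"unknown model_variant: {model_variant!r}") from None


settings = settings_for_variant("diffusion_transformer_384p")
print(settings.width, settings.height, settings.guidance_scale)
```

Keeping these values next to the variant name avoids requesting a 768p output from a 384p checkpoint by accident.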

Text to Video Generation

- Set the generation parameters and generate the video:

    from diffusers.utils import export_to_video

    frames = model.generate(
        prompt="your text prompt",
        num_inference_steps=[20, 20, 20],
        video_num_inference_steps=[10, 10, 10],
        height=768,
        width=1280,
        temp=16,  # temp=16: 5s, temp=31: 10s
        guidance_scale=9.0,  # use 7 for 384p
        video_guidance_scale=5.0,
        output_type="pil",
        save_memory=True,
    )

    export_to_video(frames, "./text_to_video_sample.mp4", fps=24)
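The `temp` argument counts latent frames rather than seconds. The sketch below shows how the two durations given in the code comment (`temp=16` for about 5 s, `temp=31` for about 10 s at 24 FPS) could arise, assuming the first latent frame maps to one video frame and each further latent frame expands to 8 video frames. That 8x temporal factor and the helper names are assumptions for illustration; this guide does not state them.

```python
def frames_for_temp(temp: int, temporal_factor: int = 8) -> int:
    """Number of video frames produced for `temp` latent frames.

    Assumes the first latent maps to a single frame and every further
    latent expands to `temporal_factor` frames (an assumption, not a
    value taken from this guide).
    """
    if temp < 1:
        raise ValueError("temp must be at least 1")
    return (temp - 1) * temporal_factor + 1


def duration_seconds(temp: int, fps: int = 24) -> float:
    """Approximate clip length in seconds at the given frame rate."""
    return frames_for_temp(temp) / fps


for temp in (16, 31):
    print(temp, frames_for_temp(temp), round(duration_seconds(temp), 2))
```

Under this assumption, `temp=16` corresponds to 121 frames and `temp=31` to 241 frames, which matches the 5-second and 10-second figures at 24 FPS.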

Image to Video Generation

- Set the generation parameters and generate the video:

    prompt = "FPV flying over the Great Wall"

    # `image` is the input image (for example a PIL image) and must be
    # loaded beforehand; it is not defined in this snippet.
    with torch.no_grad(), torch.cuda.amp.autocast(enabled=True, dtype=torch_dtype):
        frames = model.generate_i2v(
            prompt=prompt,
            input_image=image,
            num_inference_steps=[10, 10, 10],
            temp=16,
            video_guidance_scale=4.0,
            output_type="pil",
            save_memory=True,
        )

    export_to_video(frames, "./image_to_video_sample.mp4", fps=24)

Multi-GPU Inference

- Inference using multiple GPUs:

    # Run the inference script on 2 or 4 GPUs
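Sequence parallelism, mentioned in the feature list, means that each GPU handles only a slice of the token sequence, which is why the memory needed per GPU goes down. The toy sketch below illustrates only that partitioning idea in plain Python. `split_sequence` is a hypothetical helper and does not reflect how the Pyramid Flow scripts actually shard their inputs.

```python
from typing import List


def split_sequence(num_tokens: int, world_size: int) -> List[range]:
    """Partition token indices 0..num_tokens-1 into contiguous per-GPU slices.

    Slice sizes differ by at most one token, so the load stays balanced.
    """
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    if world_size < 1:
        raise ValueError("world_size must be at least 1")
    base, extra = divmod(num_tokens, world_size)
    slices = []
    start = 0
    for rank in range(world_size):
        # The first `extra` ranks take one additional token each.
        size = base + (1 if rank < extra else 0)
        slices.append(range(start, start + size))
        start += size
    return slices


for world_size in (2, 4):
    shards = split_sequence(num_tokens=1000, world_size=world_size)
    print(world_size, [len(shard) for shard in shards])
```

Going from 2 to 4 GPUs halves the number of tokens each device holds, which is the memory saving the multi-GPU scripts aim for.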

Niu One-Click Deployment Edition

The password for extracting the startup file is provided at the download address. If the model download is frequently interrupted after startup, consult the official documentation and download the model files separately (about 30 GB).