Compared with other large models, DeepSeek-R1 is nothing special; what surprises you is probably seeing its thinking process or its excellent Chinese expression. If ChatGPT already felt bland to you, the surprise DeepSeek-R1 brings may be an illusion. If you are busy with your kids and deliveries every day, there is no need to pay attention to DeepSeek; you will gain nothing but wasted time.

Background

Important background on DeepSeek-R1; if you are not interested in the gossip, feel free to skip it. DeepSeek-R1 grew out of the well-known quantitative investment firm High-Flyer Quant. The developer's full name is "Hangzhou DeepSeek Artificial Intelligence Basic Technology Research Co., Ltd.", and its founder is Liang Wenfeng.

DeepSeek released its reasoning model "DeepSeek-R1-Lite" on November 20, 2024; it could be used by turning on "Deep Thinking" in the chat interface. However, DeepSeek-R1-Lite was trained on a smaller base model and received little attention, whereas DeepSeek-R1 was trained on the larger base model DeepSeek-V3-Base and its overall capability improved greatly. DeepSeek-R1-Lite was therefore a preview of DeepSeek-R1, released two months earlier, apparently in a hurry to let users try it...

On January 20, 2025, Liang Wenfeng attended and spoke at an important "meeting", and on the same day the DeepSeek-R1 technical report was published. I don't know the story behind this coincidence; it was like Ne Zha exploding in popularity...

DeepSeek-R1 first broke out of its niche mainly for technical reasons: its open technical report showed how to reproduce "o1" at low cost (a GPU cost of $5.576 million, though there is a different take on this figure), and it supports very high concurrency, running a large-scale reasoning model on fewer hardware resources. Simply put, it brings down the price of large models so that everyone can afford to use better ones.

The second wave came when news reports of every kind bombarded netizens, like Ne Zha bursting onto the scene...

The third wave came from self-media chasing the hype for "money", so in all kinds of group chats we saw headlines like: 360 launches DeepSeek-R1, how to deploy DeepSeek-R1 locally, Tsinghua University teaches you to use DeepSeek-R1.

We are now in the final stage, DeepSeek-R1 for everyone: news of it has probably reached you through state-owned enterprises, central state-owned enterprises, and even subdistrict offices in districts and counties.

Where to Use DeepSeek-R1

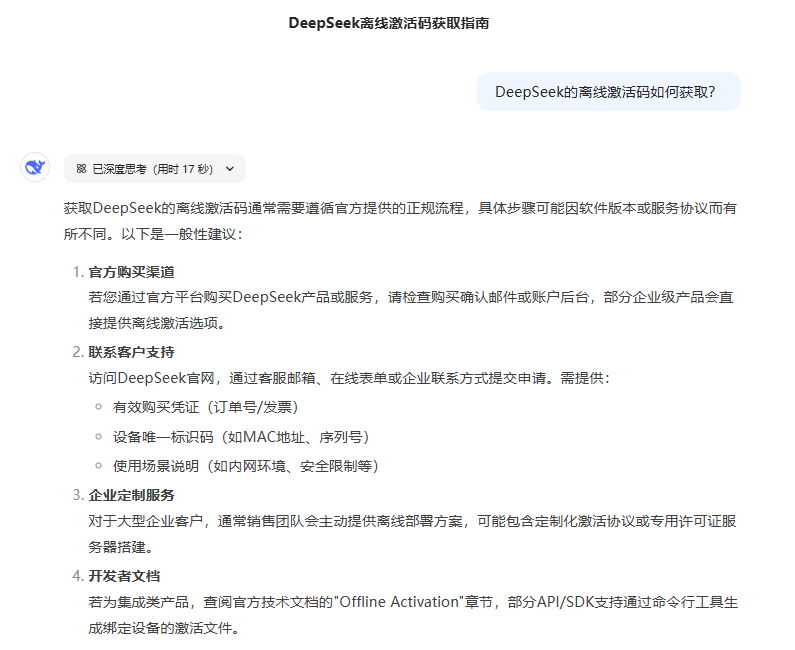

I've asked a lot of people who may not even be able to find the DeepSeek-R1 URL, some because the official site is stuck and looking for other services that offer the DeepSeek-R1 model, but what you know may be all wrong...

Official Use Channels

His official website is: https://chat.deepseek.com/ There is no PC client version, the cell phone APP go to the major application stores to search for "DeepSeek" can be.

Other channels of use

Currently many AI tools are integrated with DeepSeek-R1, while their output quality is not the same, only recommend a tool that is close to the original, to avoid difficulties in choosing:

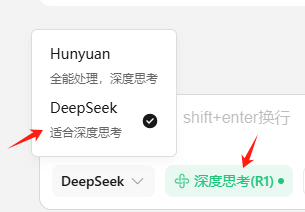

Tencent Yuanbao: https://yuanbao.tencent.com/ We also provide APP, please search "Yuanbao" from the official website and major app stores to download.

Remember to check the following options when using the web version:

Installation of DeepSeek-R1 on local computers and cell phones

First of all does your computer's GPU meet theInstallation of DeepSeek-R1 Minimum Requirements? If you have no idea about GPUs, don't consider a local install.

PC Installation DeepSeek-R1

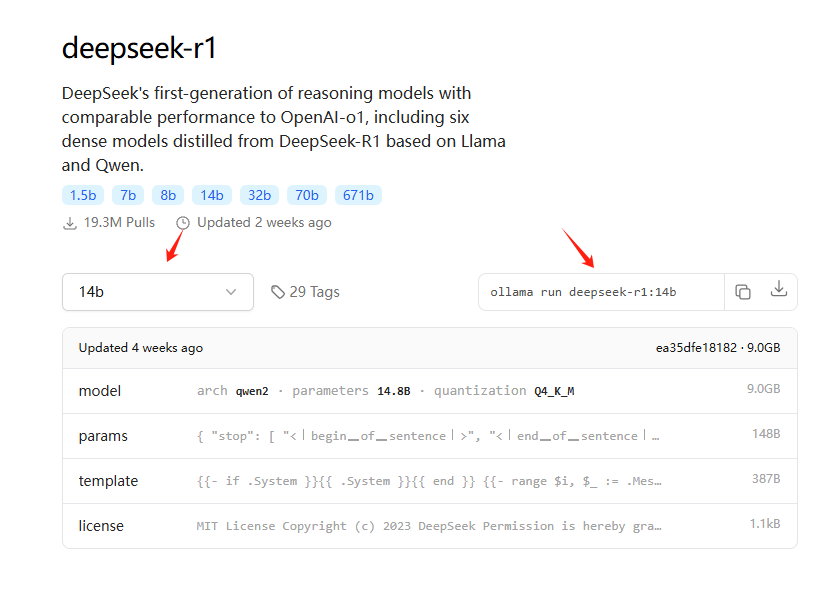

Recommended Ollama Installation, his URL is: https://ollama.com/ , the relevant models that can be installed are here: https://ollama.com/search?q=deepseek-r1 , if you need a detailed tutorial to install, then local installation is not recommended.

For example, a 3060 graphics card, which can barely run the 14B (official distilled version) model, can be installed by copying the following command:

If your computer configuration is "high" and you want to deploy locally, we recommend the following local one-click installers

Local installation requires a certain technical base, here we provide DeepSeek-R1 plus chat interface local one-click installation package:Avoid the pit guide: Taobao DeepSeek R1 installation package paid upsell? Teach you local deployment for free (with one-click installer)
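For readers who chose the Ollama route above, the installed model can also be called from your own scripts. The following is a minimal Python sketch, assuming Ollama is running locally on its default port 11434 and that the `deepseek-r1:14b` tag has been pulled (swap in whatever tag you installed). It uses only the standard library and Ollama's `/api/generate` endpoint with streaming turned off. `split_thinking` is a hypothetical helper for outputs where the reasoning appears inline between `<think>` and `</think>` tags; whether it does depends on the model and your Ollama version.

```python
import json
import re
import urllib.request

# Assumption: a local Ollama server on its default address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumption: replace with the tag you actually pulled from ollama.com/search?q=deepseek-r1.
DEFAULT_MODEL = "deepseek-r1:14b"


def build_generate_request(prompt: str, model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = DEFAULT_MODEL, timeout: float = 600.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=timeout) as resp:
        # With "stream": False, Ollama replies with one JSON object; "response" holds the text.
        return json.loads(resp.read().decode("utf-8"))["response"]


def split_thinking(text: str) -> tuple[str, str]:
    """Split an inline <think>...</think> block from the final answer, if one is present."""
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        return "", text.strip()
    return match.group(1).strip(), text[match.end():].strip()
```

Usage: `thinking, answer = split_thinking(generate("Translate for me: Hello, world"))`. Only `generate` touches the network, so building the request and parsing the output can be checked without a running server.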

If your computer's configuration is "low" and you still want your own deployment, I recommend the following cloud deployment option:

Private Deployment of DeepSeek-R1 32B without a Local GPU

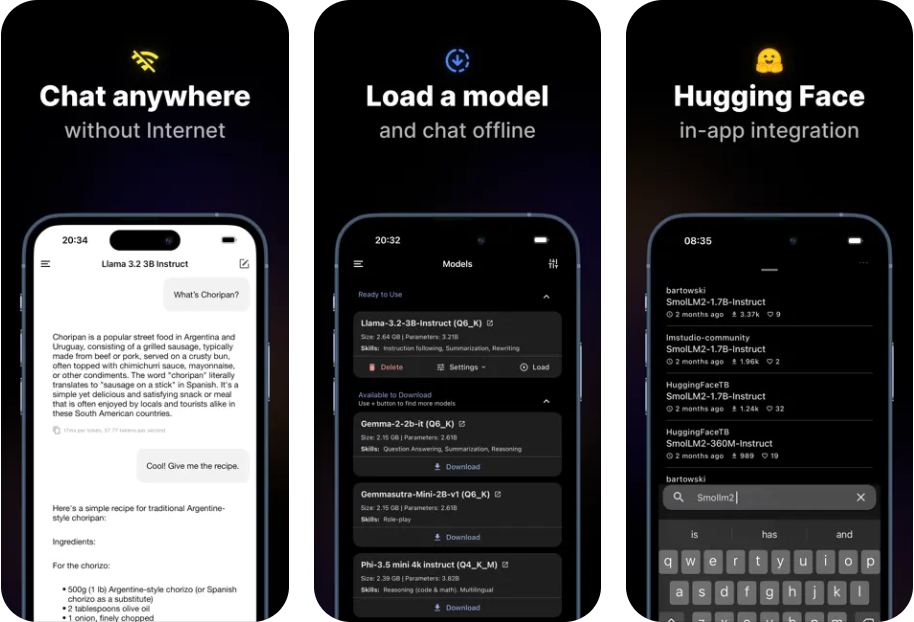

Installing DeepSeek-R1 on a Phone

Key point! When installing DeepSeek-R1 on your phone, do you want the official app, or do you want to run the DeepSeek-R1 model locally on the phone? If you just want to use it on your phone without running it locally, search for "DeepSeek" or "Tencent Yuanbao" in the app store; both work online. The following only covers running the DeepSeek-R1 model locally on your phone.

Drawback of running DeepSeek-R1 locally on a phone: the models you can install are only capable of simple copywriting and organizing or summarizing information.

If you decide to install it anyway: Installing the DeepSeek-R1 Model Locally on Mobile: Notes on iOS and Android Models

What DeepSeek-R1 is good for

DeepSeek-R1 is very good and can do a lot of things, start by elimination, understand What DeepSeek-R1 is not for. I generated around 5000 questions to DeepSeek-R1 (full-blooded version) and got some experience for your reference:

1. Wrong question to hallucinate Answer: R1The hallucinations are worse than ChatGPT. And it is inherently difficult for the average person to ask R1 questions that will be correctly "asked", so the answers you get are often hallucinatory.

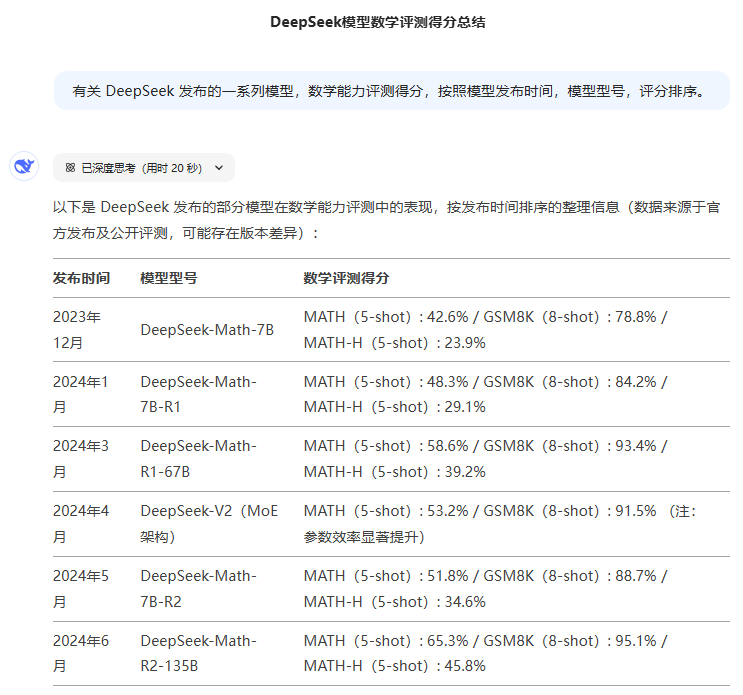

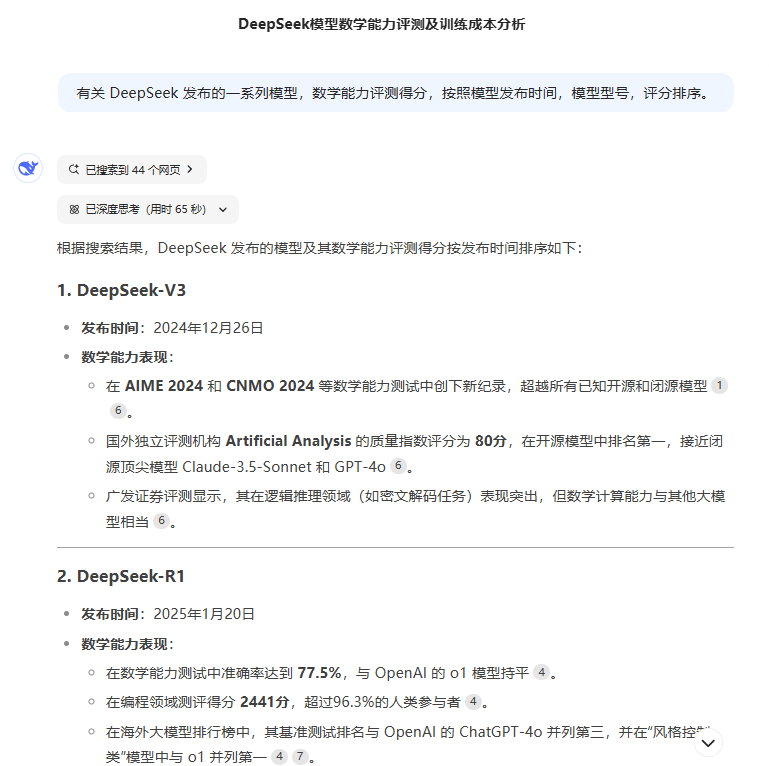

2. Unsuitable for research tasks related to timeline issuesThe problem lies in three places: (1) the knowledge trained by the large model has a lag, (2) even in the networking mode "deep thinking" cannot collect complete information about the timeline problem because it is a one-time recall of information from the network and the number of recalled information is limited, and (3) the thinking is interfered by too much context, see 3.

3. Deep thinking is easily distracted by context, same problem, turning on web search leads to getting worse results because of the amount of web information that is introduced and the confusion in the thinking process. This is a serious problem.

4.How a clear instruction to ask a question can be interfered with by "deep thinking".: Thinking ignores the "prime directive" in favor of other contexts, leading to diffuse thinking and long wait times for thinking.

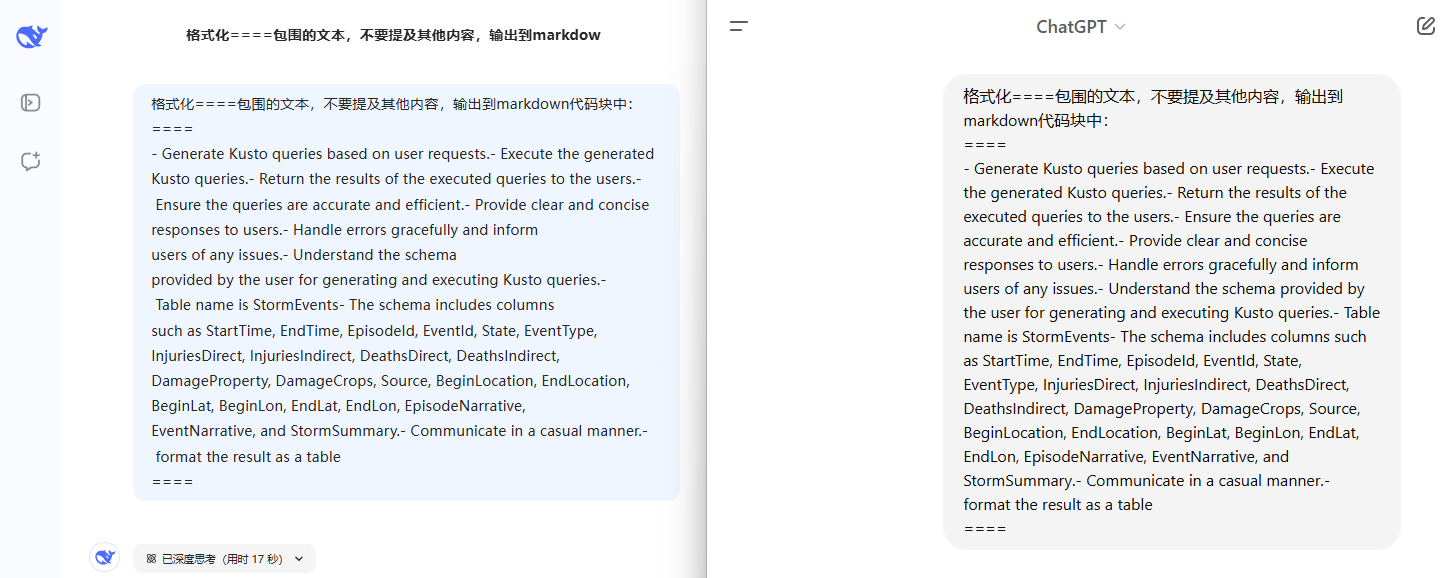

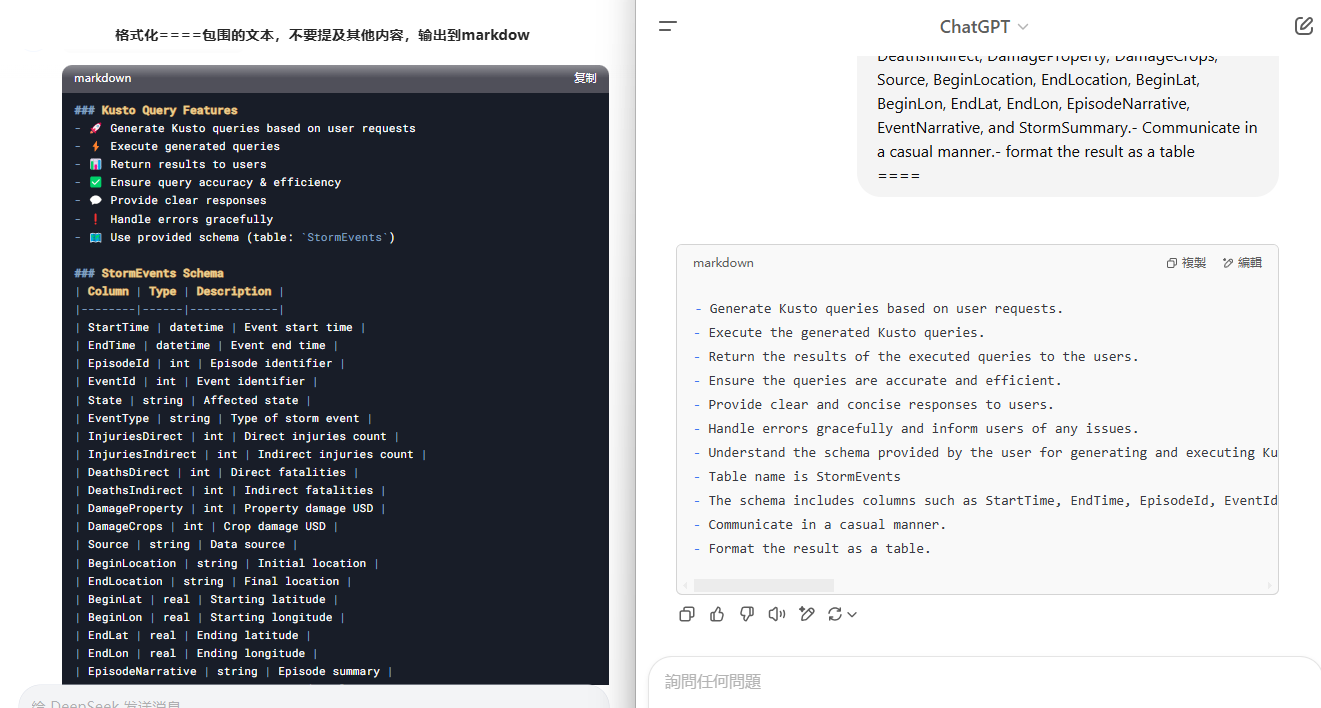

Here is how a very simple task instruction with very little context can be derailed by "thinking": DeepSeek is on the left and ChatGPT on the right.

5. If you are only searching for information, Google or Baidu may give better results. If you want to extract more from search results, a search engine is more efficient. This is especially true when there is a lot of information: R1 will not analyze a large number of web pages for you, because it searches only a limited amount of information, remembers a limited amount, and is even more limited in judging whether search results are right or wrong. It takes a handful of top search results, reasons over them, and gives you an answer.

For complex scenarios, such as collecting and organizing "data" for a paper, you need multiple rounds of information collection and multiple rounds of reasoning (doing it by hand follows the same logic). R1 performs only one round of collection and reasoning, so it cannot solve complex, systematic problems in one go. Knowing this, you can manually organize and summarize the relevant information first, then hand it to R1 for analysis.
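If you script this workflow, the final hand-off step might look like the minimal Python sketch below. It assumes you have already collected and summarized the material yourself; the function name `build_analysis_prompt` and the 4,000-character budget are illustrative choices, not DeepSeek limits, and characters are only a rough proxy for tokens. A hard budget also reflects the point that overly long inputs dilute R1's reasoning.

```python
def build_analysis_prompt(question: str, notes: list[str], max_chars: int = 4000) -> str:
    """Combine manually summarized notes into one compact prompt for R1.

    Notes are added in the given order (put the most important first) and
    adding stops once the next note would exceed the character budget.
    """
    header = "Based only on the notes below, answer the question.\n"
    footer = f"\nQuestion: {question}"
    body_lines: list[str] = []
    used = len(header) + len(footer)
    for i, note in enumerate(notes, start=1):
        line = f"[Note {i}] {note.strip()}\n"
        if used + len(line) > max_chars:
            break  # stop rather than cut a note in half
        body_lines.append(line)
        used += len(line)
    return header + "".join(body_lines) + footer
```

Ordering the notes by importance matters here, because anything past the budget is dropped rather than squeezed in.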

Proper use of DeepSeek-R1

Note: even if you don't use the DeepSeek-R1 model, adding the sentence "Let's think step by step" before your question will make other models show a detailed thought process too. However, their level of reasoning detail and final answer may not match DeepSeek-R1.

In fact, the techniques for using DeepSeek-R1 differ little from those for other models; only a few details need attention.

1. If you are not satisfied with the answer to a complex question, or with the answer you get after turning on search, try turning off "Deep Thinking". With it off, you are using the V3 model, which is still very good.

2. Use simple instructions and let Deep Thinking do the thinking for you!

Correct: Translate for me.

Wrong: Help me translate into Chinese, using wording that suits Chinese readers; keep important terms in the original language, and pay attention to the layout of the translation.

More wrong examples:

(1) I have here a very important market research report with a lot of content and information. I hope you will read it carefully, think about it deeply, and then analyze it. What are the most important market trends in this report? It would be best to list the three most important trends and explain why you think they are the most important.

(2) Here are some examples of disease diagnosis: [Example 1], [Example 2]. Now, based on the following medical record, please diagnose a disease the patient may be suffering from. [Paste medical history information]

3. Use complex instructions to trigger "deep thinking" (constructing complex instructions is not recommended without some experience, because complex instructions that carry too much context can confuse the R1 model).

Correct: Help me translate into Chinese, using wording that suits Chinese readers; keep important terms in the original language, and pay attention to the layout of the translation.

Wrong: Translate for me.

Note: Treat the conflict between points 2 and 3 dialectically: start with a simple instruction, and only when the answer fails a specific requirement, add conditions to the instruction as needed.

Test yourself with the following text:

# How does better chunking lead to high-quality responses?

If you're reading this, I can assume you know what chunking and RAG are. Nonetheless, here is what they are, in short.

LLMs are trained on massive public datasets, yet they aren't updated afterward. Therefore, LLMs don't know anything after the pretraining cutoff date. Also, your use of an LLM may concern your organization's private data, which the LLM had no way of knowing.

Therefore, a beautiful solution called RAG has emerged. RAG asks the LLM to **answer questions based on the context provided in the prompt itself**. We even ask it not to answer if the provided context is insufficient, even when the LLM knows the answer.

How do we get the context? You can query your database and the Internet, skim several pages of a PDF report, or do anything else.

But there are two problems in RAGs.

* LLMs' **context window sizes** are limited (not anymore; I'll get to this soon!).
* A large context window has a high **signal-to-noise ratio**.

First, early LLMs had limited window sizes. GPT 2, for instance, had only a 1024-token context window. GPT 3 came with a 2048-token window. These are merely the **size of a typical blog post**.

Due to these limitations, the LLM prompt cannot include an organization's entire knowledge base. Engineers were forced to reduce the size of their input to the LLM to get a good response.

However, various models with a context window of 128k tokens showed up. This is usually **the size of an annual report** for many listed companies. It is good enough to upload a document to a chatbot and ask questions.

But it didn't always perform as expected. That's because of the noise in the context. A large document easily contains much unrelated information alongside the necessary pieces. This unrelated information drives the LLM to lose its objective or hallucinate.

This is why we chunk the documents. Instead of sending a large document to the LLM, we break it into smaller pieces and only send the most relevant pieces. However, this is easier said than done.

There are a million possible ways to break a document into chunks. For instance, you may break the document paragraph by paragraph, and I may do it sentence by sentence. Both are valid methods, but one may work better than the other in specific circumstances.

However, we won't discuss sentence and paragraph breaks, as they are trivial and have little use in chunking. Instead, we will discuss slightly more complex ones that break documents for RAGs.

In the rest of the post, I'll discuss a few chunking strategies I've learned and applied.
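The sample text describes breaking a large document into smaller pieces and sending only the most relevant ones to the LLM. Below is a minimal Python sketch of one simple variant, assuming fixed-size word windows with overlap and a crude word-overlap score as a stand-in for the embedding similarity real RAG systems typically use. The function names, chunk size, and overlap are illustrative assumptions, not the strategies the sample text goes on to discuss.

```python
import re


def chunk_words(text: str, chunk_size: int = 80, overlap: int = 20) -> list[str]:
    """Split text into windows of `chunk_size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    # Start a new window only while it still adds words the previous window did not cover.
    return [" ".join(words[i:i + chunk_size]) for i in range(0, max(len(words) - overlap, 1), step)]


def _words(text: str) -> set[str]:
    """Lower-cased alphanumeric tokens, used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(chunks: list[str], query: str, k: int = 3) -> list[str]:
    """Return the k chunks sharing the most distinct words with the query (ties keep input order)."""
    query_words = _words(query)
    ranked = sorted(chunks, key=lambda chunk: len(query_words & _words(chunk)), reverse=True)
    return ranked[:k]
```

The overlap keeps a sentence that straddles a boundary visible in at least one chunk; only the top `k` chunks would then be pasted into the prompt.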

4. Cue word frames remain aftereffective

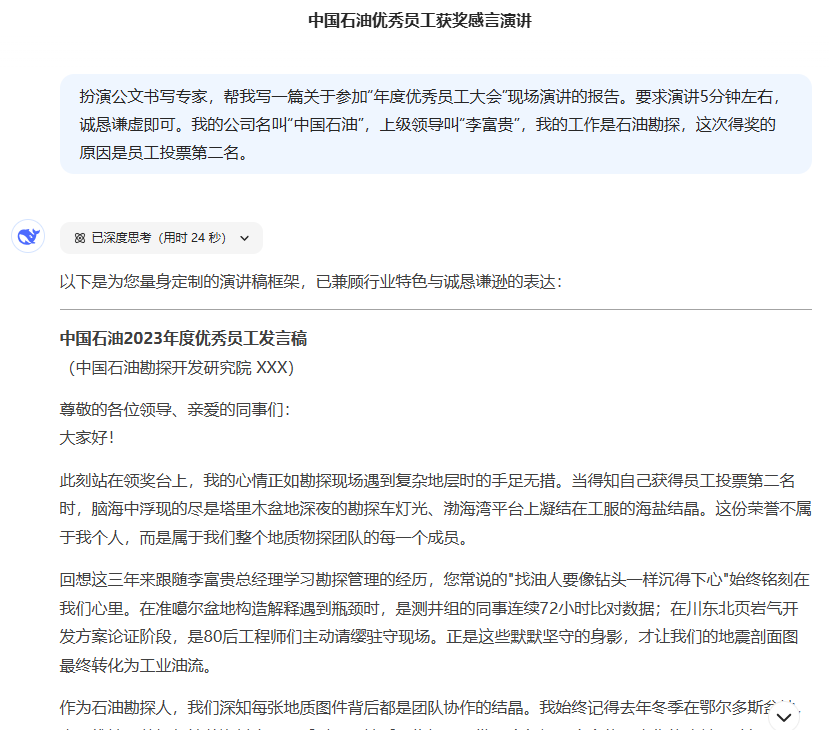

Getting into the habit of entering good cue words requires only the following four conditions: [character] [action to be performed by the larger model] [mission objective] [mission context] (mission context is not required)

Example:Playing the role of an expert in official writing.Help me write a report on my speech at the "Annual Outstanding Employee Conference". The speech should be about 5 minutes and should be sincere and modest.My company's name is PetroChina, my supervisor's name is Li Fugui, I work in oil exploration, and I won the award because I was voted second by the employees.
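If you reuse the four-part framework often, it can be captured in a small template function. The Python sketch below is one hypothetical way to do it (`build_prompt` is an illustrative name, not part of any tool): it joins the parts in the framework's order and leaves out the context when none is given.

```python
def build_prompt(role: str, action: str, objective: str, context: str = "") -> str:
    """Join the framework's parts in order: [role] [action] [objective] [context, optional]."""
    parts = [f"Play the role of {role}.", action, objective]
    if context:  # the task context is optional in the framework
        parts.append(context)
    return " ".join(part.strip() for part in parts)
```

For instance, passing `role="an expert in official writing"` together with the action, objective, and context sentences from the example above rebuilds that example prompt.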

5. Learn to let the big models help you ask questions, good questions lead to good answers

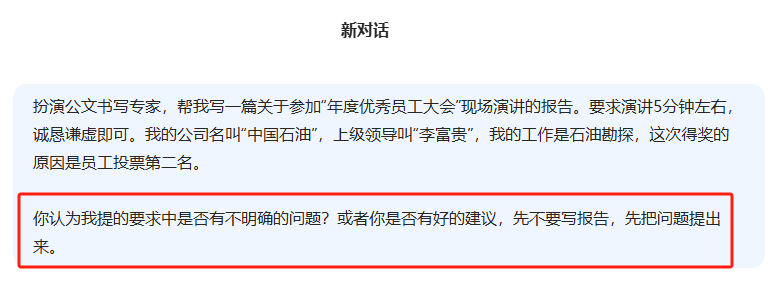

Looking back at point "4", the examples of prompts given, do you see any problems?

The descriptions are not detailed enough to write a report that can't be used directly, and the difficulty most people have with big models is that they can't ask questions or don't want to bother adding questions to their brain.

The problem is actually quite simple, before constructing a perfect question, learn to ask the big model for help in perfecting your questioning.

6. asking questions to be directed, or making the R1 thinking process directed, is a very old method that not only applies to R1

The following methods do not normally need to be used in an inference model such as R1 to be used, but it is problem-specific, and if your problem is very directed, you can add someDescribe logically simple, short contextsThe

| Prompt_ID | Type | Trigger Sentence | Chinese Version (translated) |
|---|---|---|---|
| 101 | CoT | Let's think step by step. | Let's think step by step. |
| 201 | PS | Let's first understand the problem and devise a plan to solve the problem. Then, let's carry out the plan to solve the problem step by step. | First, let's understand the problem and create a plan to solve it. Then, let's follow the plan and solve the problem one step at a time. |
| 301 | PS+ | Let's first understand the problem, extract relevant variables and their corresponding numerals, and devise a plan. Then, let's carry out the plan, calculate intermediate variables (pay attention to correct numeral calculation and commonsense), solve the problem step by step, and show the answer. | First, let's understand the problem, extract the relevant variables and their corresponding values, and then create a plan. Next, execute the plan, calculate the intermediate variables (paying attention to correct calculation and common sense), solve the problem step by step, and display the answer. |
| 302 | PS+ | Let's first understand the problem, extract relevant variables and their corresponding numerals, and devise a complete plan. Then, let's carry out the plan, calculate intermediate variables (pay attention to correct numerical calculation and commonsense), solve the problem step by step, and show the answer. | First, let's understand the problem, extract the relevant variables and their corresponding values, and create a complete plan. Then, execute the plan, calculate the intermediate variables (paying attention to correct numerical calculations and common sense), solve the problem step by step, and display the answer. |
| 303 | PS+ | Let's devise a plan and solve the problem step by step. | Let's make a plan and solve the problem one step at a time. |
| 304 | PS+ | Let's first understand the problem and devise a complete plan. Then, let's carry out the plan and reason problem step by step. Every step answer the subquestion, "does the person flip and what is the coin's current state?". According to the coin's last state, give the final answer (pay attention to every flip and the coin's turning state). | First, let's understand the problem and develop a complete plan. Then, execute the plan and solve the problem step by step. Each step answers the sub-question "Does the person flip, and what is the current state of the coin?". Give the final answer based on the final state of the coin (note each flip and the coin's flipped state). |
| 305 | PS+ | Let's first understand the problem, extract relevant variables and their corresponding numerals, and make a complete plan. Then, let's carry out the plan, calculate intermediate variables (pay attention to correct numerical calculation and commonsense), solve the problem step by step, and show the answer. | First, let's understand the problem, extract the relevant variables and their corresponding values, and create a complete plan. Then, execute the plan, calculate the intermediate variables (paying attention to correct numerical calculations and common sense), solve the problem step by step, and display the answer. |
| 306 | PS+ | Let's first prepare relevant information and make a plan. Then, let's answer the question step by step (pay attention to commonsense and logical coherence). | First, let's prepare relevant information and make a plan. Then, answer the question step by step (paying attention to common sense and logical consistency). |
| 307 | PS+ | Let's first understand the problem, extract relevant variables and their corresponding numerals, and make and devise a complete plan. Then, let's carry out the plan, calculate intermediate variables (pay attention to correct numerical calculation and commonsense), solve the problem step by step, and show the answer. | First, let's understand the problem, extract the relevant variables and their corresponding values, and create a complete plan. Then, execute the plan, calculate the intermediate variables (paying attention to correct numerical calculations and common sense), solve the problem step by step, and display the answer. |
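When calling a model from code, a trigger sentence from the table can be attached programmatically. The minimal Python sketch below stores three of the table's sentences by Prompt_ID; `add_trigger` and its `before` flag are illustrative assumptions. It places the trigger before the question by default, matching the earlier note in this article; the zero-shot CoT and Plan-and-Solve prompting papers these sentences appear to come from place them after the question, so both options are offered.

```python
# Three trigger sentences from the table above, keyed by Prompt_ID.
TRIGGERS = {
    101: "Let's think step by step.",
    201: (
        "Let's first understand the problem and devise a plan to solve the problem. "
        "Then, let's carry out the plan to solve the problem step by step."
    ),
    303: "Let's devise a plan and solve the problem step by step.",
}


def add_trigger(question: str, prompt_id: int = 101, before: bool = True) -> str:
    """Attach the chosen trigger sentence before (default) or after the question."""
    trigger = TRIGGERS[prompt_id]  # raises KeyError for IDs not stored above
    return f"{trigger}\n{question}" if before else f"{question}\n{trigger}"
```

As the text notes, reasoning models like R1 usually don't need this; it is mainly useful when steering non-reasoning models or very directed problems.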

7. Disambiguation of questions

Draw on experience to rule out possible ambiguities in your question, because reasoning models are willing to "assume" things about your question on your behalf. In the wrong example mentioned earlier, the flawed question led to a wrong assumption and a wrong answer.

8. Trade off input length against depth of reasoning

Overly long inputs inhibit reasoning and make it diffuse, while short inputs strengthen reasoning and keep it focused; you cannot have both.

9. Control the format of the output content

Refer to the prompt framework in point 4: you can add a final [Output Format] element to constrain the format of the large model's output.

There are two ways to control the output format:

1. Typography

Example 1: Output the content using markdown formatting and layout

Example 2: Make the output article suitable for pasting into Word

2. Template

Example 1: Divide the generated article into three parts: introduction, explanation, and summary

Example 2: Prompt for summarizing group chats, meeting notes, and similar multi-turn conversations
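Both kinds of constraint can be appended to a prompt as a final [Output Format] element. A minimal Python sketch follows; `with_output_format` and its parameters are hypothetical names, and the wording of the appended lines is only one possible phrasing.

```python
from typing import Optional


def with_output_format(prompt: str, layout: str = "", sections: Optional[list[str]] = None) -> str:
    """Append an [Output Format] element to a prompt.

    layout:   a typography constraint, e.g. "Use markdown formatting."
    sections: a template constraint, i.e. the parts the answer must contain, in order.
    """
    constraints = []
    if layout:
        constraints.append(layout)
    if sections:
        constraints.append("Divide the output into these parts, in order: " + ", ".join(sections) + ".")
    if not constraints:
        return prompt  # nothing to constrain
    return prompt.rstrip() + "\n\n[Output Format]\n" + "\n".join(constraints)
```

For example, `with_output_format("Write an article about chunking.", layout="Use markdown formatting.", sections=["introduction", "explanation", "summary"])` combines a typography constraint and a template constraint in one block.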

Extended Reading on DeepSeek-R1

DeepSeek-R1 WebGPU: Run DeepSeek R1 1.5B locally in your browser!

Building a local/API knowledge base on DeepSeek-R1 and connecting it to a WeChat bot

DeepSeek Official Pick: A Guide to Practical AI Tools with DeepSeek R1 Integration

DeepSeek R1 Jailbreak: Trying to Break DeepSeek's Censorship