General Introduction

II-Researcher is an open-source AI research tool developed by the Intelligent-Internet team and hosted on GitHub. It is designed for deep search and complex reasoning, and answers complex questions through intelligent web searches and multi-step analysis. Released on March 27, 2025, the project supports multiple search and crawling tools (e.g., Tavily, SerpAPI, Firecrawl) and uses LiteLLM to call different AI models. The code is free to download, deploy, and modify. This makes it suitable for researchers, developers, and anyone else who needs to process information efficiently. Its design centers on being open source, configurable, and asynchronous, which keeps the research process transparent.

Function List

- Intelligent web search: uses Tavily or SerpAPI to retrieve accurate information.

- Web page crawling and extraction: supports Firecrawl, Browser, BS4 and other tools for extracting page content.

- Multi-step reasoning: breaks a problem down and reasons toward an answer step by step.

- Configurable models: different LLMs (e.g., GPT-4o, DeepSeek) can be assigned to different tasks.

- Asynchronous operations: searches and processing run concurrently for better efficiency.

- Detailed answers: generates comprehensive reports with references.

- Customizable pipeline: users can adjust the search and reasoning process.
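The following minimal Python sketch makes the asynchronous-operations point concrete by running several searches concurrently with asyncio. It is not II-Researcher's code. `fake_search` is a hypothetical stand-in for a real Tavily or SerpAPI call and only sleeps to simulate network latency.

```python
import asyncio
import time
from typing import Dict, List


async def fake_search(query: str, latency: float = 0.2) -> Dict[str, object]:
    """Hypothetical stand-in for a search-provider call (e.g. Tavily or SerpAPI).

    It only sleeps to simulate network latency and returns a dummy result.
    """
    await asyncio.sleep(latency)
    return {"query": query, "results": [f"result for {query!r}"]}


async def search_all(queries: List[str]) -> List[Dict[str, object]]:
    """Run all queries concurrently; gather() keeps results in input order."""
    return list(await asyncio.gather(*(fake_search(q) for q in queries)))


if __name__ == "__main__":
    queries = ["AI in education: benefits", "AI in education: risks", "AI tutoring studies"]
    start = time.perf_counter()
    results = asyncio.run(search_all(queries))
    elapsed = time.perf_counter() - start
    # The simulated searches overlap, so the total is roughly one latency, not three.
    print(f"{len(results)} searches finished in {elapsed:.2f}s")
```

`asyncio.gather` overlaps the waits. Total time therefore tracks the slowest query rather than the sum of all of them, which is the efficiency gain the feature list refers to.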

Usage Guide

Installation process

To use II-Researcher, you need to install and configure the environment. The following are the specific steps:

- Clone the repository

Enter the following command in the terminal to download the code:

git clone https://github.com/Intelligent-Internet/ii-researcher.git

cd ii-researcher

- Install dependencies

The project requires Python 3.7+. Run the following command to install the dependencies:

pip install -e .

- Set environment variables

Configure the necessary API keys and parameters. Example:

export OPENAI_API_KEY="your-openai-api-key"

export TAVILY_API_KEY="your-tavily-api-key"

export SEARCH_PROVIDER="tavily"

export SCRAPER_PROVIDER="firecrawl"

Optional configuration (LLM-based compression and model selection for reasoning):

export USE_LLM_COMPRESSOR="TRUE"

export FAST_LLM="gemini-lite"

export STRATEGIC_LLM="gpt-4o"

export R_TEMPERATURE="0.2"
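Before running anything, you may want to confirm that these variables are actually set. Below is a hypothetical preflight check that is not part of II-Researcher. The variable names come from the examples above. Which ones count as required is an assumption; adapt the list to your chosen providers.

```python
import os
from typing import List, Mapping, Optional

# Required variables from the setup step above (Tavily shown; swap in your own
# provider's key, e.g. for SerpAPI, if you configure a different SEARCH_PROVIDER).
REQUIRED_VARS = ["OPENAI_API_KEY", "TAVILY_API_KEY", "SEARCH_PROVIDER", "SCRAPER_PROVIDER"]

# Optional tuning variables from the setup step above.
OPTIONAL_VARS = ["USE_LLM_COMPRESSOR", "FAST_LLM", "STRATEGIC_LLM", "R_TEMPERATURE"]


def missing_vars(names: List[str], env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the names that are unset or empty in env (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


def parse_temperature(env: Optional[Mapping[str, str]] = None,
                      default: Optional[float] = None) -> Optional[float]:
    """Return R_TEMPERATURE as a float, or default if it is unset or empty.

    A non-numeric value raises ValueError so typos surface early.
    """
    env = os.environ if env is None else env
    raw = env.get("R_TEMPERATURE", "")
    return float(raw) if raw else default


if __name__ == "__main__":
    missing = missing_vars(REQUIRED_VARS)
    print("missing required:", ", ".join(missing) if missing else "none")
    # Optional values are not secrets, so printing them is harmless.
    for name in OPTIONAL_VARS:
        print(f"{name}={os.environ.get(name, '(unset)')}")
```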

- Run the LiteLLM local model server

Install LiteLLM:

pip install litellm

Create a configuration file named litellm_config.yaml:

model_list:
  - model_name: gpt-4o
    litellm_params:
      model: gpt-4o
      api_key: ${OPENAI_API_KEY}
  - model_name: r1
    litellm_params:
      model: deepseek-reasoner
      api_key: ${OPENAI_API_KEY}
litellm_settings:
  drop_params: true

Start the server:

litellm --config litellm_config.yaml

By default, the server listens on http://localhost:4000.
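LiteLLM's proxy exposes an OpenAI-compatible HTTP API, so any client can reach the models defined in `litellm_config.yaml`. The standard-library sketch below builds such a request. The helper names are hypothetical. The sketch assumes the `/v1/chat/completions` route on the default port, and it sends no master key unless you pass one.

```python
import json
import urllib.request
from typing import Optional

LITELLM_BASE_URL = "http://localhost:4000"  # default proxy address from this guide


def build_chat_request(model: str, prompt: str, base_url: str = LITELLM_BASE_URL,
                       api_key: Optional[str] = None,
                       temperature: float = 0.2) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the LiteLLM proxy.

    `model` must match a model_name in litellm_config.yaml (e.g. "gpt-4o" or "r1").
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only needed if the proxy was started with a master key (assumption).
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask(model: str, prompt: str, **kwargs) -> str:
    """Send the request and return the first reply's text (needs a running proxy)."""
    req = build_chat_request(model, prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the proxy running, `ask("gpt-4o", "Say hello")` should return the model's reply, and passing `"r1"` instead routes the call to the DeepSeek entry. The `drop_params: true` setting in the config tells LiteLLM to drop OpenAI parameters that a given provider does not support.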

- Docker deployment (optional)

After configuring the environment variables, run:

docker compose up --build -d

Service addresses:

- Front end: http://localhost:3000

- Backend API: http://localhost:8000

- LiteLLM: http://localhost:4000
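After `docker compose up`, a TCP connection test is a quick way to see whether the three services are listening. The helper below is hypothetical and does not ship with II-Researcher. It only checks that the ports accept connections, not that the applications behind them are healthy.

```python
import socket
from typing import Dict, Tuple

# Default service addresses from the Docker deployment in this guide.
SERVICES: Dict[str, Tuple[str, int]] = {
    "frontend": ("localhost", 3000),
    "backend API": ("localhost", 8000),
    "LiteLLM": ("localhost", 4000),
}


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_services(services: Dict[str, Tuple[str, int]] = SERVICES) -> Dict[str, bool]:
    """Map each service name to whether its port currently accepts connections."""
    return {name: is_listening(host, port) for name, (host, port) in services.items()}


if __name__ == "__main__":
    for name, up in check_services().items():
        print(f"{name:12} {'up' if up else 'down'}")
```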

Main Functions

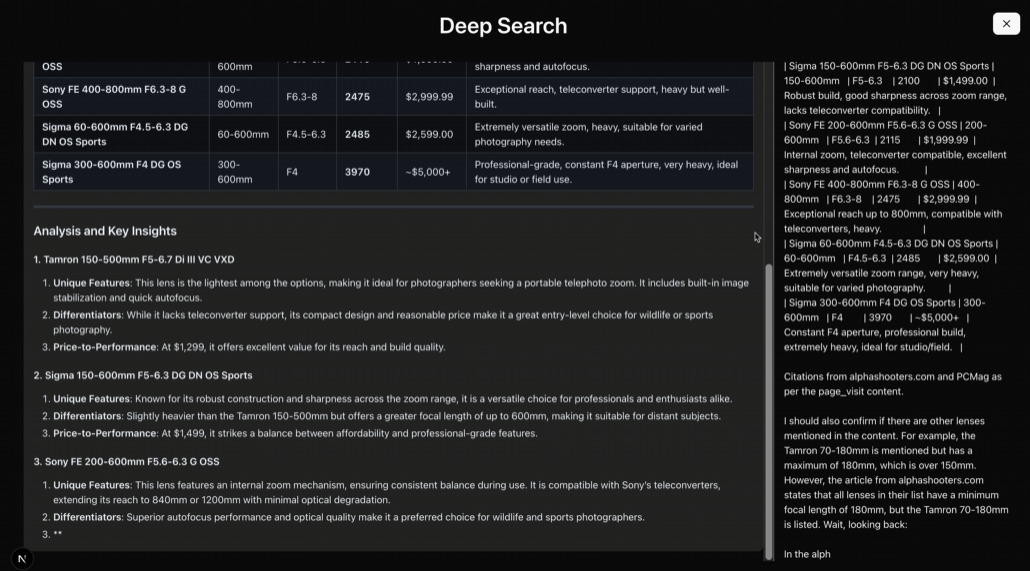

Intelligent Deep Search

- Procedure:

- Run from the command line:

python cli.py --question "How can AI improve the quality of education?"

- The system queries Tavily or SerpAPI and returns the results.

- Description: searches multiple sources, which suits complex questions.
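If you call the CLI from another Python program, building the arguments as a list avoids shell-quoting problems with spaces, question marks, or non-ASCII questions. The helper name below is hypothetical. It only uses the `--question`, `--use-reasoning`, and `--stream` flags shown in this guide.

```python
import shlex
import sys
from typing import List


def build_cli_command(question: str, use_reasoning: bool = False, stream: bool = False,
                      script: str = "cli.py") -> List[str]:
    """Build the cli.py invocation from this guide as an argument list.

    Only the flags shown in this guide (--question, --use-reasoning, --stream) are used.
    """
    cmd = [sys.executable, script, "--question", question]
    if use_reasoning:
        cmd.append("--use-reasoning")
    if stream:
        cmd.append("--stream")
    return cmd


if __name__ == "__main__":
    cmd = build_cli_command("How can AI improve the quality of education?")
    # Show a shell-safe rendering; pass `cmd` itself to subprocess.run() to execute it.
    print(" ".join(shlex.quote(part) for part in cmd))
```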

Multi-step Reasoning

- Procedure:

- Enable the reasoning model:

python cli.py --question "Pros and cons of AI in education" --use-reasoning --stream

- The system analyzes the question and outputs conclusions step by step.

- Description: handles tasks that require logical deduction.
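With `--stream`, output is produced incrementally. A hypothetical wrapper like the one below, which is not part of II-Researcher, relays a command's output line by line as it arrives. It assumes the process prints plain text lines and that you run it from the repository root.

```python
import os
import subprocess
import sys
from typing import Iterator, List

# Example command from this guide (run it from the ii-researcher directory).
REASONING_CMD = [sys.executable, "cli.py", "--question",
                 "Pros and cons of AI in education", "--use-reasoning", "--stream"]


def stream_command(cmd: List[str]) -> Iterator[str]:
    """Run cmd and yield its combined stdout/stderr line by line as it arrives.

    Raises CalledProcessError if the command exits with a non-zero status.
    """
    # PYTHONUNBUFFERED=1 asks a Python child to flush output immediately,
    # so lines show up while the process is still running.
    env = dict(os.environ, PYTHONUNBUFFERED="1")
    with subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT,
                          text=True, bufsize=1, env=env) as proc:
        for line in proc.stdout:
            yield line.rstrip("\n")
        returncode = proc.wait()
    if returncode != 0:
        raise subprocess.CalledProcessError(returncode, cmd)
```

For example, `for line in stream_command(REASONING_CMD): print(line)` should print the output as cli.py emits it.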

Web Crawling

- Procedure:

- Set SCRAPER_PROVIDER="firecrawl" and the corresponding API key.

- Run a search task; web content is crawled automatically.

- Description: supports several crawling tools so that the extracted content is as complete as possible.
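To illustrate what the extraction step does, here is a minimal standard-library sketch that turns fetched HTML into plain text. It stands in for tools like BS4 and is not how II-Researcher implements its scrapers. It simply drops `<script>` and `<style>` contents and joins the remaining text fragments.

```python
from html.parser import HTMLParser
from typing import List


class TextExtractor(HTMLParser):
    """Collect visible text fragments, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # >0 while inside a skipped element
        self.parts: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.parts.append(text)


def extract_text(html: str) -> str:
    """Return the page's visible text as a single space-separated string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)
```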

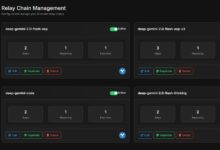

Web Interface Usage

- Procedure:

- Launch the backend API:

python api.py

- Go into the frontend folder, then install and run the front end:

npm install

npm run dev

- Visit http://localhost:3000 and enter your question.

- Description: provides a graphical interface for more intuitive operation.

Caveats

- A stable network connection is required to access the APIs.

- Hardware requirements: 8GB of RAM for basic functionality; 16GB+ and a GPU are recommended for large-model inference.

- Logs: run docker compose logs -f to check the running status.

- Timeout configuration: the default search timeout is 300 seconds, adjustable via SEARCH_PROCESS_TIMEOUT.
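The following sketch shows one way a caller could honor `SEARCH_PROCESS_TIMEOUT` with the 300-second default. The helper names are hypothetical. This does not reproduce how II-Researcher reads the variable internally; it just bounds a coroutine with `asyncio.wait_for`.

```python
import asyncio
import os
from typing import Any, Awaitable, Mapping, Optional

DEFAULT_TIMEOUT = 300.0  # seconds, the default stated in this guide


def search_timeout(env: Optional[Mapping[str, str]] = None) -> float:
    """Read SEARCH_PROCESS_TIMEOUT in seconds, falling back to 300 if unset or invalid."""
    env = os.environ if env is None else env
    raw = env.get("SEARCH_PROCESS_TIMEOUT", "")
    try:
        value = float(raw)
    except ValueError:
        return DEFAULT_TIMEOUT
    return value if value > 0 else DEFAULT_TIMEOUT


async def run_with_timeout(coro: Awaitable[Any], timeout: float) -> Optional[Any]:
    """Await coro, returning None instead of raising if it exceeds timeout."""
    try:
        return await asyncio.wait_for(coro, timeout=timeout)
    except asyncio.TimeoutError:
        return None
```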

With these steps, users can deploy II-Researcher and use it for the complete process, from search to reasoning.

Application Scenarios

- Academic research

Researchers can use it to search the literature, analyze data, and generate reports.

- Technology development

Developers can build customized search tools on top of the framework.

- Educational aids

Students can use it to organize information and answer questions.

- Market analysis

Companies can use it to gather industry information and generate trend analyses.

FAQ

- Is II-Researcher free?

Yes. It is an open-source project, and the code is free to use.

- Do I need a programming background?

Basic knowledge of Python is required, but the documentation is detailed enough for beginners to get started.

- Does it support Chinese?

Yes. With an appropriate model and search tool configured, it can handle Chinese-language tasks.

- What are the minimum hardware requirements?

8GB of RAM runs the basic functions; 16GB+ and a GPU are recommended for large tasks.