In recent years, large language model (LLM) technology has grown at an unprecedented pace and is gradually penetrating many industries. At the same time, demand for deploying LLMs locally keeps growing. Ollama, a convenient tool for deploying large models locally, is favored by developers and technology enthusiasts for its ease of use and its ability to run DeepSeek and other advanced models.

However, in the pursuit of convenience, security risks are easily overlooked. After deploying Ollama locally, some users end up exposing the Ollama service port to the Internet through improper configuration or weak security awareness, which opens the door to real security risks. This article analyzes this risk from start to finish and provides corresponding preventive measures.

1. Ollama's convenience and potential security hazards

The advent of Ollama has lowered the barrier to deploying and using large language models locally, allowing users to easily run high-performance models such as DeepSeek on their PCs or servers. This convenience has attracted a large number of users, but it has also created a problem that cannot be ignored: Ollama's API has no built-in authentication, so once the service is reachable from the Internet, anyone can use it without authorization.

Specifically, if a user exposes the Ollama service without the necessary security configuration, such as restricting the listening address or setting up firewall rules, then port 11434, Ollama's default port, may be open to the public. Any Internet user can then reach your Ollama service over the network and use your computing resources for free, and the exposure may lead to even more serious security problems.
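Whether port 11434 is actually reachable can be checked with a plain TCP connection attempt. The sketch below is a minimal Python helper for auditing a machine you administer; the function name `is_port_open` is hypothetical, and a successful connection only shows that something accepts TCP on that port, not that it is Ollama.

```python
import socket


def is_port_open(host: str, port: int = 11434, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake with host:port completes within `timeout` seconds.

    Intended for auditing machines you administer.
    """
    try:
        # create_connection resolves `host` and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out (socket.timeout is an OSError subclass)
        return False
```

For example, `is_port_open("203.0.113.10")`, with your own public address in place of the documentation placeholder `203.0.113.10`, should return `False` once the service is restricted. Run it from outside your network: a result obtained from inside the LAN may differ from what an Internet host sees.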

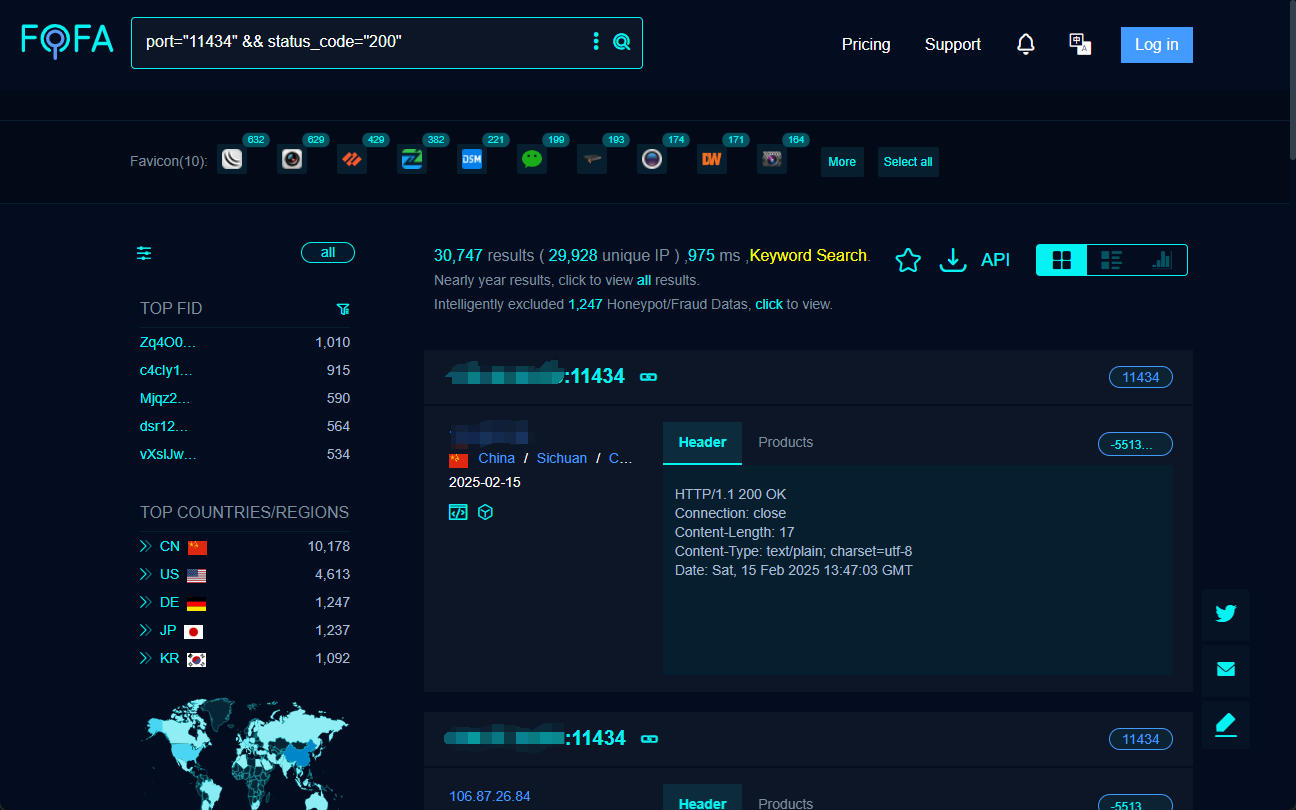

2. How to discover exposed Ollama services on the Internet: the FOFA search engine

To understand how widely Ollama services are exposed on the Internet, and the scope of the potential risk, we turn to cyberspace search engines. FOFA is one such tool: it can quickly retrieve and locate publicly exposed network services worldwide.

With a suitable FOFA query, we can find hosts that expose the Ollama service port to the Internet. For example, the following query finds targets with port 11434 open and an HTTP status code of 200:

port="11434" && status_code="200"

FOFA then lists the matching target IP addresses, which are likely running Ollama services open to the public.

3. How to connect to and freeload on an open Ollama service: ChatBox and Cherry Studio

Once an open Ollama interface has been found through FOFA, the next step is to connect and interact with it using a client tool. ChatBox and Cherry Studio are two commonly used AI clients; both support connecting to an Ollama service, calling its models, and holding conversations.

3.1 Connecting to the Open Ollama Service with ChatBox

ChatBox is known for its simplicity and ease of use, and connecting it to an open Ollama service takes only a few steps:

- Download and install the ChatBox client.

- Configure the API address: in the ChatBox settings, set the API address to the target server's IP address and port, for example `http://<target-IP>:11434/`.

- Select a model and start a conversation: once the configuration is complete, you can select a model and chat with the remote Ollama service.

3.2 Connecting to the Open Ollama Service with Cherry Studio

Cherry Studio is more comprehensive: in addition to chat, it supports advanced features such as knowledge base management. The connection steps are as follows:

- Download and install the Cherry Studio client.

- Configure the Ollama interface: in Cherry Studio, go to "Settings" → "Model Services" → "Ollama" and set the API address to `http://<target-IP>:11434`.

- Add and verify a model: on the model management page, add the target model, such as "deepseek-r1:1.5b", and test the connection; once the test succeeds, the model is ready to use.

With a client such as ChatBox or Cherry Studio, anyone can easily connect to Ollama services exposed on the Internet and use other people's computing resources and models for free, without authorization.

After picking an address at random, you can also chat with it through Page Assist, a browser extension with native support for Ollama models.

4. Security risks behind the "free lunch": computing-power theft, data leakage, and legal pitfalls

Behind the seemingly free lunch lie many hidden security risks and potential legal problems:

- Computing-power theft (resource misuse). The most immediate risk is that the GPU computing power of whoever runs the Ollama service is consumed by others without compensation, wasting resources and degrading performance. Attackers may use automated scripts to scan for and connect to open Ollama services in bulk, turning the victims' GPUs into "sweatshops" for their own, possibly malicious, workloads.

- Data breach. If sensitive data is stored or processed by the Ollama service, it is at risk of leaking in an open network environment. Users' conversations and other data may travel over unencrypted channels, where they can be intercepted or stolen.

- Model theft and resale. Unencrypted model files are easy to download and copy. If an Ollama service hosts a commercially valuable industry model, that model may be stolen and sold, causing significant financial losses.

- System intrusion. The Ollama service itself may contain security vulnerabilities. If a hacker exploits one, it could lead to remote code execution, giving the attacker full control of the victim's server and turning it into part of a botnet used to launch larger-scale cyberattacks.

- Legal risks. Accessing and using other people's Ollama services without authorization, let alone using them maliciously, may violate applicable laws and regulations and expose the user to legal liability.

5. Are Chinese users safer? Dynamic IPs are not an absolute barrier

Some argue that Chinese users are relatively safe from this freeloading risk because few of them hold dedicated public IP addresses. This view is one-sided.

It is true that a higher percentage of Chinese home broadband users have dynamic IPs than in Western countries, which reduces exposure to some extent: because the address changes, it is hard for attackers to track and locate a specific user over time.

But this by no means lets Chinese users rest easy. First, there are still many Ollama servers on the Chinese Internet, including many with fixed IPs. Second, even a dynamic IP may stay unchanged for some time, and an attacker can strike during that window. More importantly, security cannot be left to luck or "natural barriers"; it must rest on proactive security measures.

6. Local Ollama Service Security Guidelines: Avoid Becoming a "Zombie" Host

To protect a locally deployed Ollama service from misuse by others, users need to take proactive and effective security measures. Below are some guidelines for novice users:

6.1 Restricting Ollama Service Listening Addresses

Make sure the Ollama service listens on 127.0.0.1 rather than 0.0.0.0. Ollama binds to 127.0.0.1:11434 by default; services usually become exposed when the listening address has been changed to 0.0.0.0 (all interfaces) to allow remote access. Listening on 127.0.0.1 forces the Ollama service to accept only requests from the local machine and rejects direct access from external networks. Ollama reads its listening address from the `OLLAMA_HOST` environment variable rather than from a configuration file. On a Linux system where Ollama runs as a systemd service, the steps are as follows:

- Edit the service: run `sudo systemctl edit ollama.service` to open an override file.

- Set the listening address: under the `[Service]` section, set `Environment="OLLAMA_HOST=127.0.0.1:11434"`, or remove any existing line that sets `OLLAMA_HOST` to `0.0.0.0`.

- Reload and restart the service: save the file, then run `sudo systemctl daemon-reload` followed by `sudo systemctl restart ollama` so that the change takes effect.

On macOS and Windows, `OLLAMA_HOST` is likewise set as an environment variable, and Ollama must be restarted afterwards.
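Ollama takes its listening address from the `OLLAMA_HOST` environment variable and falls back to `127.0.0.1:11434` when it is unset. The minimal Python sketch below, with hypothetical helper names, checks whether such a value binds to loopback only. It assumes values of the form `host`, `host:port`, or `scheme://host:port`. For a systemd service, the value that matters is the service's own environment (visible with `systemctl show ollama --property=Environment`), not the one in your shell.

```python
import ipaddress
import os
from urllib.parse import urlsplit


def bind_host(ollama_host: str) -> str:
    """Extract the host from an OLLAMA_HOST-style value such as "0.0.0.0:11434"."""
    value = ollama_host.strip()
    if "://" not in value:
        value = "http://" + value  # urlsplit only finds the host after a scheme
    # An empty host is treated as Ollama's default loopback address (assumption)
    return urlsplit(value).hostname or "127.0.0.1"


def is_loopback_only(ollama_host: str) -> bool:
    """True if the service would listen on a loopback address only."""
    host = bind_host(ollama_host)
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Some other hostname: it would need resolving before being trusted
        return False


if __name__ == "__main__":
    setting = os.environ.get("OLLAMA_HOST", "")
    if is_loopback_only(setting):
        print(f"OK: OLLAMA_HOST={setting!r} listens on loopback only")
    else:
        print(f"WARNING: OLLAMA_HOST={setting!r} may accept connections from other hosts")
```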

6.2 Configuring Firewall Rules

Firewall rules give you precise control over which IP addresses may reach the Ollama service and on which ports. For example, you can allow only IP addresses on your LAN to access port 11434 and block all requests from the public network. The following describes how to configure the firewall on Windows and on Linux:

Windows configuration steps:

- Open Windows Defender Firewall with Advanced Security: in "Control Panel", go to "System and Security", click "Windows Defender Firewall", and then select "Advanced settings".

- Create a new inbound rule: under "Inbound Rules", click "New Rule...", select the "Port" rule type, and click "Next".

- Specify the protocol and port: select "TCP", set the specific local port to 11434, and click "Next".

- Select the action: select "Allow the connection" and click "Next".

- Choose the profiles: keep the rule for the "Domain" and "Private" profiles, clear "Public", and click "Next".

- Name the rule: give it an easily recognizable name (e.g. "Ollama Service Port Restriction"), optionally add a description, and click "Finish".

- Restrict the remote addresses: open the new rule's "Properties", go to the "Scope" tab, select "These IP addresses" under "Remote IP address", and add your LAN segment (e.g. 192.168.1.0/24). Without this step the rule allows port 11434 from any address; also check that no other inbound rule allows Ollama from any address.

Linux configuration steps (using the ufw firewall as an example):

- Enable the ufw firewall: if ufw is not yet enabled, run `sudo ufw enable` in a terminal. If you are connected over SSH, run `sudo ufw allow ssh` first so you do not lock yourself out.

- Allow LAN access: run `sudo ufw allow from 192.168.1.0/24 to any port 11434`, which lets IP addresses in the 192.168.1.0/24 LAN segment access port 11434. Adjust the address range to match your actual LAN segment.

- Deny other access (optional): if your default policy allows incoming traffic, run `sudo ufw deny 11434` to block port 11434 for all other sources. ufw applies the first matching rule, so this deny rule must come after the allow rule.

- Check the firewall status: run `sudo ufw status verbose` to review the rules and confirm that the configuration has taken effect.
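Because ufw applies the first rule that matches, the order of the commands above matters. The Python sketch below, with hypothetical function names, generates those commands from a list of LAN subnets (validating each subnet first) and models the resulting first-match decision for a given client address. It does not touch the firewall; it only prints commands or evaluates addresses.

```python
import ipaddress


def ufw_commands(subnets: list[str], port: int = 11434) -> list[str]:
    """Return ufw commands that allow each LAN subnet, then deny everything else."""
    commands = []
    for subnet in subnets:
        # Strict parsing rejects typos such as "192.168.1.5/24" (host bits set)
        net = ipaddress.ip_network(subnet)
        commands.append(f"sudo ufw allow from {net} to any port {port}")
    # Appended last: ufw uses the first matching rule, so this only catches the rest
    commands.append(f"sudo ufw deny {port}")
    return commands


def is_allowed(client_ip: str, subnets: list[str]) -> bool:
    """Model the first-match decision the rules above produce for one client."""
    addr = ipaddress.ip_address(client_ip)
    for subnet in subnets:
        if addr in ipaddress.ip_network(subnet):
            return True  # an allow rule matched first
    return False  # fell through to the deny rule


if __name__ == "__main__":
    for cmd in ufw_commands(["192.168.1.0/24"]):
        print(cmd)
```

If a deny rule for port 11434 already exists, an allow rule appended after it never matches; `sudo ufw insert 1 allow from 192.168.1.0/24 to any port 11434` places the allow rule at the top instead.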

6.3 Enabling Authentication and Access Control

If you do need to offer the Ollama service externally, be sure to enable authentication to prevent unauthorized access. Because Ollama has no built-in authentication, this has to be handled by a component placed in front of it. Common authentication methods include:

- HTTP Basic Authentication: configured through a web server such as Apache or Nginx placed in front of Ollama. It is simple to set up but relatively weak (credentials are only Base64-encoded, so it should be combined with HTTPS), which makes it suitable where security requirements are modest. Once it is configured, users must provide a username and password to access the Ollama service.

- API key: add API key verification in front of the Ollama service. Each client request must include a predefined API key in a request header or request parameter; the server checks the key and processes only requests that carry a valid one. This approach is more secure than basic authentication and is easy to integrate into applications.

- OAuth 2.0 and other advanced authentication and authorization mechanisms: for scenarios with higher security requirements, consider a fuller framework such as OAuth 2.0. OAuth 2.0 defines complete authorization flows, supports multiple grant types, and enables fine-grained access control. However, configuring and integrating it is relatively complex and requires some development effort.

Choose an authentication method by weighing the Ollama service's use case, its security requirements, and the cost of implementation.
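Whatever sits in front of Ollama has to perform the credential check itself. The Python sketch below shows the server-side check for the two simpler schemes: a bearer-style API key and HTTP Basic credentials. The `Authorization: Bearer <key>` header format is a common convention assumed here, and the function names are hypothetical. `hmac.compare_digest` is used so that comparison time does not reveal how much of a guess was correct.

```python
import base64
import binascii
import hmac
from typing import Mapping


def check_api_key(headers: Mapping[str, str], expected_key: str) -> bool:
    """Accept "Authorization: Bearer <key>" only if <key> equals expected_key.

    `headers` may be http.server's case-insensitive header object or a plain dict.
    """
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    # compare_digest's running time does not depend on where the first mismatch is
    return hmac.compare_digest(token.encode(), expected_key.encode())


def check_basic_auth(headers: Mapping[str, str], user: str, password: str) -> bool:
    """Verify "Authorization: Basic <base64(user:password)>" credentials."""
    scheme, _, encoded = headers.get("Authorization", "").partition(" ")
    if scheme.lower() != "basic":
        return False
    try:
        decoded = base64.b64decode(encoded, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return False  # malformed header
    got_user, sep, got_pass = decoded.partition(":")
    # Run both comparisons before combining them, so timing does not reveal which failed
    user_ok = hmac.compare_digest(got_user.encode(), user.encode())
    pass_ok = hmac.compare_digest(got_pass.encode(), password.encode())
    return bool(sep) and user_ok and pass_ok
```

Basic credentials are only encoded, not encrypted, so either check belongs behind HTTPS.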

6.4 Using a Reverse Proxy

A reverse proxy server (such as Nginx) can act as the front end for the Ollama service, hiding its real IP address and port and providing additional security features, for example:

- Hiding the back-end server: the reverse proxy acts as the Ollama service's "facade". External users see only the proxy's IP address and cannot directly reach the server where Ollama actually runs, which improves security.

- Load balancing: if Ollama is deployed on multiple servers, the reverse proxy can distribute user requests across them, improving availability and performance.

- SSL/TLS encryption: the reverse proxy can be configured with SSL/TLS certificates to serve HTTPS and protect data in transit.

- Web application firewall (WAF): some reverse proxies integrate WAF functionality to detect and block common web attacks such as SQL injection and cross-site scripting (XSS), further hardening the Ollama service.

- Access control: reverse proxies support more flexible access control policies, for example based on IP address, user identity, or request content.
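To make the reverse-proxy idea concrete, here is a deliberately small sketch using only Python's standard library: it listens on an outward-facing port, rejects requests without a valid API key, and forwards the rest to an Ollama instance assumed to listen on `127.0.0.1:11434`. The port 8080, the `API_KEY` value, and the class and function names are illustrative assumptions. The sketch has no TLS, and because it reads each backend response in full before relaying it, streamed Ollama responses arrive all at once; a production setup would use Nginx or a similar server as described above.

```python
import hmac
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.error import HTTPError
from urllib.request import Request, urlopen

BACKEND = "http://127.0.0.1:11434"  # Ollama listening on loopback only (assumption)
API_KEY = "change-me"               # hypothetical shared secret; keep real keys out of code


class OllamaProxy(BaseHTTPRequestHandler):
    """Minimal authenticating reverse proxy (sketch: no TLS, no streaming)."""

    backend = BACKEND
    api_key = API_KEY

    def _authorized(self) -> bool:
        # Expect "Authorization: Bearer <key>"; compare in constant time
        scheme, _, token = self.headers.get("Authorization", "").partition(" ")
        return scheme.lower() == "bearer" and hmac.compare_digest(
            token.encode(), self.api_key.encode()
        )

    def _forward(self) -> None:
        if not self._authorized():
            self.send_error(401, "missing or invalid API key")
            return
        length = int(self.headers.get("Content-Length") or 0)
        body = self.rfile.read(length) if length else None
        # Only Content-Type is passed on; the client's Authorization header stays here
        req = Request(
            self.backend + self.path,
            data=body,
            method=self.command,
            headers={"Content-Type": self.headers.get("Content-Type", "application/json")},
        )
        try:
            with urlopen(req, timeout=300) as resp:
                status, ctype, payload = resp.status, resp.headers.get("Content-Type"), resp.read()
        except HTTPError as err:  # backend answered with an error status: relay it
            status, ctype, payload = err.code, err.headers.get("Content-Type"), err.read()
        except OSError:  # connection refused, timeout, etc.
            self.send_error(502, "Ollama backend unreachable")
            return
        self.send_response(status)
        self.send_header("Content-Type", ctype or "application/octet-stream")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    do_GET = _forward
    do_POST = _forward
    do_DELETE = _forward


def serve(host: str = "0.0.0.0", port: int = 8080) -> None:
    """Start the proxy; only this port should be reachable from outside."""
    ThreadingHTTPServer((host, port), OllamaProxy).serve_forever()
```

Calling `serve()` starts the proxy; clients then send `Authorization: Bearer change-me` with each request to port 8080, while port 11434 itself stays closed to the network.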

7. Conclusion: security awareness and responsibility in action

The popularity of local large-model deployment tools such as Ollama has accelerated technological innovation and adoption, but it also poses new cybersecurity challenges. "Free use" of Ollama services exposed on the Internet may seem tempting, but it carries hidden risks: such behavior may violate laws, regulations, and online ethics, and it can threaten the security of both the user and others.

For Ollama deployers, raising security awareness and taking the necessary security measures is a responsibility: it protects their own computing resources and data, and it helps keep the network environment healthy. Do not let a moment of negligence turn your server into a "zombie" supplying compute to strangers, or even into an accomplice in cyberattacks.

While enjoying the benefits of technology, every Internet user should stay vigilant, develop a sound view of network security, and work together to build a safe, trustworthy, and sustainable AI application ecosystem.

RISK ALERT, ONCE AGAIN: Check and harden your Ollama service immediately! Security is no small matter!

The popularity of the DeepSeek model and the Ollama tool epitomizes the development of AI technology. However, security is always the cornerstone of technological progress. Ollama users, please immediately review your local deployment configuration, assess the potential security risks, and take the necessary hardening measures. It is not too late to fix things.

If you have not yet installed Ollama or are considering deploying it, be sure to fully understand the security risks of deploying large-model tools locally, carefully assess your own technical skills and level of security protection, and use these tools only once their security is ensured. Remember, cybersecurity is everyone's responsibility, and prevention is the best strategy.