General Introduction

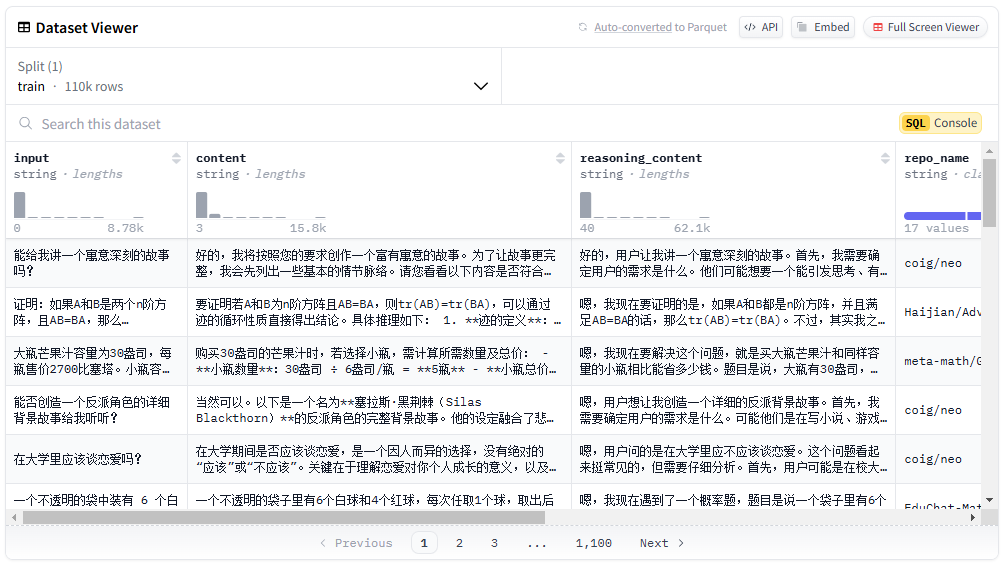

The Chinese DeepSeek-R1 distillation dataset is an open-source Chinese dataset of 110K samples, intended for machine learning and natural language processing research. Released by the Liu Cong NLP team, it covers not only math but also a large amount of general-purpose data, such as logical reasoning and content from Xiaohongshu and Zhihu. The distillation process strictly follows the details officially provided for DeepSeek-R1, with the aim of keeping the data high in quality and diverse. The dataset can be downloaded and used free of charge on the Hugging Face and ModelScope platforms.

Feature List

- Multiple data types: Includes math, logical reasoning, general-purpose data, and more.

- High-quality data: Distilled in strict accordance with the details officially provided for DeepSeek-R1.

- Free and open source: Users can download it for free on the Hugging Face and ModelScope platforms.

- Supports multiple applications: Applicable to a wide range of research areas such as machine learning and natural language processing.

- Detailed data distribution: Provides a breakdown of the data by category, with the number of samples in each.
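
A per-category breakdown like the one described in the last item can be tallied directly from the records. The sketch below is only an illustration on in-memory dictionaries: the field name `category` and the toy values are assumptions made for the example, not a confirmed schema of the dataset, and `category_distribution` is a hypothetical helper.

```python
from collections import Counter


def category_distribution(records, field="category"):
    """Return {category: (count, share)} for an iterable of dict records.

    `field` is an assumed column name; records that lack it are counted
    under "unknown".
    """
    counts = Counter(rec.get(field, "unknown") for rec in records)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: (n, n / total) for name, n in counts.most_common()}


# Toy records standing in for real samples (hypothetical values).
toy = [
    {"category": "math"},
    {"category": "math"},
    {"category": "logic"},
    {"category": "general"},
]

for name, (count, share) in category_distribution(toy).items():
    print(f"{name}: {count} ({share:.0%})")
```

The same function could be applied to a loaded split by passing it an iterable of rows, once the actual category column has been identified.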

Usage Guide

Download Process

- Visit the Hugging Face or ModelScope platform.

- Search for "Chinese-DeepSeek-R1-Distill-data-110k".

- Click on the download link and select the appropriate format for downloading.

Usage

- Load the dataset: In a Python environment, use the `datasets` library to load the dataset.

from datasets import load_dataset

dataset = load_dataset("Congliu/Chinese-DeepSeek-R1-Distill-data-110k")

- View the data: Use the `dataset` object to view basic information and samples of the dataset.

print(dataset)

print(dataset['train'][0])
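
Records in a text dataset can contain very long fields, so printing a raw sample may flood the terminal. As a minimal sketch, the hypothetical helper `preview` below truncates long string values before printing; the record shown is made up for illustration and its field names are assumptions.

```python
def preview(sample, max_chars=80):
    """Return a copy of `sample` with long string fields truncated."""
    short = {}
    for key, value in sample.items():
        if isinstance(value, str) and len(value) > max_chars:
            short[key] = value[:max_chars] + "..."
        else:
            short[key] = value
    return short


# Hypothetical record; the real field names may differ.
example = {"text": "x" * 200, "category": "math"}
print(preview(example, max_chars=10))
```

With a loaded dataset, the same helper could be called as `preview(dataset['train'][0])`.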

- Data preprocessing: Preprocess the data according to your research needs, for example tokenization and de-duplication.

from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-chinese')

# Replace 'text' with the dataset's actual text column (see dataset['train'].column_names)
tokenized_data = dataset.map(lambda x: tokenizer(x['text'], padding='max_length', truncation=True))
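
The snippet above covers tokenization only. For the de-duplication step also mentioned, one possible approach is exact-match de-duplication on normalized text, sketched below on plain dictionaries. The helper names `normalize` and `deduplicate` and the `text` field are assumptions for illustration; near-duplicate detection would need a different technique.

```python
import hashlib
import re


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash alike."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(records, field="text"):
    """Keep the first record for each distinct normalized `field` value."""
    seen = set()
    unique = []
    for rec in records:
        digest = hashlib.sha256(normalize(rec[field]).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(rec)
    return unique


# Toy records (hypothetical); the second differs from the first only in whitespace.
toy = [
    {"text": "1 + 1 等于几？"},
    {"text": "1 + 1  等于几？ "},
    {"text": "2 + 2 等于几？"},
]
print(len(deduplicate(toy)))
```

Hashing the normalized text keeps memory use modest on large datasets, since only fixed-size digests are stored rather than the full strings.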

- Model training: Train a model on the preprocessed data.

from transformers import BertForSequenceClassification, Trainer, TrainingArguments

model = BertForSequenceClassification.from_pretrained('bert-base-chinese')

training_args = TrainingArguments(output_dir='./results', num_train_epochs=3, per_device_train_batch_size=16)

# Trainer needs a 'labels' column to compute a classification loss;
# add one to tokenized_data before training
trainer = Trainer(model=model, args=training_args, train_dataset=tokenized_data['train'])

trainer.train()

Key Feature Walkthrough

- Mathematical data processing: For math-type data, add the prompt "Please reason step by step, and put the final answer in \boxed{}".

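def add_math_prompt(example):
    # Prepend the step-by-step reasoning prompt to the question text
    example['text'] = "请一步步推理,并把最终答案放到 \\boxed{}。" + example['text']
    return example

# 'category' and 'text' are placeholder column names; adjust them to the dataset's schema
math_data = dataset.filter(lambda x: x['category'] == 'math').map(add_math_prompt)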
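
Because the prompt asks for the final answer inside `\boxed{}`, that answer can later be pulled out of a response for checking. The following is a minimal sketch and not part of the dataset's own tooling; `extract_boxed` is a hypothetical helper name.

```python
def extract_boxed(text):
    """Return the contents of the last \\boxed{...} in `text`, or None.

    Braces are matched by counting, so nested groups such as
    \\boxed{\\frac{1}{2}} are handled.
    """
    marker = "\\boxed{"
    start = text.rfind(marker)
    if start == -1:
        return None
    i = start + len(marker)
    depth = 1
    chars = []
    while i < len(text):
        ch = text[i]
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars)
        chars.append(ch)
        i += 1
    return None  # braces never closed


print(extract_boxed(r"所以答案是 \boxed{\frac{1}{2}}。"))
```

Taking the last occurrence is a design choice made here on the assumption that a response states its final answer at the end; intermediate boxed values, if any, are ignored.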

- Logical reasoning data processing: Apply special handling to logical reasoning data so that the samples stay logically consistent.

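def process_logic_data(example):
    # Custom logic-processing code goes here
    return example

# 'category' is a placeholder column name; adjust it to the dataset's schema
logic_data = dataset.filter(lambda x: x['category'] == 'logic').map(process_logic_data)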
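
What the custom handling should do is left open above. As one hypothetical example of a consistency check, the sketch below tests whether a multiple-choice answer names exactly one option that is actually listed in the question. The option format (`A.`, `B、` and so on at the start of a line), the A to D range, and both helper names are assumptions made for illustration; the simple letter match would also need refining for answers that contain English words.

```python
import re


def option_letters(question):
    """Collect option labels (A-D) that start a line, e.g. 'A.' or 'A、'."""
    return set(re.findall(r"^\s*([A-D])[.、．:：)]", question, flags=re.MULTILINE))


def is_consistent(question, answer):
    """True if `answer` names exactly one option and the question lists it."""
    offered = option_letters(question)
    chosen = set(re.findall(r"[A-D]", answer))
    return len(chosen) == 1 and chosen <= offered


# Toy multiple-choice question (hypothetical).
question = "下列哪项正确？\nA. 甲\nB. 乙\nC. 丙"
print(is_consistent(question, "答案是 B"))
print(is_consistent(question, "答案是 D"))
```

A check like this could be called from inside `process_logic_data`, or used as a `filter` predicate to drop samples that fail it.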